
#News ·2025-01-09

When lifting a 2D image into 3D, the visible and invisible parts of an object can be modeled separately.

The start of 2025 has brought a new breakthrough in 3D generation.

At CES, Stability AI announced a new two-stage approach to 3D generation, Stable Point Aware 3D (SPAR3D), designed to open up new ways of 3D prototyping for game developers, product designers, and environment builders.

Whether the input is delicate artwork or an intricately textured everyday object, SPAR3D predicts precise, detailed geometry and a complete 360-degree view, including regions that are usually hidden, such as the back of the object.

SPAR3D also supports real-time editing and generates the complete structure of a 3D object from a single image in under a second.

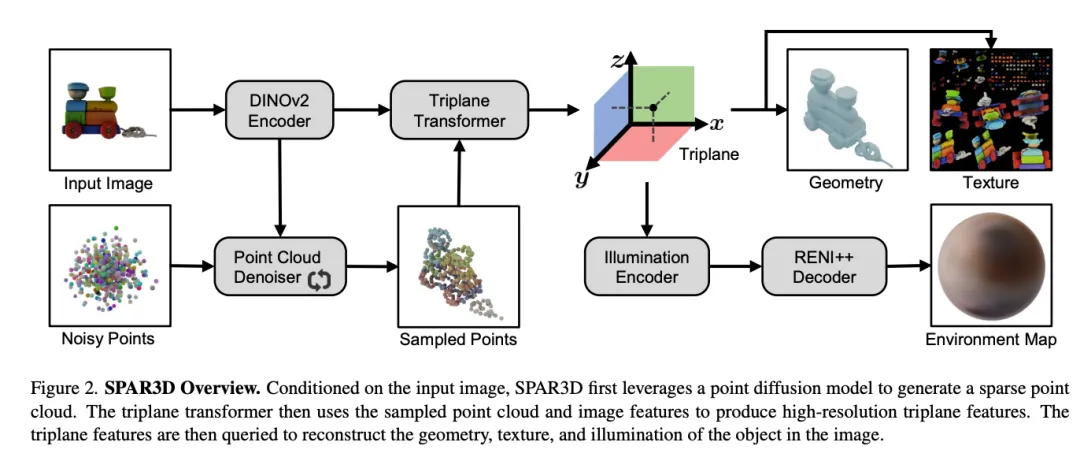

SPAR3D is a novel two-stage approach. In the first stage, a lightweight point diffusion model rapidly samples a sparse 3D point cloud; in the second stage, the sampled point cloud and the input image are used to create a highly detailed mesh.

This two-stage design enables probabilistic modeling of the ill-posed single-image 3D reconstruction task while keeping computation efficient and output fidelity high. Using a point cloud as the intermediate representation also enables interactive user editing. In evaluations on several datasets, SPAR3D outperformed state-of-the-art (SOTA) methods.

In short, SPAR3D has the following advantages:

Reconstructing a 3D object from a single image is a challenging inverse problem: the shape of the visible surface can be inferred from the light and shading in the image, but accurately predicting the occluded parts requires extensive 3D prior knowledge.

Current work in this field follows two main directions: feedforward regression and diffusion-based generation. Regression-based models are fast at inference but reconstruct occluded regions poorly. Diffusion-based methods can produce diverse 3D results through iterative sampling, but they are computationally expensive and often align poorly with the input image.
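The article does not spell out why regression struggles with occluded regions. One common explanation, offered here as an assumption rather than a claim from the SPAR3D paper, is that a model trained with a mean-squared-error loss converges to the average of all plausible answers. The toy sketch below uses a hypothetical one-dimensional "back depth" that is equally likely to be -1 or +1: gradient descent on MSE settles near 0, a shape that matches neither option, whereas a sample from the distribution lands on one of the modes.

```python
import numpy as np


def toy_back_depths(n: int, seed: int = 0) -> np.ndarray:
    """Hypothetical data: the hidden back is either recessed (-1) or bulging (+1)."""
    rng = np.random.default_rng(seed)
    modes = rng.choice([-1.0, 1.0], size=n)
    return modes + 0.05 * rng.standard_normal(n)


def fit_constant_regressor(targets: np.ndarray, lr: float = 0.1, steps: int = 200) -> float:
    """Gradient descent on mean((targets - c)^2); the minimiser is the sample mean."""
    c = 0.5
    for _ in range(steps):
        grad = -2.0 * np.mean(targets - c)  # derivative of the MSE with respect to c
        c -= lr * grad
    return float(c)


depths = toy_back_depths(10_000)
regressed = fit_constant_regressor(depths)                 # lands near 0, between the modes
sampled = float(np.random.default_rng(1).choice(depths))   # lands near -1 or +1
print(f"regression: {regressed:+.3f}, one sample: {sampled:+.3f}")
```

In this simplified picture, a diffusion model avoids the averaging by drawing samples, at the cost of running many denoising steps.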

To exploit the strength of diffusion models at learning distributions while avoiding the problems of poor output quality and low computational efficiency, the Stability AI research team designed a two-stage reconstruction system, SPAR3D. It splits 3D reconstruction into two stages, point sampling and meshing, striking a balance between efficiency and quality.

Given a single input image, the method generates a 3D mesh with PBR materials, including albedo, metalness, roughness, and surface normals.

The model consists of two stages, point sampling and meshing (Figure 2). In the point sampling stage, a point diffusion model learns the distribution of point clouds corresponding to the input image. Because the point cloud is low-resolution, iterative sampling at this stage completes quickly.
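To make the point sampling stage more concrete, here is a minimal DDPM-style reverse loop over an N x 3 point cloud. It is a sketch under heavy assumptions: the noise schedule, step count and point count are illustrative, and instead of a trained, image-conditioned network it uses a hypothetical target distribution (each point is Gaussian around a fixed template point) whose ideal denoiser has a closed form. What it does show is that every step only touches N x 3 numbers, which is why sampling a sparse cloud is cheap.

```python
import numpy as np


def toy_point_diffusion(template: np.ndarray, s: float = 0.05, T: int = 100, seed: int = 0) -> np.ndarray:
    """DDPM-style ancestral sampling of an (N, 3) point cloud.

    Toy assumption: point i is distributed as N(template[i], s^2 I), so the
    ideal denoiser E[x0 | x_t] is available in closed form. A real point
    diffusion model would learn this denoiser, conditioned on the image.
    """
    rng = np.random.default_rng(seed)
    betas = np.linspace(1e-3, 0.2, T)  # illustrative linear noise schedule
    alphas = 1.0 - betas
    abar = np.cumprod(alphas)          # cumulative product, \bar{alpha}_t

    x = rng.standard_normal(template.shape)  # x_T: (almost) pure Gaussian noise
    for t in reversed(range(T)):
        a = np.sqrt(abar[t])
        var_xt = a**2 * s**2 + (1.0 - abar[t])
        # Closed-form E[x0 | x_t] for the Gaussian toy target.
        x0_hat = template + (a * s**2 / var_xt) * (x - a * template)
        abar_prev = abar[t - 1] if t > 0 else 1.0
        # Mean of q(x_{t-1} | x_t, x0) with x0 replaced by its estimate.
        mean = (
            (betas[t] * np.sqrt(abar_prev) / (1.0 - abar[t])) * x0_hat
            + ((1.0 - abar_prev) * np.sqrt(alphas[t]) / (1.0 - abar[t])) * x
        )
        if t > 0:
            var = betas[t] * (1.0 - abar_prev) / (1.0 - abar[t])
            x = mean + np.sqrt(var) * rng.standard_normal(x.shape)
        else:
            x = mean  # the final step adds no noise
    return x


# Hypothetical "shape": 512 points on a unit sphere.
rng = np.random.default_rng(42)
template = rng.standard_normal((512, 3))
template /= np.linalg.norm(template, axis=1, keepdims=True)

points = toy_point_diffusion(template)
print(points.shape, np.linalg.norm(points, axis=1).mean())
```

Swapping the closed-form denoiser for a learned network is what would turn this toy into an actual point diffusion model; the sampling loop itself would stay the same.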

In the meshing stage, a regression model converts the sampled point cloud into a highly detailed mesh, using local image features to keep it accurately aligned with the input image.
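The article only says that the meshing stage uses local image features to stay aligned with the input. One widely used way to attach such features to 3D points, assumed here purely for illustration since SPAR3D's actual architecture may differ, is to project each point into the image with a pinhole camera and bilinearly sample a 2D feature map at that pixel. The camera parameters `f`, `cx`, `cy` and both helper functions are hypothetical.

```python
import numpy as np


def project_points(points: np.ndarray, f: float, cx: float, cy: float) -> np.ndarray:
    """Hypothetical helper: pinhole projection of (N, 3) camera-space points (z > 0) to (N, 2) pixels (u, v)."""
    x, y, z = points[:, 0], points[:, 1], points[:, 2]
    return np.stack([f * x / z + cx, f * y / z + cy], axis=1)


def bilinear_sample(feat: np.ndarray, uv: np.ndarray) -> np.ndarray:
    """Hypothetical helper: sample an (H, W, C) feature map at (N, 2) pixel coords; u indexes columns, v rows."""
    H, W, _ = feat.shape
    u = np.clip(uv[:, 0], 0, W - 1)
    v = np.clip(uv[:, 1], 0, H - 1)
    u0 = np.floor(u).astype(int)
    v0 = np.floor(v).astype(int)
    u1 = np.minimum(u0 + 1, W - 1)
    v1 = np.minimum(v0 + 1, H - 1)
    du = (u - u0)[:, None]
    dv = (v - v0)[:, None]
    # Interpolate along u on the two neighbouring rows, then along v.
    top = feat[v0, u0] * (1 - du) + feat[v0, u1] * du
    bottom = feat[v1, u0] * (1 - du) + feat[v1, u1] * du
    return top * (1 - dv) + bottom * dv


# Usage: a feature map whose value at pixel (v, u) is simply [u, v].
H, W = 8, 8
cols, rows = np.meshgrid(np.arange(W), np.arange(H))
feat = np.stack([cols, rows], axis=-1).astype(float)

pts = np.array([[0.5, 0.25, 2.0]])
uv = project_points(pts, f=4.0, cx=2.0, cy=3.0)
print(uv, bilinear_sample(feat, uv))
```

Because the sampled feature comes from the pixel the point projects to, each point receives information about the specific image region it should match.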

This design concentrates the difficult uncertainty modeling in the point sampling stage, leaving the meshing stage free to focus on generating high-quality detail. This not only improves overall results but also reduces unwanted lighting effects baked into the texture, especially on reflective surfaces.

Using a point cloud as the intermediate representation that connects the two stages is a key design choice. A point cloud is the most computationally efficient 3D representation, since all of its information describes the surface, and its lack of connectivity makes it well suited to user editing.
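A rough back-of-the-envelope check of the efficiency argument, using illustrative numbers that are not taken from the paper: in a dense 64³ voxel grid enclosing a sphere, only a few percent of the cells lie on the surface, while a point cloud stores nothing but surface samples. The resolution, radius and 512-point budget below are assumptions.

```python
import numpy as np


def surface_voxel_fraction(resolution: int = 64, radius_frac: float = 0.4) -> float:
    """Fraction of a dense voxel grid whose cells lie within half a cell of a sphere's surface."""
    centre = (resolution - 1) / 2.0
    idx = np.arange(resolution) - centre
    x, y, z = np.meshgrid(idx, idx, idx, indexing="ij")
    dist = np.sqrt(x**2 + y**2 + z**2)   # distance of each voxel centre from the grid centre
    radius = radius_frac * resolution
    on_surface = np.abs(dist - radius) < 0.5
    return float(on_surface.mean())


# Illustrative numbers, not from the paper.
grid_cells = 64 ** 3     # values stored by a dense 64^3 grid
point_values = 512 * 3   # values stored by a hypothetical 512-point cloud (x, y, z)
frac = surface_voxel_fraction()
print(f"grid: {grid_cells} values, {frac:.1%} on the surface; point cloud: {point_values} values")
```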

When a generated result does not match the user's expectations, local edits can easily be made on the low-resolution point cloud without worrying about topology. Feeding the edited point cloud into the meshing stage then produces a mesh that better meets the user's needs. This design also lets SPAR3D significantly outperform previous regression methods while maintaining high computational efficiency and fidelity to the input.
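Because a point cloud has no faces or edges, the kind of local edit described above reduces to plain array operations, with no topology to repair afterwards. The helpers below are hypothetical illustrations, not SPAR3D's editing interface; in the workflow the article describes, the edited array would then be passed to the meshing stage.

```python
import numpy as np


def delete_in_box(points: np.ndarray, lo, hi) -> np.ndarray:
    """Hypothetical helper: remove every point inside the axis-aligned box [lo, hi]."""
    inside = np.all((points >= lo) & (points <= hi), axis=1)
    return points[~inside]


def translate_in_box(points: np.ndarray, lo, hi, offset) -> np.ndarray:
    """Hypothetical helper: move the points inside [lo, hi] by `offset`; other points are untouched."""
    out = points.copy()
    inside = np.all((points >= lo) & (points <= hi), axis=1)
    out[inside] += offset
    return out


def add_points(points: np.ndarray, new_points: np.ndarray) -> np.ndarray:
    """Hypothetical helper: append new points, e.g. a roughly sketched missing part."""
    return np.concatenate([points, new_points], axis=0)


cloud = np.array([[0.0, 0.0, 0.0], [1.0, 1.0, 1.0], [2.0, 2.0, 2.0]])
trimmed = delete_in_box(cloud, lo=[0.5] * 3, hi=[1.5] * 3)
moved = translate_in_box(cloud, lo=[1.5] * 3, hi=[2.5] * 3, offset=[0.0, 0.0, 1.0])
grown = add_points(cloud, np.array([[3.0, 0.0, 0.0]]))
```

None of these operations needs to know which points were neighbours on the surface, which is exactly the property that a mesh edit would have to maintain.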

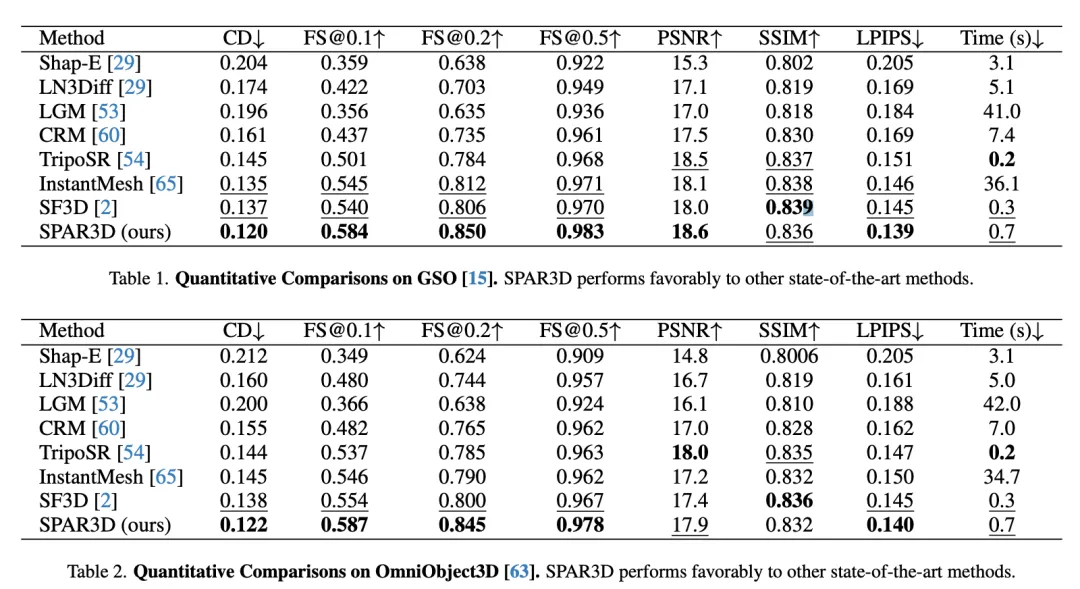

The team compared SPAR3D quantitatively against baseline methods on the GSO and OmniObject3D datasets. As shown in Tables 1 and 2, SPAR3D significantly outperformed both regression and generative baselines on most evaluation metrics on both datasets.
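For readers unfamiliar with geometry metrics on benchmarks like GSO and OmniObject3D, here is a sketch of two common ones, Chamfer distance and F-score, computed between sampled point sets. It is an assumption that these match the exact metrics, thresholds and normalisation behind Tables 1 and 2; conventions vary, and some definitions of Chamfer distance use squared distances or sums instead of means.

```python
import numpy as np


def nearest_dists(a: np.ndarray, b: np.ndarray):
    """For each point in a, the distance to its nearest neighbour in b, and vice versa."""
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)  # (len(a), len(b))
    return d.min(axis=1), d.min(axis=0)


def chamfer_distance(pred: np.ndarray, gt: np.ndarray) -> float:
    """Symmetric Chamfer distance with mean, unsquared nearest-neighbour distances (assumed convention)."""
    d_pred, d_gt = nearest_dists(pred, gt)
    return float(d_pred.mean() + d_gt.mean())


def f_score(pred: np.ndarray, gt: np.ndarray, tau: float) -> float:
    """Harmonic mean of precision and recall at distance threshold tau."""
    d_pred, d_gt = nearest_dists(pred, gt)
    precision = float((d_pred < tau).mean())  # predicted points close to the ground truth
    recall = float((d_gt < tau).mean())       # ground-truth points covered by the prediction
    if precision + recall == 0.0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


gt = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
pred = gt + np.array([0.0, 0.1, 0.0])  # every point shifted by 0.1
print(chamfer_distance(pred, gt), f_score(pred, gt, tau=0.05), f_score(pred, gt, tau=0.2))
```

Lower Chamfer distance and higher F-score indicate closer geometry; the brute-force pairwise distance matrix is fine for small sets, while real evaluations on dense samples usually use a KD-tree.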

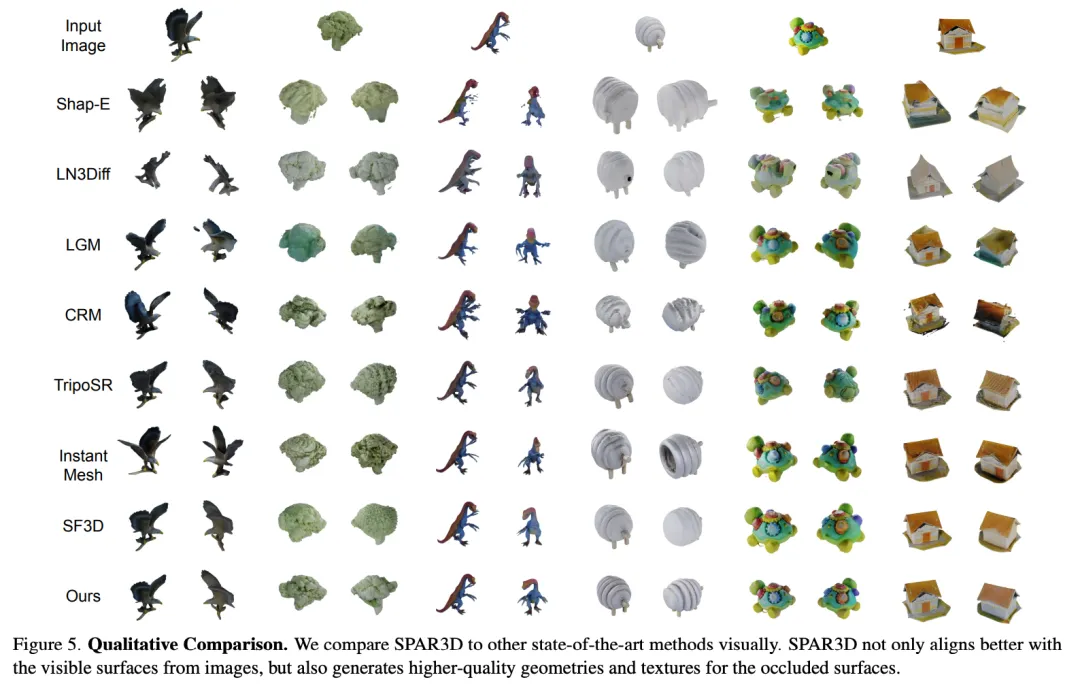

Figure 5 compares qualitative results across methods. 3D assets generated by regression-based methods (such as SF3D and TripoSR) stay consistent with the input image, but their backs are overly smooth. Multi-view diffusion methods (such as LGM and CRM) retain more detail on the back but show obvious artifacts. Purely generative methods (such as Shap-E and LN3Diff) produce clean surface outlines, but the details are often wrong.

In contrast, SPAR3D both faithfully reproduces the input image and generates plausible details for the occluded parts.

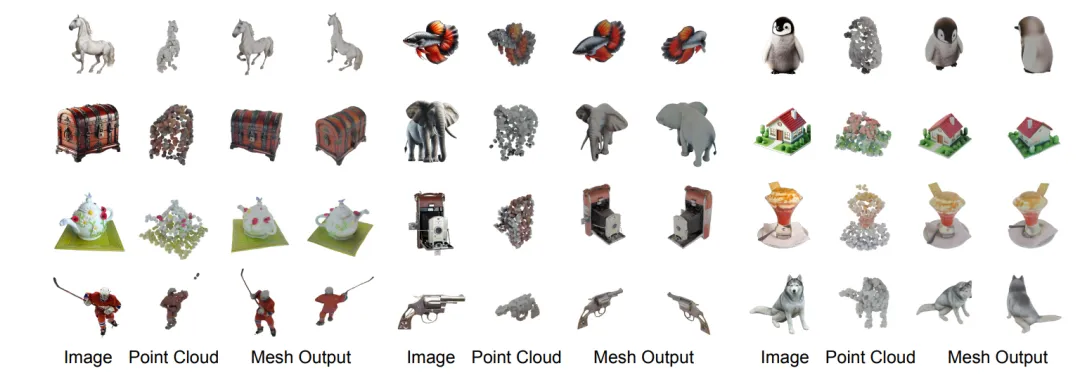

Figure 6 further demonstrates SPAR3D's strong generalization to real-world images.

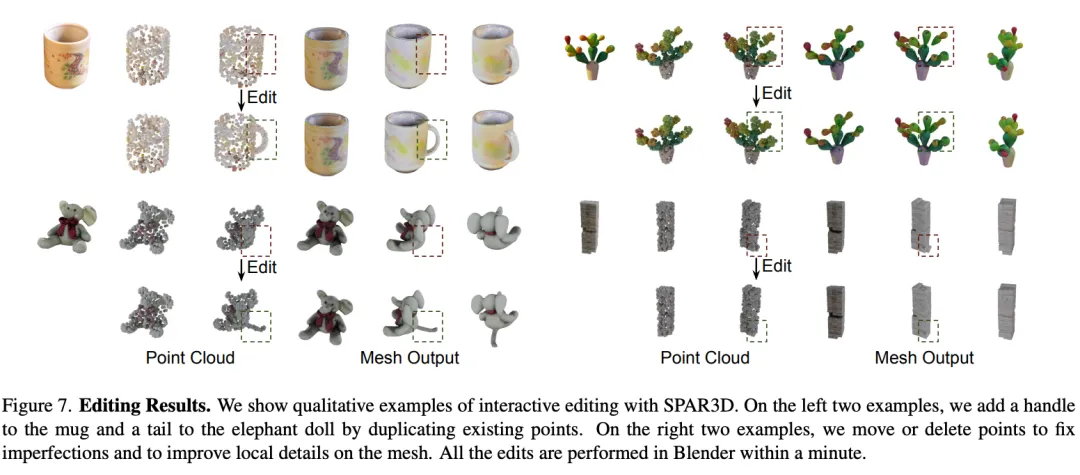

SPAR3D uses an explicit point cloud as its intermediate representation, which lets users edit the model further. By editing the point cloud, users can flexibly modify the unseen parts of the reconstructed mesh.

Figure 7 shows several editing examples, such as adding key components to a 3D model and refining details that were poorly generated.

This editing method is simple and efficient, allowing users to easily adjust the reconstruction results according to their needs.

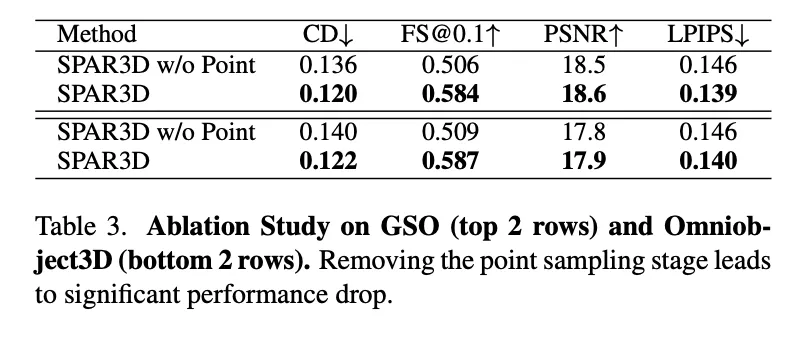

The team demonstrated the critical role of the point sampling stage through an ablation study. They reduced SPAR3D to a pure regression model, SPAR3D w/o Point (with the point sampling stage removed), and compared the two on the GSO and OmniObject3D datasets.

The results show that the full SPAR3D significantly outperforms the simplified version, confirming the effectiveness of the design.

The team also designed experiments to better understand how SPAR3D works. The core assumption behind SPAR3D's design is that the two-stage structure effectively separates the uncertain part of monocular 3D reconstruction (modeling the back) from the deterministic part (modeling the visible surface).

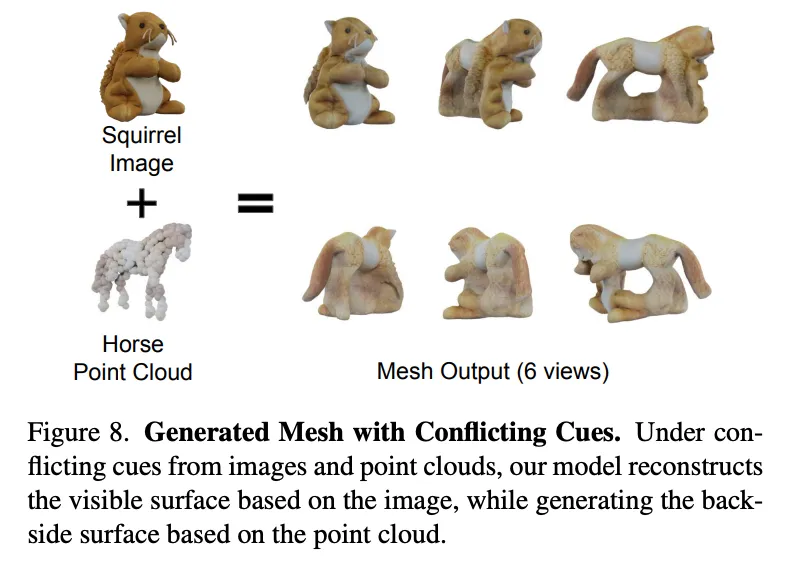

Ideally, the meshing stage should rely mainly on the input image to reconstruct the visible surface and on the point cloud to generate the back. To test this, the team ran a targeted experiment: they deliberately fed the system mismatched inputs (an image of a squirrel paired with a point cloud of a horse) to see how it would handle the conflict.

As shown in Figure 8, the result is revealing: the front of the reconstructed model matches the squirrel, while the back follows the shape of the horse point cloud. This confirms that the system does handle the reconstruction of the visible and invisible parts separately.

See the original paper for more details.

Reference links:

https://stability.ai/news/stable-point-aware-3d?utm_source=x&utm_medium=social&utm_campaign=SPAR3D

https://static1.squarespace.com/static/6213c340453c3f502425776e/t/677e3bc1b9e5df16b60ed4fe/1736326093956/SPAR3D+Research+Paper.pdf
