#News ·2025-01-09

The paper's lead authors, Chaoyun Zhang, Shilin He, Liqun Li, Si Qin, and others, are from the Data, Knowledge, and Intelligence (DKI) team and are members of the core development team for UFO, Microsoft's Windows GUI agent.

The Graphical User Interface (GUI), one of the most iconic innovations of the digital age, has greatly simplified human-computer interaction. From simple icons, buttons, and windows to complex multi-application workflows, GUIs give users an intuitive, user-friendly experience. Automating GUI control, however, has long faced technical challenges. Scripted and rule-driven approaches have helped in certain scenarios, but as modern application environments become more complex and dynamic, their limitations have become more apparent.

In recent years, the rapid development of artificial intelligence and Large Language Models (LLMs) has brought transformative opportunities to this field.

Recently, a Microsoft research team released an 80-page survey paper of more than 30,000 words, "Large Language Model-Brained GUI Agents: A Survey." The paper systematically reviews the current status, technical frameworks, challenges, and applications of GUI agents driven by large models. By combining Large Language Models (LLMs) with Visual Language Models (VLMs), GUI agents can operate graphical interfaces automatically from natural language instructions and complete complex multi-step tasks. This goes beyond the inherent bottlenecks of traditional GUI automation and moves human-computer interaction from "click + input" toward "natural language + intelligent operation".

Link: https://arxiv.org/abs/2411.18279

In the past few decades, GUI automation technology has relied on two main approaches:

These traditional methods are inadequate in the face of highly dynamic, cross-application complex tasks. For example:

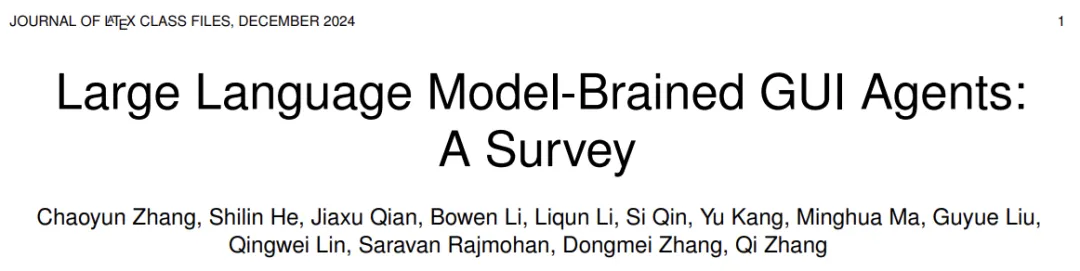

Figure 1: Conceptual demonstration of GUI agents.

Microsoft's review points out that Large Language Models (LLMs) play a key role in solving these problems, with advantages in three main areas:

Large models, represented by the GPT series, excel at natural language understanding and generation. They can automatically parse a simple, intuitive user instruction such as "Open a file, extract key information, and send it to a colleague" into a series of actionable steps. Through Chain-of-Thought reasoning and task decomposition, agents can work through highly complex processes step by step.

With the introduction of multimodal technology, Visual Language Models (VLMs) can process both text and visual information. By analyzing GUI screenshots or UI structure trees, agents can understand the layout and meaning of interface elements (buttons, menus, text boxes). This gives the agent human-like visual understanding and lets it perform precise operations in a dynamic interface, for example locating the search bar on a web page and entering keywords, or finding specific buttons in a desktop application for copy and paste operations.

Compared with traditional scripting methods, GUI agents built on large models can respond to real-time feedback and adjust their strategy dynamically. When the interface state changes or an error message appears, the agent can try a new path or approach instead of following a fixed script.
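The difference from a fixed script can be illustrated with a minimal sketch. Everything in it is hypothetical: `FakeApp`, `propose_action`, and the action strings stand in for a real interface and a real model call, neither of which the review specifies in this form.

```python
from typing import List, Optional


class FakeApp:
    """Hypothetical stand-in for a GUI whose toolbar has no 'Save' button."""

    def perform(self, action: str) -> str:
        # Return an observation string, the way a real environment
        # would return a new screen state or an error message.
        if action == "click toolbar Save":
            return "error: element not found"
        if action == "press Ctrl+S":
            return "ok: file saved"
        return "error: unknown action"


def propose_action(goal: str, failed: List[str]) -> Optional[str]:
    """Placeholder for an LLM call: return the first candidate that has not failed."""
    candidates = ["click toolbar Save", "press Ctrl+S"]
    for candidate in candidates:
        if candidate not in failed:
            return candidate
    return None


def run_with_feedback(goal: str, app: FakeApp, max_attempts: int = 3) -> List[str]:
    """Try actions until one succeeds, feeding each failure back into planning."""
    history: List[str] = []
    failed: List[str] = []
    for _ in range(max_attempts):
        action = propose_action(goal, failed)
        if action is None:
            break
        observation = app.perform(action)
        history.append(f"{action} -> {observation}")
        if observation.startswith("ok"):
            break
        failed.append(action)  # the failure becomes context for the next attempt
    return history


history = run_with_feedback("save the document", FakeApp())
for line in history:
    print(line)
```

A fixed script would stop at the first missing element. Here the failed attempt is passed back to the planner, which then picks a different route.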

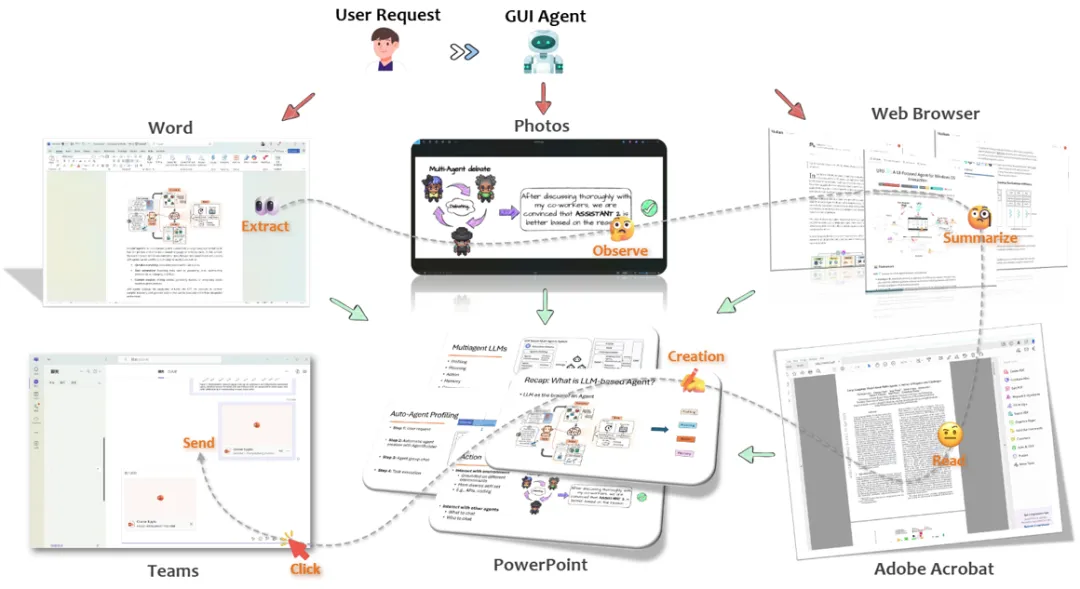

Figure 2: The development and main work of GUI agents.

With the support of large models, GUI agents bring a qualitative improvement to human-computer interaction. Users only need to give natural language instructions, and the agent completes goals that would otherwise require tedious clicks and complex operations. This lowers the user's operating and learning costs, reduces dependence on specific software APIs, and makes the system more general. As shown in Figure 2, research on GUI agents driven by large models has grown rapidly since 2023 and has gradually become a frontier research topic.

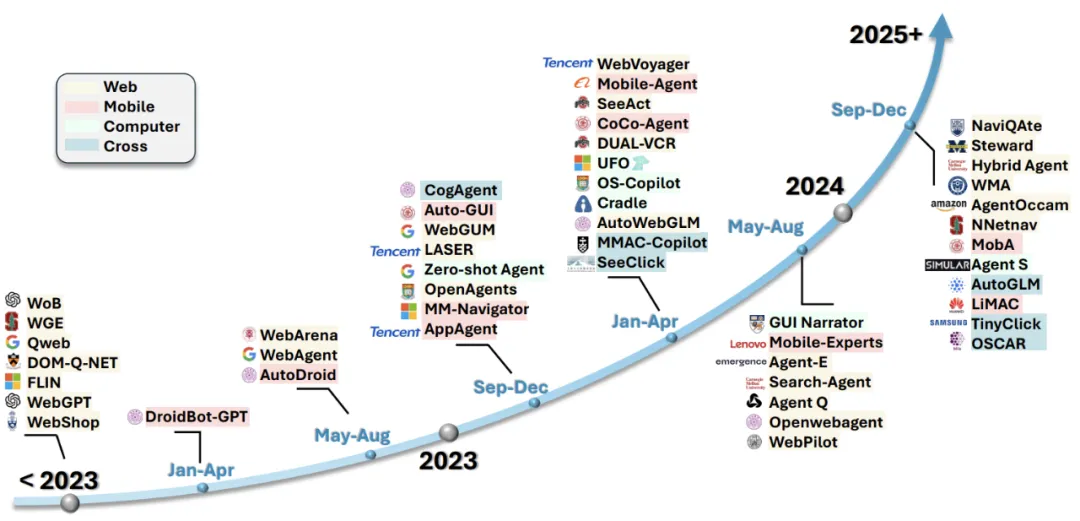

Microsoft's review states that a large model-driven GUI agent typically includes the following key components, as shown in Figure 3:

Figure 3: GUI agent basic architecture.

Input data includes GUI screenshots, UI structure trees, element attributes (type, label, location), and window-level information. Tools such as Windows UI Automation and the Android Accessibility API let agents capture this interface information effectively.
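One way to picture this input is as a small data structure. The sketch below is an assumption for illustration only: the class names `UIElement` and `GUIState`, their fields, and the sample values are hypothetical and are not taken from the review or from any platform API.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class UIElement:
    element_id: int
    control_type: str                  # e.g. "Button", "Edit", "MenuItem"
    label: str
    bounds: Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels

    def center(self) -> Tuple[int, int]:
        """Point an agent could click to hit this element."""
        left, top, right, bottom = self.bounds
        return ((left + right) // 2, (top + bottom) // 2)


@dataclass
class GUIState:
    window_title: str
    screenshot_path: str               # where the captured screenshot is stored
    elements: List[UIElement] = field(default_factory=list)

    def describe(self) -> str:
        """Flatten the state into text that can be placed in a prompt."""
        lines = [f"Window: {self.window_title}"]
        for e in self.elements:
            lines.append(f"[{e.element_id}] {e.control_type} '{e.label}' at {e.bounds}")
        return "\n".join(lines)


state = GUIState(
    window_title="Untitled - Notepad",
    screenshot_path="screen.png",      # placeholder path
    elements=[
        UIElement(1, "MenuItem", "File", (0, 0, 40, 20)),
        UIElement(2, "Edit", "Text Editor", (0, 20, 800, 600)),
    ],
)
print(state.describe())
```

In a real agent the element list would be filled from an accessibility API or a vision model rather than written by hand.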

The agent combines the user's instruction with the current GUI state to build the prompt, and uses a large language model to plan the next actions. For example, given "user command + screenshot + UI element attributes" as input, the LLM produces a clear operation step (click, input, drag, etc.) for the agent to carry out.

After the constructed prompt is passed to the LLM, the model predicts the subsequent actions and planned steps.
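As a rough sketch of prompt construction, the function below assembles the three ingredients into one text prompt. The function name `build_prompt`, the dictionary keys, and the requested JSON reply format are all assumptions made for illustration. Real agents typically attach the screenshot as an image rather than a file reference.

```python
from typing import Dict, List


def build_prompt(instruction: str, elements: List[Dict[str, str]], screenshot_ref: str) -> str:
    """Combine the user request and the current GUI state into one prompt string."""
    element_lines = [
        f"- id={e['id']} type={e['type']} label={e['label']!r}" for e in elements
    ]
    return "\n".join(
        [
            "You control a GUI. Choose the next action.",
            f"User request: {instruction}",
            f"Screenshot: {screenshot_ref}",
            "Visible elements:",
            *element_lines,
            'Reply with JSON such as {"action": "click", "target": 2}.',
        ]
    )


prompt = build_prompt(
    instruction="Search for GUI agents",
    elements=[
        {"id": "1", "type": "Edit", "label": "Search"},
        {"id": "2", "type": "Button", "label": "Go"},
    ],
    screenshot_ref="screen.png",  # placeholder reference
)
print(prompt)
```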

The agent performs actual operations, such as mouse clicks, keyboard inputs, or touches, based on high-level commands output by the LLM to complete tasks in web pages, mobile applications, or desktop systems.
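A minimal sketch of this execution step follows, assuming the model replies with a JSON command. The JSON schema, the handler names, and the `log` list are hypothetical. The handlers only record what they would do, where a real agent would call a platform automation library.

```python
import json
from typing import Callable, Dict, List

log: List[str] = []  # records actions instead of driving a real mouse or keyboard


def click(target: int) -> None:
    log.append(f"click element {target}")


def type_text(target: int, text: str) -> None:
    log.append(f"type {text!r} into element {target}")


# Map the action names the model may emit to concrete handlers.
HANDLERS: Dict[str, Callable[..., None]] = {"click": click, "input": type_text}


def execute(model_output: str) -> None:
    """Parse one JSON command from the model and dispatch it to a handler."""
    command = json.loads(model_output)
    name = command.pop("action")
    handler = HANDLERS.get(name)
    if handler is None:
        raise ValueError(f"unsupported action: {name}")
    handler(**command)


execute('{"action": "click", "target": 3}')
execute('{"action": "input", "target": 5, "text": "hello"}')
print(log)
```

Rejecting unknown action names keeps a malformed model reply from turning into an arbitrary operation.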

To cope with complex multi-step tasks, GUI agents use short-term memory (STM) and long-term memory (LTM) mechanisms to track task progress and past operations, keeping the context consistent and coherent.
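The two kinds of memory can be sketched as follows. This is one possible arrangement under stated assumptions, not the design described in the review: the class `AgentMemory`, its method names, and the choice of a bounded window for STM and a per-task dictionary for LTM are all hypothetical.

```python
from collections import deque
from typing import Deque, Dict, List


class AgentMemory:
    def __init__(self, stm_size: int = 3) -> None:
        # Short-term memory: only the most recent steps, for the next prompt.
        self.short_term: Deque[str] = deque(maxlen=stm_size)
        # Full record of the task in progress.
        self._trajectory: List[str] = []
        # Long-term memory: completed trajectories keyed by task description.
        self.long_term: Dict[str, List[str]] = {}

    def record_step(self, step: str) -> None:
        self.short_term.append(step)
        self._trajectory.append(step)

    def finish_task(self, task: str) -> None:
        """Move the finished trajectory into long-term memory and reset."""
        self.long_term[task] = self._trajectory
        self._trajectory = []
        self.short_term.clear()

    def recall(self, task: str) -> List[str]:
        return self.long_term.get(task, [])


memory = AgentMemory(stm_size=2)
for step in ["open Settings", "click Display", "drag font slider"]:
    memory.record_step(step)
recent = list(memory.short_term)  # only the last two steps remain
memory.finish_task("change font size")
print(recent, memory.recall("change font size"))
```

Bounding the short-term window keeps the prompt small, while the long-term store lets the agent look up how a similar task was completed before.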

In addition, more advanced techniques (such as GUI parsing based on computer vision, multi-agent collaboration, self-reflection and evolution, and reinforcement learning) are also being explored. These techniques are expected to make GUI agents increasingly capable. Microsoft's review covers these frontier directions in detail.

Microsoft's review systematically summarizes how the field has evolved, covering framework design, data acquisition, model optimization, and performance measurement, and gives researchers and developers a complete framework for guidance.

By application scenario and platform characteristics, current GUI agent frameworks can be divided into:

The proposal and verification of these frameworks make it possible to deploy GUI agents in a range of application scenarios and lay a solid foundation for cross-platform automation.

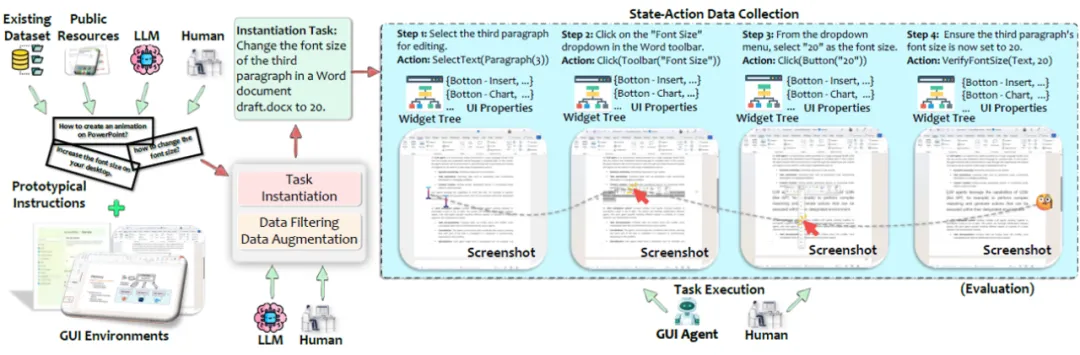

Efficient and accurate GUI operation depends on rich, realistic data, including:

Figure 4: GUI agent data acquisition process.

These data provide the basis for training and testing, as well as a solid foundation for standardized assessments in the field. Figure 4 shows the data acquisition process for training GUI agents.

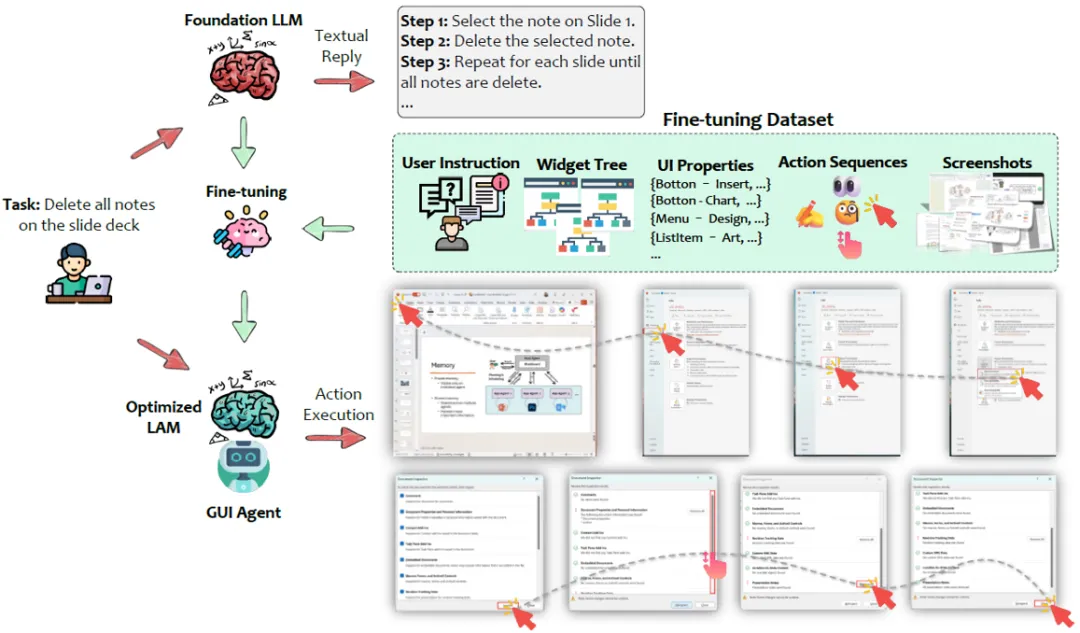

The review proposes the concept of "Large Action Model" (LAM), which is fine-tuned on the basis of LLM to solve the core challenges in GUI agent task execution:

Figure 5: Fine-tuning the "Large Action Model" for GUI agents.

As shown in Figure 5, by fine-tuning LAM in a real environment, the agent can significantly improve its execution efficiency and adaptability.

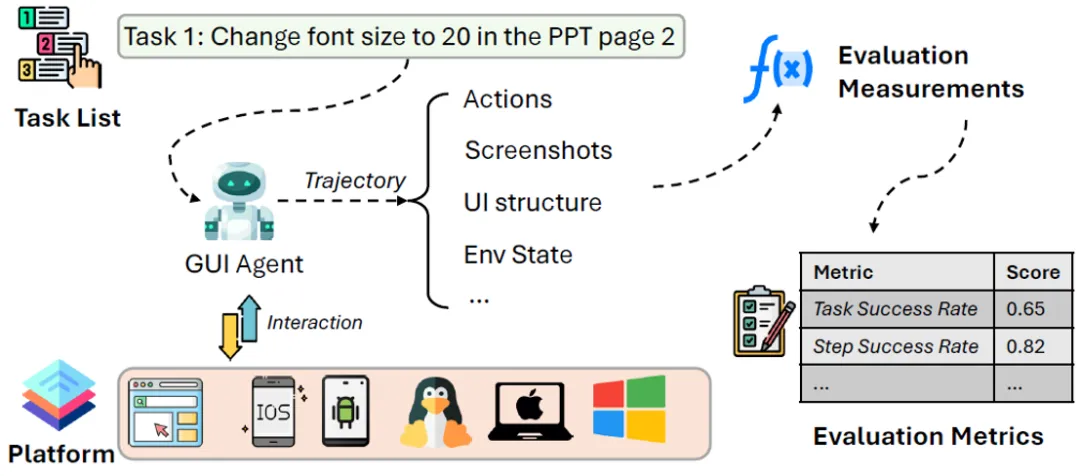

Figure 6: Evaluation process of GUI agent.

Evaluation is an important means of measuring an agent's ability. As shown in Figure 6, the agent's capabilities can be assessed by observing the trajectories and logs recorded while it executes tasks. The main evaluation indicators include:

A series of standardized benchmarks have appeared in the field, which provide an objective basis and platform for the performance evaluation and comparison of GUI agents.

Traditional software GUI testing often relies on lengthy scripts and repeated manual verification, which is time-consuming and prone to missing key scenarios. GUI agents built on Large Language Models (LLMs) are now changing how testing is done. Rather than replaying fixed scripts, these agents can generate test cases directly from natural language descriptions, explore interface elements on their own, and respond dynamically to changes in the user interface. Research tools such as GPTDroid, VisionDroid, and AUITestAgent suggest that agents can efficiently catch potential defects and trace complex interaction paths without deep involvement from professional software engineers, enabling an automated testing process that runs from input generation through bug reproduction to functional verification.

Take font size testing as an example. Given a single sentence, "Please test the process of changing font size in system settings", the GUI agent can navigate the interface autonomously, simulate the user's clicks and swipes through the options, and confirm on the resulting screen whether the font adjustment took effect. Natural language driven testing of this kind improves test coverage and efficiency and makes it easy for non-technical staff to take part in quality assurance. The result is faster product iteration, with development and quality assurance teams freed to focus on innovation and optimization.

Virtual assistants are no longer limited to simple alarm settings or weather queries. When LLM-enabled GUI agents become the "brains" of virtual assistants, the result is a true "generalist": one that can automate tasks ranging from document editing and data table analysis to complex mobile operations, guided by natural language commands, across desktops, phones, web browsers, and enterprise applications.

These agents not only respond to commands but also understand user requirements from context and adapt flexibly to different interface elements. For example, they can find hidden feature entries in mobile apps on their own and show new users how to take screenshots, or, in an office environment, collate data from several platforms and generate reports automatically. Users no longer need to memorize cumbersome steps or struggle with complex processes. They simply describe the goal in natural language, and the agent parses the context, locates the interface components, and carries out the instruction. Through continuous learning and optimization, these assistants can also come to understand the user better over time, improving productivity and satisfaction.

In summary, the GUI agent is no longer just a "tool" in real applications, but more like a 24/7 "digital assistant" and "quality expert". In testing, agents help ensure software quality while significantly reducing labor and time costs. In daily and business operations, they act as cross-platform, multi-purpose helpers that let users interact with the digital world in a more intuitive and human way. As the technology continues to iterate, these agents are expected to keep expanding their range of applications and to support digital transformation across industries.

Despite the promise of GUI agents, Microsoft's review also makes clear where the current challenges lie:

Looking to the future, as large language models and multimodal technologies continue to evolve, GUI agents will be deployed in more areas, bringing profound changes to productivity and workflows.

The rise of large models opens up a whole new space for GUI automation. When GUI agents no longer depend solely on rigid scripts and rules, but use natural language and visual understanding to make decisions and perform actions, human-computer interaction changes qualitatively. This not only simplifies user operations but also provides strong support for application scenarios such as intelligent assistants and automated testing.

As the technology continues to iterate and the ecosystem matures, GUI agents are expected to become a key tool in daily work and life, making complex operations more intelligent and efficient and ultimately leading human-computer interaction into a new era of intelligence.
