
# News · 2025-01-08

The large-model inference engine is the engine that runs generative language models. It is the hub that receives users' prompts and generates responses, and the transformer that puts heterogeneous hardware to work, converting electrical energy into human knowledge.

The basic workflow of a large-model inference engine can be summarized as follows: receive concurrent requests, each carrying an input prompt and sampling parameters; tokenize the prompts and assemble them into a batch for the engine; dispatch the GPU to run forward inference; and post-process the results, returning them to the user as text.
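To make these stages concrete, here is a minimal single-threaded sketch. The toy vocabulary, the whitespace tokenizer and the `forward` rule are hypothetical stand-ins (a real engine uses a subword tokenizer and a GPU model); only the order of the stages follows the text.

```python
# Hypothetical toy vocabulary; a real engine uses a subword tokenizer.
VOCAB = ["<eos>", "hello", "world", "how", "are", "you"]
TOKEN_ID = {w: i for i, w in enumerate(VOCAB)}


def tokenize(text: str) -> list[int]:
    """Text -> token ids (whitespace split over the toy vocabulary)."""
    return [TOKEN_ID[w] for w in text.split()]


def detokenize(ids: list[int]) -> str:
    """Token ids -> text."""
    return " ".join(VOCAB[i] for i in ids)


def forward(batch: list[list[int]]) -> list[int]:
    """Stand-in for GPU forward inference: one next-token id per sequence.
    Toy rule (an assumption): next id = (last id + 1) mod vocabulary size."""
    return [(seq[-1] + 1) % len(VOCAB) for seq in batch]


def serve(requests: list[tuple[str, int]]) -> list[str]:
    """requests: (prompt, max_tokens) pairs received concurrently."""
    # 1. Tokenize every prompt and assemble the batch.
    batch = [tokenize(prompt) for prompt, _ in requests]
    outputs: list[list[int]] = [[] for _ in requests]
    for _ in range(max(n for _, n in requests)):
        # 2. Dispatch one forward pass over the whole batch.
        next_ids = forward(batch)
        for i, tok in enumerate(next_ids):
            if len(outputs[i]) < requests[i][1]:  # respect max_tokens
                outputs[i].append(tok)
            batch[i].append(tok)
    # 3. Post-process: detokenize and return text to each user.
    return [detokenize(out) for out in outputs]


print(serve([("hello world", 3), ("how", 2)]))  # ['how are you', 'are you']
```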

Because the Prefill stage and the Decode stage do different work, engines are usually measured from the user's perspective with two SLOs (Service Level Objectives): TTFT (Time To First Token) and TPOT (Time Per Output Token).
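Assuming the common definitions (TTFT is the time from request arrival to the first output token, which is dominated by Prefill; TPOT is the average gap between subsequent output tokens, produced by Decode), both metrics can be computed from per-token timestamps. The function name and its inputs are illustrative, not part of any engine's API.

```python
def ttft_and_tpot(arrival: float, token_times: list[float]) -> tuple[float, float]:
    """arrival: time the request was received, in seconds.
    token_times: wall-clock time at which each output token was emitted, in order."""
    if not token_times:
        raise ValueError("no tokens were generated")
    ttft = token_times[0] - arrival  # Prefill stage plus the first token
    if len(token_times) == 1:
        return ttft, 0.0  # no inter-token gap to average
    # TPOT: mean gap between consecutive output tokens (Decode stage)
    tpot = (token_times[-1] - token_times[0]) / (len(token_times) - 1)
    return ttft, tpot


ttft, tpot = ttft_and_tpot(0.0, [0.20, 0.25, 0.30, 0.35])
print(f"TTFT={ttft:.2f}s TPOT={tpot:.2f}s")  # TTFT=0.20s TPOT=0.05s
```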

These SLOs alone, however, cannot fully capture how well the engine uses its resources. As with other systems built on heterogeneous hardware, throughput is used for that purpose, and the common indicator is the peak output rate.

TPS (Tokens Per Second) is the maximum number of tokens the system can generate in one second when it is fully loaded and using all available resources. The higher this indicator, the more efficiently the hardware is used and the more users the system can support.
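A small worked sketch relates TPS to TPOT. It assumes that, at full load, every decode step emits exactly one token per request in the batch and that each step lasts one TPOT, so TPS ≈ batch size / TPOT. The batch size and TPOT values below are hypothetical.

```python
def tps_measured(tokens_per_request: list[int], wall_seconds: float) -> float:
    """Measured TPS: all output tokens generated during a fully loaded run."""
    return sum(tokens_per_request) / wall_seconds


def tps_steady_state(batch_size: int, tpot_seconds: float) -> float:
    """Idealized TPS: each step yields one token per request in the batch
    and takes one TPOT, so TPS = batch_size / TPOT."""
    return batch_size / tpot_seconds


# Hypothetical numbers: 256 concurrent requests at a 50 ms TPOT.
print(tps_steady_state(256, 0.050))  # about 5120 tokens/s
# Any growth in TPOT at the same batch size lowers TPS proportionally.
print(tps_steady_state(256, 0.100))  # about 2560 tokens/s
```

The second call shows why a longer TPOT directly reduces the output rate: the batch size is unchanged, but each step takes twice as long.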

There are many popular inference engines today, including vLLM, SGLang, LMDeploy and TRT-LLM. Among them, vLLM was the first engine in the industry to solve the problems posed by the variable-length nature of large-model sequences, and it is also the most widely used and most actively developed inference engine on the market.

vLLM owes its popularity to its original and efficient memory management, high throughput, ease of use and extension, support for many modes, rapid support for new features, and an active community. However, vLLM does not push the handling of its complex scheduling logic to the limit: it introduces a large amount of CPU work, which lengthens TPOT. A longer TPOT degrades the user experience, lowers the output rate, and wastes GPU resources.

Unlike small-model inference, where the smallest unit of work is a batch, the smallest unit of large-model inference is the step. This follows from the autoregressive nature of large models: each new token depends on the tokens generated before it.

Each step generates one token for every request in the batch. When a request generates a terminator, it finishes early and is removed from the next step's batch, and the engine dynamically reassigns the freed resources to requests waiting in the queue. The user-visible metric TPOT is therefore the execution time of one step.
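The step-level behaviour described above (one token per request per step, early exit on a terminator, freed capacity refilled from the queue) can be sketched as follows. This is a simplified illustration, not vLLM's scheduler: `EOS`, `run_steps` and the `toy_decode` rule are hypothetical, and capacity is modelled only as a maximum batch size.

```python
from collections import deque

EOS = 0  # hypothetical terminator token id


def run_steps(waiting: deque, max_batch: int, decode_step) -> list[list[str]]:
    """Step-level batching. Each request is a dict with 'id', 'len', 'out'.
    decode_step(request) -> next token id (stand-in for the GPU forward pass).
    Returns, for every step, the ids of the requests in that step's batch."""
    running: list[dict] = []
    log: list[list[str]] = []
    while waiting or running:
        # Before each step, give freed capacity to queued requests.
        while waiting and len(running) < max_batch:
            running.append(waiting.popleft())
        log.append([r["id"] for r in running])
        # One step: every request in the batch receives exactly one new token.
        for r in running:
            r["out"].append(decode_step(r))
        # Requests that emitted the terminator leave the batch early.
        running = [r for r in running if r["out"][-1] != EOS]
    return log


def toy_decode(r: dict) -> int:
    # Hypothetical rule: emit EOS once the request reaches its target length.
    return EOS if len(r["out"]) + 1 >= r["len"] else 1


reqs = deque({"id": n, "len": k, "out": []} for n, k in [("A", 2), ("B", 1), ("C", 2)])
print(run_steps(reqs, max_batch=2, decode_step=toy_decode))
# [['A', 'B'], ['A', 'C'], ['C']]
```

Request B finishes after one step, so C takes its place in step 2 instead of waiting for the whole batch to drain.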

The execution of each step can be divided into two parts: forward inference and the token interval (the CPU-side work between two consecutive forward passes).

The ultimate goal of optimizing an inference engine is therefore to maximize the throughput of forward inference while compressing the token interval as far as possible, and so raise the peak output rate.

In vLLM's implementation, however, these two goals naturally conflict. High forward-inference throughput (i.e., full use of the GPU's compute) requires putting as many requests into the batch as is reasonable. But more requests also lengthen the token interval, which not only increases TPOT but also leaves the GPU stalled and idle. In the worst case (e.g., a batch of 256), the token interval is almost as long as the forward inference itself, and GPU utilization is only 50-60%.
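The arithmetic behind the 50-60% figure can be sketched under a simple assumption: the token interval runs serially after each forward pass and the GPU is idle during it. Then TPOT = t_forward + t_interval and utilization = t_forward / TPOT. The millisecond values below are hypothetical.

```python
def step_breakdown(t_forward_ms: float, t_interval_ms: float) -> tuple[float, float]:
    """Returns (TPOT in ms, GPU utilization) for one decode step, assuming the
    CPU-side token interval is not overlapped with forward inference."""
    tpot = t_forward_ms + t_interval_ms
    utilization = t_forward_ms / tpot
    return tpot, utilization


# Hypothetical: the interval is as long as the forward pass -> 50% utilization.
print(step_breakdown(30.0, 30.0))  # (60.0, 0.5)
# Shrinking the interval shortens TPOT and raises utilization.
print(step_breakdown(30.0, 20.0))  # TPOT 50 ms, utilization about 0.6
```

Under this model, utilization only reaches 100% when the interval is either eliminated or fully hidden behind the forward pass.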

To raise the peak output rate while keeping GPU utilization high, optimizing the token interval has therefore become the key to faster inference.

Baidu Baige's AI acceleration kit AIAK, built on vLLM, has worked continuously on optimizing TPOT and has consistently stayed technically ahead of the community version of the same period.

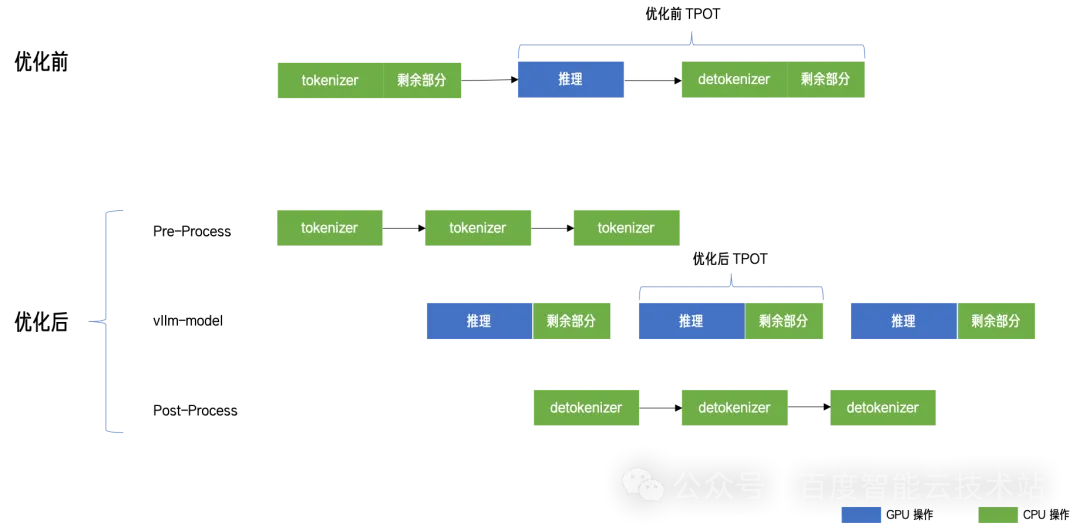

The goal of this scheme is to shorten the token interval as much as possible by taking the time spent in the detokenizer out of TPOT.

We found that tokenization and detokenization (converting between strings and token IDs), performed when processing input requests and generating responses, are logically completely independent of GPU inference.

We therefore used the NVIDIA Triton Inference Server to split tokenize/detokenize out of the inference flow and deploy it as a separate Triton model. With Triton's ensemble mechanism, the serial process becomes a three-stage (three-process) pipeline in which tokenize/detokenize overlaps with GPU inference, effectively shortening the token interval. Although this optimization removes only part of the CPU work in the token interval, it still yields a gain of nearly 10%.
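The three-stage idea can be sketched with queues between stages, so that while one item is being inferred the next can be tokenized and the previous one detokenized. This is not the Triton ensemble configuration: the sketch uses Python threads instead of separate processes to stay self-contained, works at request granularity, and `run_pipeline`, `stage` and `STOP` are hypothetical names.

```python
import queue
import threading

STOP = object()  # sentinel that shuts each stage down in order


def stage(fn, inbox: queue.Queue, outbox: queue.Queue) -> None:
    """Generic pipeline stage: apply fn to each item and hand it downstream."""
    while True:
        item = inbox.get()
        if item is STOP:
            outbox.put(STOP)  # propagate shutdown to the next stage
            return
        outbox.put(fn(item))


def run_pipeline(prompts, tokenize, infer, detokenize) -> list:
    q_in, q_tok, q_gen, q_out = (queue.Queue() for _ in range(4))
    workers = [
        threading.Thread(target=stage, args=(tokenize, q_in, q_tok)),   # stage 1
        threading.Thread(target=stage, args=(infer, q_tok, q_gen)),     # stage 2
        threading.Thread(target=stage, args=(detokenize, q_gen, q_out)),  # stage 3
    ]
    for w in workers:
        w.start()
    for p in prompts:
        q_in.put(p)
    q_in.put(STOP)
    results = []
    while (item := q_out.get()) is not STOP:
        results.append(item)  # FIFO queues keep the original order
    for w in workers:
        w.join()
    return results


print(run_pipeline(["a b", "c"], str.split,
                   lambda toks: [t.upper() for t in toks], " ".join))
# ['A B', 'C']
```

In CPython, threads only overlap usefully when the middle stage releases the GIL (as GPU calls typically do); separate processes, as in the Triton deployment described above, avoid that limitation.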


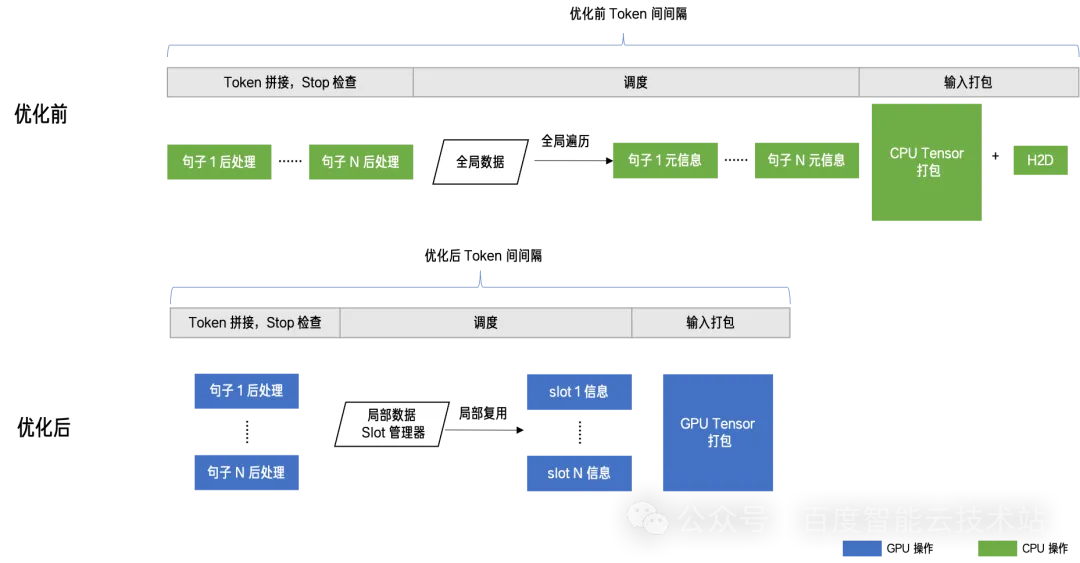

This scheme mainly restructures vLLM's scheduling logic. It comprehensively optimizes token concatenation, end detection, user responses, request scheduling and input preparation, improves the parallel efficiency of each module, and compresses the time of the "remaining part" left by the previous scheme.

We found that vLLM's scheduling logic works from a global perspective: every step re-selects requests from the global pool. In effect, after the current step ends, the scheduler "puts back" the sequences in the current batch into the global request pool, and before the next step starts, it "retrieves" suitable requests from that pool again. This switching introduces extra overhead.

To support global scheduling, vLLM also uses many for loops in other stages, such as token concatenation, that process requests one at a time. Because all of this work happens on the CPU, the input-packing step must then perform time-consuming host-to-device copies.

In fact, much of the information can be reused between steps: a large proportion of the requests that are put back and fetched again are the same ones. Based on this insight, Baidu Baige's AIAK treats each batch the GPU iterates over as a set of fixed slots. Once a request is scheduled into a slot, it is not swapped out until all of its inference iterations are complete. AIAK builds its scheduling optimizations on top of this fixed-slot abstraction.
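The fixed-slot idea can be illustrated with a small data structure. This is a hypothetical sketch, not AIAK's code: `SlotBatch`, `admit` and `step` are invented names, and the per-slot `last_token` list stands in for what we assume would be persistent input buffers, so that each step writes only what changed instead of rebuilding the batch from the global pool.

```python
class SlotBatch:
    """Hypothetical fixed-slot batch: a request stays pinned to its slot
    until it finishes, so each step only touches slots that changed."""

    def __init__(self, num_slots: int):
        self.slots = [None] * num_slots    # request id per slot, or None if free
        self.last_token = [0] * num_slots  # persistent per-slot input buffer

    def admit(self, waiting: list) -> list:
        """Place waiting requests into free slots only; returns the slots filled."""
        filled = []
        for i, occupant in enumerate(self.slots):
            if occupant is None and waiting:
                self.slots[i] = waiting.pop(0)
                filled.append(i)
        return filled

    def step(self, new_tokens: dict, finished: set) -> None:
        """Write this step's new token into each active slot; free finished slots."""
        for i, tok in new_tokens.items():
            self.last_token[i] = tok
        for i in finished:
            self.slots[i] = None


b = SlotBatch(3)
waiting = ["r1", "r2", "r3", "r4"]
print(b.admit(waiting))  # [0, 1, 2]; "r4" keeps waiting
b.step({0: 7, 1: 8, 2: 9}, finished={1})
print(b.admit(waiting))  # [1]: only the freed slot is refilled
print(b.slots)           # ['r1', 'r4', 'r3']
```

Running requests are never returned to a global pool, so the per-step scheduling work is proportional to the number of slots that changed rather than to the whole batch.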


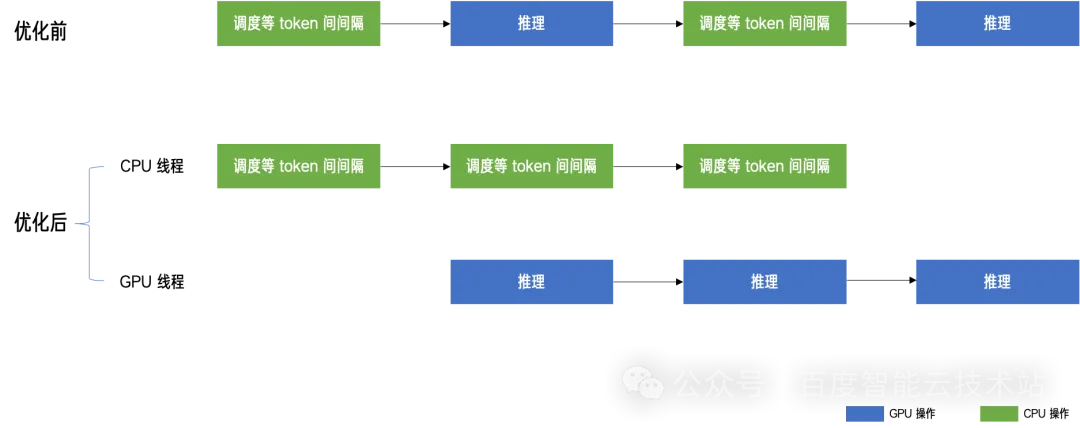

The multi-process architecture moves logically independent work into other processes so it can run as a parallel pipeline, while the static-slot scheme tackles the token interval directly, reworking the scheduling mode to squeeze the time spent in each stage. Together, the two schemes reduced the token interval from 35 ms to 14 ms and raised GPU utilization from 50% to 75%, but the goal of 100% GPU utilization and a zero token interval was still far away.

Through an asynchronous scheduling mode, Baidu Baige's AIAK removes the "remaining part" left by the previous schemes and finally reaches this limit.

Put simply, it completely separates the CPU-intensive token interval and the GPU-intensive forward inference into two pipelines that run in parallel, adding a second level of pipelining.

To keep the implementation simple, we run the relatively simple forward inference as a task in a background thread, while the main thread runs the complex core scheduling logic. The two threads communicate through a queue, acting as producer and consumer, and synchronize through a thread semaphore and events on the GPU stream so that the two pipelines overlap.
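A minimal producer/consumer sketch of this arrangement follows, under stated assumptions: the main thread produces scheduled batches, a background thread consumes them and runs `forward`, and a `threading.Semaphore` caps how far scheduling may run ahead. GPU-stream events are not modelled, and the sketch ignores the fact that step N+1's inputs depend on step N's sampled tokens, which a real engine must resolve. `async_engine`, `schedule` and `forward` are hypothetical names.

```python
import queue
import threading


def async_engine(num_steps: int, schedule, forward, max_in_flight: int = 2) -> list:
    """Main thread: CPU-heavy scheduling (producer).
    Background thread: forward inference (consumer)."""
    work: queue.Queue = queue.Queue()
    in_flight = threading.Semaphore(max_in_flight)  # caps steps scheduled ahead
    results = []  # written only by the worker; read after join()

    def gpu_worker() -> None:
        while (batch := work.get()) is not None:  # None is the stop sentinel
            results.append(forward(batch))
            in_flight.release()  # a step finished: scheduler may run further ahead

    worker = threading.Thread(target=gpu_worker)
    worker.start()
    for step in range(num_steps):
        in_flight.acquire()       # block if scheduling is too far ahead of the GPU
        work.put(schedule(step))  # scheduling overlaps the forward pass in flight
    work.put(None)
    worker.join()
    return results


print(async_engine(3, schedule=lambda s: [s, s + 1], forward=sum))  # [1, 3, 5]
```

Bounding the lead with a semaphore keeps the scheduler from racing arbitrarily far ahead while still letting the CPU prepare the next batch during the current forward pass.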


As in any system that uses GPUs or similar hardware as accelerators, 100% utilization has always been the ultimate goal of engineers. Baidu Baige's AI acceleration suite AIAK went through a long and hard exploration in optimizing TPOT while pursuing full GPU utilization, and finally achieved a zero token interval and 100% utilization.

Beyond the many ingenious optimizations used in this process, Baidu's AIAK has also done extensive work on quantization, speculative decoding, serving, prefill/decode disaggregation and multi-chip adaptation. It is committed to building a high-performance inference engine for all scenarios and multiple chips, helping users reduce inference costs and take the user experience to the next level.
