#News ·2025-01-08

Tell me and I forget, teach me and I remember, involve me and I learn.

-- Benjamin Franklin

Breaking down the data wall, what else can we do?

Recently, researchers from Tsinghua University, UIUC, and other institutions proposed PRIME (Process Reinforcement through IMplicit REwards).

GitHub address: https://github.com/PRIME-RL/PRIME

It is an open-source online RL solution with process rewards that improves the reasoning ability of language models beyond what methods such as SFT (supervised fine-tuning) or distillation achieve.

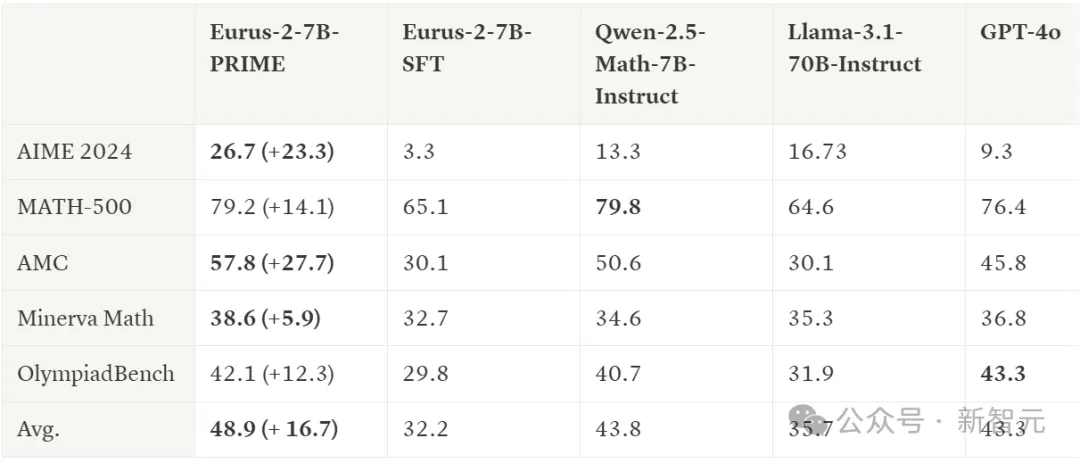

Compared to SFT, PRIME enabled the model to achieve a significant improvement on key benchmarks: an average improvement of 16.7%, and an improvement of more than 20% in both AMC and AIME.

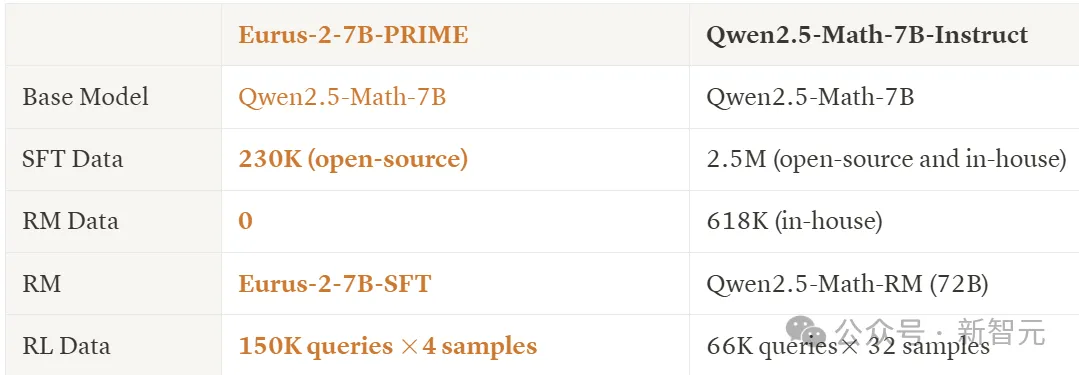

Eurus-2-7B-PRIME starts from the same base model (Qwen2.5-Math-7B) as Qwen2.5-Math-7B-Instruct, yet on 5 of the 6 tests in the table above it exceeds the Instruct version, as well as GPT-4o.

This result was achieved with only 1/10 of Qwen Math's data resources (230K SFT + 150K RL)!

The authors have published all the models and data used in this study, and interested readers can see the link at the end of the article.

Process reward model

As mentioned earlier, the authors chose Qwen2.5-Math-7B-Base as the starting point and then raised the difficulty of the evaluation, using competition-level math and programming benchmarks. These include AIME 2024, AMC, MATH-500, Minerva Math, OlympiadBench, LeetCode, and LiveCodeBench (v2).

The base model is first supervised fine-tuned to obtain a starter model for RL (this teaches the model certain reasoning patterns).

To do this, the researchers designed an action-centric chain-of-thought reasoning framework, in which the policy model chooses one of seven actions at each step and stops after executing each action.

To build the SFT dataset, the researchers collected reasoning instructions from several open-source datasets.

It is worth noting that the authors deliberately held back many datasets with ground-truth answers for later RL training. The goal is for SFT and RL to use different datasets, which diversifies exploration in RL, and the authors believe that ground-truth labels matter more in RL.

The authors generated responses to the instructions with LLaMA-3.1-70B-Instruct, using a system prompt that asks the model to perform action-centric chain-of-thought reasoning.

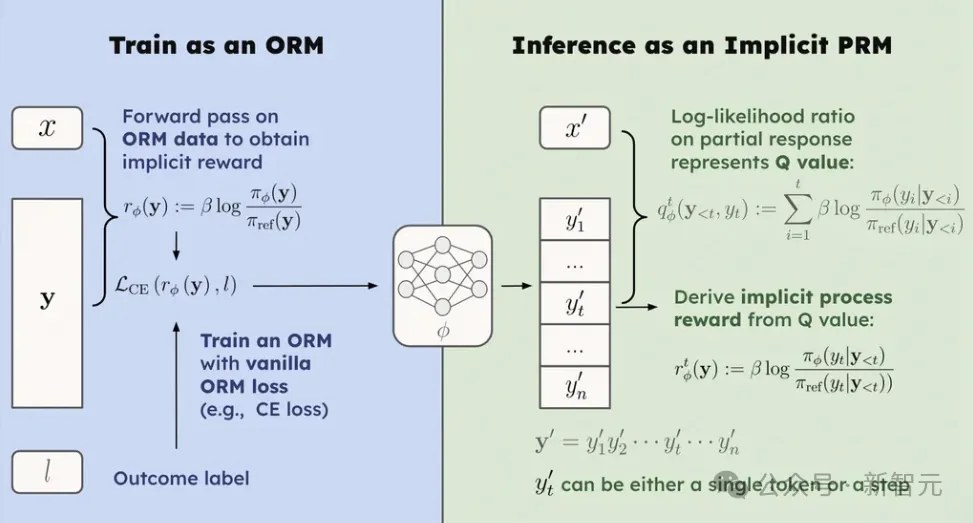

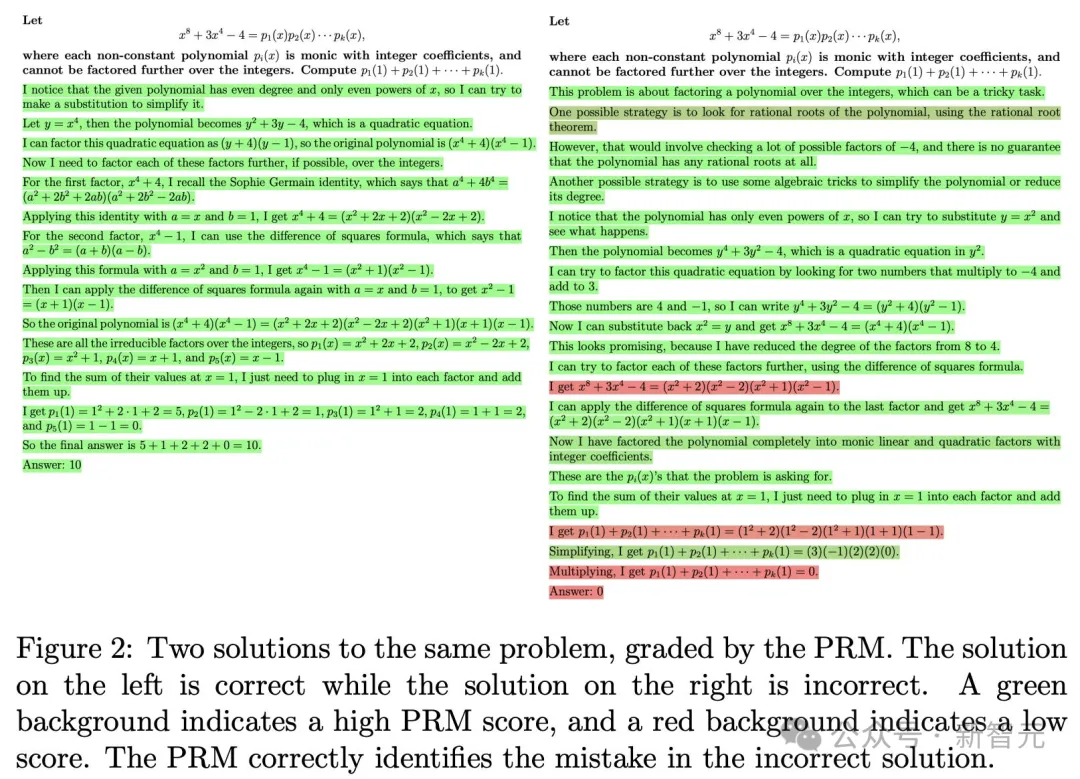

Next comes the process reward model (PRM). PRIME uses an implicit PRM, which only requires training an outcome reward model (ORM) on response-level labels.

A simple way to understand a process reward model is that it scores each reasoning step individually.

The PRM evaluates the response at this granularity.

With the implicit PRM used in this work, process rewards come for free: the PRM is obtained simply by collecting response-level data and training an ORM, with no step labels to annotate.

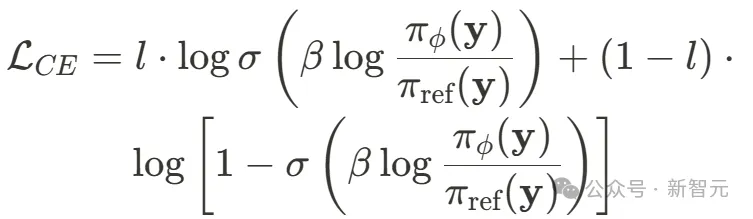

This result does not depend on the specific ORM training objective. For example, the implicit PRM can be instantiated with a cross-entropy loss.
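For readers who want the formulas behind this statement, the following is a sketch of the implicit PRM parameterization and a cross-entropy objective as they are commonly written. The notation is assumed here rather than taken from the article: $\pi_\phi$ is the reward model being trained, $\pi_{\text{ref}}$ is the reference model, $\beta$ is a scaling coefficient, $\sigma$ is the sigmoid function, and $l \in \{0, 1\}$ is the response-level correctness label.

```latex
% Response-level reward expressed as a scaled log-likelihood ratio
r_\phi(\mathbf{y}) = \beta \log \frac{\pi_\phi(\mathbf{y})}{\pi_{\text{ref}}(\mathbf{y})}

% Cross-entropy loss on the response-level label l (minimized during training)
\mathcal{L}_{\text{CE}}(\phi) = -\Big[\, l \,\log \sigma\big(r_\phi(\mathbf{y})\big)
  + (1 - l)\,\log\big(1 - \sigma\big(r_\phi(\mathbf{y})\big)\big) \Big]
```

Because the reward is a log-likelihood ratio over the whole response, it decomposes into a sum of per-token log-ratios, which is what makes step-level rewards available without step labels.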

The goal of this work is to make broad use of reinforcement learning (RL) to improve reasoning ability. Given limited resources, the authors summarize some best practices:

Start with a ground-truth verifier and high-quality data: The authors carried out rigorous data collection and cleaning to obtain verifiable RL data, and found that an outcome verifier alone was sufficient to build a strong baseline.

The authors compared different RL algorithms and concluded that REINFORCE-style methods without a value model are sufficiently effective.

Stabilize training with "mid-difficulty" problems: The authors propose a mechanism called the online prompt filter, which largely stabilizes RL training by filtering out problems that are too hard or too easy.
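The online prompt filter can be illustrated with a short Python sketch. It keeps a prompt only if the fraction of correct sampled responses falls inside an accuracy band. The function name `filter_prompts` and the thresholds `min_acc` and `max_acc` are hypothetical choices for illustration; the article does not state the bounds the authors used.

```python
from typing import Dict, List


def filter_prompts(
    rollout_correct: Dict[str, List[bool]],
    min_acc: float = 0.2,  # assumed lower bound, not given in the article
    max_acc: float = 0.8,  # assumed upper bound, not given in the article
) -> List[str]:
    """Keep prompts whose sampled accuracy lies within [min_acc, max_acc]."""
    kept = []
    for prompt, outcomes in rollout_correct.items():
        if not outcomes:
            continue  # no samples, so accuracy cannot be estimated
        accuracy = sum(outcomes) / len(outcomes)
        if min_acc <= accuracy <= max_acc:
            kept.append(prompt)
    return kept


# Verifier results for 4 sampled responses per prompt (toy data).
batch = {
    "too_easy": [True, True, True, True],
    "too_hard": [False, False, False, False],
    "medium": [True, False, True, False],
}
kept = filter_prompts(batch)
```

In this toy batch only the prompt with mixed outcomes survives. Prompts that are always solved or never solved give every sampled response the same outcome reward, so they carry little learning signal for a group-based baseline.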

Integrating PRM into online reinforcement learning is no easy task, and there are several key challenges that need to be addressed.

How to provide dense rewards for reinforcement learning?

Reward sparsity is a long-standing problem in reinforcement learning. So far, there is still no particularly good way to construct dense rewards for online reinforcement learning of LLMs.

Previous approaches mainly build an additional value model to supply dense rewards, but such models are notoriously difficult to train and offer little performance improvement.

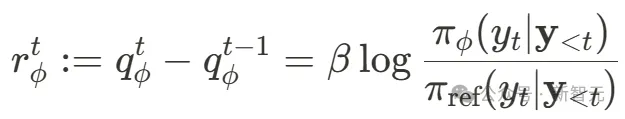

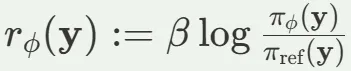

Building on the implicit PRM introduced above, token-level process rewards can be obtained for free from the implicit PRM.
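As a minimal sketch of what "for free" means in practice, the snippet below computes a per-token reward as β times the difference between the PRM's and the reference model's log-probability of each generated token. This assumes the usual log-likelihood-ratio form of the implicit PRM; the function name `token_process_rewards` and the toy log-probabilities are illustrative, and β = 0.05 is the PRM training value reported later in the article.

```python
from typing import List


def token_process_rewards(
    prm_logprobs: List[float],
    ref_logprobs: List[float],
    beta: float = 0.05,
) -> List[float]:
    """Per-token reward: beta * (log pi_prm(y_t | y_<t) - log pi_ref(y_t | y_<t))."""
    if len(prm_logprobs) != len(ref_logprobs):
        raise ValueError("both models must score the same tokens")
    return [beta * (p - r) for p, r in zip(prm_logprobs, ref_logprobs)]


# Toy log-probabilities of three generated tokens under each model.
prm_lp = [-1.0, -0.5, -2.0]
ref_lp = [-1.5, -0.5, -1.0]
rewards = token_process_rewards(prm_lp, ref_lp)

# Summing the token rewards recovers the response-level log-ratio reward.
response_reward = sum(rewards)
```

Only two forward passes over the sampled response are needed (one per model), and no step-level annotation enters the computation.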

This approach can directly replace the value model in PPO and is very easy to combine with any advantage estimation function and outcome reward. In practice, the authors integrated process rewards with REINFORCE, RLOO, GRPO, ReMax, and PPO with minor modifications.
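To make the combination with an advantage estimator concrete, here is one simple way to merge token-level process rewards with an outcome reward under an RLOO-style leave-one-out baseline. This is a sketch under stated assumptions, not necessarily the authors' exact estimator: the helper names, the undiscounted reward-to-go (`gamma=1.0`), and the choice to apply the baseline only to the outcome reward are all illustrative.

```python
from typing import List


def rloo_advantages(rewards: List[float]) -> List[float]:
    """Leave-one-out baseline: each reward minus the mean of the other samples."""
    k = len(rewards)
    if k < 2:
        raise ValueError("RLOO needs at least two samples per prompt")
    total = sum(rewards)
    return [r - (total - r) / (k - 1) for r in rewards]


def reward_to_go(token_rewards: List[float], gamma: float = 1.0) -> List[float]:
    """Discounted sum of the current and all future token rewards."""
    returns, running = [], 0.0
    for r in reversed(token_rewards):
        running = r + gamma * running
        returns.append(running)
    return returns[::-1]


def combined_token_advantages(
    outcome_rewards: List[float],
    process_rewards: List[List[float]],
    gamma: float = 1.0,
) -> List[List[float]]:
    """Per-token advantage = process reward-to-go + outcome RLOO advantage."""
    outcome_adv = rloo_advantages(outcome_rewards)
    return [
        [g + adv for g in reward_to_go(tokens, gamma)]
        for tokens, adv in zip(process_rewards, outcome_adv)
    ]


# Four sampled responses to one prompt (toy numbers).
outcome = [1.0, 0.0, 0.0, 1.0]
process = [[0.1, -0.1], [0.0], [0.2, 0.2, 0.2], [-0.3]]
advantages = combined_token_advantages(outcome, process)
```

No value model appears anywhere in this computation: the dense signal comes from the per-token process rewards, and the baseline comes from the other samples of the same prompt.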

How to set up a good PRM to start RL?

Even if we find a way to use process rewards in RL, training a good PRM is no easy task: it requires collecting large-scale (process) reward data, which is expensive, and the model must generalize well while coping with distribution shift.

An implicit PRM is essentially a language model, so in theory any language model can serve as the PRM. In practice, the authors found that the initial policy model itself is a good choice.

How to update PRM online to prevent reward hacking?

In online RL, it is critical to keep the reward model (RM) from being over-optimized or hacked, which requires updating the RM continually alongside the policy model. However, because step labels are expensive, it is difficult to update a PRM during RL training, which leads to scalability and generalization problems.

The implicit PRM in this work, however, needs only outcome labels for its updates. In other words, the PRM can easily be updated during training using the outcome verifier.

In addition, a double forward pass is possible: first update the PRM on the policy rollouts, then recompute the process rewards with the updated PRM, which gives a more accurate reward estimate.

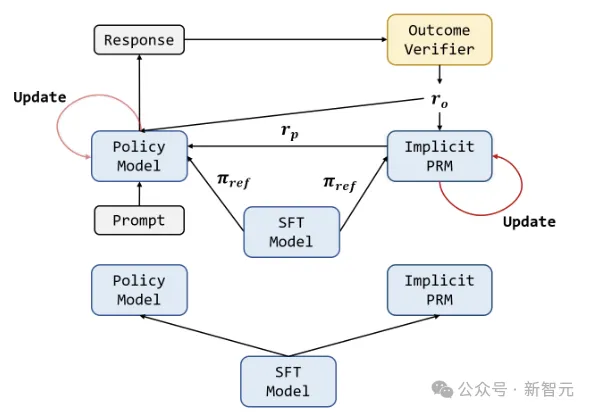

The following diagram shows the entire cycle of the PRIME algorithm:

Both the policy model and the PRM are initialized from the SFT model. In each RL iteration, the policy model first generates rollouts. The rollouts are then scored by the implicit PRM and the outcome verifier, and the implicit PRM is updated on these rollouts using the outcome reward. Finally, the outcome reward r_o and the process reward r_p are combined to update the policy model.
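The order of operations in one iteration can be sketched as a small Python function that takes each component as a callable. This is only a skeleton of the control flow, using the variant in which the PRM is updated before the process rewards are computed; the function name `prime_iteration` and all parameter names are hypothetical, and the lambdas in the usage example are toy stand-ins rather than real models.

```python
from typing import Callable, List, Sequence


def prime_iteration(
    prompts: Sequence[str],
    generate: Callable[[str], List[str]],
    verify: Callable[[str, str], float],
    update_prm: Callable[[List[str], List[float]], None],
    score_tokens: Callable[[str], List[float]],
    update_policy: Callable[[List[str], List[float], List[List[float]]], None],
) -> None:
    """Run one RL iteration: rollout, verify, update PRM, score, update policy."""
    responses: List[str] = []
    outcome_rewards: List[float] = []
    # 1. The policy generates rollouts and the outcome verifier labels them.
    for prompt in prompts:
        for response in generate(prompt):
            responses.append(response)
            outcome_rewards.append(verify(prompt, response))
    # 2. The implicit PRM is updated using only the outcome labels.
    update_prm(responses, outcome_rewards)
    # 3. Process rewards are computed with the freshly updated PRM.
    process_rewards = [score_tokens(response) for response in responses]
    # 4. Both reward types are handed to the policy update.
    update_policy(responses, outcome_rewards, process_rewards)


# Toy stand-ins that only record the order in which they are called.
log: List[str] = []
prime_iteration(
    prompts=["2+2=?"],
    generate=lambda p: log.append("generate") or ["4", "5"],
    verify=lambda p, r: log.append("verify") or float(r == "4"),
    update_prm=lambda rs, outs: log.append("update_prm"),
    score_tokens=lambda r: log.append("score") or [0.0],
    update_policy=lambda rs, outs, procs: log.append("update_policy"),
)
```

Passing the components in as callables keeps the sketch independent of any training framework; in a real system each one would wrap a model forward pass or an optimizer step.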

Here is the pseudo-code for the algorithm:

By default, the implicit PRM is initialized from the SFT model, and the SFT model is also kept as the reference model for computing log-probabilities. As for hyperparameters, the learning rate of the policy model is fixed at 5e-7 and that of the PRM at 1e-6, the AdamW optimizer is used, the mini-batch size is 256, and the micro-batch size is 8.

The rollout phase collects 256 prompts and samples 4 responses for each prompt. β is set to 0.05 for PRM training, and the KL coefficient is set to 0 in all experiments.
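For quick reference, the reported settings can be gathered into a small configuration object. The class and field names below are hypothetical, and the two derived quantities rest on assumptions the article does not confirm: that every sampled response is kept, and that the mini-batch is processed in micro-batches on a single process.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PrimeHyperparams:
    """Settings reported in the article; field names are illustrative."""

    policy_lr: float = 5e-7
    prm_lr: float = 1e-6
    optimizer: str = "AdamW"
    mini_batch_size: int = 256
    micro_batch_size: int = 8
    prompts_per_rollout: int = 256
    responses_per_prompt: int = 4
    prm_beta: float = 0.05
    kl_coef: float = 0.0

    @property
    def responses_per_rollout(self) -> int:
        # Assumes no response is filtered out after sampling.
        return self.prompts_per_rollout * self.responses_per_prompt

    @property
    def micro_batches_per_mini_batch(self) -> int:
        # Assumes single-process gradient accumulation.
        return self.mini_batch_size // self.micro_batch_size


cfg = PrimeHyperparams()
```

Under these assumptions each rollout phase yields 256 × 4 = 1024 responses, and each mini-batch is split into 256 / 8 = 32 micro-batches.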

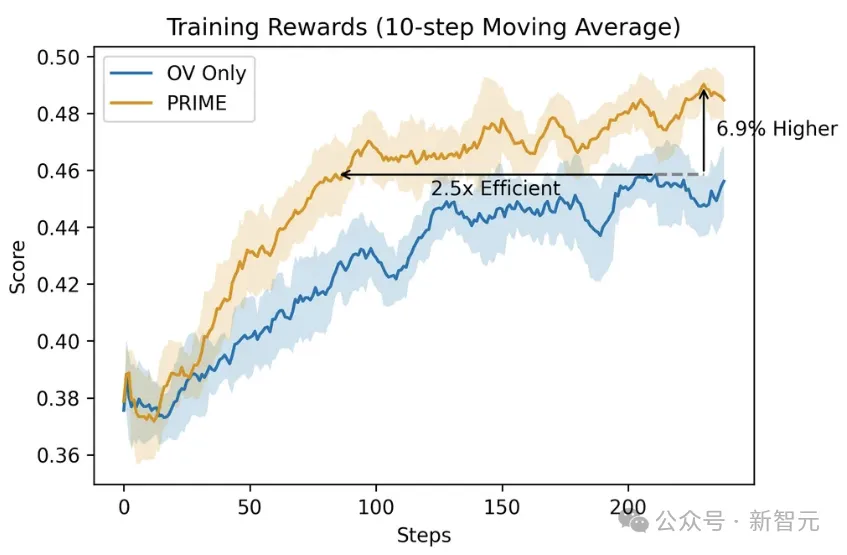

Compared with RLOO using only an outcome verifier (OV), PRIME speeds up RL training by 2.5 times and raises the final reward by 6.9%, with lower variance than training on sparse rewards. PRIME also consistently outperforms OV on downstream tasks.

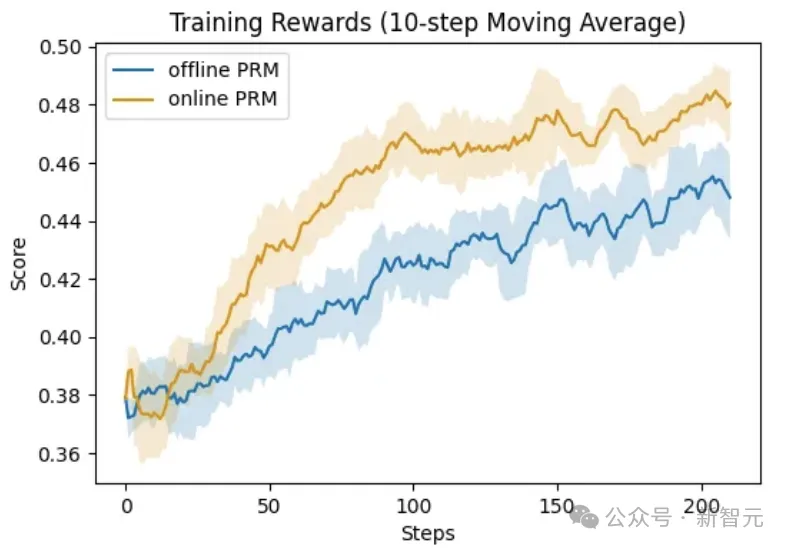

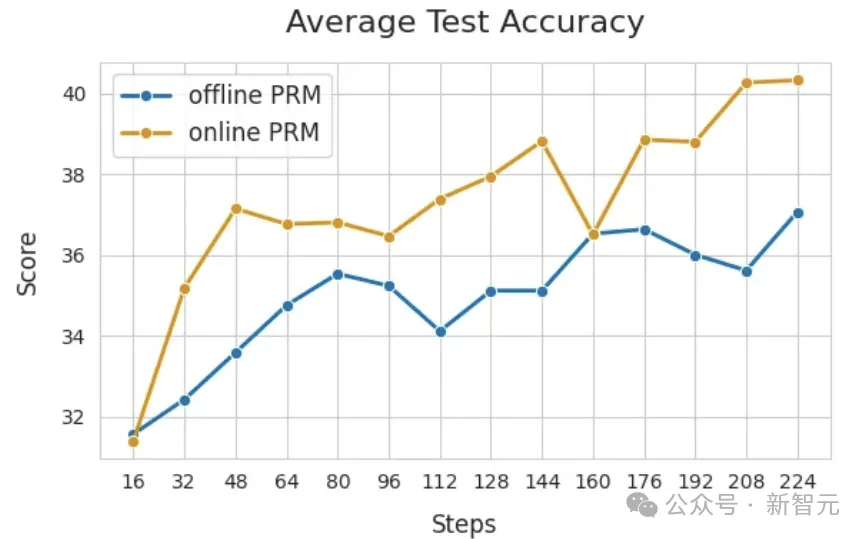

The following shows the importance of updating the PRM online. Two settings are compared: an online PRM initialized from Eurus-2-7B-SFT, and an offline PRM initialized from EurusPRM-Stage1.

As can be seen from the figure below, online PRM performs significantly better than offline PRM on both the training set and the test set.
