
#News ·2025-01-08

With the explosive growth in demand for artificial intelligence and high-performance computing, graphics processing units (GPUs) have become critical infrastructure for complex computing tasks. Traditional GPU resource allocation, however, is usually static: a fixed amount of GPU resources is pre-allocated when a task starts. Static allocation often leads to low utilization, especially when workloads fluctuate widely or resource demand is uncertain, leaving valuable computing resources idle.

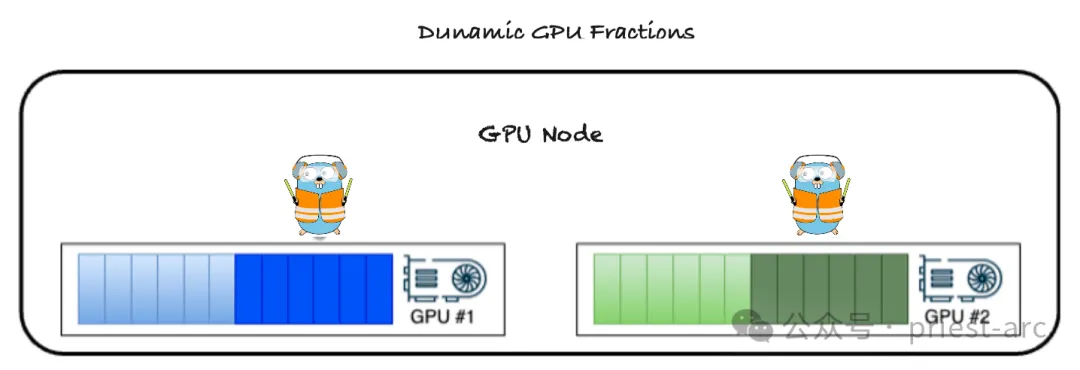

Dynamic GPU Fractions emerged to address this bottleneck.

As artificial intelligence technology develops, demand for high-performance computing resources has risen sharply across industries, and demand for GPUs, the key hardware for accelerating AI computing, is especially strong. How to keep AI workloads running at high performance while minimizing the cost of GPU usage remains an important challenge for the industry.

To address this challenge, the industry has introduced two features, Dynamic GPU Fractions and the Node Level Scheduler, which are designed to optimize GPU resource utilization and improve the overall performance of AI workloads.

Dynamic GPU allocation is a major change in how GPU resources are managed. Traditional approaches use a static model in which each workload receives a fixed proportion of GPU memory and computing power at startup. The drawback is that whenever a workload's actual GPU demand is below its allocation, the unused portion sits idle, which wastes resources and lowers GPU utilization.
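The cost of static allocation can be illustrated with a small calculation. The sketch below is only an illustration: the function name and the numbers are assumptions, not measurements from a real cluster.

```python
def static_utilization(allocated, used):
    """Utilization of statically allocated GPU shares.

    `allocated` and `used` are per-workload GPU fractions (0.0 to 1.0).
    """
    total_allocated = sum(allocated)
    # A workload can never use more than its fixed allocation.
    total_used = sum(min(u, a) for u, a in zip(used, allocated))
    return total_used / total_allocated


# Hypothetical numbers: two workloads, each pinned to half a GPU.
allocated = [0.5, 0.5]
used = [0.1, 0.5]  # the first workload is mostly idle
print(f"{static_utilization(allocated, used):.0%}")
```

With these assumed numbers, 40% of the GPU is reserved but unused, and no other workload can claim it.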

Dynamic GPU allocation changes this. It lets AI workloads request GPU resources according to their instantaneous needs, so resources are allocated on demand and scale elastically. The core mechanism is that users specify two key parameters for each workload: a GPU fraction, which is the guaranteed minimum share, and an upper limit, which caps how far the workload may scale when spare capacity exists.

For example, a workload might be configured with a GPU fraction of 0.25 and an upper limit of 0.80. The workload is then guaranteed at least 25% of the GPU's resources, which covers its basic operational needs.

At the same time, if there are GPU resources left, the workload can dynamically scale its resource usage up to 80%, making full use of the available GPU computing power and significantly improving computing efficiency. This flexibility avoids idle resources, maximizes overall GPU utilization, and reduces operating costs.
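The 0.25 / 0.80 example can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the `Workload` class, the `allocate` function, the field names and the "hand out spare capacity in list order" policy are all hypothetical, and a real scheduler may distribute spare capacity differently.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    request: float  # guaranteed GPU fraction, e.g. 0.25
    limit: float    # ceiling the workload may burst to, e.g. 0.80
    demand: float   # fraction the workload wants right now


def allocate(workloads, capacity=1.0):
    """Split one GPU among workloads for the current instant."""
    if sum(w.request for w in workloads) > capacity:
        raise ValueError("guaranteed fractions exceed GPU capacity")

    # Guaranteed part: each workload gets what it needs, up to its request.
    shares = {w.name: min(w.demand, w.request) for w in workloads}
    spare = capacity - sum(shares.values())

    # Burst part: hand out spare capacity in list order (an assumed policy),
    # never exceeding a workload's limit or its actual demand.
    for w in workloads:
        want = min(w.demand, w.limit) - shares[w.name]
        extra = max(0.0, min(want, spare))
        shares[w.name] += extra
        spare -= extra
    return shares


shares = allocate([
    Workload("training", request=0.25, limit=0.80, demand=0.90),
    Workload("notebook", request=0.25, limit=0.50, demand=0.10),
])
print({name: round(share, 2) for name, share in shares.items()})
```

Here the training workload wants 90% of the GPU but is capped at its 0.80 limit, while the lightly used notebook holds only the 0.10 it needs. If the notebook's demand rises later, rerunning `allocate` gives it back its guaranteed 0.25.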

Dynamic GPU allocation benefits users in several ways: it improves resource management and also raises the performance and flexibility of AI workloads. Its core advantages are as follows:

Dynamic GPU allocation adjusts the allocation of GPU resources to the real-time requirements of each workload, which keeps utilization high. This avoids wasted GPU resources and improves overall computing efficiency. For enterprises, it reduces the operating cost of GPU infrastructure, improves return on investment (ROI), and allows resources to be managed at a fine granularity.

Dynamic allocation lets workloads obtain the resources they need when they need them, which improves overall performance and reduces latency in task execution. AI applications and models run in less time, and tasks are processed more efficiently. Developers and data science teams also become more productive, because they can focus on innovation and problem solving rather than being held back by resource limits.

Because workloads can request GPU resources on demand, users can adjust computing resources quickly as business needs change. Dynamic GPU allocation handles both sudden bursts of high-performance demand and long-running large-scale tasks, so AI projects can scale without sacrificing performance and organizations can adapt to complex, changing business environments.

Dynamic GPU allocation also makes the ownership of GPU resources flexible. For example, the GPU capacity that an interactive notebook is not using can be released to other tasks that need more resources while the notebook itself keeps running. Users continue to work in the notebook without interruption, and the freed capacity supports other high-priority tasks. This keeps the user experience smooth while improving the overall utilization of the GPU cluster.

Together, these capabilities help enterprises manage computing resources more efficiently, reduce costs and accelerate innovation as AI and high-performance computing evolve. For a single user or an entire organization, dynamic GPU allocation is a strong enabler for unlocking AI's potential and driving business success.

Dynamic GPU Fractions technology is still developing rapidly. Future work to improve its efficiency, flexibility and ease of use focuses mainly on two directions: deeper integration with container orchestration systems, and support for heterogeneous hardware.

The first direction is native support at the Kubernetes level. Whether the platform is upstream Kubernetes, a customized distribution built on it, or a commercial orchestration system from a major vendor, dynamic GPU allocation is being integrated deeply into the container orchestration layer so that GPU resources are managed and scheduled in one place. This simplifies the deployment and management of GPU resources and improves the portability and resiliency of containerized applications.

For example, the Kubernetes extension mechanism allows GPU resources to be described and managed through CRDs (Custom Resource Definitions), with the Operator pattern automating their allocation and reclamation.
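The reconcile step at the heart of the Operator pattern can be sketched without any Kubernetes client library. Everything below is hypothetical: the API group `example.com`, the kind `GpuFraction`, the field names and the `reconcile` function are invented for illustration and do not correspond to a real Kubernetes or vendor API. A real operator would watch the API server and write the status back to it.

```python
# Hypothetical custom resource, shown as the dict a client would see.
# Group, kind and field names are invented for illustration only.
gpu_fraction = {
    "apiVersion": "example.com/v1alpha1",
    "kind": "GpuFraction",
    "metadata": {"name": "notebook-a"},
    "spec": {"request": 0.25, "limit": 0.80},  # desired state
    "status": {"allocated": 0.0},               # observed state
}


def reconcile(resource, demand, free):
    """One reconcile pass: recompute the allocation from spec and current state.

    `demand` is the GPU fraction the workload wants right now.
    `free` is the GPU fraction not held by other workloads.
    """
    spec = resource["spec"]
    guaranteed = min(demand, spec["request"])  # never below this
    burst_cap = min(demand, spec["limit"])     # never above this
    target = max(guaranteed, min(burst_cap, free))
    resource["status"]["allocated"] = target
    return target


# Busy workload on an otherwise empty GPU: allocation grows to the limit.
print(reconcile(gpu_fraction, demand=0.90, free=1.00))
# Workload goes idle: the next pass reclaims the unused share.
print(reconcile(gpu_fraction, demand=0.10, free=1.00))
```

Because each pass recomputes the target from the spec and the current state, the same function covers both allocation and reclamation.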

The second direction is heterogeneous computing. Dynamic GPU allocation is being extended to support GPU hardware of different architectures (such as NVIDIA, AMD and Intel) as well as other processors such as CPUs and FPGAs, so that resources in heterogeneous computing environments can be managed and scheduled in a unified way. This gives users more flexibility and choice and improves overall system performance and efficiency.
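One way to picture unified scheduling across vendors is a common device record plus a placement function that ignores the vendor. This is a minimal sketch under assumptions: the `Accelerator` class, its fields and the best-fit policy in `pick_device` are hypothetical and not taken from any particular scheduler.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    name: str
    vendor: str  # e.g. "NVIDIA", "AMD", "Intel"
    kind: str    # e.g. "gpu", "fpga"
    free: float  # unallocated fraction of the device, 0.0 to 1.0


def pick_device(devices, kind, fraction):
    """Best-fit placement: choose the device of the requested kind with the
    least free capacity that still fits the request, regardless of vendor."""
    candidates = [d for d in devices if d.kind == kind and d.free >= fraction]
    if not candidates:
        return None  # nothing fits; the request has to wait
    best = min(candidates, key=lambda d: d.free)
    best.free -= fraction
    return best


devices = [
    Accelerator("gpu0", "NVIDIA", "gpu", free=0.3),
    Accelerator("gpu1", "AMD", "gpu", free=0.6),
    Accelerator("fpga0", "Intel", "fpga", free=1.0),
]
chosen = pick_device(devices, kind="gpu", fraction=0.5)
print(chosen.name if chosen else "no device available")
```

Best fit is only one possible policy. It tends to keep large free blocks available for later requests, at the cost of packing devices tightly.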

Building an ecosystem around Dynamic GPU Fractions technology is key to its maturity and wide adoption. A healthy ecosystem promotes technical innovation, lowers the barrier to entry for users, and accelerates market adoption.

As artificial intelligence (AI) and high-performance computing (HPC) develop, demand for GPU resources keeps rising. Because of its flexibility and efficiency, Dynamic GPU Fractions technology is becoming an important direction for next-generation GPU resource management. Realizing this ecosystem requires more than optimizing the technology itself: a complete collaborative environment and set of application scenarios must be built around it so that it can deliver its full value to users and developers.

In this sense, ecosystem development around dynamic GPU allocation is a natural step toward efficient management of AI computing resources. Through integration with container orchestration systems, AI frameworks and observability tools, and through use in diverse application scenarios, dynamic GPU allocation is becoming a core solution for GPU resource management. As the technology is optimized and the ecosystem matures, its value in high-performance computing is expected to grow, opening up more room for innovation for enterprises and developers.

In summary, dynamic GPU allocation and the node-level scheduler fill gaps in existing GPU management mechanisms and provide a new approach to fine-grained management and intelligent scheduling of GPU resources. These features help enterprises reduce operating costs while improving AI workload performance, and they lay a foundation for future GPU technology and new application scenarios.

As demand for GPUs continues to grow, these technologies will help users make more efficient use of limited computing resources and stay competitive in the era of artificial intelligence. Through this approach to resource scheduling, we hope to set a new technical benchmark for the industry and drive continued innovation in high-performance computing.

That's all for today's analysis. For more in-depth analysis of GPU-related technologies, best practices and emerging developments, please follow our WeChat official account "Architecture Station".

Happy Coding ~

