#News ·2025-01-02

Has Microsoft leaked OpenAI's secrets again? A paper states it plainly:

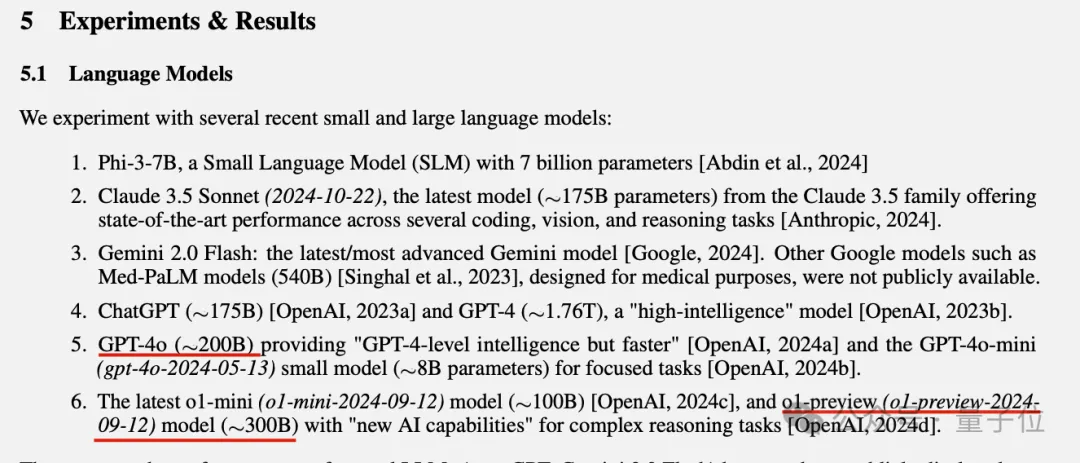

o1-preview has about 300B parameters, GPT-4o about 200B, GPT-4o-mini about 8B...

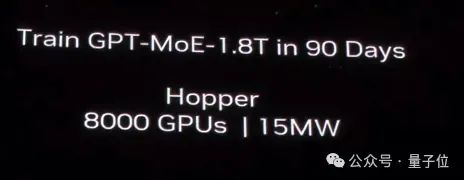

When Nvidia unveiled the B200 in early 2024, it indicated that GPT-4 is a 1.8T-parameter MoE model, that is, 1,800B. Microsoft's figure is more precise: 1.76T.

The paper also lists parameter counts for OpenAI's mini series and Claude 3.5 Sonnet, summarized as follows:

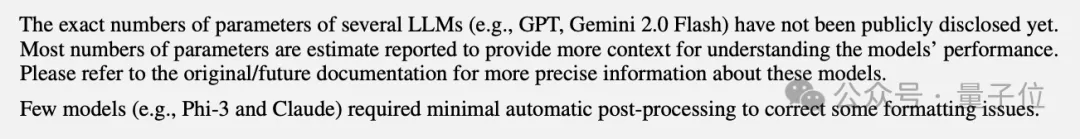

The paper does carry a disclaimer at the end:

Exact figures are not publicly available, and most of the numbers here are estimates.

Even so, many people think it is not that simple.

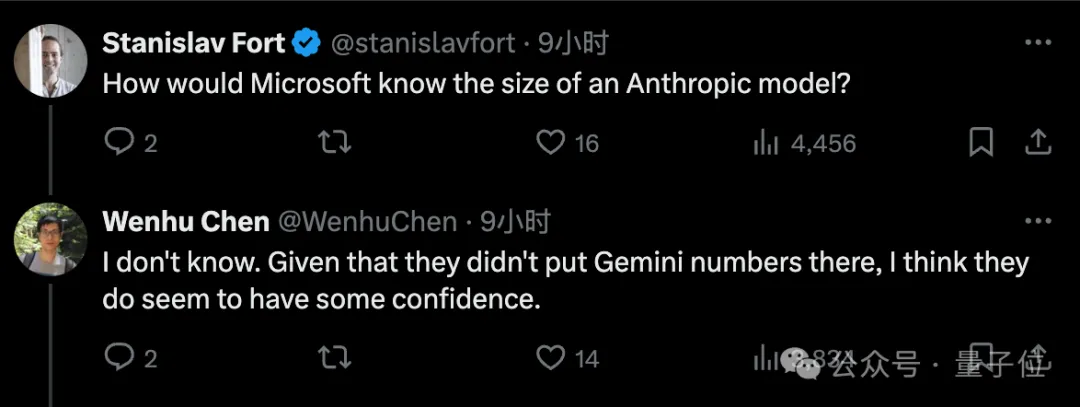

For example, why are there no parameter estimates for Google's Gemini models? Perhaps the authors are confident in the numbers they did publish.

Others argue that most models run on Nvidia GPUs, so their size can be estimated from token generation speed.

Only Google's models run on TPUs, which makes them hard to estimate.

And it's not the first time Microsoft has done this.

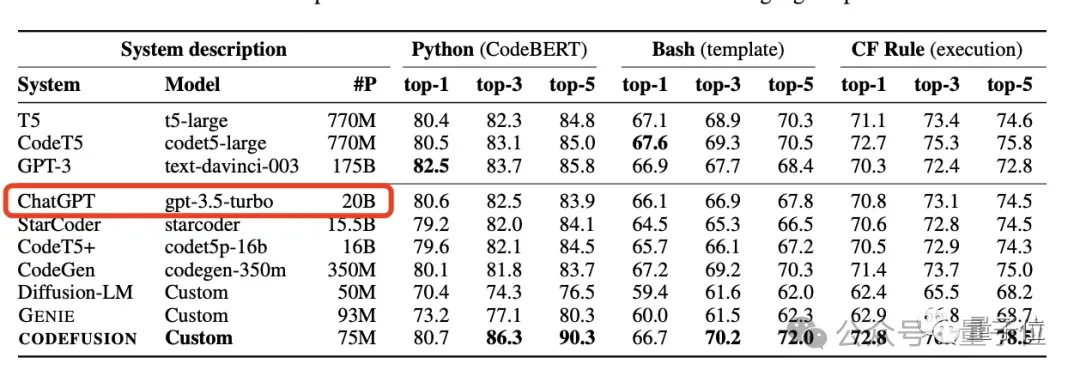

In October 2023, Microsoft "accidentally" revealed in a paper that GPT-3.5-Turbo has 20B parameters, then removed the information from later versions of the paper.

Was it deliberate or accidental?

The paper in question actually introduces a medical benchmark called MEDEC.

It was released on December 26, but as a paper in a fairly specialized field it likely went unnoticed by people outside that area, and sharp-eyed netizens only spotted it after the New Year.

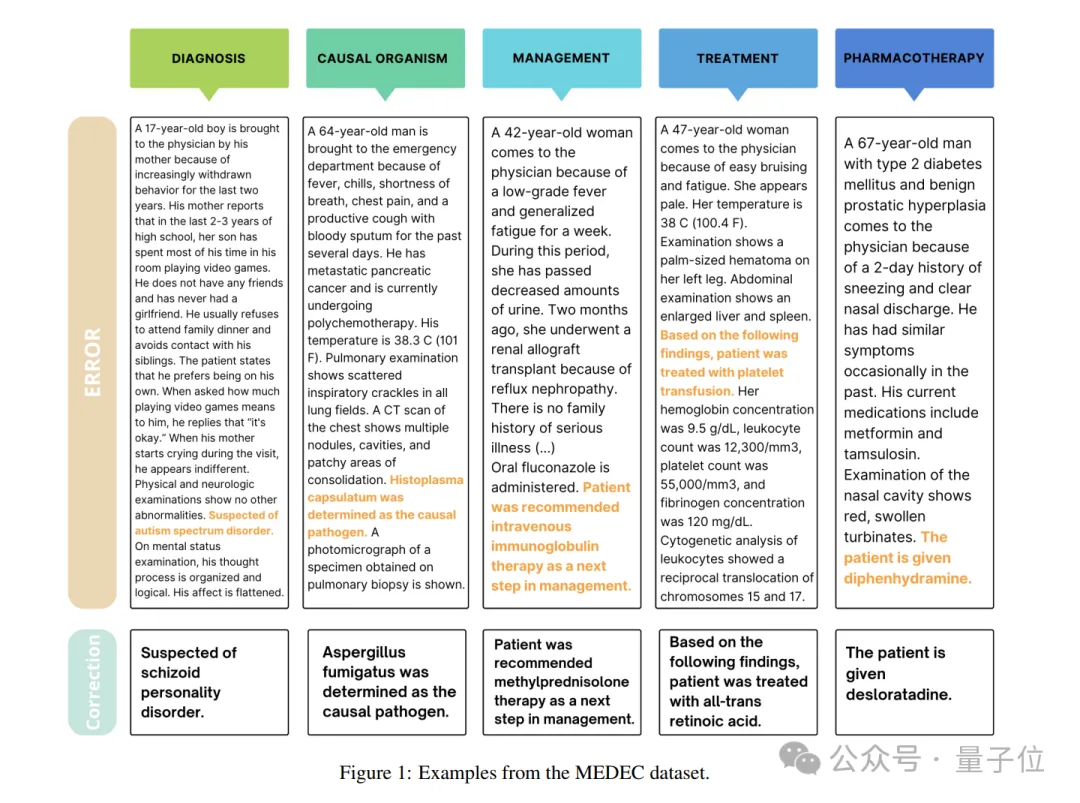

One in five patients who read clinical notes reported finding errors, and 40 percent believed these errors could affect their care, according to a survey of U.S. medical organizations.

Meanwhile, LLMs (large language models) are increasingly used for medical documentation tasks (such as generating treatment plans).

MEDEC therefore sets two tasks: first, detect errors in clinical notes; second, correct them.

For the study, the MEDEC dataset comprises 3,848 clinical texts, including 488 clinical notes from three U.S. hospital systems that no LLM had previously seen.

It covers five types of errors (diagnosis, management, treatment, medication, and causative agents). These types were selected by analyzing the most common question types in medical board exams, and the errors were annotated with the participation of eight medical personnel.

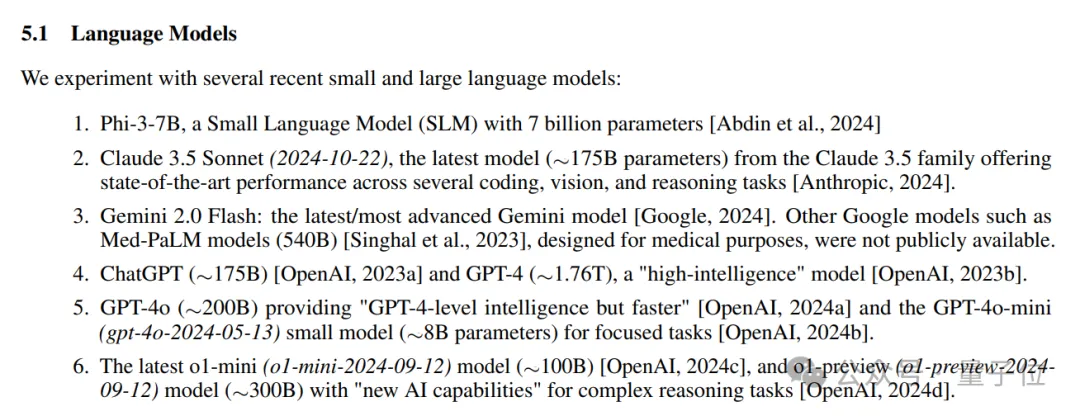

The parameter leak appears in the experiments.

Under the experimental design, the researchers selected recent mainstream large and small models to perform error detection and correction on the notes.

In introducing the models finally selected, the paper discloses their parameter counts and release dates all at once.

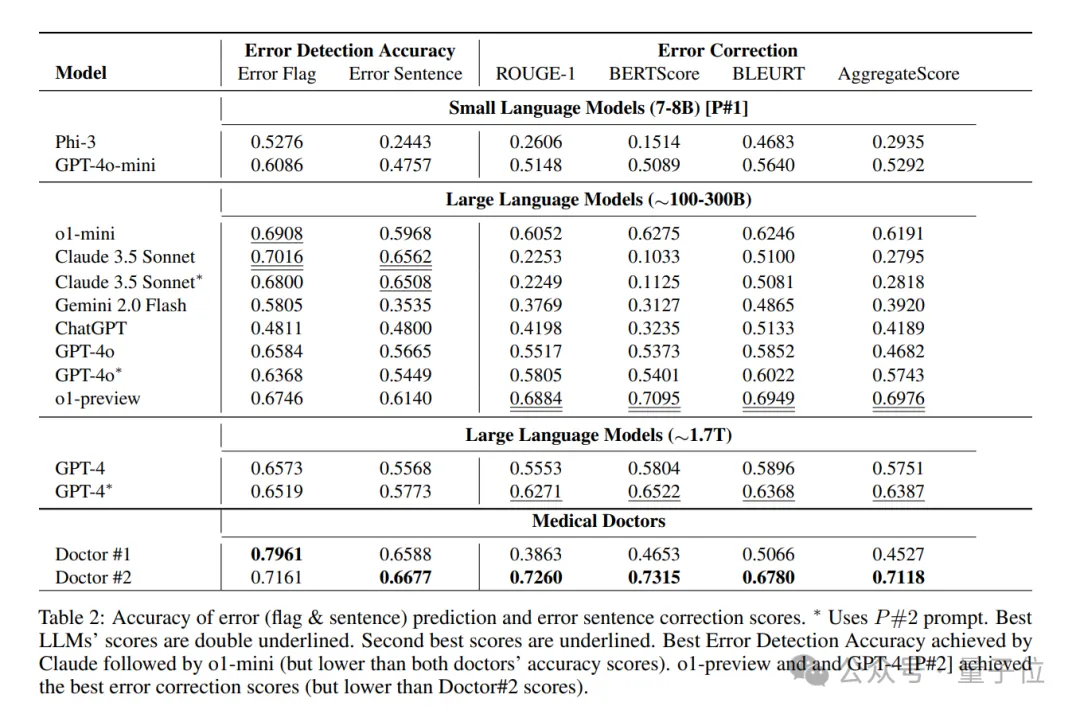

Incidentally, skipping the intermediate details, the study found that Claude 3.5 Sonnet outperformed the other LLM methods in error flag detection with a score of 70.16, with o1-mini in second place.

Every leak of ChatGPT-related model architecture or parameters causes an uproar, and this time is no exception.

In October 2023, when the Microsoft paper claimed that GPT-3.5-Turbo had only 20B parameters, some people remarked: no wonder OpenAI is so nervous about open-source models.

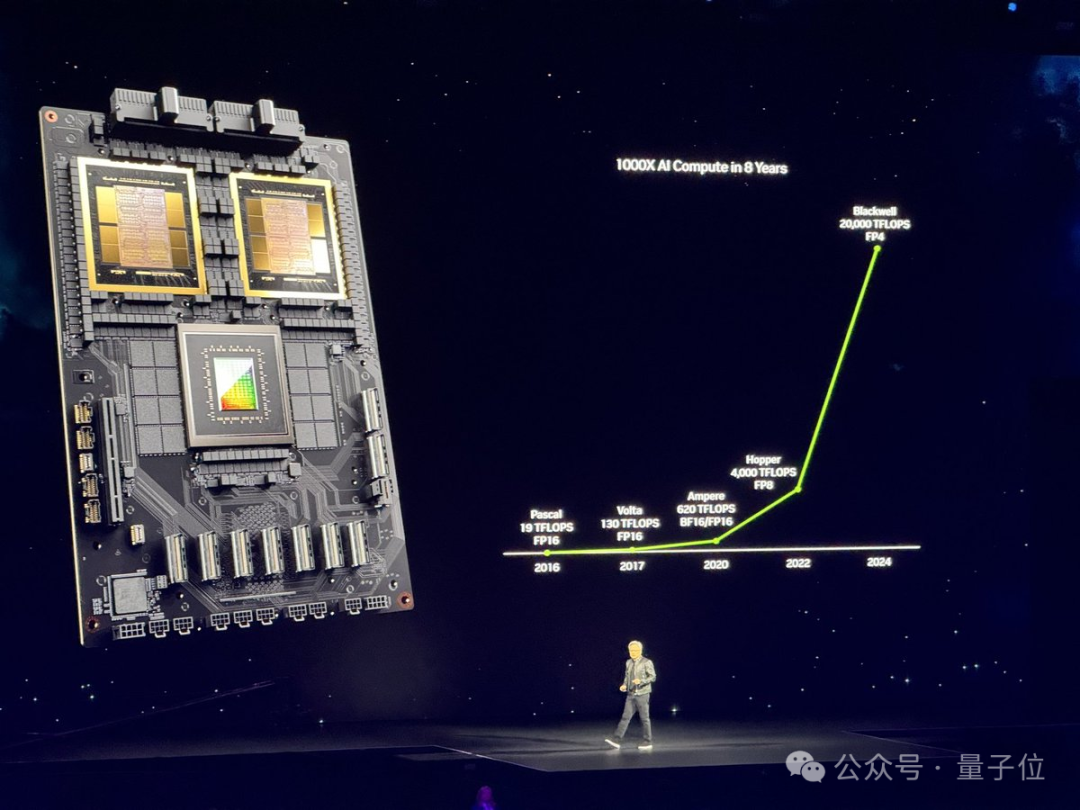

In March 2024, when Nvidia confirmed that GPT-4 was a 1.8T MoE model and that 2,000 B200 GPUs could complete its training in 90 days, the takeaway was that MoE had been, and would remain, the trend in large-model architecture.

This time, netizens have focused on a few points in Microsoft's estimates:

If Claude 3.5 Sonnet really is smaller than GPT-4o, then the Anthropic team has a technological advantage.

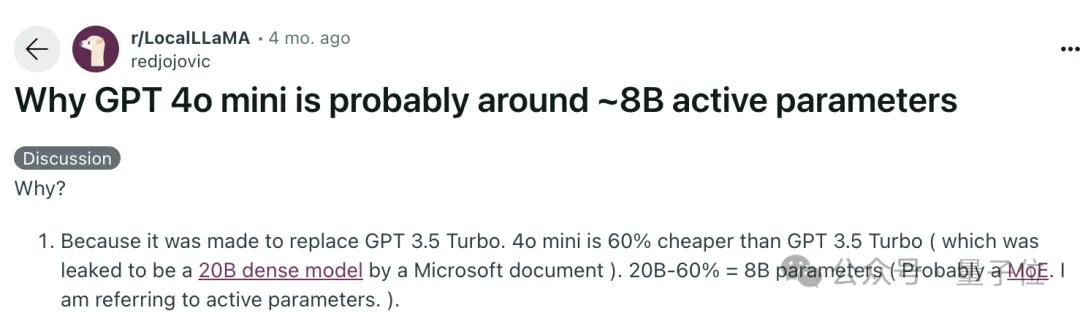

Others find it hard to believe that GPT-4o-mini is as small as 8B.

However, some people had earlier worked it out from inference cost: 4o-mini is priced at 40% of 3.5-turbo, so if the 20B figure for 3.5-turbo is accurate, 4o-mini comes out at almost exactly 8B.

That said, the 8B here would refer to the active parameters of an MoE model.
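
The cost-based estimate above is simple proportional arithmetic, and a minimal Python sketch makes the assumption behind it explicit. The function name `estimate_active_params` is hypothetical, and the premise that API price scales linearly with active parameter count is an assumption of the netizens' argument, not something confirmed by OpenAI or the paper.

```python
def estimate_active_params(reference_params_b: float, price_ratio: float) -> float:
    """Scale a reference model's parameter count (in billions) by a price ratio.

    Assumption: price is proportional to active parameters. This is the
    premise of the back-of-the-envelope argument, not an established fact.
    """
    return reference_params_b * price_ratio


# Figures quoted in the article (both are estimates, not official numbers).
gpt35_turbo_params_b = 20.0  # 20B, from the October 2023 Microsoft paper
price_ratio = 0.40           # 4o-mini priced at 40% of 3.5-turbo

estimate = estimate_active_params(gpt35_turbo_params_b, price_ratio)
print(f"Estimated GPT-4o-mini active parameters: ~{estimate:.0f}B")
```

If either input is off, for instance if pricing reflects margins or hardware changes rather than model size, the 8B result shifts proportionally, which is why the figure should be read as a rough consistency check only.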

Anyway, OpenAI probably won't release the exact numbers.

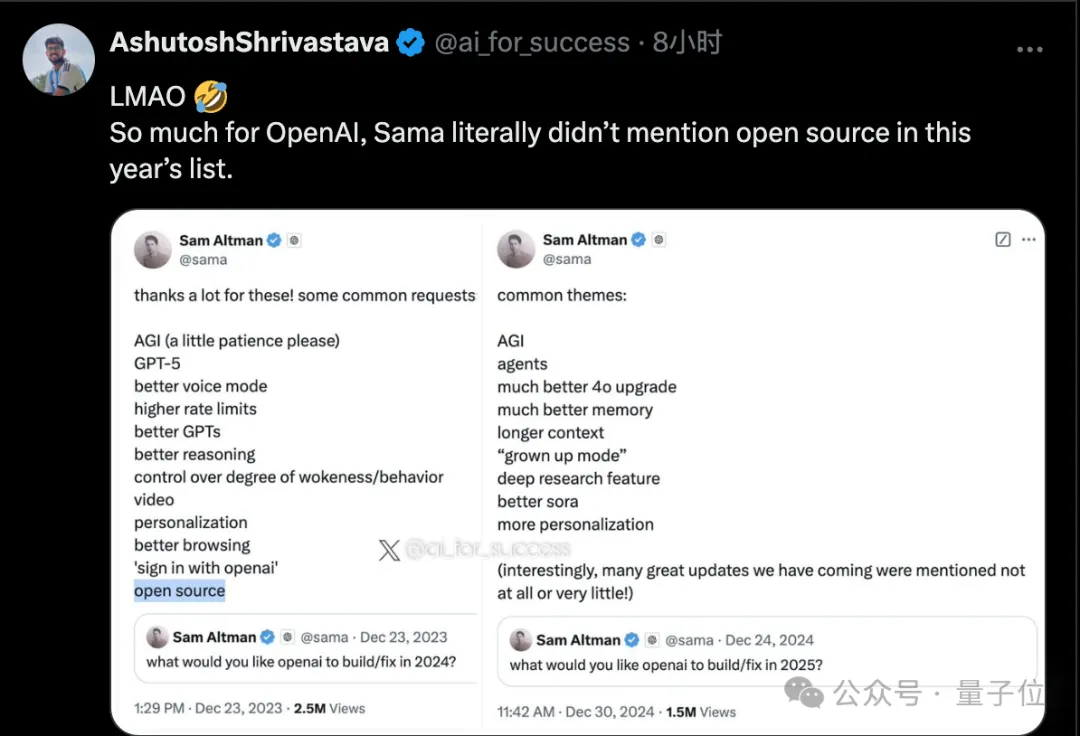

Earlier, when Altman solicited New Year's wishes for 2024, the list he eventually published included "open source." In the latest 2025 version, open source has been removed.

Paper address: https://arxiv.org/pdf/2412.19260

Reference links:

[1] https://x.com/Yuchenj_UW/status/1874507299303379428
[2] https://www.reddit.com/r/LocalLLaMA/comments/1f1vpyt/why_gpt_4o_mini_is_probably_around_8b_active/
