
#News ·2025-01-02

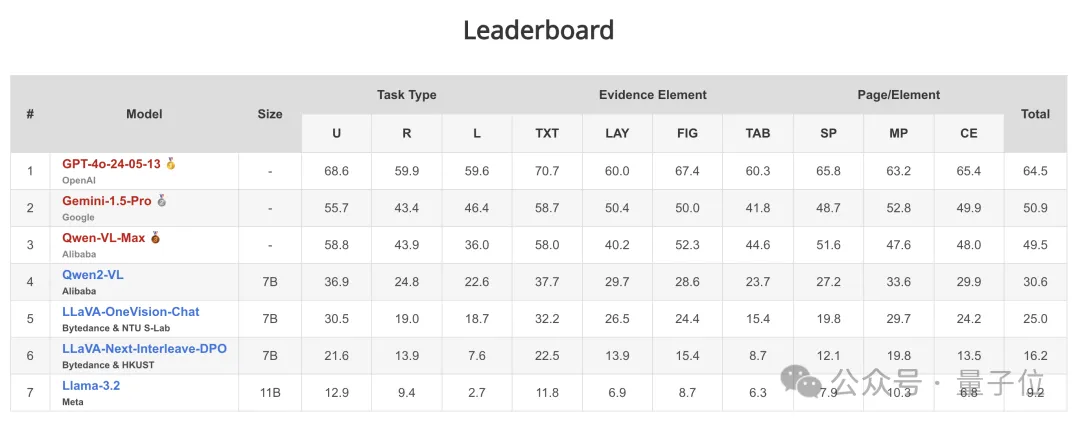

GPT-4o only scored 64.5, and the rest of the models failed!

A comprehensive, fine-grained benchmark for evaluating models' multimodal long-document understanding has been released.

Called LongDocURL, it integrates three main tasks (long document understanding, numerical reasoning, and cross-element locating) and contains 20 fine-grained subtasks.

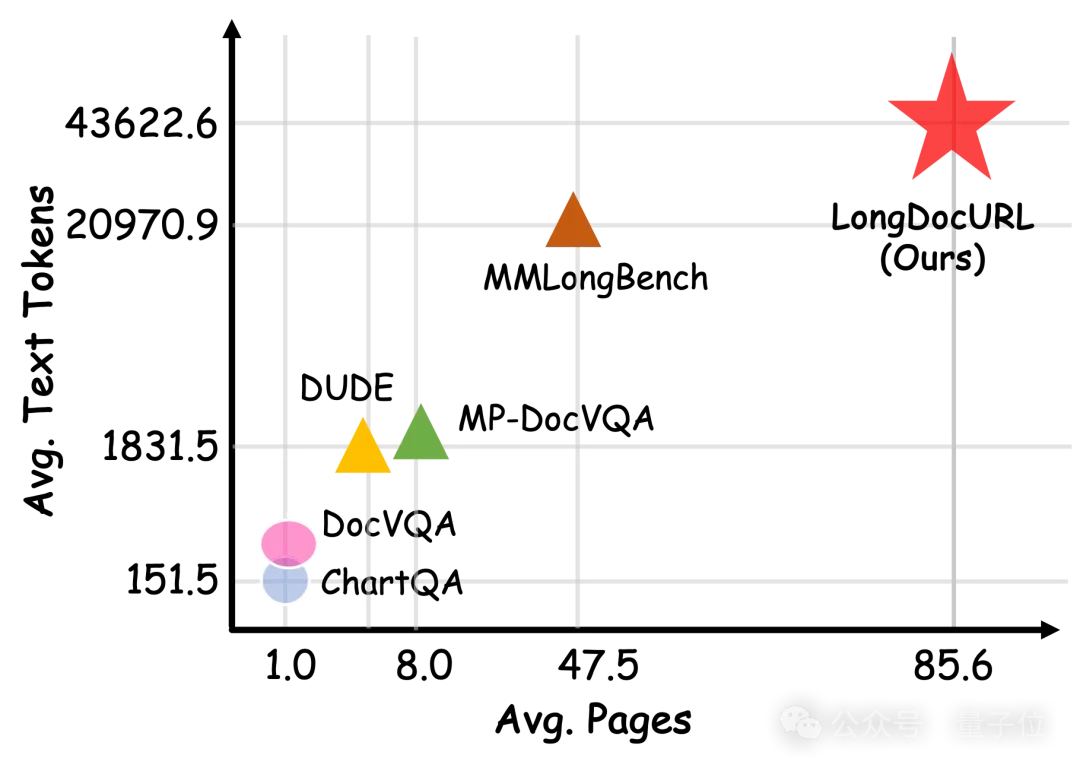

LongDocURL focuses on multimodal, long-context documents of 50 to 150 pages, with an average of 85.6 pages and 43,622.6 text tokens per document.

Data quality is also high: the data went through both automatic model verification and manual verification, the latter involving 21 full-time outsourced annotators and 6 experienced postgraduate students.

△ Figure 1 Comparison of the new benchmark with other datasets in terms of average pages and text tokens per document

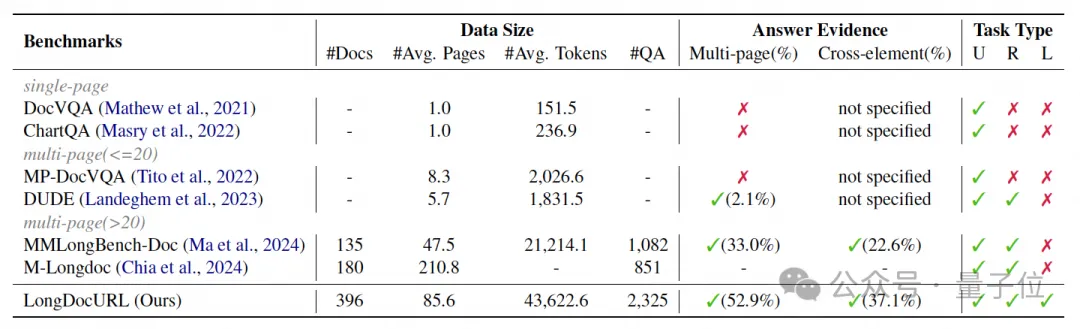

△ Figure 2 Comparison of the new benchmark with other document understanding benchmarks: (U) understanding task, (R) reasoning task, and (L) locating task

This work was completed by Liu Chenglin's research group at the Institute of Automation of the Chinese Academy of Sciences and the Algorithm Technology - Future Life Lab team at Taotian Group.

The team comprehensively evaluated mainstream open-source and closed-source large models, both domestic and international, under 26 configurations covering multimodal input and plain-text input.

At present, GPT-4o ranks first on the benchmark, but it only just clears the passing line, with an accuracy of 64.5.

Large vision-language models (LVLMs) have significantly improved document understanding: they can handle complex document elements, longer contexts, and a wider range of tasks.

However, the research team points out that existing document understanding benchmarks have limitations: they cover only single-page or few-page documents, and they do not provide a comprehensive analysis of a model's ability to locate document layout elements.

So what's new about LongDocURL, and what's so hard about it?

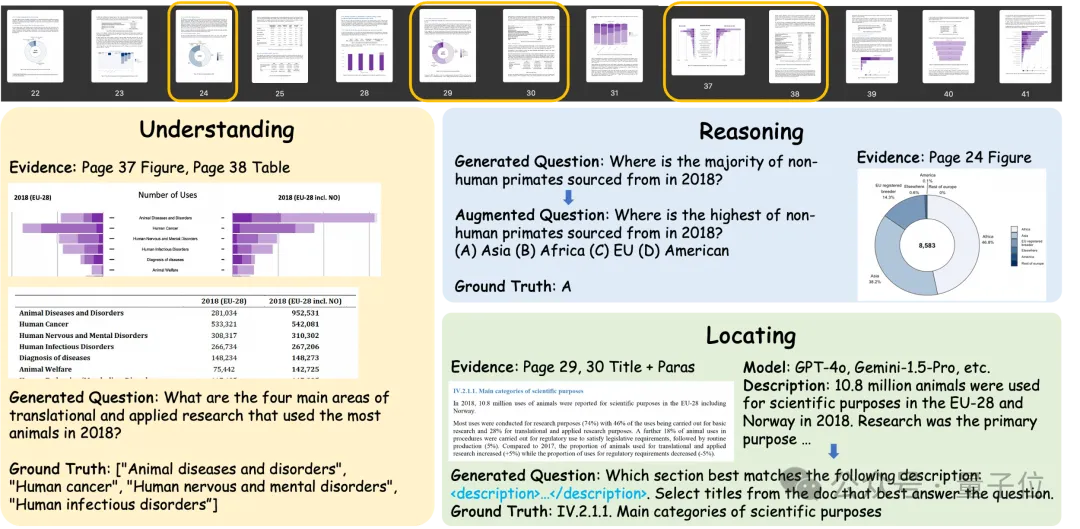

The team first defined three main task categories:

Understanding: extracting information from documents by identifying keywords, parsing table structures, and so on. The answer can be found directly in the document.

For example, in the paragraph-title locating task, the model must summarize the relevant sections to identify the part that matches a given summary, and then determine the relationship between the paragraph and its section title. This task requires switching element types (i.e., from paragraph to title) during the answering process.

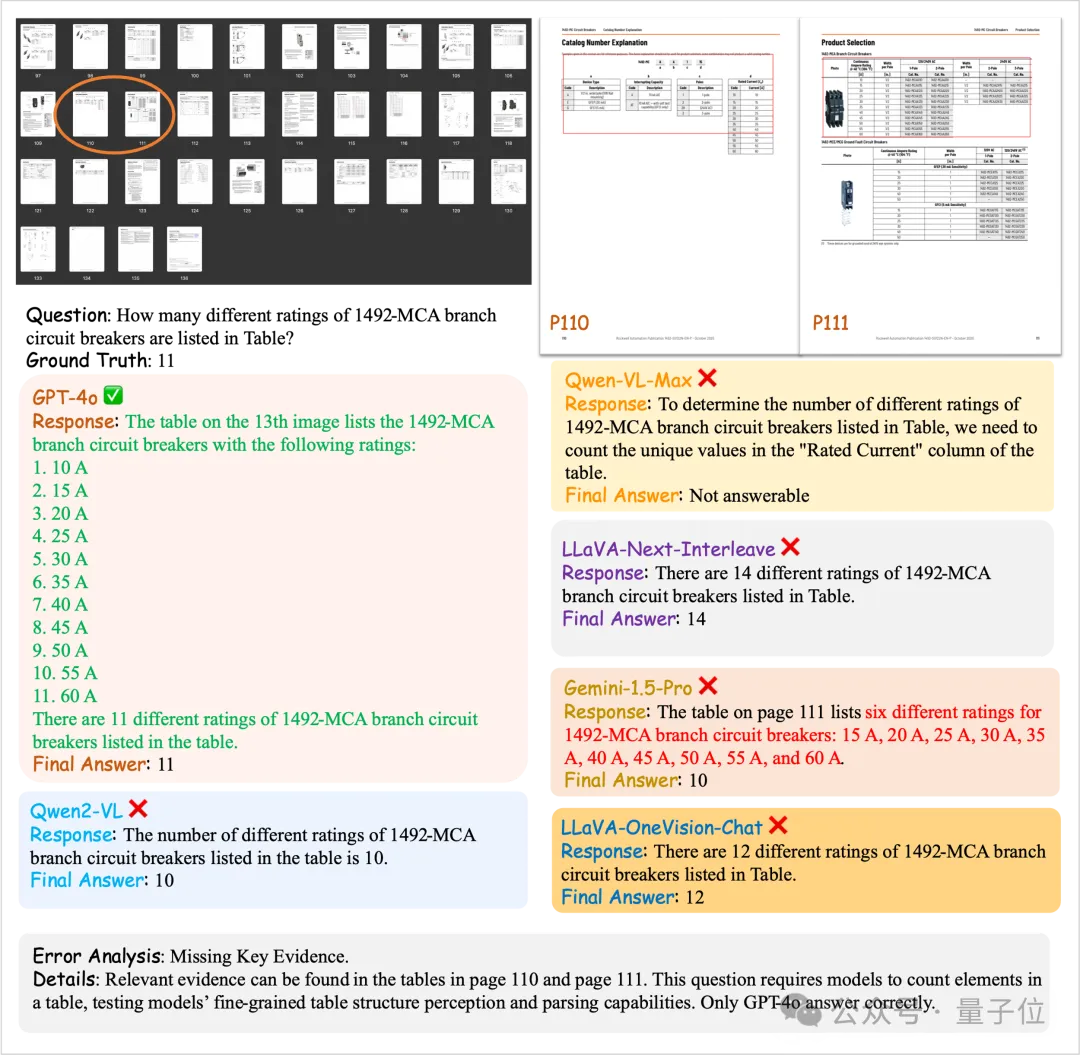

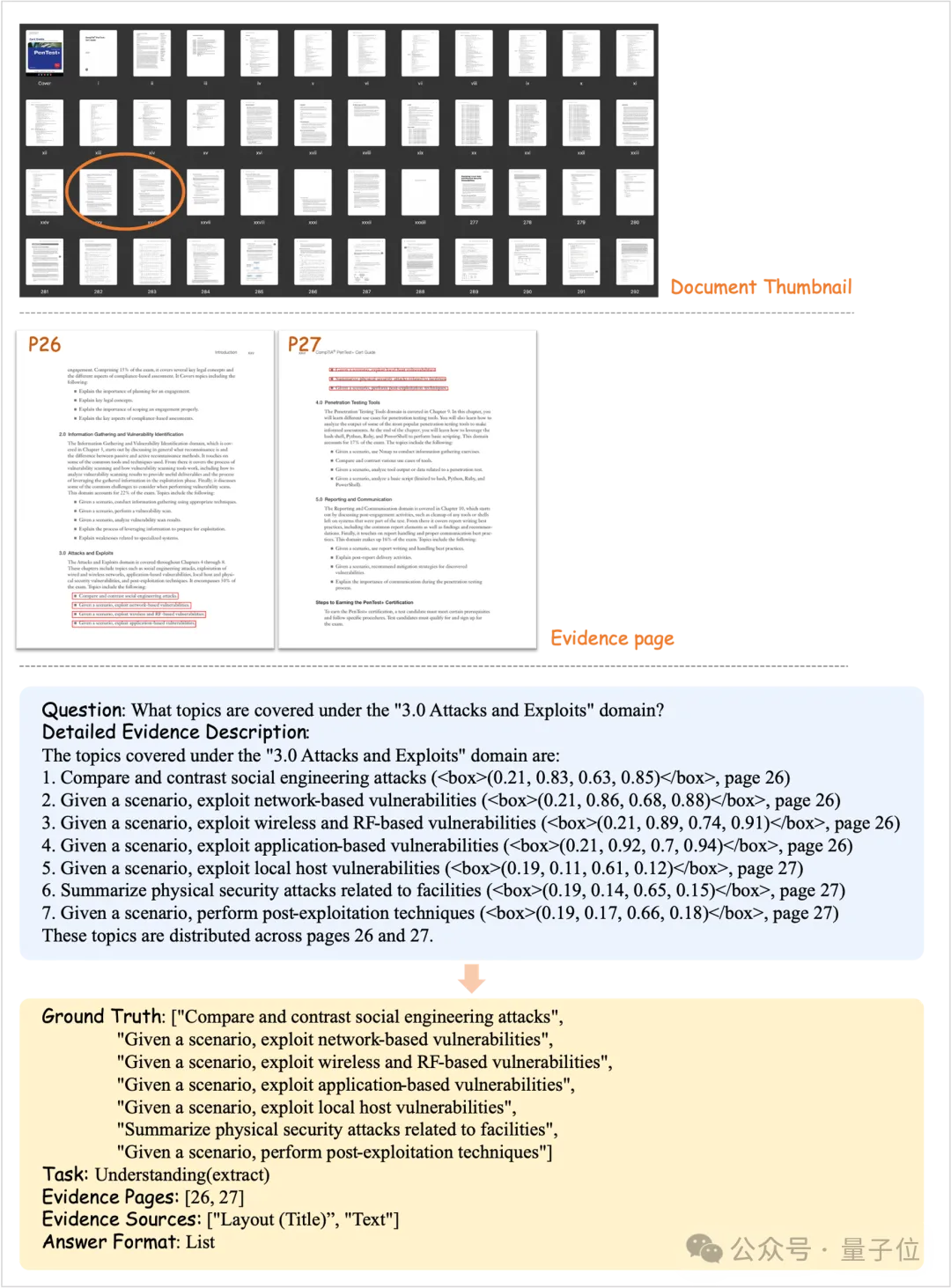

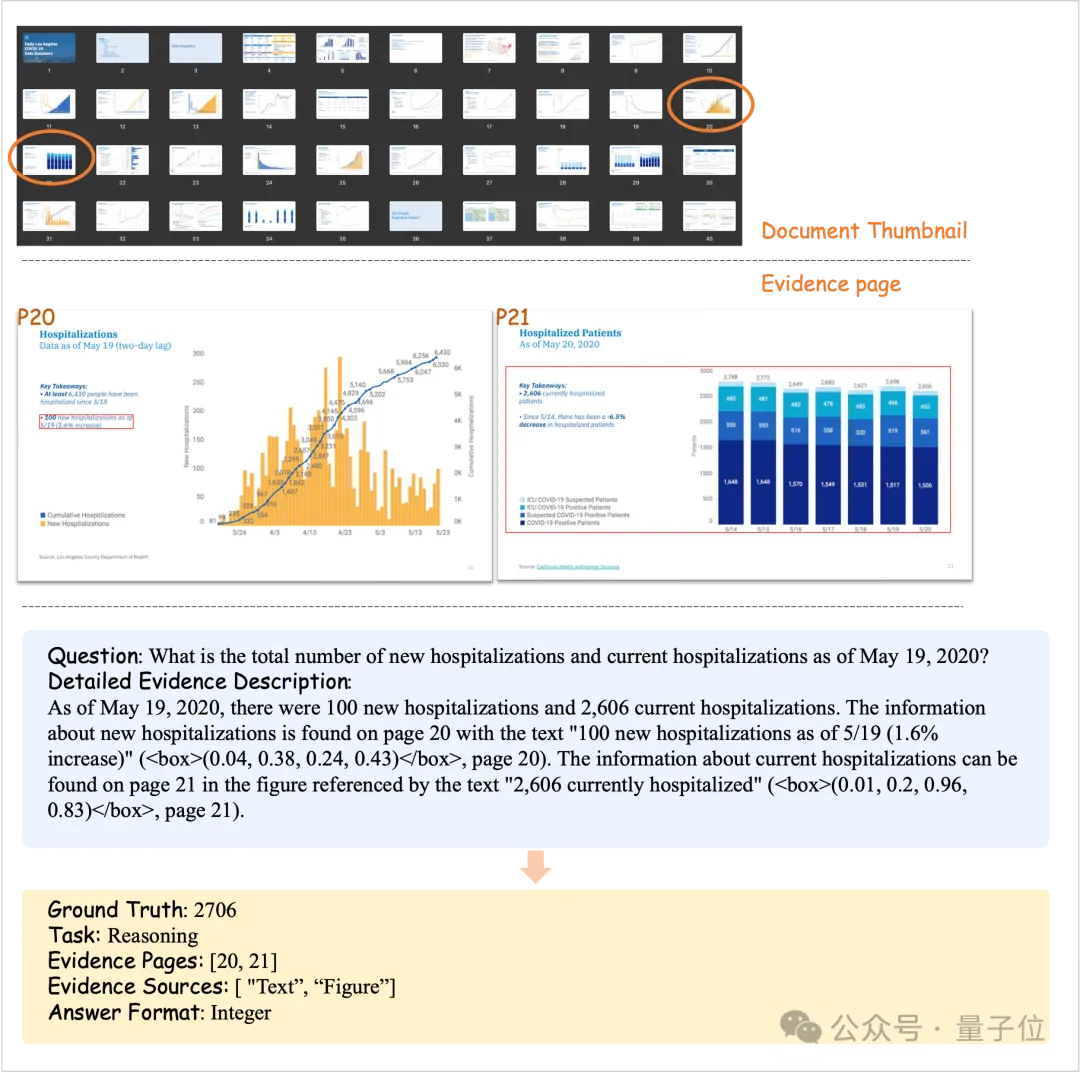

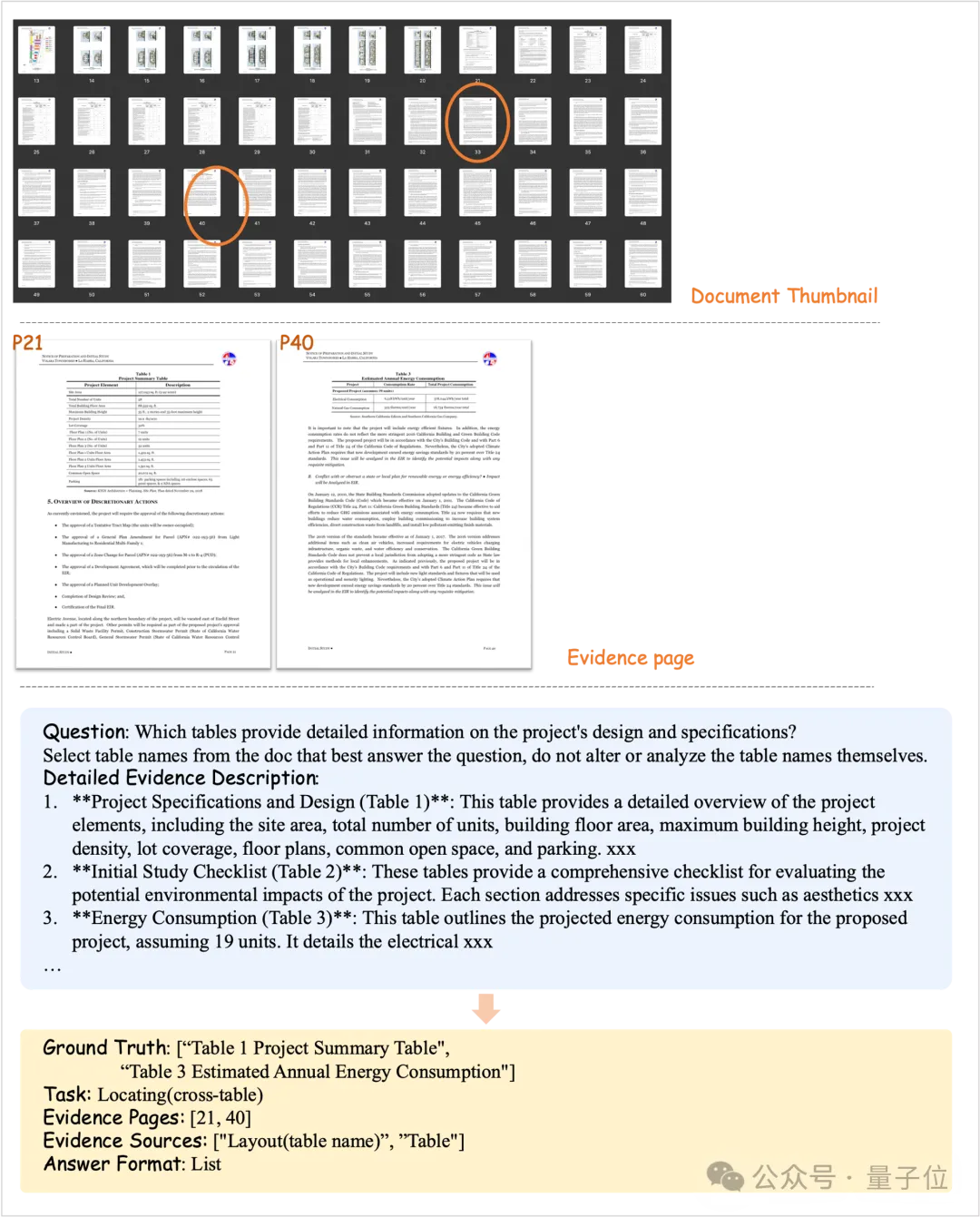

△ Figure 3 Schematic diagram of question and answer pairs for three types of tasks.

(Top) Thumbnail of a sample document. The orange box marks the answer evidence page. (Bottom) Sample data generated from the document and screenshots of the relevant parts of the answer evidence page.

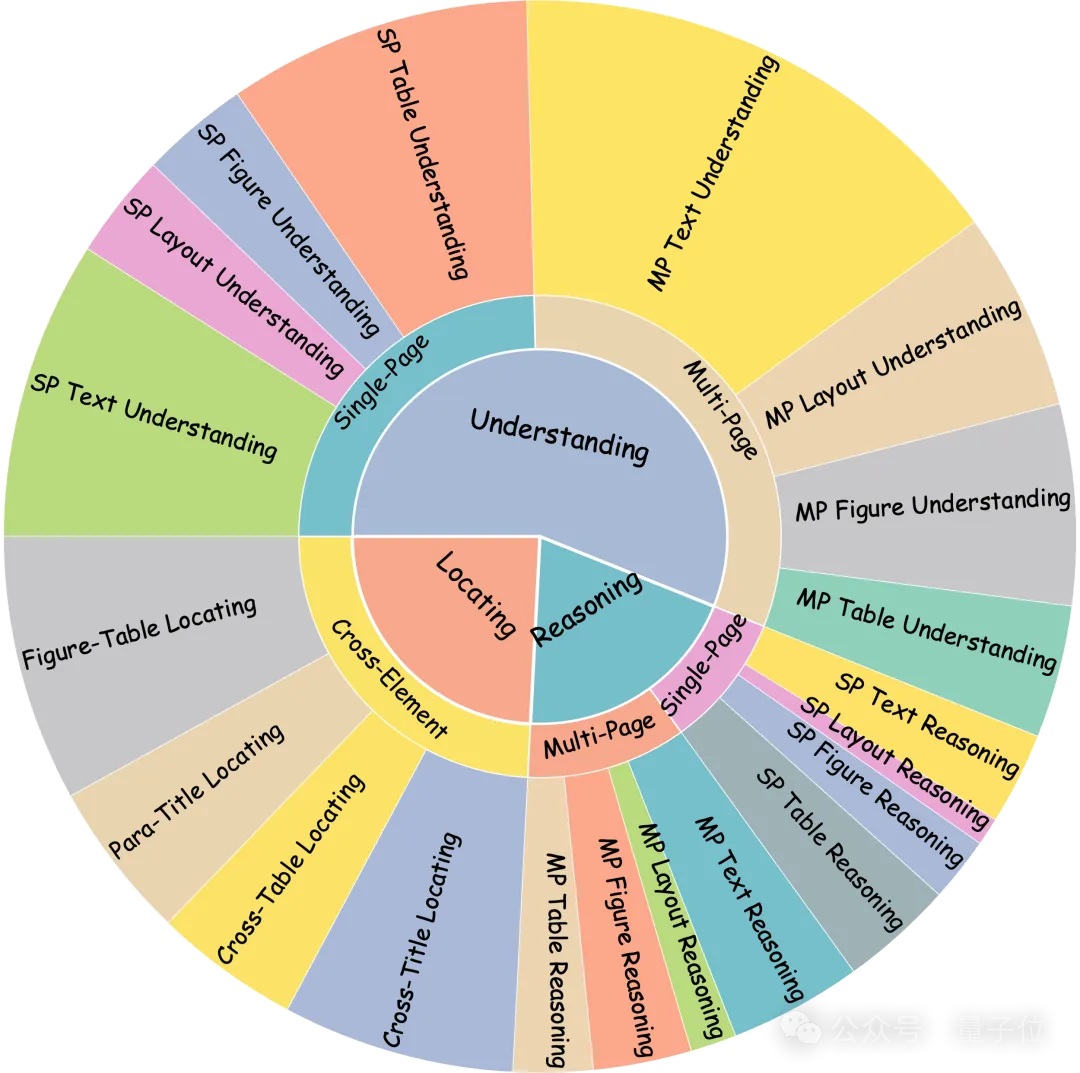

The team further subdivided the dataset into 20 subtasks based on different main task categories and answer evidence.

First, each question-answer pair is categorized by one of the three main tasks: understanding, reasoning, and locating. Next, four types of answer evidence are defined based on element type: text, layout, figure, and table.

In addition, each question-answer pair is classified as single-page or multi-page according to the number of evidence pages, and as single-element or cross-element according to the number of evidence element types.

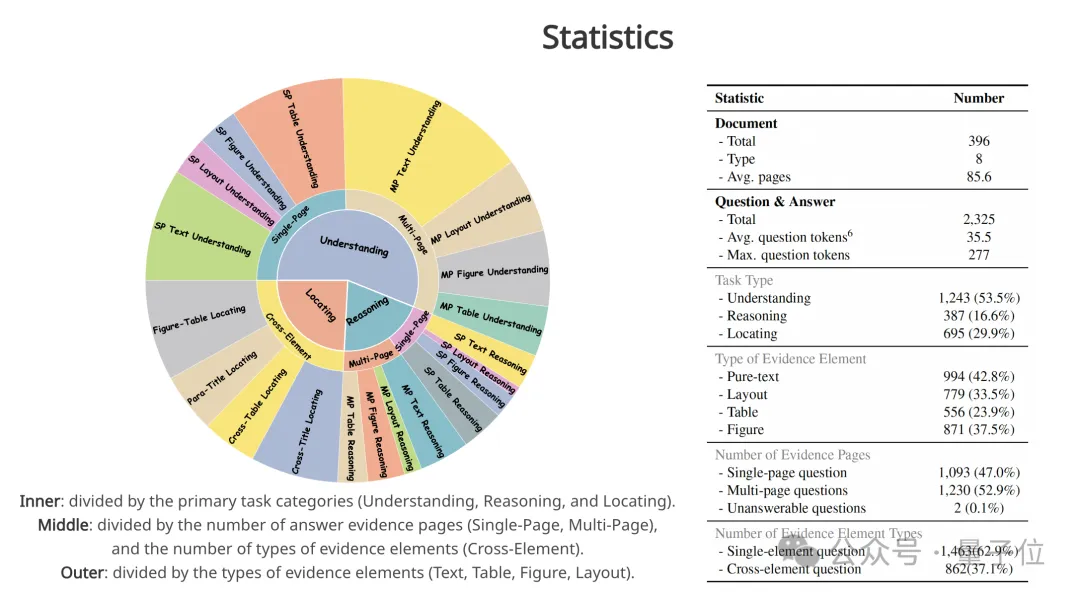

△ Figure 4 Task classification system.

Inner ring: divided by main task category (understanding, reasoning, and locating). Middle ring: divided by the number of answer evidence pages (single-page, multi-page) and the number of evidence element types (cross-element). Outer ring: divided by evidence element type (text, table, figure, layout).
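The classification above can be pictured as a small record type. This is only an illustrative sketch: the class name `QAPair`, its field names, and the sample values are assumptions made here, not the schema of the released dataset.

```python
from dataclasses import dataclass
from typing import FrozenSet, Tuple

# Label vocabularies taken from the article's description of the taxonomy.
TASKS = frozenset({"understanding", "reasoning", "locating"})
ELEMENTS = frozenset({"text", "layout", "figure", "table"})


@dataclass(frozen=True)
class QAPair:
    """Hypothetical record for one question-answer pair (not the real schema)."""
    question: str
    answer: str
    task: str                          # one of TASKS
    evidence_pages: Tuple[int, ...]    # page numbers that hold the evidence
    evidence_elements: FrozenSet[str]  # non-empty subset of ELEMENTS

    def __post_init__(self) -> None:
        if self.task not in TASKS:
            raise ValueError(f"unknown task: {self.task}")
        if not self.evidence_pages:
            raise ValueError("at least one evidence page is required")
        if not self.evidence_elements or not self.evidence_elements <= ELEMENTS:
            raise ValueError("evidence_elements must be a non-empty subset of ELEMENTS")

    @property
    def page_scope(self) -> str:
        # Single-page vs. multi-page follows from the number of distinct evidence pages.
        return "single-page" if len(set(self.evidence_pages)) == 1 else "multi-page"

    @property
    def is_cross_element(self) -> bool:
        # Cross-element means the evidence spans more than one element type.
        return len(self.evidence_elements) > 1


# Made-up example values, for illustration only.
qa = QAPair(
    question="Which section title matches the given summary?",
    answer="3.2 Results",
    task="locating",
    evidence_pages=(12, 13),
    evidence_elements=frozenset({"text", "layout"}),
)
```

Deriving the page scope and the cross-element flag from the evidence fields, rather than storing them separately, keeps the labels from contradicting each other.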

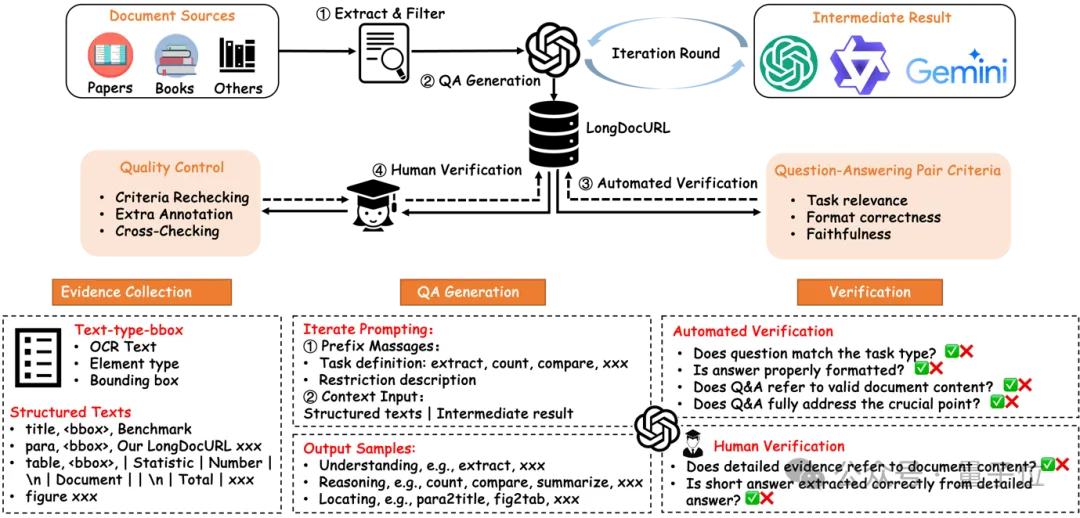

To build LongDocURL's evaluation dataset efficiently and cost-effectively, the team designed a semi-automated process consisting of four modules.

△ Figure 5 Overview of the build process.

The process consists of four modules: (a) extraction and filtering; (b) QA generation; (c) automatic verification; (d) manual verification

First, the Extract & Filter module selects documents with rich layouts and suitable lengths from different document sources, and uses the Docmind tool to obtain a symbol sequence of "text-type-bbox" triples.

Second, the QA Generation module takes the triple sequences and, through multi-step iterative prompting of a strong model (such as GPT-4o), generates question-answer pairs with evidence sources.

Finally, the Automated Verification module and Human Verification module ensure the quality of the question and answer pairs.

Through this semi-automated process, the team ended up generating 2,325 question-answer pairs, covering more than 33,000 pages of documents.
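To make the "text-type-bbox" sequence from the first step more concrete, here is a minimal sketch of how such triples might be held in memory. The article does not show Docmind's actual output format, so the `Element` type, the type labels, the `(x0, y0, x1, y1)` box convention, and all sample values are assumptions.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple, Tuple


class Element(NamedTuple):
    """One hypothetical text-type-bbox triple for a parsed layout element."""
    text: str                                # the element's text content
    type: str                                # e.g. "title", "paragraph", "table" (assumed labels)
    bbox: Tuple[float, float, float, float]  # assumed (x0, y0, x1, y1) page coordinates


def group_by_type(elements: List[Element]) -> Dict[str, List[Element]]:
    """Group a page's elements by type, keeping reading order within each group."""
    groups: Dict[str, List[Element]] = defaultdict(list)
    for element in elements:
        groups[element.type].append(element)
    return dict(groups)


# Made-up page content, for illustration only.
page = [
    Element("1 Introduction", "title", (72.0, 80.0, 300.0, 100.0)),
    Element("Long documents are hard to read.", "paragraph", (72.0, 110.0, 520.0, 160.0)),
    Element("| Model | Score |", "table", (72.0, 180.0, 520.0, 260.0)),
]
by_type = group_by_type(page)
```

Keeping the element type next to the text is what lets a question generator target a specific kind of evidence, for example tables only.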

△ Figure 6 Normalized accuracy scores (0~1).

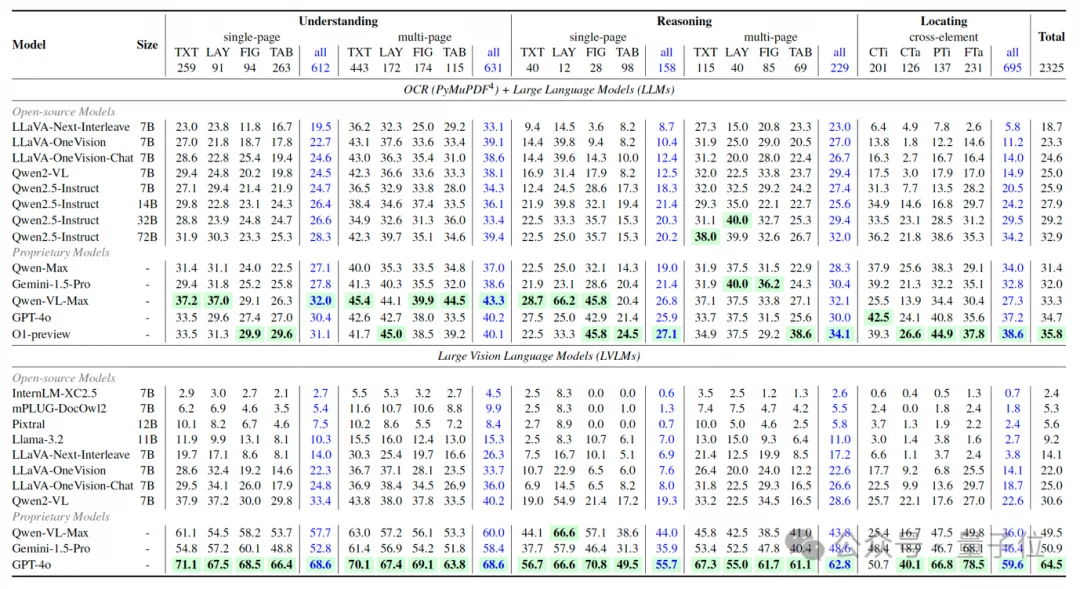

There are three task types: understanding (U), reasoning (R), and locating (L). There are four evidence element types: plain text (TXT), layout (LAY), figures and images (FIG), and tables (TAB). There are three evidence page/element types: single-page (SP), multi-page (MP), and cross-element (CE). CTi: Cross-Title, CTa: Cross-Table, PTi: Para-Title, FTa: Figure-Table. The highest-scoring models are highlighted in green.
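A per-category breakdown like this one can be produced by averaging per-question scores over every label a question carries. The sketch below assumes each question already has a score between 0 and 1; the article does not describe how individual answers are scored, and the function name and sample data are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple


def category_scores(results: Iterable[Tuple[Set[str], float]]) -> Dict[str, float]:
    """Average per-question scores (each in [0, 1]) for every category label.

    Each result is (labels, score), where labels holds the category codes the
    question belongs to, e.g. {"U", "TXT", "SP"}.
    """
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for labels, score in results:
        for label in labels:
            totals[label] += score
            counts[label] += 1
    return {label: totals[label] / counts[label] for label in totals}


# Made-up results, for illustration only.
results = [
    ({"U", "TXT", "SP"}, 1.0),
    ({"R", "TAB", "MP"}, 0.0),
    ({"U", "TAB", "SP"}, 1.0),
]
scores = category_scores(results)
```

Because a question counts toward several labels at once, the category averages overlap and do not sum to the overall score.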

Regarding LVLMs, the team reached the following conclusions:

(1) The highest-scoring model: only GPT-4o passed with a score of 64.5, indicating that LongDocURL is a challenge to current models.

(2) Comparison of open-source and closed-source models: Closed-source models show better overall performance than open-source models. Among open-source models, only Qwen2-VL (score 30.6) and LLaVA-OneVision (scores 22.0 and 25.0) scored above 20, while all other models with fewer than 13B parameters fell below this threshold.

To compare model performance with text input and image input, the team added o1-preview and the Qwen2.5 series.

The results showed that the overall scores of LLMs were significantly lower than those of LVLMs, with the highest LLM score trailing the highest LVLM score by about 30 points.

The team attributes this gap mainly to the loss of important document structure information when documents are parsed to plain text with PyMuPDF. Because the dataset contains a large number of question-answer pairs involving tables and figures, this loss of structural information hinders an LLM's ability to extract critical evidence. These results highlight the importance of LongDocURL as a benchmark for evaluating the document structure parsing capabilities of LVLMs.

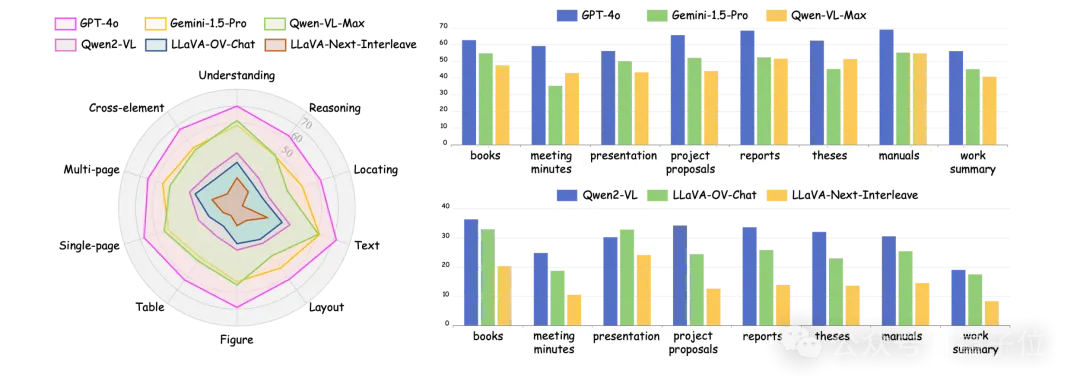

The team selected three closed source and open source models and performed a more granular analysis of the experimental results based on document sources, task categories, document elements, and evidence pages.

Task Type:

Proprietary LVLMs perform comparably on reasoning and locating tasks, but converting images to text hurts reasoning more. For example, switching to text input reduced GPT-4o's reasoning score by 31.6 points, while its locating score dropped by 22.4 points.

Strong models perform evenly across reasoning and locating, while weaker models perform poorly on locating, suggesting that their training has focused on understanding and reasoning skills rather than on the spatial and logical relationships that locating tasks require.

Document elements:

Models score highest on text questions and lowest on table questions, which highlights shortcomings in document structure parsing. Figure and layout questions receive similar scores. Scores for cross-element tasks fall between those for single-page and multi-page QA and track the overall score closely.

Single and multi-page:

Single-page QA accuracy is lower than multi-page QA accuracy. This suggests that the answers to some questions can be gathered from several pages, which reduces the difficulty. However, models such as GPT-4o and Qwen-VL-Max show the opposite pattern, with lower accuracy on multi-page QA: their lower scores on locating tasks in multi-page QA pull down their overall multi-page performance.

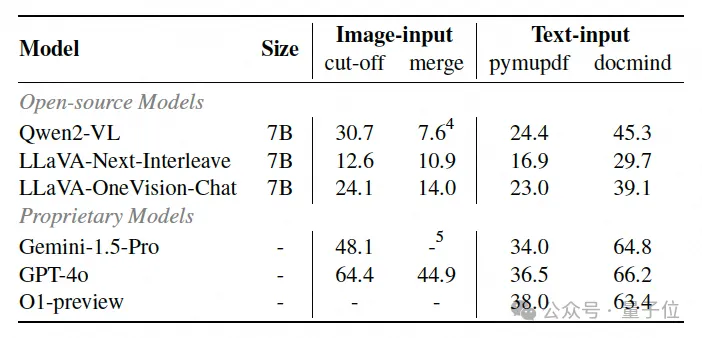

To explore the optimal input format for long document question answering, the team conducted ablation experiments in two image input paradigms and two text input paradigms.

Image input paradigms include: (1) cut-off (truncation), the configuration used in the main experiment, and (2) merge, which combines the document's page images (50 to 150 in the original) into 20 to 30 new images.
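As a rough illustration of the merge paradigm's page budget, the sketch below splits page indices into contiguous groups, one group per merged image. The article does not say how pages are grouped or tiled within a merged image, so the contiguous grouping, the function name `merge_groups`, and the cap of 30 images are assumptions.

```python
import math
from typing import List


def merge_groups(num_pages: int, max_images: int = 30) -> List[List[int]]:
    """Split page indices 0..num_pages-1 into at most max_images contiguous groups.

    Each group stands for the pages that would be combined into one merged image.
    """
    if num_pages <= 0 or max_images <= 0:
        raise ValueError("num_pages and max_images must be positive")
    # Smallest pages-per-image count that keeps the number of images within the cap.
    per_image = math.ceil(num_pages / max_images)
    return [
        list(range(start, min(start + per_image, num_pages)))
        for start in range(0, num_pages, per_image)
    ]


groups = merge_groups(150)  # a 150-page document, 5 pages per merged image
```

Under these assumptions, every document length from 50 to 150 pages yields between 21 and 30 merged images, which is in line with the 20 to 30 images the article mentions.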

The team noted that table structure information is significantly degraded when parsed by PyMuPDF, while the markdown-format table text parsed by Docmind retains greater structural integrity. To assess the impact of this loss of structural information on model performance, the team ran experiments on two text input types: text parsed by Docmind and text parsed by PyMuPDF.
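The difference between the two text inputs can be illustrated with a toy table. The sketch below renders the same rows once as a markdown table and once as flattened text with one cell per line. The flattened form is a simplified stand-in for structure-unaware extraction, not the actual output of PyMuPDF or Docmind, and both helper functions are hypothetical.

```python
from typing import List


def to_markdown(rows: List[List[str]]) -> str:
    """Render rows (header first) as a markdown table, keeping row and column structure."""
    header, *body = rows
    lines = [
        "| " + " | ".join(header) + " |",
        "| " + " | ".join("---" for _ in header) + " |",
    ]
    lines += ["| " + " | ".join(row) + " |" for row in body]
    return "\n".join(lines)


def to_flat_text(rows: List[List[str]]) -> str:
    """Emit one cell per line, so row and column boundaries are no longer marked."""
    return "\n".join(cell for row in rows for cell in row)


# Scores quoted in the article, reused here as sample table content.
rows = [["Model", "Score"], ["GPT-4o", "64.5"], ["Qwen2-VL", "30.6"]]
markdown_table = to_markdown(rows)
flat_text = to_flat_text(rows)
```

In the flattened version a reader has to guess which number belongs to which model, which is the kind of ambiguity that makes table questions harder for a text-only model.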

△ Figure 8 Ablation experiment with input method

Text input vs. image input: The score under the cut-off paradigm is higher than under the text-input-pymupdf paradigm but lower than under the text-input-docmind paradigm, indicating that models can extract table structure information from images effectively, though with room for further improvement.

Cut-off vs. merge: The merge method preserves more context tokens by concatenating multiple images, while the cut-off method gains prior information by shortening the context window. The results suggest that cut-off may yield better problem-solving capability than merging, which offers insights for building multimodal retrieval-augmented generation (RAG) systems in the future.

Effect of structural information: For proprietary models, performance with Docmind is at least 25 points higher than with PyMuPDF, while the gap for open-source models is 15 points. The lack of table structure information can seriously hinder both open-source and proprietary models.

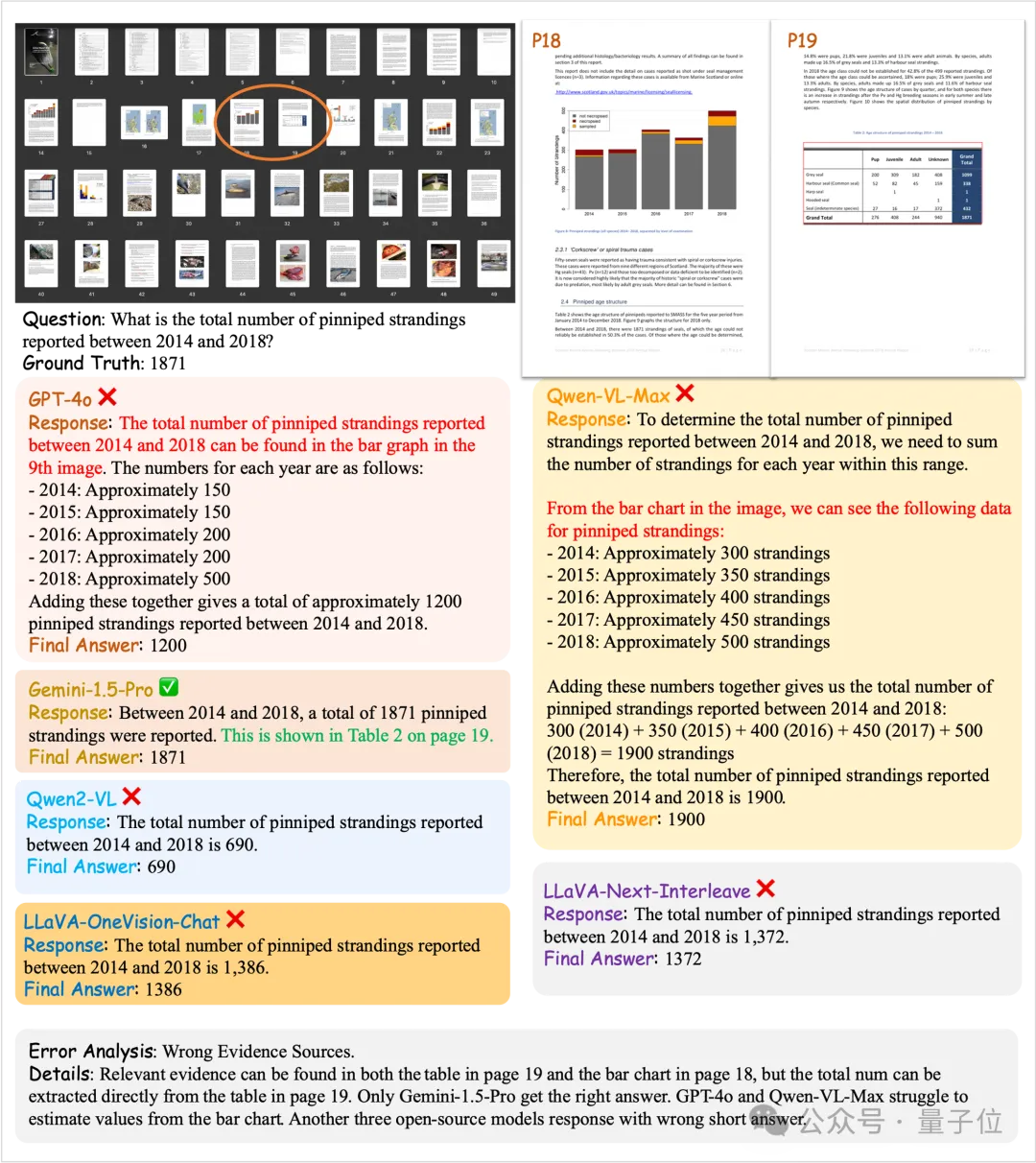

(a) False sources of evidence

△ Figure 9 Example 1

(b) Missing sources of evidence

△ Figure 10 Example 2

Understanding

△ Figure 11 Understanding QA example

Reasoning

△ Figure 12 Reasoning QA example

Locating

△ Figure 13 Locating QA example

Paper link: https://arxiv.org/abs/2412.18424

Project home page: https://longdocurl.github.io/

Dataset: https://huggingface.co/datasets/dengchao/LongDocURL
