#News ·2025-01-02

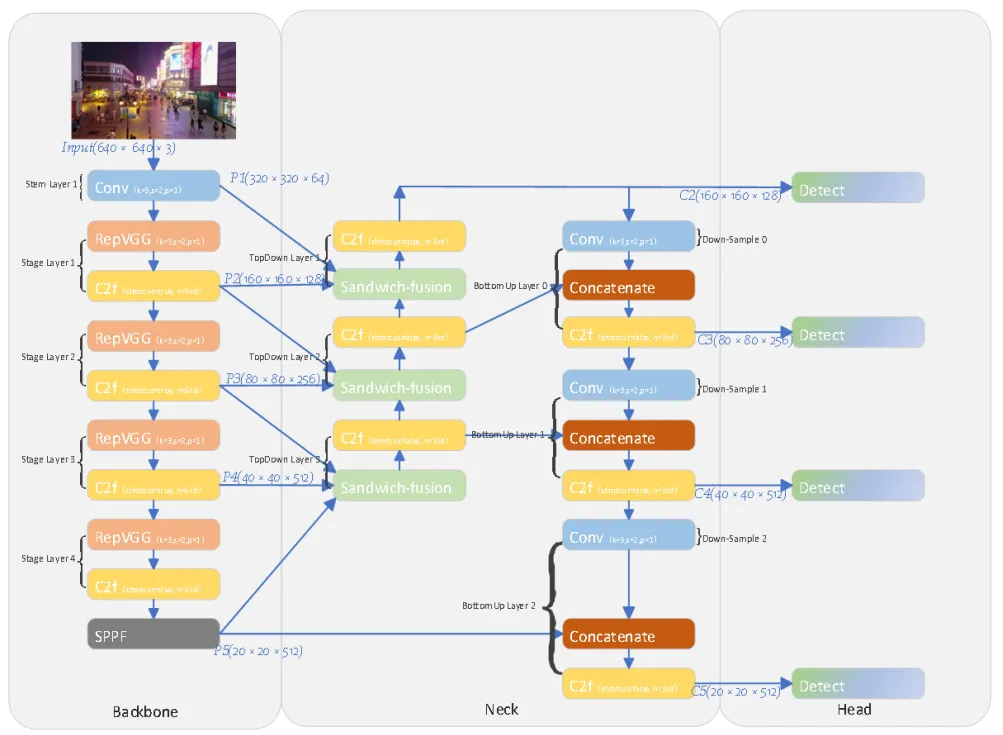

In today's share, we present Drone-YOLO, a series of multi-scale object detection algorithms for drone images based on the YOLOv8 model, designed to overcome the specific challenges of detecting objects in drone imagery. To address the problem of large scenes containing small objects, we improved the neck component of the YOLOv8 model. Specifically, we employ a three-layer PAFPN structure combined with a detection head tailored to small objects that uses large-scale feature maps, significantly enhancing the algorithm's ability to detect small objects. In addition, we integrate sandwich-fusion modules into each layer of the top-down branch of the neck. This fusion mechanism combines network features with low-level features to provide rich spatial information about objects to the detection heads at different layers. We achieve this fusion using depthwise separable convolution, which balances parameter cost against a large receptive field. In the network backbone, we use the RepVGG module as the downsampling layer, which enhances the network's ability to learn multi-scale features and outperforms a traditional convolutional layer.
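To make the parameter-cost argument concrete, the following minimal sketch compares the weight count of a standard convolution with that of a depthwise separable convolution (a depthwise k×k convolution followed by a 1×1 pointwise convolution). The channel counts and kernel size are hypothetical values chosen for illustration; they are not taken from the Drone-YOLO configuration.

```python
def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a standard k x k convolution (bias omitted)."""
    return k * k * c_in * c_out


def depthwise_separable_params(c_in: int, c_out: int, k: int) -> int:
    """Weights in a depthwise k x k convolution plus a 1 x 1 pointwise convolution."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 convolution that mixes channels
    return depthwise + pointwise


# Hypothetical layer sizes, for illustration only.
c_in, c_out, k = 256, 256, 3
standard = standard_conv_params(c_in, c_out, k)
separable = depthwise_separable_params(c_in, c_out, k)
print(f"standard: {standard}, separable: {separable}")
```

Because the depthwise term grows with k² but not with the number of output channels, the kernel can be enlarged for a wider receptive field at a small extra cost, which is the trade-off the paragraph above refers to.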

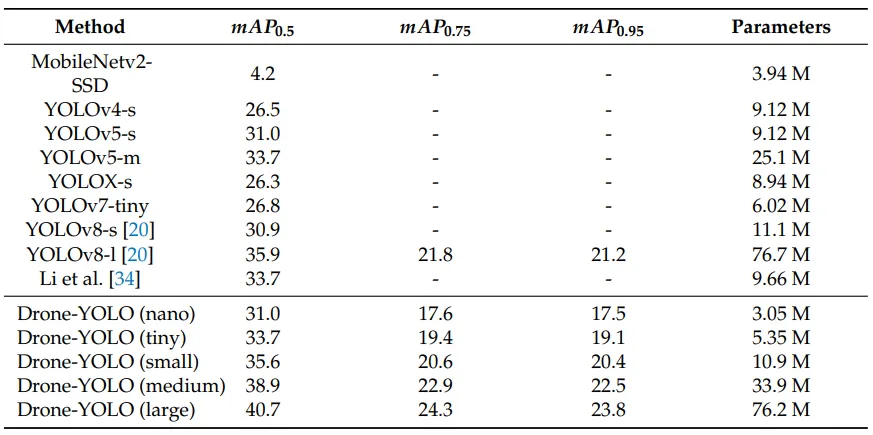

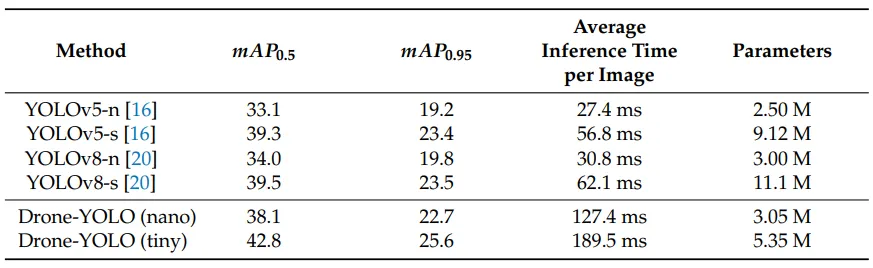

The proposed Drone-YOLO method was evaluated in ablation experiments and compared with other state-of-the-art methods on the VisDrone2019 dataset. The results show that our Drone-YOLO (L) outperforms the other baseline methods in object detection accuracy. Compared with YOLOv8, our approach achieves a significant improvement in the mAP0.5 metric: a 13.4% increase on VisDrone2019-test and a 17.40% increase on VisDrone2019-val. In addition, the parameter-efficient Drone-YOLO (tiny), with only 5.25M parameters, performs as well as or better on this dataset than the baseline method with 9.66M parameters. These experiments validate the effectiveness of the Drone-YOLO method for object detection in UAV images.

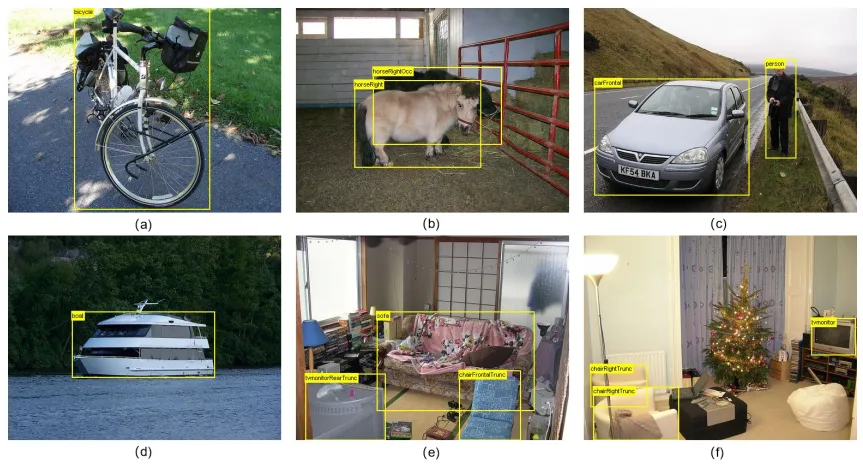

In the past 15 years, as UAV control technology has matured, UAV remote sensing imagery has become an important data source for low-altitude remote sensing research because it is cost-effective and easy to acquire. Over the same period, deep neural network methods have been studied extensively and have gradually become the leading approach for tasks such as image classification, object detection, and image segmentation. However, most deep neural network models in current use, such as VGG, ResNet, U-Net, and PSPNet, were developed and validated mainly on manually collected image datasets such as VOC2007, VOC2012, and MS-COCO, as shown in the figure below.

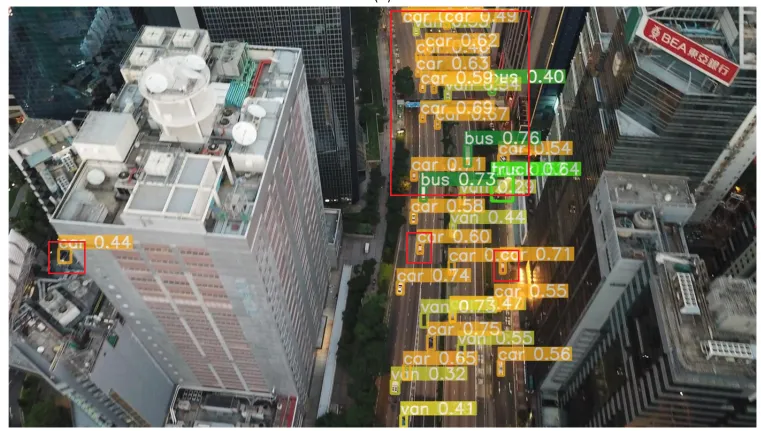

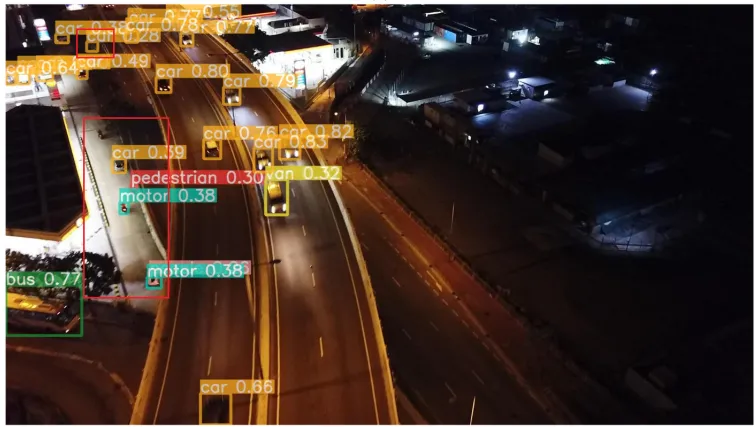

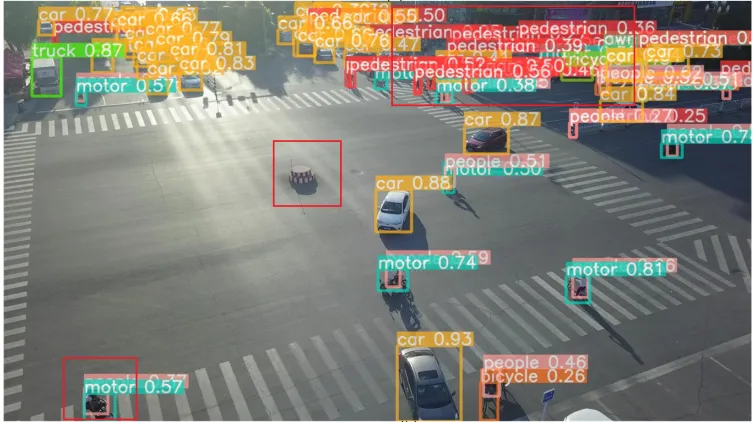

Images captured by drones differ significantly from ground-level images taken by people. The characteristics of drone-captured images are as follows:

In addition to these image characteristics, there are two common application scenarios for UAV remote sensing object detection. The first is post-flight data processing: after the drone has flown, the captured data is processed on a large desktop computer. The second is real-time processing during flight, where the embedded computer on the drone processes the aerial image data synchronously as it is captured. This scenario is commonly used for obstacle avoidance and automated mission planning during flight. Object detection methods based on neural networks therefore need to meet the different requirements of each scenario. Methods intended for desktop environments need high detection accuracy. Methods intended for embedded environments need to keep the number of model parameters within a range that the embedded hardware can run; once that condition is satisfied, detection accuracy should be as high as possible.

Neural network methods for object detection in UAV remote sensing images therefore need to adapt to the specific characteristics of this data. They should either be designed for post-flight data processing, delivering results with high precision and recall, or be designed as models with fewer parameters that can be deployed on embedded hardware for real-time processing on the drone.

The following diagram shows the architecture of our proposed Drone-YOLO (L) network model. The network structure is an improvement on the YOLOv8-l model. In the backbone, we use the re-parameterized convolution module of the RepVGG structure as the downsampling layer. During training, this structure trains a 3×3 convolution and a 1×1 convolution in parallel. During inference, the two convolution kernels are merged into a single 3×3 convolution layer. This mechanism enables the network to learn more robust features without slowing inference or increasing the size of the model. In the neck, we extended the PAFPN structure to three layers and attached a detection head for small objects. With the proposed sandwich-fusion module, spatial and channel features are extracted from feature maps at three different layers of the backbone. This optimization improves the ability of the multi-scale detection heads to gather spatial localization information about the objects to be detected.
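The kernel merge described above relies on the linearity of convolution: a 1×1 kernel is equivalent to a 3×3 kernel that is zero everywhere except its centre, so the two branches can be summed into one kernel. The sketch below checks this numerically under simplifying assumptions that are not from the paper's code: a single channel, stride 1, zero padding, no batch normalization folding, and hypothetical weights and input values.

```python
def conv2d_same(image, kernel):
    """Single-channel 2D cross-correlation, zero padding, stride 1, odd square kernel."""
    k = len(kernel)
    pad = k // 2
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total = 0.0
            for i in range(k):
                for j in range(k):
                    yy, xx = y + i - pad, x + j - pad
                    if 0 <= yy < h and 0 <= xx < w:
                        total += kernel[i][j] * image[yy][xx]
            out[y][x] = total
    return out


def merge_branches(kernel_3x3, kernel_1x1):
    """Fold a 1x1 kernel into a 3x3 kernel by adding it to the centre tap."""
    merged = [row[:] for row in kernel_3x3]
    merged[1][1] += kernel_1x1[0][0]
    return merged


# Hypothetical input and weights, for illustration only.
image = [[1.0, 2.0, 0.0, 1.0],
         [0.0, 1.0, 3.0, 2.0],
         [2.0, 0.0, 1.0, 1.0],
         [1.0, 1.0, 0.0, 2.0]]
k3 = [[0.5, -1.0, 0.25],
      [1.0, 2.0, -0.5],
      [0.0, 0.75, 1.5]]
k1 = [[-0.25]]

# Training-time form: two parallel branches whose outputs are summed.
a = conv2d_same(image, k3)
b = conv2d_same(image, k1)
two_branch = [[a[y][x] + b[y][x] for x in range(4)] for y in range(4)]

# Inference-time form: a single 3x3 convolution with the merged kernel.
merged_kernel = merge_branches(k3, k1)
single = conv2d_same(image, merged_kernel)

max_diff = max(abs(two_branch[y][x] - single[y][x])
               for y in range(4) for x in range(4))
print("largest difference between the two forms:", max_diff)
```

The same identity holds per channel pair in a multi-channel layer and for a stride-2 downsampling layer, since both branches sample the same positions. A full RepVGG block would also fold each branch's batch normalization into its kernel and bias before summing.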

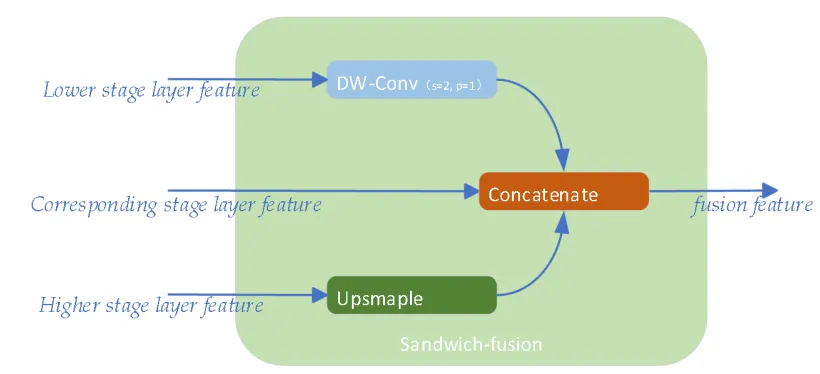

As shown in the figure below, we propose sandwich-fusion (SF), a new fusion module for feature maps of three sizes that optimizes the spatial and semantic information of the target for the detection heads. The module is applied to the top-down layers of the neck and is inspired by the BiC module proposed in YOLOv6 3.0 [YOLOv6 v3.0: A Full-Scale Reloading]. As the figure shows, the SF inputs are the feature maps of the lower, corresponding, and higher stages of the backbone. The goal is to balance the spatial information of low-level features against the semantic information of high-level features, so that the network head can better localize and classify targets.

For the project, we used Ubuntu 20.04 as the operating system, with Python 3.8, PyTorch 1.16.0, and CUDA 11.6 as the software environment. The experiments ran on an NVIDIA 3080 Ti graphics card. The neural network implementation is modified from Ultralytics version 8.0.105. The same hyperparameters were used for training, testing, and validation. The number of training epochs is set to 300, and images fed into the network are rescaled to 640×640. Among the results listed below, all YOLOv8 and Drone-YOLO results were obtained from our own runs. In none of these cases did the networks use pre-trained parameters.
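The stated training settings can be written down roughly as follows. This is a minimal sketch of a YOLOv8 baseline run with the Ultralytics Python API, not the Drone-YOLO training script: the dataset config name `VisDrone.yaml` and the model config name `yolov8l.yaml` are assumptions, and argument names may differ between Ultralytics versions.

```python
# Settings stated in the text; the YAML file names are assumptions.
TRAIN_ARGS = {
    "data": "VisDrone.yaml",   # assumed dataset config name
    "epochs": 300,             # training epochs, as stated
    "imgsz": 640,              # inputs rescaled to 640x640, as stated
    "pretrained": False,       # no pre-trained parameters, as stated
}


def train_baseline(model_cfg: str = "yolov8l.yaml"):
    """Train a YOLOv8 baseline from scratch; requires the ultralytics package."""
    # Imported lazily so the settings above can be inspected without the package.
    from ultralytics import YOLO

    model = YOLO(model_cfg)    # a .yaml config builds the network with fresh weights
    return model.train(**TRAIN_ARGS)
```

Calling `train_baseline()` starts a run with these settings. Building the model from a `.yaml` config rather than a `.pt` checkpoint is what keeps pre-trained weights out of the comparison.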

For our embedded application experiments, we used the NVIDIA Tegra TX2 as the experimental environment. It features a 256-core NVIDIA Pascal architecture GPU that delivers 1.33 TFLOPS of peak computing performance, along with 8GB of memory. The software environment is the Ubuntu 18.04 LTS operating system with NVIDIA JetPack 4.4.1, CUDA 10.2, and cuDNN 8.0.0.

Results on VisDrone2019-test

Results based on NVIDIA Tegra TX2

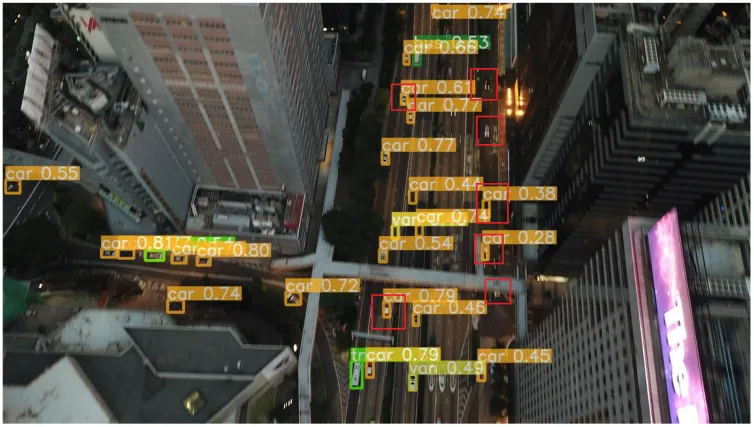

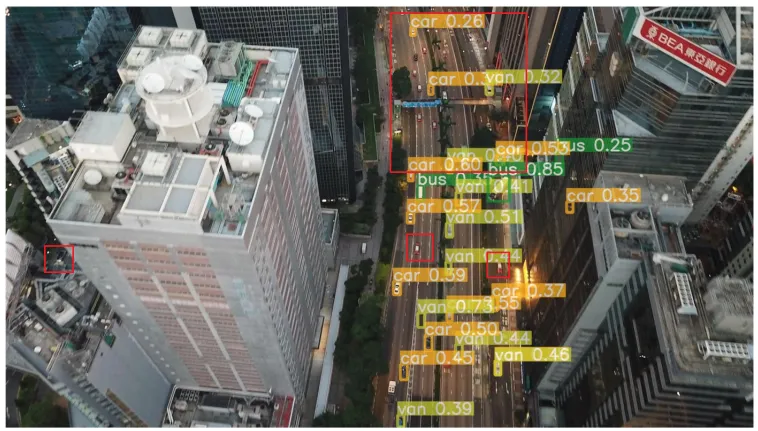

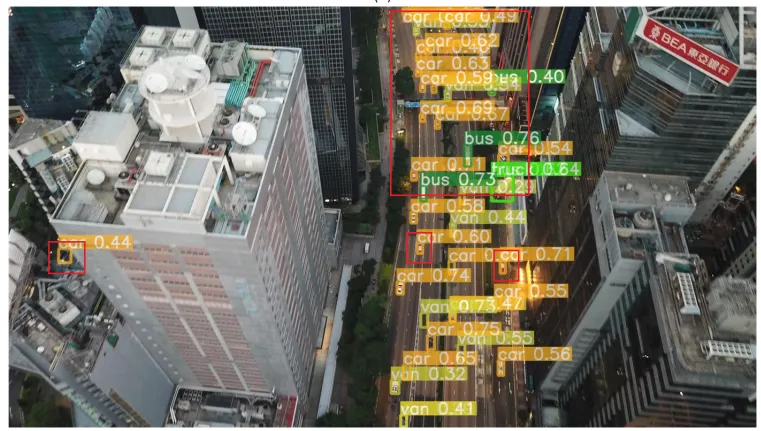

Drone-YOLO results in practice

On the left is the YOLOv8 result, in which most of the targets inside the red box were not detected.

Paper link: www.mdpi.com/2504-446X/7/8/526
