#News ·2025-01-09

Tabular data has now had its ChatGPT moment too.

Just two days ago, a tabular-data model named TabPFN was published in Nature, and it promptly triggered a heated discussion in the data science community.

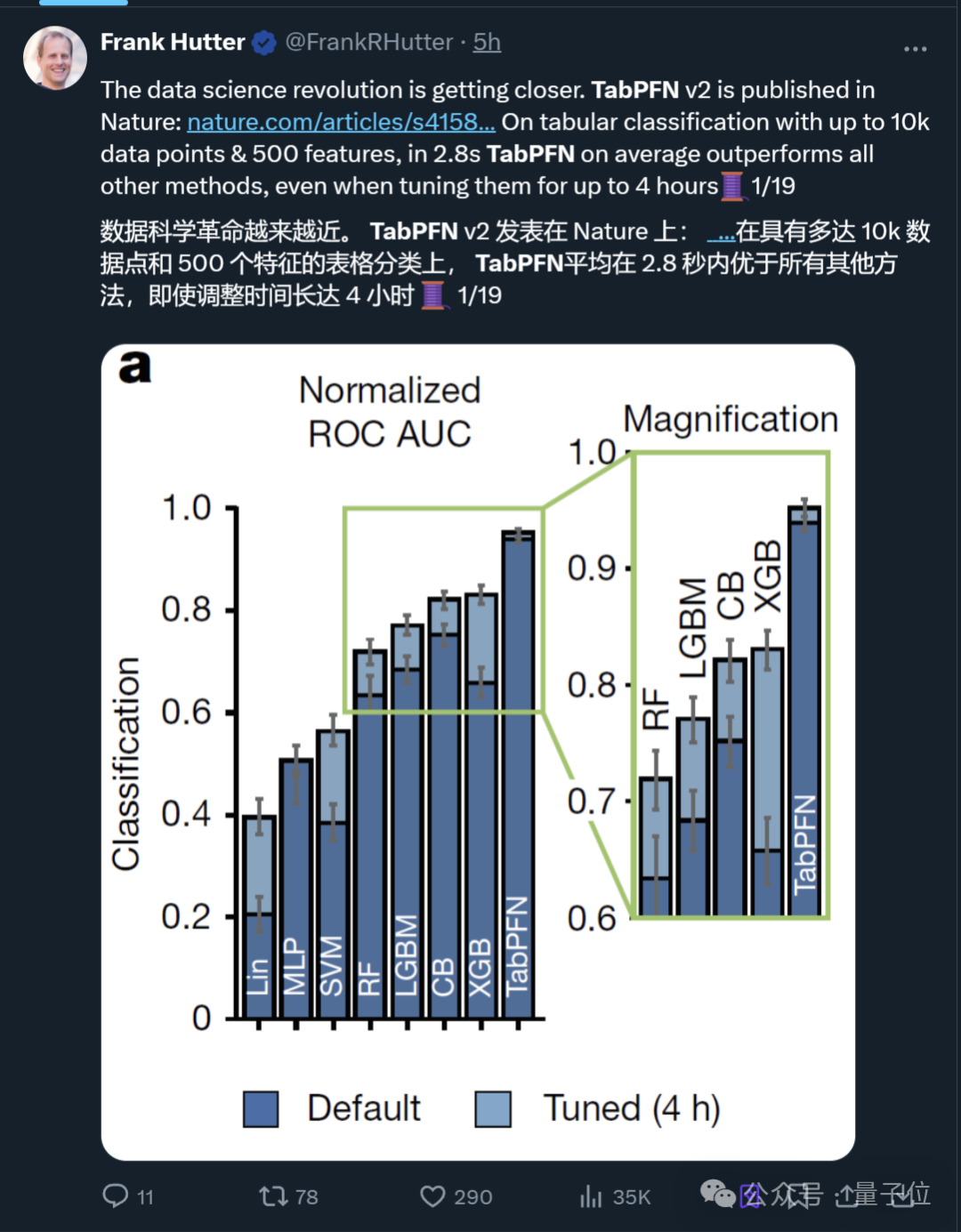

According to the paper, TabPFN is designed for small tables and sets a new state of the art (SOTA) on datasets with up to 10,000 samples.

Specifically, it outperformed all previous methods while taking an average of only 2.8 seconds.

Even when other methods are given up to four hours of tuning time, they still cannot beat it.

More importantly, its pre-trained neural network approach ends the long dominance of traditional ML methods (such as gradient-boosted decision trees) on tabular data.

TabPFN is available out of the box and can make predictions on a new table quickly, without any task-specific training.
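As a rough illustration of what "out of the box" means in practice, here is a minimal sketch built around the scikit-learn-style `TabPFNClassifier` from the open-source `tabpfn` package. The helper names `holdout_accuracy` and `run_tabpfn_demo` are hypothetical and exist only for this example; the package import is done lazily, so it is needed only if you call `run_tabpfn_demo`.

```python
import numpy as np


def holdout_accuracy(model, X_train, y_train, X_test, y_test):
    """Fit any scikit-learn-style classifier and return its test accuracy."""
    model.fit(X_train, y_train)
    predictions = np.asarray(model.predict(X_test))
    return float(np.mean(predictions == np.asarray(y_test)))


def run_tabpfn_demo(X_train, y_train, X_test, y_test):
    """Score TabPFN with default settings (requires `pip install tabpfn`)."""
    # Imported lazily so that holdout_accuracy has no hard dependency on tabpfn.
    from tabpfn import TabPFNClassifier

    model = TabPFNClassifier()  # defaults only: no hyperparameter search
    return holdout_accuracy(model, X_train, y_train, X_test, y_test)
```

The point of the sketch is that there is no per-dataset model design or tuning loop: the same pre-trained model is fitted and queried like any other estimator.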

A companion article in Nature describes the limitations of traditional tabular machine learning.

Take the following common application scenario:

If you run a hospital and want to determine which patients are most at risk of deterioration so that staff can prioritize them, you can create a spreadsheet with one row per patient, columns for age, blood oxygen level and other relevant attributes, and a final column recording whether the patient's condition worsened during the hospital stay. These data are then used to fit a mathematical model that estimates the risk of deterioration in newly admitted patients.

In this example, traditional tabular machine learning draws its inferences from a table of data, which usually means developing and training a custom model for each task.

TabPFN, developed by researchers from institutions including the Machine Learning Lab at the University of Freiburg in Germany, can handle arbitrary tables without task-specific training.

According to the authors, TabPFN v2 is a major upgrade over the original version released two years ago.

At the time, TabPFN v1 was described as having the potential to "revolutionize data science," and now:

We are one step closer to that goal.

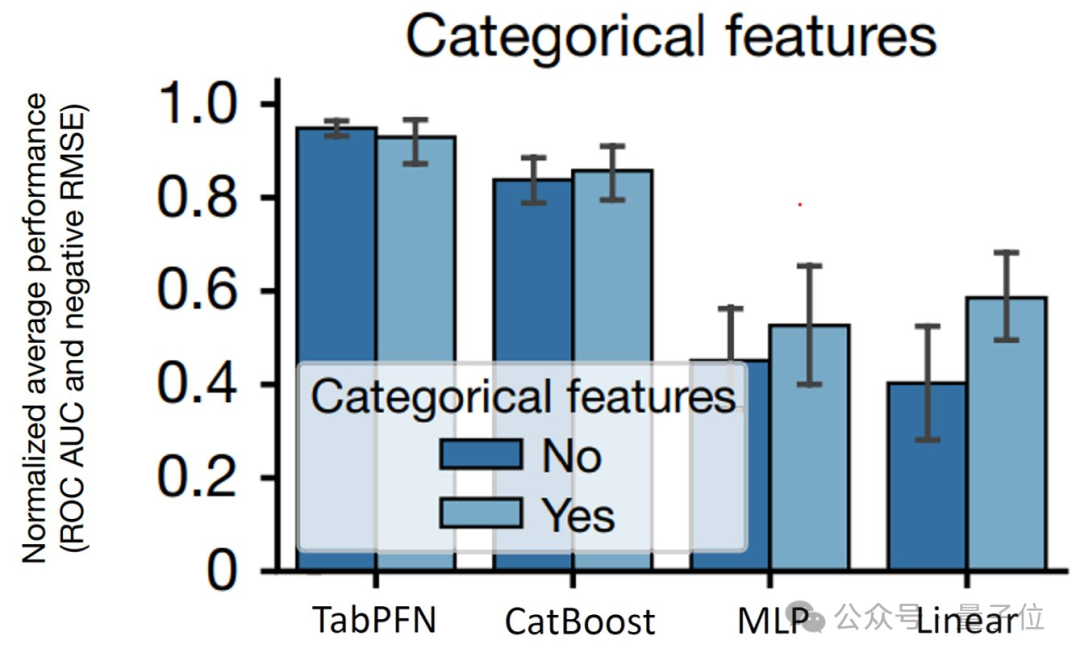

In summary, v2 improves classification performance and adds support for regression tasks, where it outperforms baseline models that were given a long tuning budget.

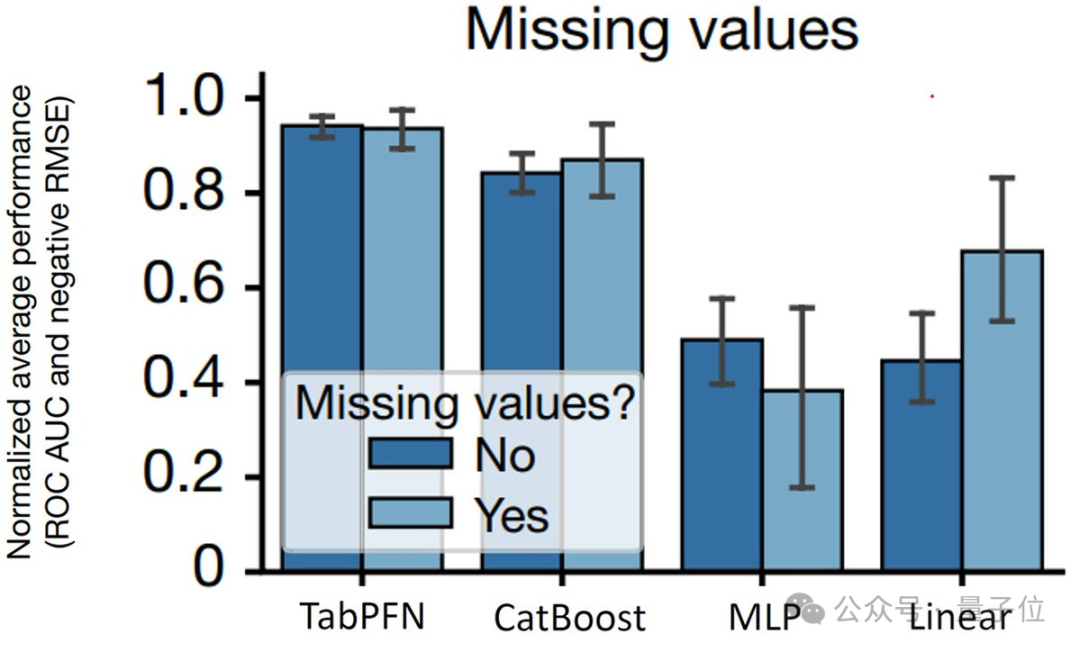

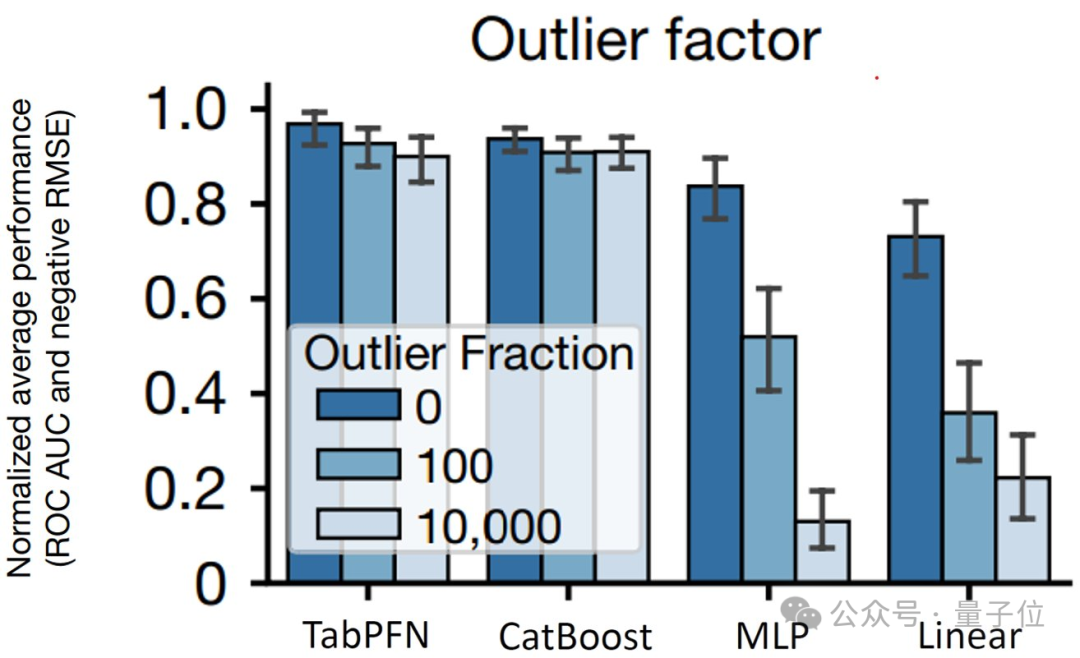

In addition, it natively handles missing values and outliers, which keeps it efficient and accurate across a wide variety of datasets.

Overall, TabPFN v2 is suitable for processing small and medium-sized datasets with no more than 10,000 samples and 500 features.

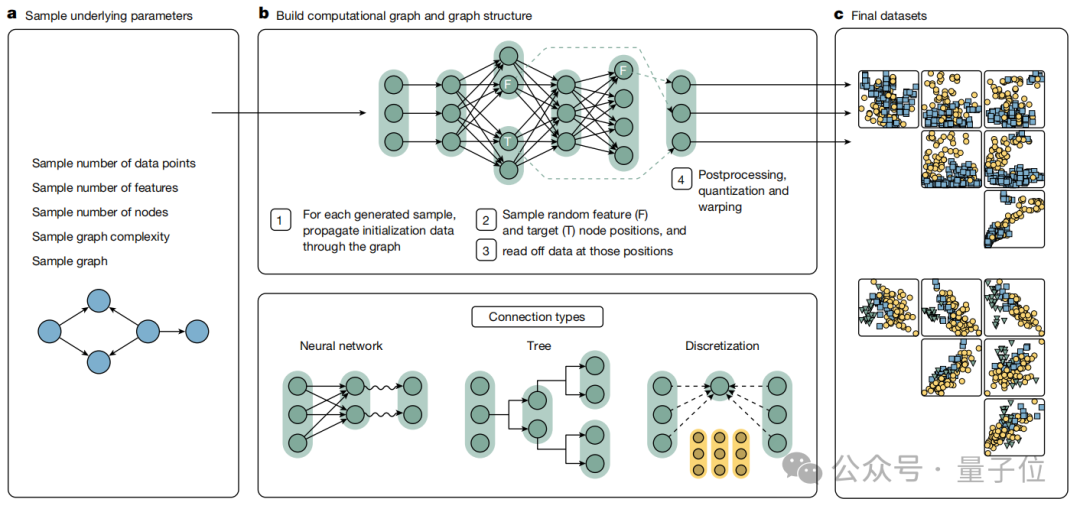

Let's walk through how the TabPFN model is trained and applied, from start to finish.

The first stage is dataset sampling. To prepare the model for a wide variety of real-world situations, the researchers generated a large amount of synthetic data.

They first sample a few high-level parameters (such as the number of data points, features, and graph nodes), then build a computational graph that generates and transforms the data, and finally read off a dataset. Repeating this yields datasets with different distributions and characteristics.

It is worth emphasizing that, to avoid problems common to foundation models, this middle step uses structural causal models (SCMs) to generate the synthetic training datasets.

Simply put, the pipeline samples hyperparameters to build a causal graph, propagates initial data through it, and applies a variety of computational mappings and post-processing steps. This produces a large number of synthetic datasets with different structures and characteristics, from which the model learns strategies for dealing with real-world data problems.
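The idea can be sketched in a few lines of NumPy. This is a heavily simplified illustration, not the authors' generator: the function name `sample_scm_dataset`, the edge probability, the noise scale, and the small set of node functions are all assumptions made for the example, and the post-processing steps are left out.

```python
import numpy as np


def sample_scm_dataset(rng, n_samples=200, n_nodes=8, n_features=4, edge_prob=0.4):
    """Draw one synthetic regression table from a randomly sampled causal graph."""
    # Random DAG: node j may only depend on nodes i < j, so no cycles can occur.
    adjacency = np.triu(rng.random((n_nodes, n_nodes)) < edge_prob, k=1)
    weights = rng.normal(size=(n_nodes, n_nodes)) * adjacency

    # Hypothetical pool of node functions; each node picks one at random.
    node_functions = [np.tanh, np.sin, np.abs, lambda v: v]

    values = np.zeros((n_samples, n_nodes))
    for j in range(n_nodes):
        parent_signal = values[:, :j] @ weights[:j, j]   # zero for root nodes
        func = node_functions[rng.integers(len(node_functions))]
        noise = 0.1 * rng.normal(size=n_samples)          # root nodes are pure noise
        values[:, j] = func(parent_signal) + noise

    # Pick distinct nodes to expose as feature columns and as the target column.
    chosen = rng.permutation(n_nodes)[: n_features + 1]
    X = values[:, chosen[:-1]]
    y = values[:, chosen[-1]]
    return X, y


X, y = sample_scm_dataset(np.random.default_rng(0))
print(X.shape, y.shape)
```

Calling the function with different random generators gives tables whose features and target are linked by different causal structures, which is the property the pre-training data needs.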

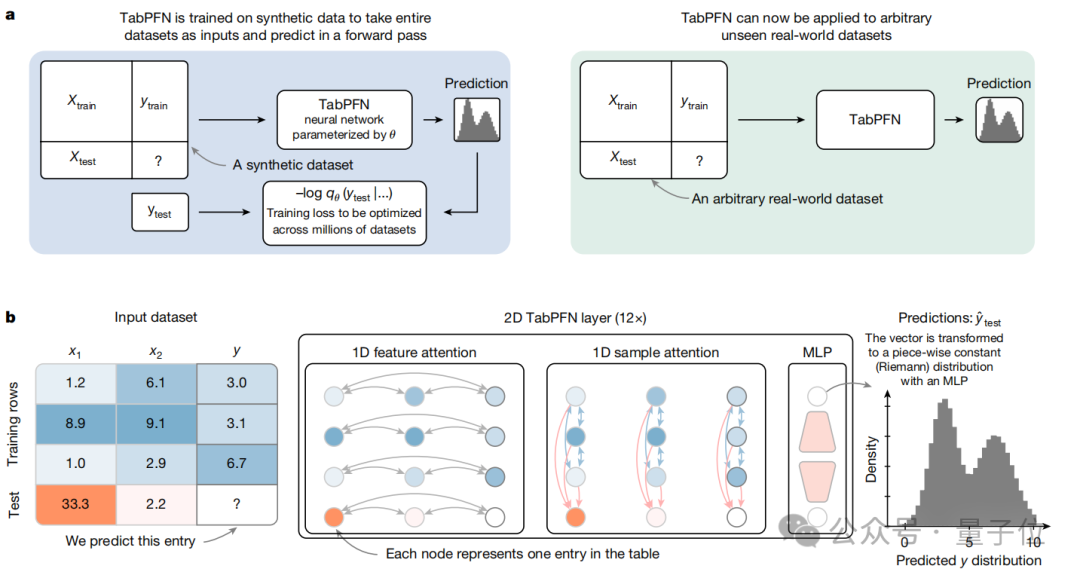

Next comes pre-training, for which the researchers designed a new architecture adapted to the structure of tables.

For example, TabPFN assigns a separate representation to each cell of the table, so the information in every cell can be processed and attended to individually.

Moreover, a two-way attention mechanism further strengthens the model's ability to understand tabular data.

On the one hand, 1D feature attention lets the cells within one sample (one row) attend to each other across features, so the model can capture how the different features of a sample relate to one another.

On the other hand, 1D sample attention lets the cells of one feature (one column) attend to each other across samples, so the model can pick up how that feature varies between samples and identify the overall differences and similarities among them.

This two-way attention mechanism means that the model extracts and uses the same information regardless of the order in which samples and features appear, which improves its stability and generalization.
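A minimal NumPy sketch of the idea follows, assuming each cell has already been embedded into a vector. It uses plain attention without learned projections, multiple heads, or MLP layers, so it illustrates the axis handling and the order-independence property rather than reproducing the published architecture; all function names are hypothetical.

```python
import numpy as np


def softmax(scores):
    """Numerically stable softmax over the last axis."""
    shifted = scores - scores.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def self_attention(tokens):
    """Attention over the second-to-last axis; tokens has shape (..., length, dim)."""
    dim = tokens.shape[-1]
    scores = tokens @ np.swapaxes(tokens, -1, -2) / np.sqrt(dim)
    return softmax(scores) @ tokens


def two_way_attention(cells):
    """cells has shape (n_samples, n_features, dim): one vector per table cell."""
    # Step 1: within every row, cells attend to each other across features.
    cells = cells + self_attention(cells)
    # Step 2: within every column, cells attend to each other across samples.
    by_column = np.swapaxes(cells, 0, 1)           # (n_features, n_samples, dim)
    by_column = by_column + self_attention(by_column)
    return np.swapaxes(by_column, 0, 1)            # back to (n_samples, n_features, dim)


rng = np.random.default_rng(0)
table = rng.normal(size=(5, 3, 4))
row_order = rng.permutation(5)
# Shuffling the rows of the input simply shuffles the rows of the output.
print(np.allclose(two_way_attention(table[row_order]),
                  two_way_attention(table)[row_order]))
```

Because neither step uses positional information, reordering rows or columns of the input only reorders the output in the same way, which is the behavior described above.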

The training and inference procedures have also been optimized.

For example, to avoid redundant computation, the model can reuse a saved training state when predicting on test samples. The training rows are processed separately from the test rows, so their intermediate results can be stored once and need not be recomputed for every new prediction.
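The caching idea can be illustrated with a single attention layer in NumPy. This is a sketch under simplifying assumptions (one layer, one head, random projection matrices), and the class name `CachedContext` is hypothetical: the keys and values of the training rows are computed once, and each batch of test rows only needs its own queries.

```python
import numpy as np


class CachedContext:
    """Stores the keys and values of the training rows for reuse at inference."""

    def __init__(self, train_tokens, w_key, w_value):
        # Computed once, when the training table is first seen.
        self.keys = train_tokens @ w_key
        self.values = train_tokens @ w_value

    def attend(self, test_tokens, w_query):
        """Let test rows attend to the cached training rows."""
        queries = test_tokens @ w_query
        scores = queries @ self.keys.T / np.sqrt(self.keys.shape[1])
        scores = scores - scores.max(axis=1, keepdims=True)
        weights = np.exp(scores)
        weights = weights / weights.sum(axis=1, keepdims=True)
        return weights @ self.values


rng = np.random.default_rng(0)
w_q, w_k, w_v = (rng.normal(size=(8, 8)) for _ in range(3))
context = CachedContext(rng.normal(size=(100, 8)), w_k, w_v)

# Two separate test batches reuse the same cached training state.
first = context.attend(rng.normal(size=(5, 8)), w_q)
second = context.attend(rng.normal(size=(7, 8)), w_q)
print(first.shape, second.shape)
```

The work in `__init__` scales with the size of the training table, while each later call to `attend` only pays for the new test rows.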

At the same time, the model further reduces memory usage through half-precision computation and activation checkpointing.
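To see why precision matters at this scale, consider a back-of-the-envelope estimate of one layer's per-cell activations. The embedding width of 64 below is an assumption chosen purely for illustration, not a figure from the paper, and activation checkpointing (recomputing activations instead of storing them) is not modeled here.

```python
import numpy as np


def activation_bytes(n_samples, n_features, embed_dim, dtype):
    """Memory for one tensor holding an embed_dim vector per table cell."""
    return n_samples * n_features * embed_dim * np.dtype(dtype).itemsize


# Largest table the article mentions: 10,000 samples by 500 features.
full = activation_bytes(10_000, 500, 64, np.float32)
half = activation_bytes(10_000, 500, 64, np.float16)
print(f"float32: {full / 1e9:.2f} GB, float16: {half / 1e9:.2f} GB")
```

Since every cell carries its own vector, memory grows with the product of samples and features, so halving the bytes per value halves the footprint of each stored activation tensor.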

The final stage is prediction. Thanks to in-context learning (ICL), the model does not need to be retrained for each new dataset and can be applied directly to a variety of previously unseen real-world datasets.
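The workflow can be mimicked with a toy stand-in. The class below is not TabPFN: it replaces the pre-trained transformer with a simple distance-based attention over the stored rows (essentially kernel regression), and the name `InContextRegressor` and its `temperature` parameter are hypothetical. What it does share with in-context learning is the shape of the interface: `fit` only stores the table as context, and `predict` is a single forward pass with no weight updates.

```python
import numpy as np


class InContextRegressor:
    """Toy stand-in for ICL: no training loop, the table itself is the context."""

    def __init__(self, temperature=0.1):
        self.temperature = temperature

    def fit(self, X, y):
        # "Fitting" only stores the labeled rows; no parameters are updated.
        self.X_context = np.asarray(X, dtype=float)
        self.y_context = np.asarray(y, dtype=float)
        return self

    def predict(self, X):
        X = np.asarray(X, dtype=float)
        # Attention-style weights: closer context rows get more weight.
        diffs = X[:, None, :] - self.X_context[None, :, :]
        scores = -(diffs ** 2).sum(axis=-1) / self.temperature
        scores = scores - scores.max(axis=1, keepdims=True)
        weights = np.exp(scores)
        weights = weights / weights.sum(axis=1, keepdims=True)
        return weights @ self.y_context


model = InContextRegressor(temperature=0.01)
x = np.linspace(0.0, 1.0, 21).reshape(-1, 1)

# The same object handles two unrelated "datasets" without any retraining.
print(model.fit(x, x[:, 0] ** 2).predict([[0.5]]))
print(model.fit(x, np.sin(6 * x[:, 0])).predict([[0.5]]))
```

In TabPFN the fixed weighting rule is replaced by a transformer whose weights were learned once on synthetic tables, which is what lets it adapt to each new dataset purely from context.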

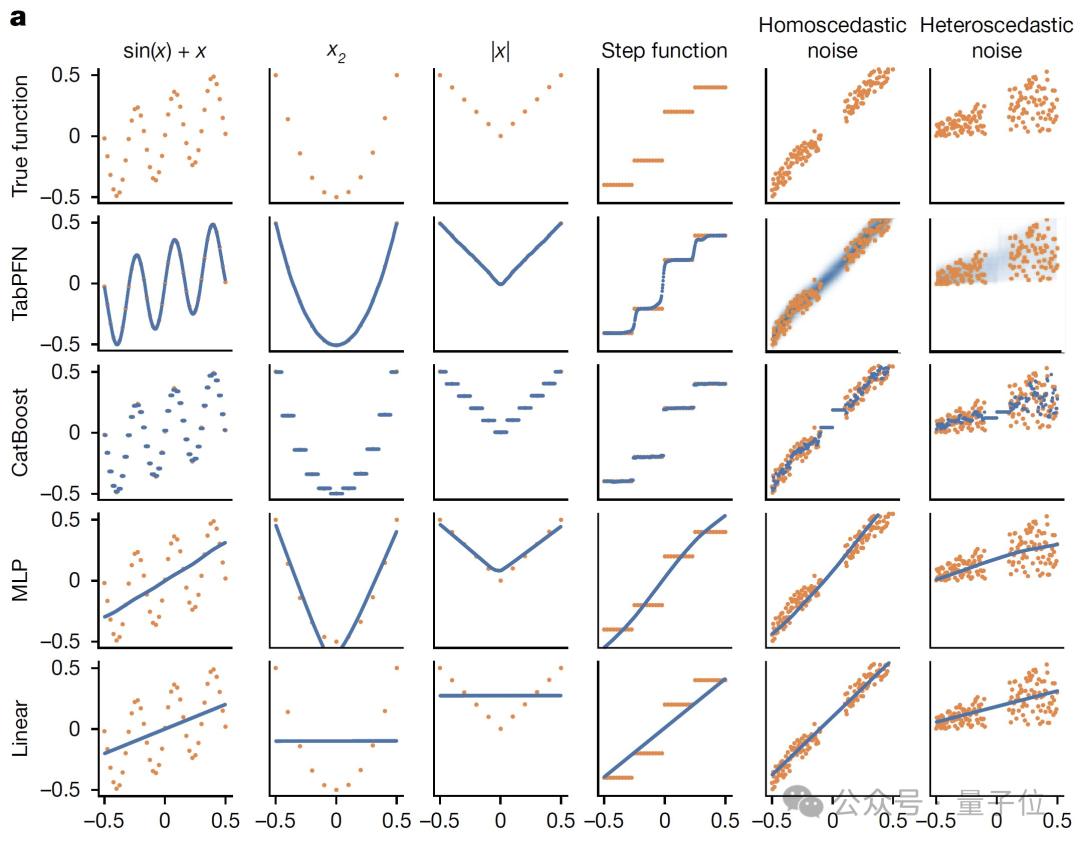

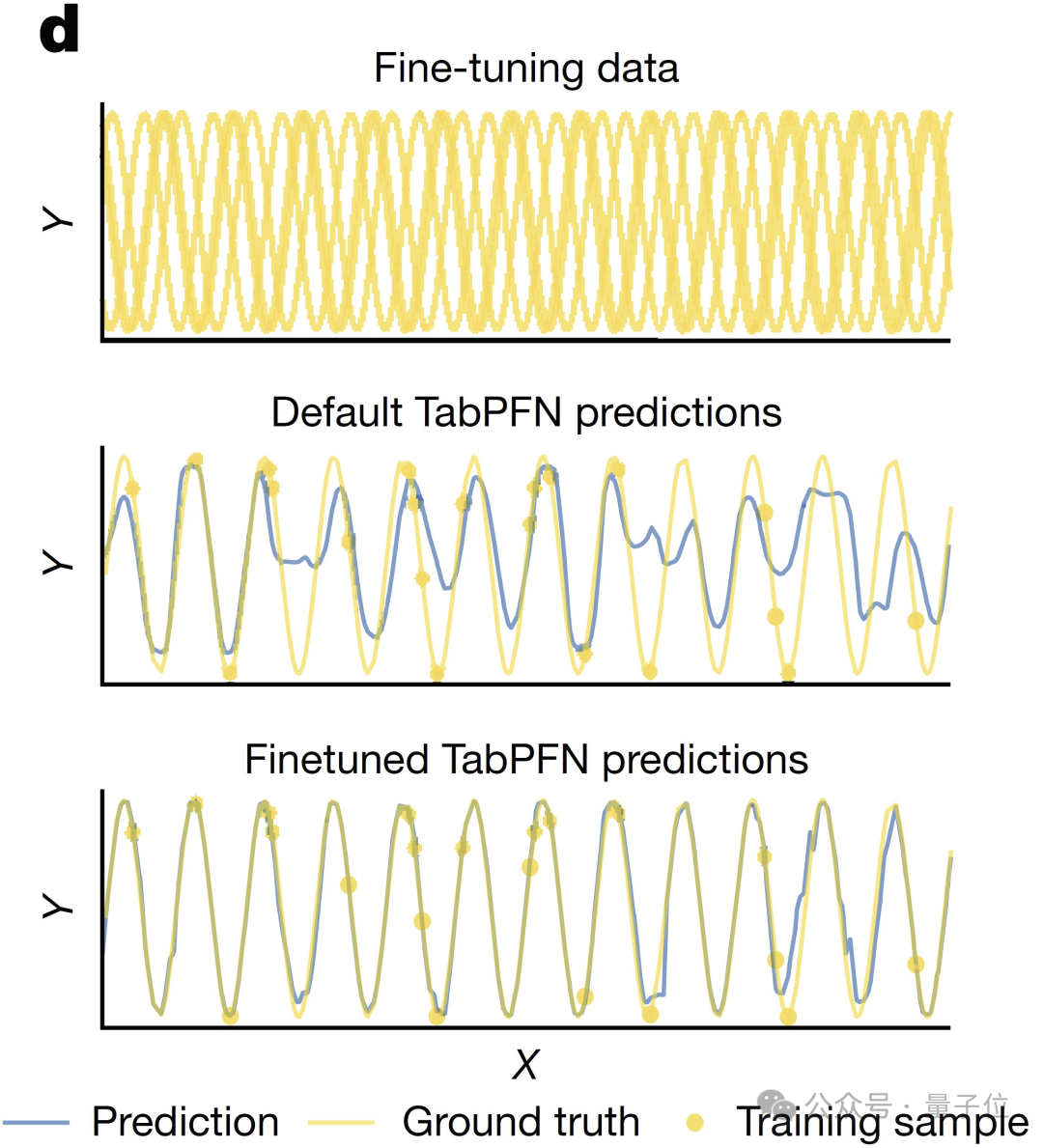

In qualitative experiments, TabPFN models many different function types effectively, unlike linear regression, multi-layer perceptrons (MLP), and CatBoost. (Orange: training data; blue: predictions.)

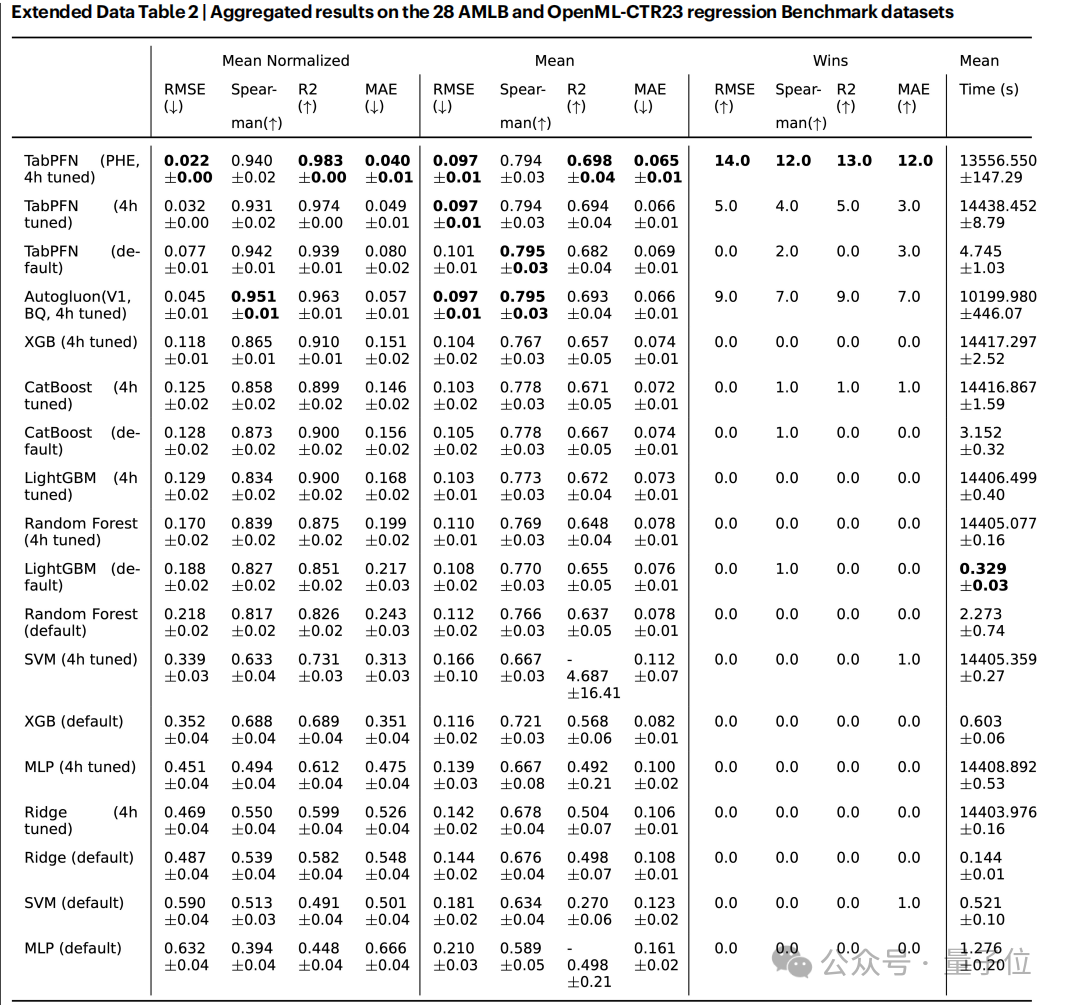

On the quantitative side, TabPFN outperforms strong baselines such as Random Forest and XGBoost on widely used, representative benchmarks such as the AutoML Benchmark and OpenML-CTR23, across multiple metrics covering the two main tasks of classification and regression.

Even on five real Kaggle competitions with fewer than 10,000 training samples, TabPFN beat CatBoost.

Finally, TabPFN also supports fine-tuning on specific datasets.

The code is now open source, and the authors have also released an API that lets users run computations on the authors' GPUs.

Interested readers can give it a try.

API:

https://priorlabs.ai/tabpfn-nature/

Code:

https://github.com/PriorLabs/TabPFN

Reference links:

[1] https://www.nature.com/articles/s41586-024-08328-6

[2] https://www.automl.org/tabpfn-a-transformer-that-solves-small-tabular-classification-problems-in-a-second/

[3] https://x.com/FrankRHutter/status/1877088937849520336
