#News ·2025-01-09

In RAG systems, the core task is to find the stored information that is most similar to the contents of the query. However, vector similarity search does not achieve this, which is why RAG has suffered setbacks in practical applications.

RAG's failures in production can be traced to the use of vector embeddings to measure how similar two pieces of information are, which is clearly not an appropriate choice. A simple example illustrates the point. Consider three words: "king," "queen," and "ruler."

"King" and "ruler" may refer to the same person (so they can be treated as synonyms), but "king" and "queen" clearly refer to different people. Measured as a percentage of similarity, "king/ruler" should score high, while "king/queen" should score zero.

In other words, if the query is about "king," then text snippets containing information about "queen" should be irrelevant, while snippets containing information about "ruler" may be relevant. However, vector embeddings treat "queen" as more relevant to "king" than "ruler" is. Here are the vector similarity scores for "queen" and "ruler" versus "king" when using OpenAI's ADA-002 embedding model:

This means that when searching for information about "king," the system will prioritize snippets of text about "queen" over those about "ruler," even though the latter may be more relevant in content, while information about "queen" is not relevant at all.
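To make the ranking mechanism concrete, here is a minimal sketch of how a retriever orders candidates by cosine similarity. The three-dimensional vectors are made-up placeholders, not real ADA-002 embeddings; they are chosen only to reproduce the ordering described above, and the names `toy_vectors` and `rank_by_similarity` are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy vectors for illustration only (NOT real embeddings).
toy_vectors = {
    "king": [0.9, 0.4, 0.1],
    "queen": [0.8, 0.5, 0.2],
    "ruler": [0.6, 0.1, 0.7],
}

def rank_by_similarity(query, candidates, vectors):
    """Return (candidate, score) pairs, highest cosine similarity first."""
    query_vector = vectors[query]
    scored = [(c, cosine_similarity(query_vector, vectors[c])) for c in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

ranking = rank_by_similarity("king", ["ruler", "queen"], toy_vectors)
for term, score in ranking:
    print(f"{term}: {score:.3f}")
```

With these placeholder vectors, "queen" lands above "ruler". A retriever that sorts purely by this score has no way to know that the lower-scoring term is the synonym.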

The problem with vector embeddings arises not only for terms that refer to people (such as kings), but also for terms that refer to things.

Imagine a query about the characteristics of cats. In theory, texts that mention dogs should have zero similarity, while texts about felines should have extremely high similarity scores. But again, vector embedding gives the wrong result:

Even though the difference between the two scores is only 1%, it still means that texts about dogs take precedence over texts about felines. This clearly doesn't make sense, because a text about dogs is irrelevant to the query, while a text about felines is extremely relevant.

Absolute synonyms are words that have exactly the same meaning. However, even when dealing with absolute synonyms, vector embeddings mistakenly prioritize terms that are not synonyms at all, as the following example demonstrates.

"The Big Apple" is another name for New York City. Suppose Susan is a New Jersey resident who blogs about her experiences at restaurants, museums, and other locations in her home state. In one post, Susan mentions her wedding in "The Big Apple." The problem arises when a visitor to Susan's website asks the chatbot, "Has Susan ever been to New York?"

Unfortunately, a lot of content about New Jersey will rank ahead of Susan's post about getting married. Why? From a vector embedding perspective, "New Jersey" is semantically closer to "New York" than "The Big Apple" is:

Depending on the number of posts that refer to "New Jersey," the single mention of "The Big Apple" could easily be lost among the hundreds of candidates the chatbot retrieves. This shows that vector embeddings can be just as wrong when processing places (such as New York) as they are when processing people (such as kings) and things (such as cats).

In fact, vector embeddings are also problematic when dealing with actions.

For the query "bake a cake," a text discussing "bake a pie" (93%) might take precedence over a text discussing "make a chocolate cake" (92%), even though the former is unrelated to the query and the latter is directly relevant.

The examples above show that vector similarity is not a reliable measure of content similarity. It fails for people (like kings), things (like cats), places (like New York), and actions (like baking a cake). In other words, the similarity percentages produced by vector embeddings are unreliable for questions about people, things, places, and actions, which covers almost every type of question one might ask.

You may wonder whether the examples above were cherry-picked, or whether percentage scores really matter. Let's contrast the misleading portrayal of RAG with how it actually works.

Take OP-RAG[1], which was released on September 3, 2024.

OP-RAG is the work of three researchers at Nvidia, so the research comes from a reputable source.

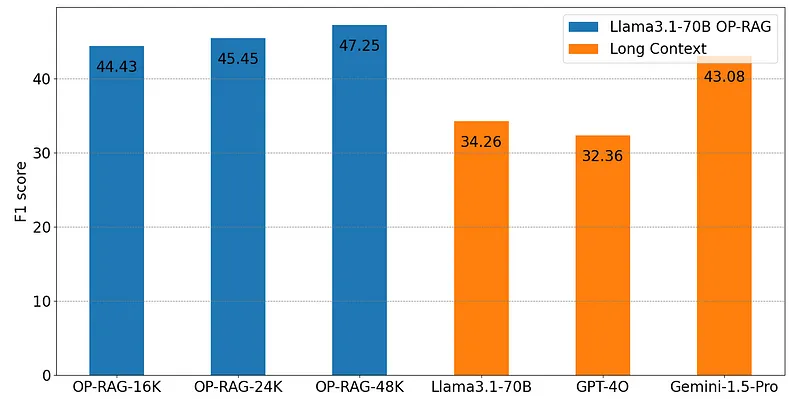

Take a look at the results shown in the chart above, which is based on the EN.QA dataset. The first two questions in the data set are:

The answers to these questions are short and don't require complicated explanations. Moreover, this dataset represents only 3.8% of the entire Wikipedia corpus.

Despite Nvidia's wealth of resources, the modest size of the dataset, and the relatively short answers, the researchers set a new state of the art with a RAG method that sends 48K tokens of text chunks along with the user query, achieving an F1 score of 47.25 (the score would be lower if less content were sent).
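For readers unfamiliar with the metric, the following is a minimal sketch of the token-overlap F1 commonly used to score short question-answering outputs. It assumes simple lowercase whitespace tokenization; the source does not state exactly how the benchmark computes its score, so treat this as an illustration of the general idea rather than the benchmark's scoring script. The function name `token_f1` is hypothetical.

```python
from collections import Counter

def token_f1(prediction, reference):
    """Harmonic mean of token precision and recall between two answers."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        # Both empty counts as a match; one empty counts as a miss.
        return float(pred_tokens == ref_tokens)
    # Multiset intersection counts each shared token at most as often
    # as it appears in both answers.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)

print(token_f1("the cat sat", "the cat"))
```

Scores like this are usually averaged over all questions and reported on a 0 to 100 scale, so under that assumption a figure of 47.25 means that, on average, less than half of the answer content overlaps with the reference.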

Did these Nvidia researchers not realize that they should have been able to store 25 times more vectors and always find relevant answers in the first three matches? Of course not. That is simply not how RAG works in the real world. Nvidia's LongRAG[2], released on November 1, 2024, is another good example.

Take a look at the research published by Databricks[3] in October 2024.

To be correct more than 80% of the time, RAG has to send 64K tokens of text to OpenAI's o1 model. None of the other models, including GPT-4o, GPT-4 Turbo, and Claude-3.5 Sonnet, met this standard. But the o1 results are fraught with problems.

First, its hallucination rate is high.

Second, o1 is unbearably slow even on short inputs, so processing 64K tokens of text is unacceptably slow.

Third, o1 is expensive to run.

What's more, industry rumors say that the upcoming batch of new models will not bring significant performance improvements - Anthropic has even postponed the release of the new models indefinitely.

Even if larger models could solve the problem, they would be slower and more expensive. In other words, they would be too slow and too expensive for practical use. Would a business be willing to pay more for a chatbot than for a real person, especially one that takes nearly a minute to give an unreliable answer?

This is the current state of RAG. This is the consequence of depending on vector embeddings.

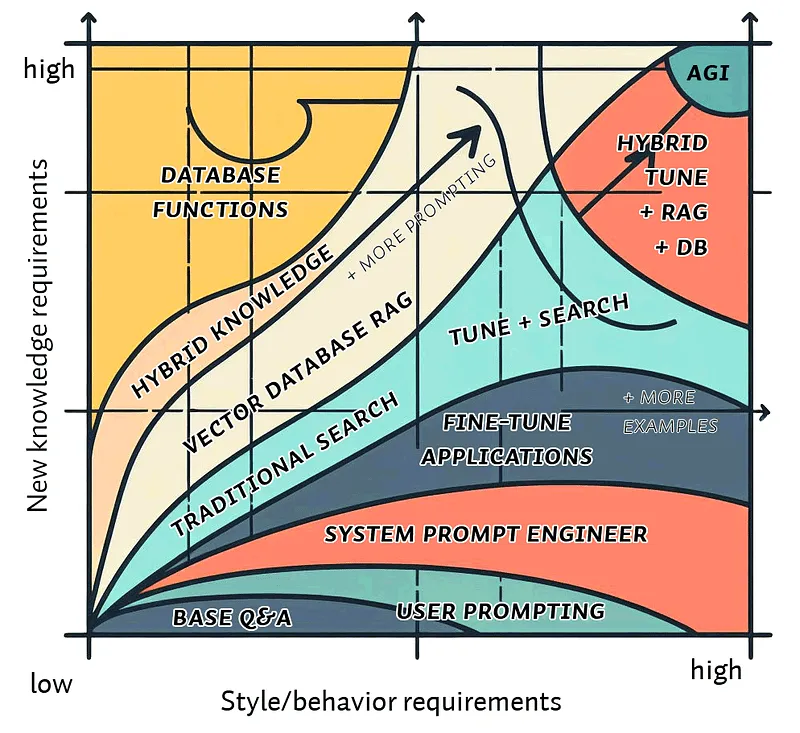

I am writing this post because I see a lot of self-doubt among data scientists and programmers on forums who worry that they are doing something wrong. More often than not, someone eagerly offers a series of fixes (reranking, query rewriting, BM25, knowledge graphs, and so on) in the hope that one of them will work.

The problem is that what millions of people are learning is simply wrong. Here's an excerpt from an October 2024 update by the co-founder of a company that has trained 400,000 people:

RAG is best suited for scenarios where the amount of data is large, the LLM context window cannot be loaded at once, and fast response and low latency are required. ...

RAG systems now have a standard architecture that has been used in many popular frameworks, so developers don't have to start from scratch. ...

After the data is converted into a vector, the vector database can quickly find similar items, because similar items exist in the vector space in the form of similar vectors, we call it a vector store (i.e. storing vectors). Semantic search takes place in vector stores and understands the meaning of a user query by comparing the embedding vector of the user query with the embedding vector of the stored data. This ensures the relevance and accuracy of search results, regardless of the keywords used in a user's query or the type of data searched.

Mathematically, vector embeddings do not locate information by comparing percentages of similarity. They do not understand the true intent of the user's query. They do not guarantee the relevance of search results for even the simplest user queries, let alone "regardless of the keywords used in the query or the type of data searched."

As the OP-RAG research paper shows, even when 400 chunks of data are retrieved through vector search, the large language model (LLM) fails to find the relevant information on the most basic tests more than half the time. Nonetheless, the textbook tells data scientists: "In practice, we can upload an entire website or course content to Deep Lake [vector database] to search through thousands of documents... To generate a response, we extract the first k (say the first 3) blocks that best match the user's question, format them into a prompt, and send them to the model with the temperature set to 0."

Textbooks generally teach students that vector embedding techniques are powerful enough to store "millions of documents" and be able to find answers to user queries in the first 3 most relevant blocks of data. However, based on mathematical principles and cited research results, this statement is clearly inaccurate.
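The textbook recipe quoted above can be sketched in a few lines, which also shows how little stands between the similarity score and the final answer. This is an illustrative sketch only: the chunk texts and two-dimensional vectors are made-up placeholders, the function names `retrieve_top_k` and `build_prompt` are hypothetical, and the call to the model is deliberately left out.

```python
import heapq
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def retrieve_top_k(query_vector, chunks, k=3):
    """Return the k (text, vector) pairs most similar to the query."""
    return heapq.nlargest(
        k, chunks, key=lambda chunk: cosine_similarity(query_vector, chunk[1])
    )

def build_prompt(question, top_chunks):
    """Concatenate the retrieved chunk texts into a single prompt string."""
    context = "\n\n".join(text for text, _ in top_chunks)
    return f"Context:\n{context}\n\nQuestion: {question}"

# Hypothetical stored chunks with placeholder vectors (NOT real embeddings).
stored_chunks = [
    ("chunk A", [1.0, 0.0]),
    ("chunk B", [0.0, 1.0]),
    ("chunk C", [0.9, 0.1]),
    ("chunk D", [0.5, 0.5]),
]

top_chunks = retrieve_top_k([1.0, 0.0], stored_chunks, k=2)
prompt = build_prompt("example question", top_chunks)
print(prompt)
```

Everything outside the top k is discarded before the model ever sees it, so if the relevant chunk scores slightly lower than an irrelevant one, no choice of temperature can bring it back.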

The key to solving the problem is to no longer rely solely on vector embedding technology.

But that doesn't mean vector embeddings are worthless. Not so! They play a crucial role in the field of natural language processing (NLP).

For example, vector embeddings are a powerful tool for dealing with polysemous words. Take the word "glasses." It can refer either to the glasses you drink from every day or to the glasses you wear on your face.

Suppose someone asks: What kind of glasses does Julia Roberts wear? With vector embeddings, we can ensure that information about eyeglasses ranks above information about drinking glasses, which is exactly the kind of semantic processing they are good at.

The advent of ChatGPT has triggered an unfortunate shift in the data science community. Important NLP tools such as synonyms, hyponyms, hypernyms, and holonyms were sidelined in favor of chatbot queries.

There is no doubt that large language models (LLMs) do simplify some aspects of NLP. But at the moment, we seem to be leaving valuable technology behind.

LLMs and vector embedding are the key technologies of NLP, but they are not the complete solution.

For example, many companies have found that when a Chatbot fails to present a list of products that visitors want, visitors often choose to leave. To this end, these companies are trying to replace traditional keyword search with synonym-based search.

Synonym-based search does find products that keyword search misses, but it comes at a cost. Because terms are polysemous, irrelevant results often bury what the visitor really wants. A visitor looking to buy drinking glasses, for example, might see a list of eyeglasses.

This is exactly where vector embeddings can play a useful role, so there is no need to reject them outright. Instead of relying entirely on vector embeddings, we should use them to refine search results: on top of synonym-based search, vector embeddings are used to push the most relevant results to the top.
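The two-stage idea described above can be sketched as follows: a synonym lookup decides which items are candidates at all, and vector similarity is used only to order those candidates. This is a minimal sketch under stated assumptions, not a production design: the synonym table, product titles, and two-dimensional vectors are made-up placeholders, and the names `synonym_search` and `rerank` are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical synonym table; a real system would use a curated lexicon.
SYNONYMS = {"glasses": {"glasses", "tumblers", "spectacles", "eyeglasses"}}

# Hypothetical catalog with placeholder vectors (NOT real embeddings).
PRODUCTS = [
    {"title": "Crystal tumblers set", "vector": [0.9, 0.1]},
    {"title": "Reading spectacles", "vector": [0.1, 0.9]},
    {"title": "Wine glasses", "vector": [0.8, 0.2]},
    {"title": "Coffee mug", "vector": [0.7, 0.3]},
]

def synonym_search(term, products, synonyms):
    """Stage 1: keep products whose title contains the term or a synonym."""
    terms = synonyms.get(term, {term})
    return [
        p for p in products
        if any(word in terms for word in p["title"].lower().split())
    ]

def rerank(query_vector, candidates):
    """Stage 2: order the candidates by cosine similarity to the query."""
    return sorted(
        candidates,
        key=lambda p: cosine_similarity(query_vector, p["vector"]),
        reverse=True,
    )

# Placeholder vector standing in for the query "drinking glasses".
query_vector = [1.0, 0.0]
candidates = synonym_search("glasses", PRODUCTS, SYNONYMS)
results = [p["title"] for p in rerank(query_vector, candidates)]
print(results)
```

In this sketch the coffee mug never becomes a candidate, however close its vector is to the query, because the synonym stage excludes it. The embeddings only settle the polysemy question among items that already matched, pushing the eyeglasses to the bottom.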
