1. Introduction

Have you ever imagined a phone that works like Jarvis, the intelligent butler in the movie Iron Man, completing all kinds of complex tasks smoothly from a single sentence?

Recently, phone manufacturers and AI companies in China and abroad have released phone AI agent products, turning what was once fantasy into something increasingly feasible.

vivo, as an industry leader, launched its phone agent product "PhoneGPT" at its developer conference in October. With a single sentence it can order coffee or takeout, and it can even find the nearest private-kitchen restaurant and book a private room by having the AI place the phone call, which has led netizens to call it "a savior for introverts".

Figure 1 vivo PhoneGPT making a reservation (try it by chatting with Blue Heart Small V, or by swiping on the Small V main screen to explore Agent Square)

Meanwhile, the major manufacturers seem to have agreed in advance to target the same scenario, ordering coffee with one sentence, which recalls the moment Steve Jobs ordered Starbucks with the original iPhone. As one joke puts it, your first cup of coffee this fall will be ordered by your phone's agent.

Figure 2 vivo PhoneGPT ordering coffee (try it by chatting with Blue Heart Small V, or by swiping on the Small V main screen to explore Agent Square)

Despite the industry's rapid development, the recent surge of papers on phone AI agents, and fast-iterating technical approaches, the field still lacks systematic reviews. vivo AI Lab, together with MMLab of the Chinese University of Hong Kong and other teams, has released a 48-page survey on LLM-driven phone GUI agents that covers more than 200 papers and examines in depth the technologies behind LLM-based phone automation agents. The authors hope it will serve as a reference for academia and industry and help advance the field.

- Title: LLM-Powered GUI Agents in Phone Automation: Surveying Progress and Prospects

- Address: https://www.preprints.org/manuscript/202501.0413/v1

1.1 Research Background

- Mobile GUI automation aims to programmatically simulate human interaction with the phone interface to complete complex tasks. Traditional approaches include automated testing, shortcuts, and robotic process automation (RPA), but they struggle with generality, flexibility, maintenance cost, intent understanding, and screen perception.

- The emergence of large language models (LLMs) has brought a new paradigm to phone automation: LLM-based phone GUI agents can understand natural language instructions, perceive the interface, and carry out tasks, promising more intelligent and adaptive automation.

1.2 Research Objectives

- Summarize research on LLM-driven phone GUI agents, including frameworks, models, datasets, and evaluation methods.

- Analyze the current state of LLM applications in phone automation and discuss their advantages and challenges.

- Point out directions for future research and provide a reference for researchers and practitioners in related fields.

1.3 Major Contributions

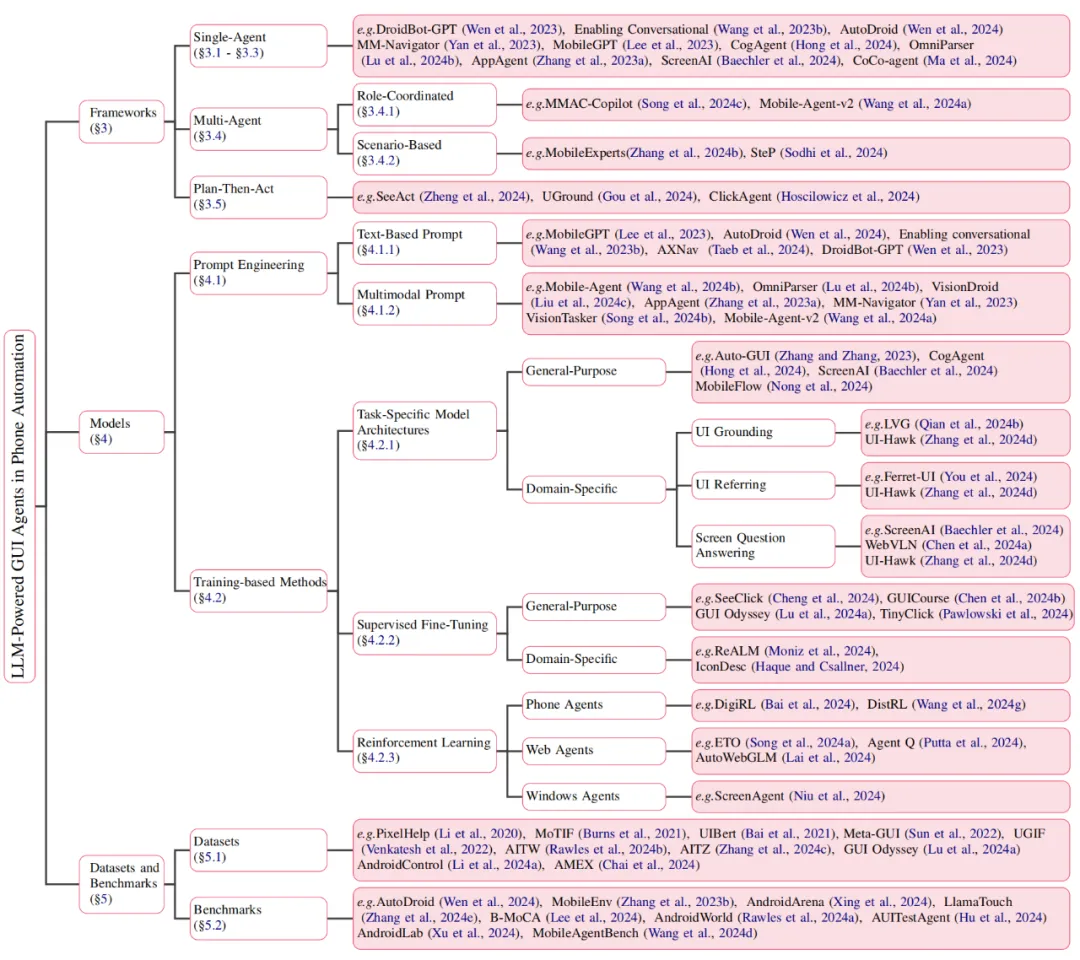

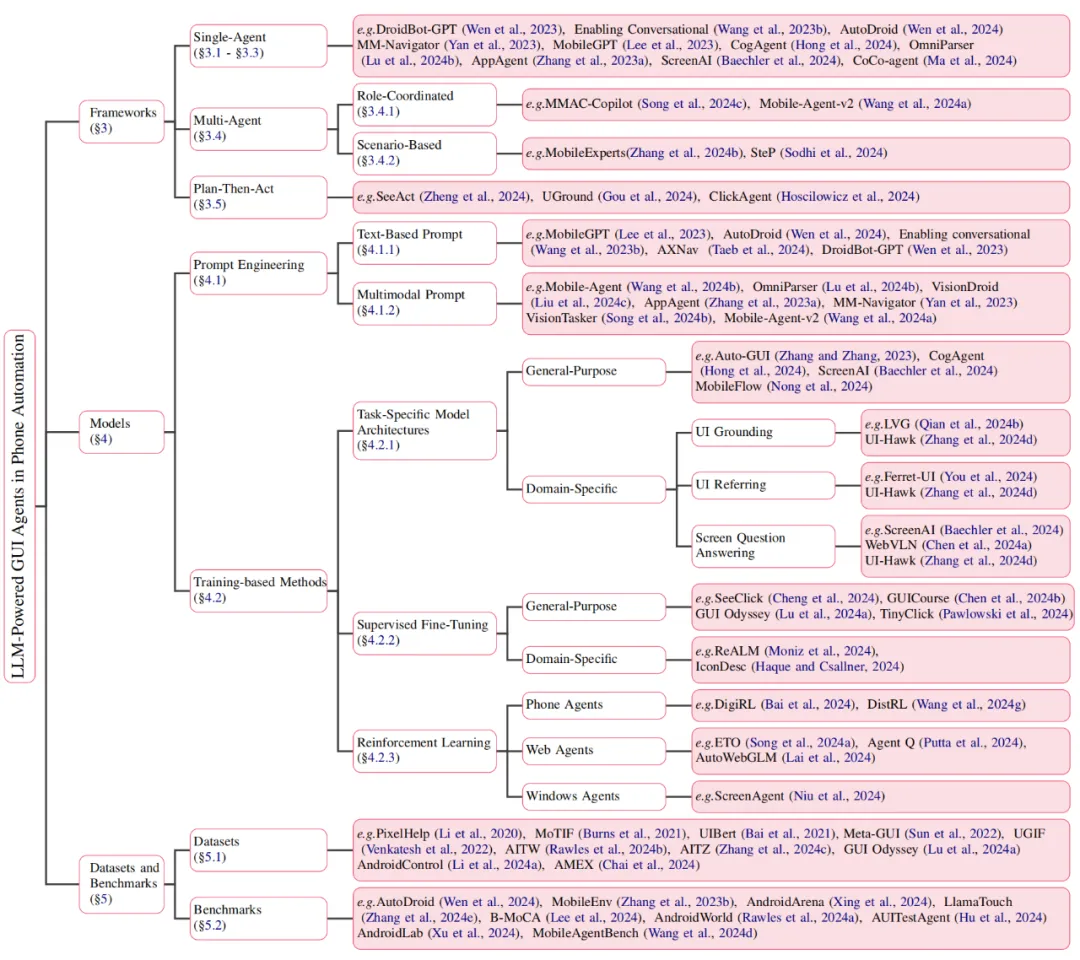

Figure 3 Taxonomy of the literature on LLM-driven phone GUI agents

- Provides a comprehensive, systematic review of LLM-driven phone GUI agents, covering their development, core technologies, and application scenarios.

- Proposes a multi-perspective methodological framework covering framework design, model selection and training, datasets, and evaluation metrics.

- Analyzes in depth why LLMs enable phone automation, examining their advantages in natural language understanding, reasoning, and decision making.

- Presents and evaluates the latest advances, datasets, and benchmarks as resources to support research.

- Identifies key challenges, such as dataset diversity, on-device deployment efficiency, and security, and proposes new perspectives for future research.

2. The development of mobile phone automation

2.1 Mobile phone automation before the LLM era

- Automated testing: To cope with the testing burden created by increasingly complex mobile apps, the field evolved from random testing to model-based testing, learning-based testing, and then reinforcement-learning-based testing, but it still faces challenges in test coverage, efficiency, cost, and model generalization.

- Shortcuts: Tools such as Tasker and iOS Shortcuts automate tasks with predefined rules or trigger conditions, but their scope and flexibility are limited.

- Robotic Process Automation (RPA): Simulates humans performing repetitive tasks on the phone, but struggles with dynamic interfaces and with keeping scripts up to date.

2.2 Challenges of traditional methods

- Limited generality: Traditional methods are tailored to specific apps and interfaces, adapt poorly to other apps and dynamic environments, and lack flexibility and contextual adaptability.

- High maintenance costs: Writing and maintaining automation scripts requires expertise, and scripts must be revised frequently as apps are updated, which is time-consuming and laborious; the high barrier to entry also limits who can use them.

- Poor intent understanding: Rule-based and script-based systems can only perform predefined tasks, struggle to understand complex natural language instructions, and cannot meet users' diverse needs.

- Weak screen GUI perception: Traditional methods struggle to accurately identify and interact with the varied GUI elements of different apps, and have limited ability to handle dynamic content and complex interfaces.

2.3 LLM promotes mobile phone automation

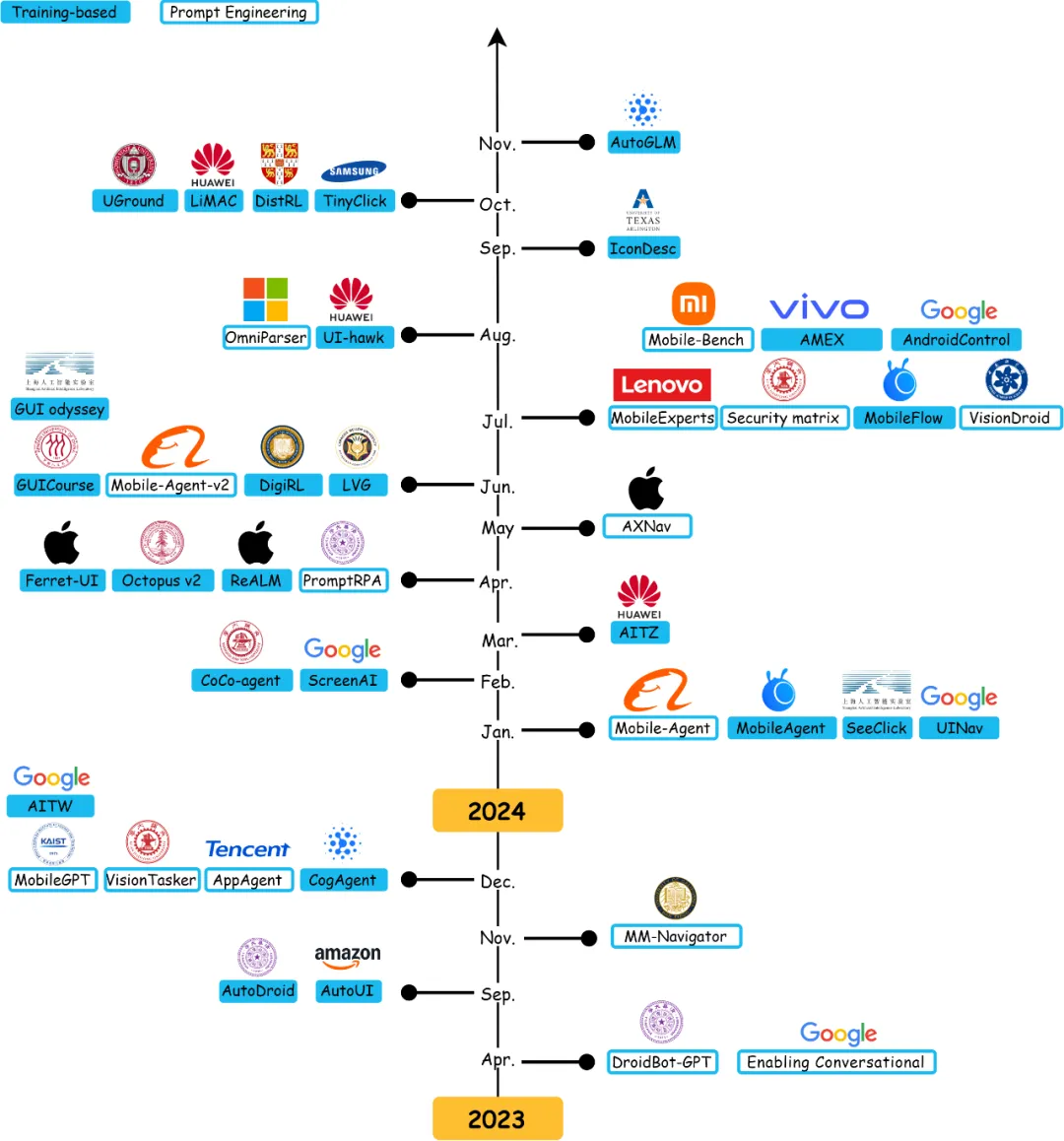

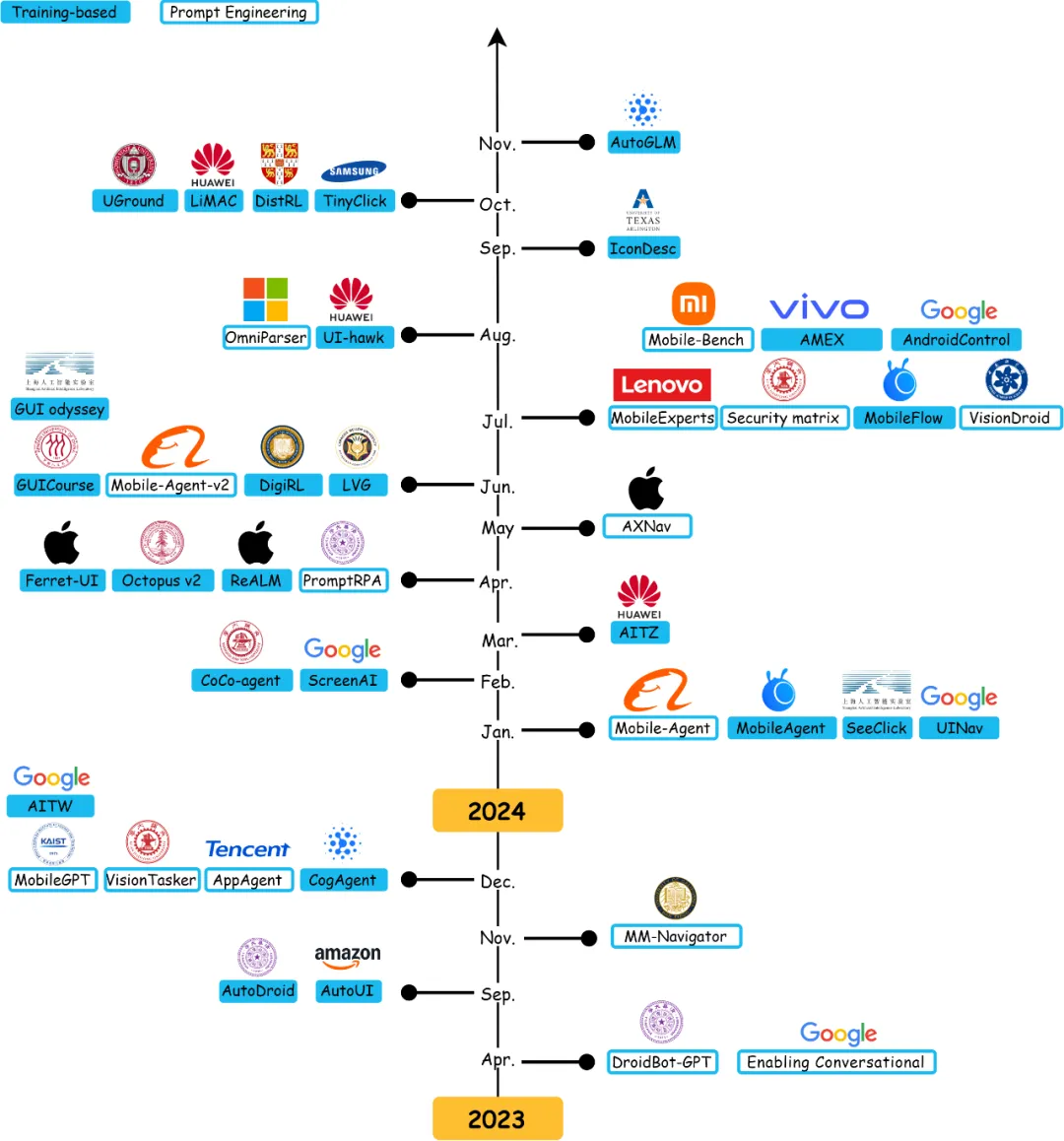

Figure 4 Development milestones of LLM-driven phone GUI agents

- Development history and milestones: LLM applications in phone automation keep evolving, gradually automating more complex tasks as natural language understanding, multimodal perception, and reasoning and decision-making capabilities improve.

- How LLMs address the traditional challenges:

- Contextual semantic understanding: Trained on large text corpora, LLMs understand complex language structures and domain knowledge and can accurately parse multi-step commands.

- Multimodal screen GUI perception: Multimodal perception unifies textual and visual information, enabling accurate localization of and interaction with screen elements.

- Reasoning and decision making: LLMs perform complex reasoning, multi-step planning, and context-aware adaptation based on language, visual context, and interaction history, raising task success rates.

2.4 Emerging business applications

- Apple Intelligence: Announced in June 2024, it integrates AI capabilities into iOS, iPadOS, and macOS, enhancing communication, productivity, and focus through intelligent summaries, prioritized notifications, and context-aware replies while protecting user privacy and security.

- vivo PhoneGPT: Launched in October 2024 as the personal AI assistant in the OriginOS 5 operating system, it can independently break down requests, proactively plan paths, perceive the real-time environment, and dynamically adjust its decisions based on feedback. It can order coffee or takeout from a single sentence, and can even find the nearest private-kitchen restaurant and book a private room by having the AI place the phone call.

- Honor YOYO Agent: Released in October 2024, it adapts to user habits and complex commands and automates multi-step tasks through voice or text commands, such as comparing prices while shopping, filling in forms automatically, customizing drink orders, and silencing the phone for meetings, improving the user experience.

- Anthropic Claude Computer Use: Launched in October 2024 with the Claude 3.5 Sonnet model, Computer Use lets the AI operate a computer much as a human does: observing the screen, moving the cursor, clicking buttons, and typing text, changing the paradigm of human-computer interaction.

- Zhipu.AI AutoGLM: Launched in October 2024, it simulates human operation of a smartphone from simple commands, such as liking and commenting on posts, shopping, making bookings, and ordering food. It can navigate interfaces, interpret visual cues, and execute tasks, demonstrating the commercial potential of LLM-driven phone automation.

3. Mobile GUI agent framework

3.1 Basic Framework

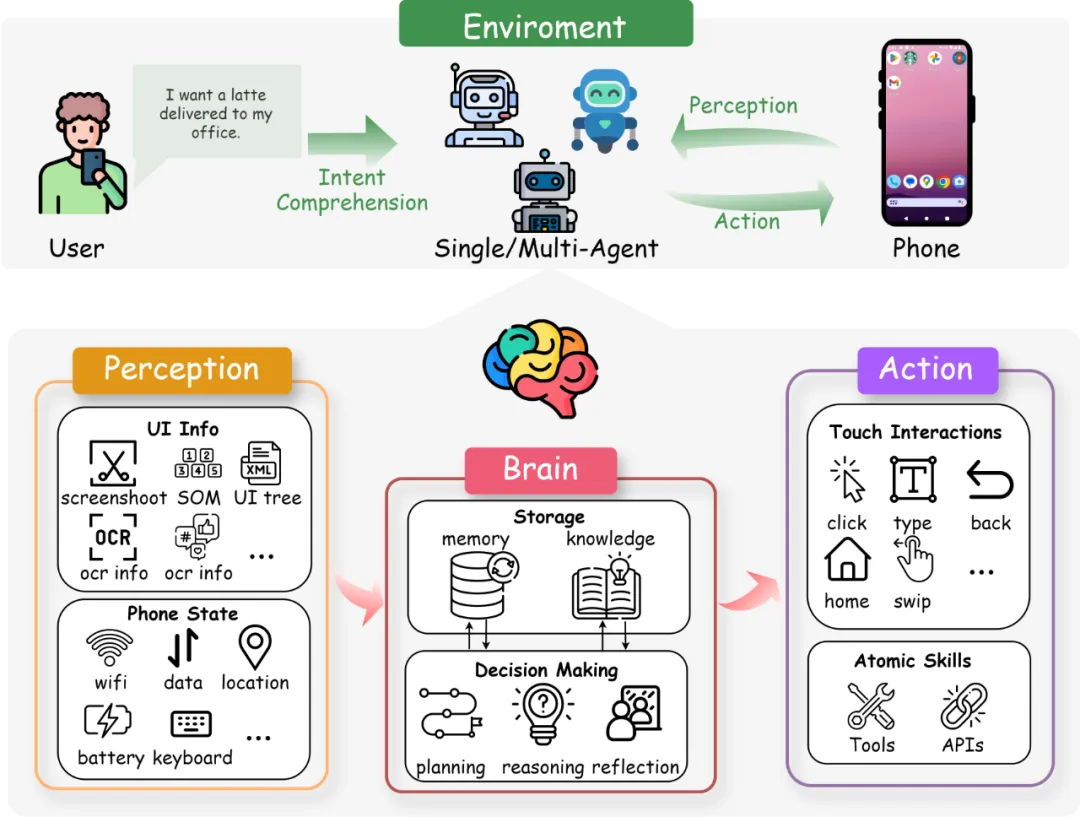

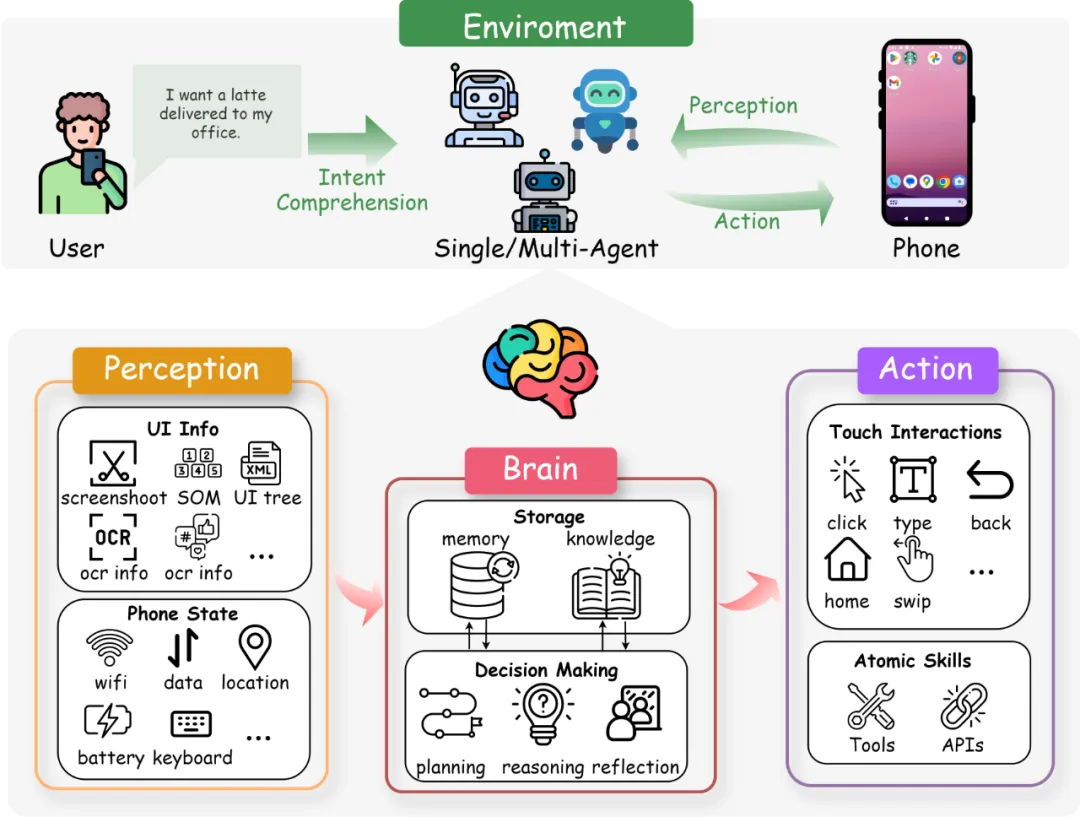

Figure 5 Basic framework of LLM-driven phone GUI agents

- Perception module

- UI information: This includes UI trees (e.g., DroidBot-GPT converts them into natural language sentences), screenshots (e.g., AutoUI relies on screenshots for GUI control), Set-of-Marks annotations that label elements on screenshots (e.g., MM-Navigator), and icon and OCR enhancements (e.g., Mobile-Agent-v2 integrates OCR and icon data).

- Phone state: e.g., keyboard status and location data, which support context-aware operation.

- Brain module

- Storage: Includes memory (e.g., a record of past screens and task-related content) and knowledge (from pre-training, domain-specific training, and knowledge injection).

- Decision making: Includes planning (e.g., the planning agent in Mobile-Agent-v2 tracks task progress), reasoning (which can be strengthened with chain-of-thought prompting), and reflection (e.g., the reflection agent in Mobile-Agent-v2 evaluates decisions and adjusts them).

- Action module: Executes actions such as touch interactions, gestures, text input, system operations, and media control to interact with the phone's UI and system functions, ensuring that decisions become actual operations on the device.
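The perception, brain, and action modules above can be sketched as one step of a toy agent loop. This is a minimal illustration under stated assumptions, not the survey's or any product's implementation: `UINode` is a hypothetical, simplified UI tree schema, `perceive` flattens it into indexed sentences in the spirit of DroidBot-GPT's UI-tree-to-text conversion, the LLM is replaced by a hand-written `toy_policy`, and `execute` returns a command string instead of driving a real device.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class UINode:
    """Simplified UI tree node; a hypothetical schema, not Android's real one."""
    cls: str
    text: str = ""
    bounds: tuple = (0, 0, 0, 0)  # (left, top, right, bottom) in pixels
    clickable: bool = False
    children: list = field(default_factory=list)

@dataclass
class Action:
    kind: str         # "tap", "type", or "done"
    target: int = -1  # index of an element produced by perceive()
    text: str = ""

def perceive(root):
    """Perception: flatten the UI tree (pre-order) into indexed text lines."""
    nodes, lines, stack = [], [], [root]
    while stack:
        node = stack.pop()
        if node.clickable or node.text:
            kind = "button" if node.clickable else "text"
            lines.append(f"[{len(nodes)}] {kind}: {node.text}")
            nodes.append(node)
        stack.extend(reversed(node.children))  # visit children left to right
    return nodes, lines

def execute(action, nodes):
    """Action module: map a decision to a device command (here, a string)."""
    if action.kind == "tap":
        l, t, r, b = nodes[action.target].bounds
        return f"tap({(l + r) // 2}, {(t + b) // 2})"
    if action.kind == "type":
        return f"input_text({action.text!r})"
    return "noop"

@dataclass
class Agent:
    policy: Callable  # stand-in for an LLM: (goal, observation, memory) -> Action
    memory: list = field(default_factory=list)

    def step(self, goal, root, phone_state):
        nodes, lines = perceive(root)
        observation = "\n".join(lines) + f"\nkeyboard_open={phone_state['keyboard_open']}"
        action = self.policy(goal, observation, self.memory)  # brain: decide
        self.memory.append((observation, action))              # brain: remember
        return execute(action, nodes)

def toy_policy(goal, observation, memory):
    # Hypothetical rule standing in for an LLM: tap the first "Order" element.
    for line in observation.splitlines():
        if "Order" in line:
            return Action("tap", target=int(line[1:line.index("]")]))
    return Action("done")

screen = UINode("FrameLayout", children=[
    UINode("TextView", "Latte  $4"),
    UINode("Button", "Order", bounds=(100, 800, 300, 900), clickable=True),
])
agent = Agent(toy_policy)
print(agent.step("order a latte", screen, {"keyboard_open": False}))  # tap(200, 850)
```

Keeping memory inside the agent object mirrors the storage part of the brain module: every observation and decision is available to the next policy call, which is where a real system would plug in planning and reflection.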

3.2 Multi-Agent Framework

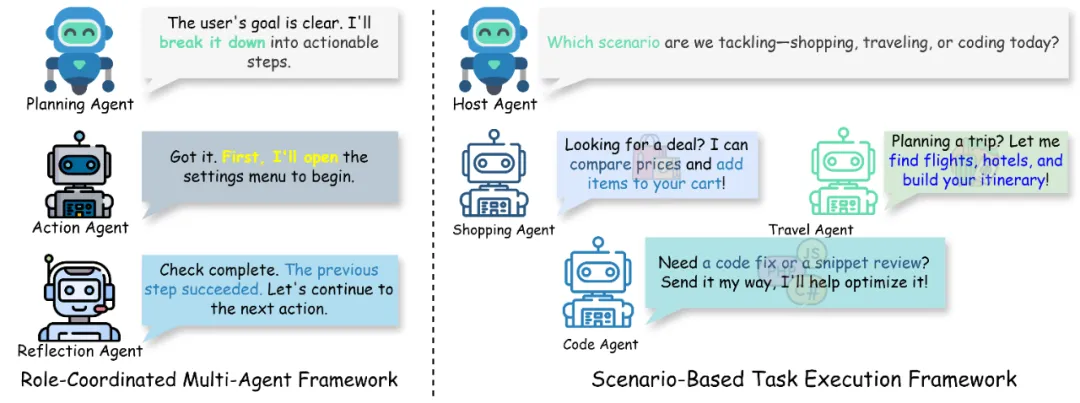

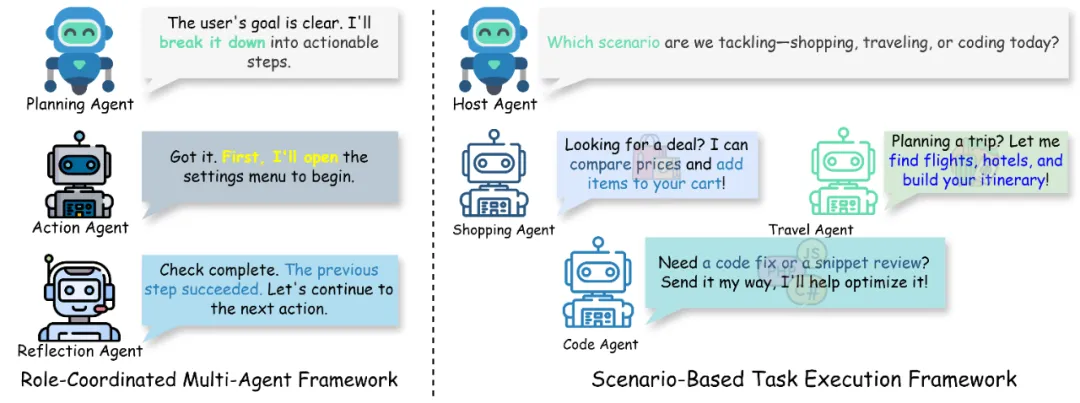

Figure 6 Taxonomy of multi-agent frameworks

- Role-Coordinated Multi-Agent Framework: Agents with different functions, such as planning, decision making, memory management, reflection, and tool invocation, collaborate through predefined workflows to complete tasks, as in MMAC-Copilot.

- Scenario-Based Task Execution Framework: Tasks are dynamically assigned to expert agents according to the task scenario, with each agent specialized for a particular scenario (such as shopping, coding, or navigation), which improves task success and efficiency; MobileExperts is one example.
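To make the contrast between the two multi-agent styles concrete, here is a toy sketch built on hypothetical role functions and a keyword router. The role-coordinated part runs fixed roles in a predefined order over a shared state; the scenario-based part picks one expert per task. Systems such as MMAC-Copilot and MobileExperts use LLMs for these decisions; the keyword scoring below is only a stand-in.

```python
def planner(state):
    # Role 1: break the task into steps (hard-coded stand-in for an LLM planner).
    state["plan"] = [f"open {state['app']}", f"search {state['item']}", "checkout"]
    return state

def executor(state):
    # Role 2: carry out each step (here the steps are only logged).
    state["log"] = [f"did: {step}" for step in state["plan"]]
    return state

def reflector(state):
    # Role 3: check the outcome against the plan.
    state["ok"] = len(state["log"]) == len(state["plan"])
    return state

def run_workflow(state, roles=(planner, executor, reflector)):
    """Role-coordinated: roles run in a fixed, predefined order."""
    for role in roles:
        state = role(state)
    return state

# Scenario-based: hypothetical keyword lists for each expert agent.
EXPERT_KEYWORDS = {
    "shopping": ("buy", "order", "cart"),
    "navigation": ("route", "directions", "navigate"),
    "coding": ("code", "script", "bug"),
}

def route(task, experts=EXPERT_KEYWORDS, default="general"):
    """Assign the task to the expert whose keywords it mentions most."""
    words = task.lower().split()
    scores = {name: sum(k in words for k in kws) for name, kws in experts.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else default

print(run_workflow({"app": "CoffeeApp", "item": "latte"})["ok"])  # True
print(route("Buy a latte and add it to my cart"))                # shopping
print(route("Get directions to the office"))                     # navigation
```

The design difference is where the control flow lives: in the first style it is fixed in `run_workflow`, while in the second a routing decision is made per task before any expert runs.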

3.3 Plan-Then-Act Framework

- This framework first generates a natural-language description of the next action and then locates the target control from that description. Work such as SeeAct, UGround, LiMAC, and ClickAgent demonstrates its effectiveness: separating the two stages improves the clarity, reliability, and adaptability of task execution and allows the planning and UI grounding modules to be improved independently.
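The two-stage structure can be illustrated with a small sketch. Assumptions: `plan` is a hard-coded stand-in for an LLM planner, and `ground` replaces a learned grounding model (such as those trained in the works above) with plain word-overlap (Jaccard) similarity between the action description and element labels. The point is the interface: the planner only produces text, and only the grounder maps text to a screen location, so either side can be swapped or improved independently.

```python
import re

def tokens(s):
    """Lowercase word set used for a crude similarity measure."""
    return set(re.findall(r"[a-z0-9]+", s.lower()))

def plan(goal, history):
    """Planning stage (stand-in for an LLM): emit a natural-language action description."""
    if not history:
        return "tap the search button"
    return "type the coffee name into the search box"

def ground(description, elements):
    """Grounding stage (stand-in for a grounding model): return the element whose
    label has the highest Jaccard similarity with the description."""
    d = tokens(description)
    def score(el):
        e = tokens(el["label"])
        return len(d & e) / len(d | e) if d | e else 0.0
    return max(elements, key=score)

elements = [
    {"label": "Search button", "center": (980, 120)},
    {"label": "Search box", "center": (540, 120)},
    {"label": "Menu", "center": (60, 120)},
]
step1 = plan("order a latte", history=[])
print(step1, "->", ground(step1, elements)["center"])  # tap the search button -> (980, 120)
```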

4. Large language models for phone automation

Figure 7 Taxonomy of models

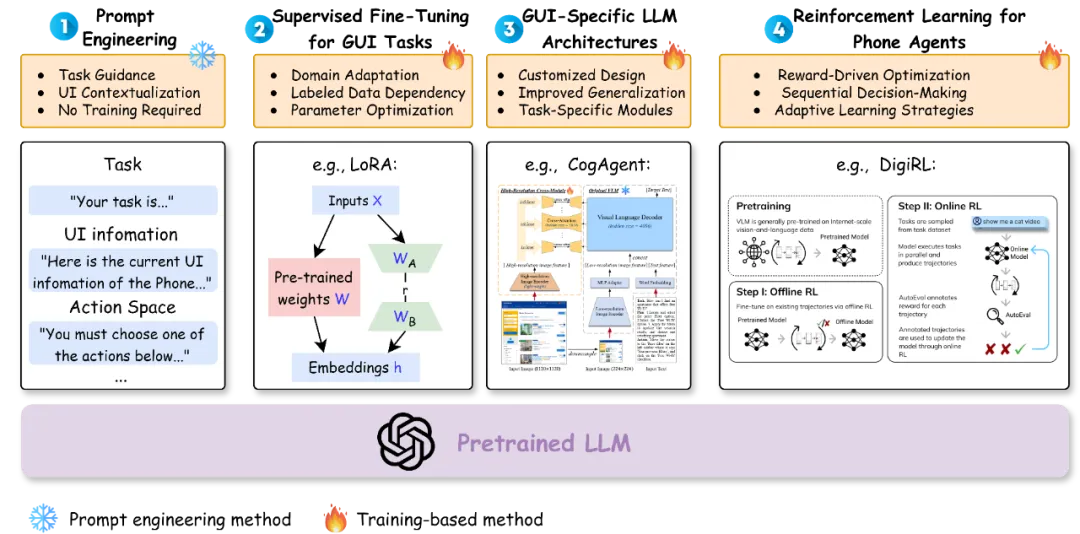

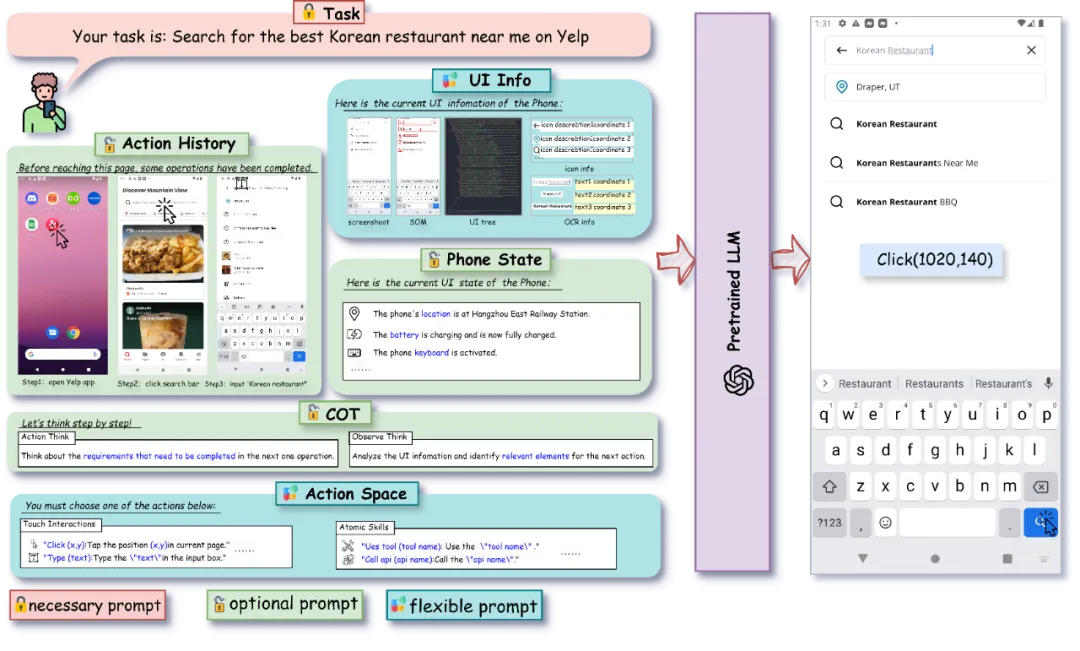

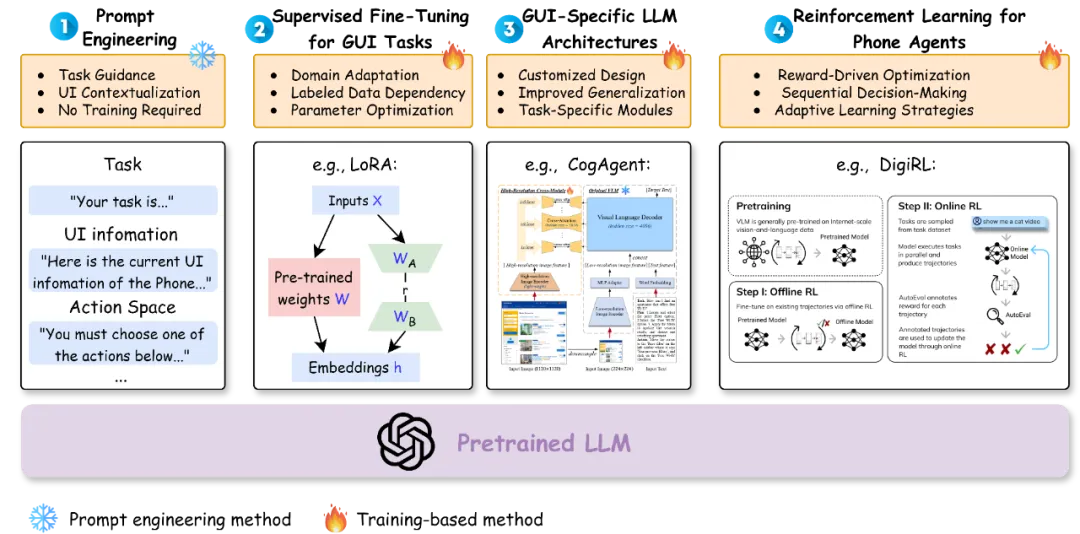

4.1 Prompt Engineering

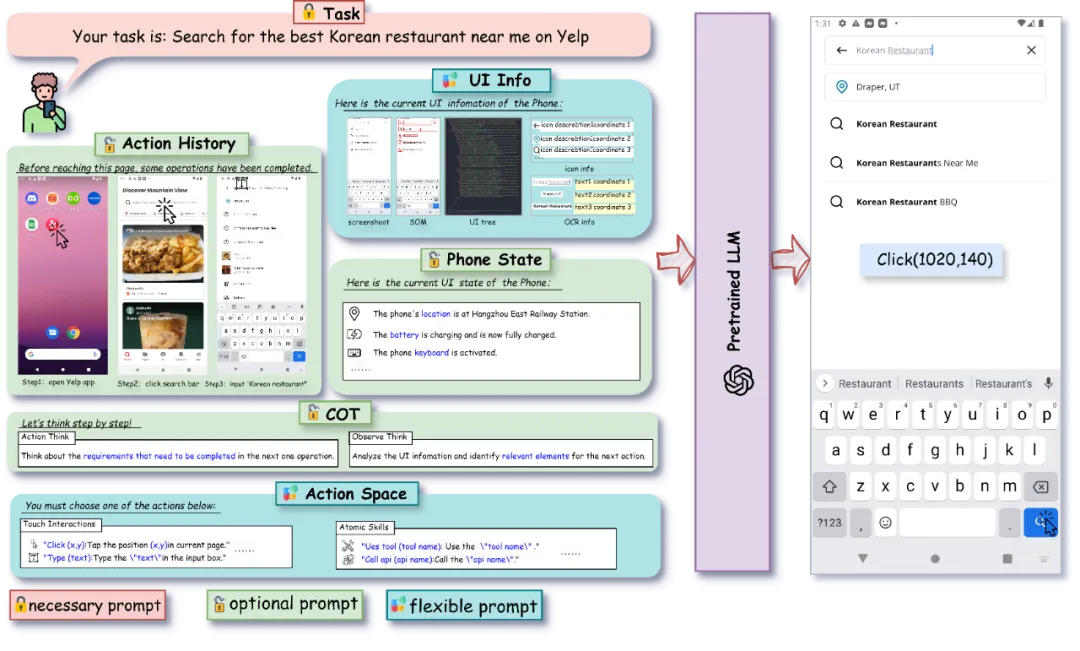

Figure 8 Prompt design

- Text-based prompts: The core architecture is a text-only LLM that interprets UI tree information to make decisions, as in DroidBot-GPT and Enabling Conversational. These methods have made progress across various apps but still struggle to understand and use global information about the screen.

- Multimodal prompts: Multimodal large language models (MLLMs) integrate visual and textual information, making decisions from screenshots plus supplementary UI information. Approaches include SoM-based methods that output element indices (e.g., MM-Navigator, AppAgent) and methods that output coordinates directly (e.g., VisionTasker, the Mobile-Agent family). These improve accuracy and robustness but still face challenges in UI grounding accuracy.
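The difference between SoM-style index output and direct coordinate output shows up in how the model's text is turned into a tap. The sketch below assumes two hypothetical output formats, `tap(<id>)` for a mark index and `tap(<x>, <y>)` with coordinates normalized to [0, 1]; real systems each define their own action syntax.

```python
import re

def build_marks(boxes):
    """Set-of-Marks: give each candidate element box a numeric ID starting at 1.
    A real system would also draw these IDs onto the screenshot."""
    return {i: box for i, box in enumerate(boxes, start=1)}

def parse_action(output, marks, screen_w, screen_h):
    """Turn model output text into a pixel tap location (x, y).

    'tap(3)'         -> index-based (SoM style): center of marked box 3
    'tap(0.5, 0.25)' -> direct coordinates, normalized to [0, 1]
    """
    m = re.fullmatch(r"\s*tap\(\s*(\d+)\s*\)\s*", output)
    if m:
        left, top, right, bottom = marks[int(m.group(1))]
        return ((left + right) // 2, (top + bottom) // 2)
    m = re.fullmatch(r"\s*tap\(\s*([\d.]+)\s*,\s*([\d.]+)\s*\)\s*", output)
    if m:
        x, y = float(m.group(1)), float(m.group(2))
        return (round(x * screen_w), round(y * screen_h))
    raise ValueError(f"unparseable action: {output!r}")

marks = build_marks([(0, 0, 100, 100), (200, 400, 400, 500)])
print(parse_action("tap(2)", marks, 1080, 2400))          # (300, 450)
print(parse_action("tap(0.5, 0.25)", marks, 1080, 2400))  # (540, 600)
```

The index form can only select elements that were marked, but spares the model from predicting exact pixels; the coordinate form can reach any point, which is why its accuracy depends directly on UI grounding quality.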

4.2 Training-Based Methods

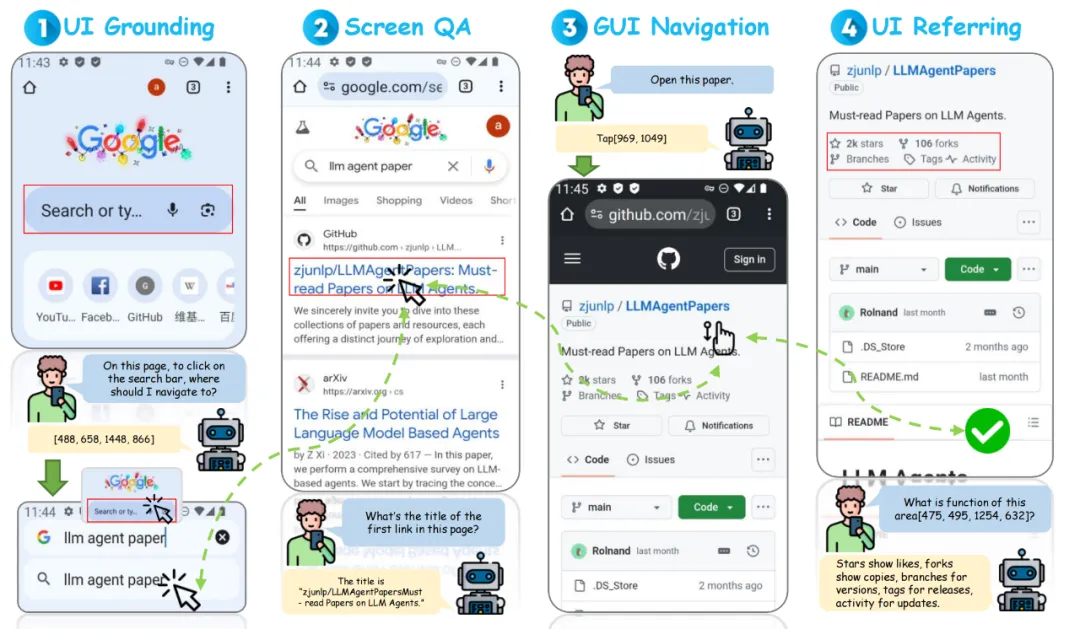

- GUI task-specific model architectures

- General purpose: Models such as Auto-GUI, CogAgent, ScreenAI, CoCo-Agent, and MobileFlow are designed to strengthen direct GUI interaction, high-resolution visual recognition, comprehensive environment perception, and conditional action prediction across diverse apps and interface tasks.

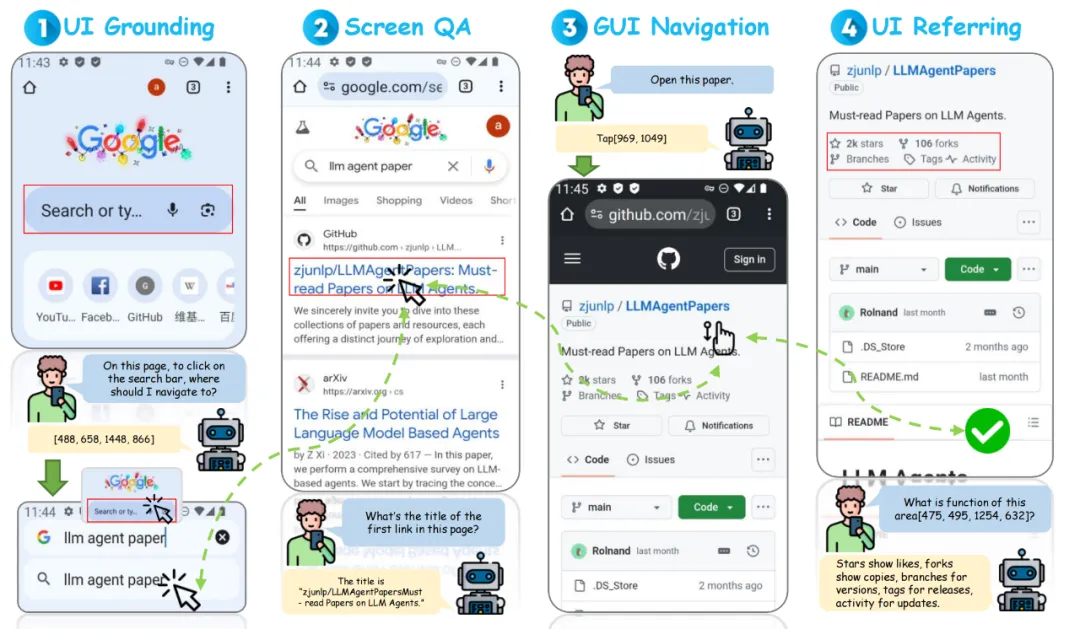

Figure 9 Different UI understanding tasks

- Domain specific: These models focus on screen understanding tasks, including UI grounding (e.g., LVG, UI-Hawk), UI referring (e.g., Ferret-UI, UI-Hawk), and screen question answering (e.g., ScreenAI, WebVLN, UI-Hawk), using specialized techniques to strengthen agents' ability to interact with complex user interfaces.

- Supervised Fine-Tuning

- General purpose: Work such as SeeClick, GUICourse, GUI Odyssey, and TinyClick fine-tunes models on task-specific datasets to improve GUI grounding, OCR, cross-app navigation, and efficiency.

- Domain specific: Fine-tuning targets particular tasks; for example, ReALM addresses reference resolution and IconDesc generates alternative text for UI icons, improving model performance in those domains.

- Reinforcement Learning

- Phone agents: DigiRL, DistRL, and AutoGLM use reinforcement learning to train agents that adapt to dynamic phone environments, improving decision making and success rates; AutoGLM also works across platforms.

- Web Agents: ETO, Agent Q, and AutoWebGLM use reinforcement learning to adapt agents to complex web environments, learning from interactions to improve decision making and, in turn, performance on web navigation and operation tasks.

- Windows Agent: ScreenAgent uses reinforcement learning to enable agents to interact with real computer screens in a Windows environment and complete multi-step tasks, demonstrating its potential in desktop GUI automation.
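For the training-based methods above, a common first step is turning recorded episodes into training signals. The sketch below shows one possible way under assumed conventions: each step of a demonstration becomes a supervised (prompt, target) pair with the action serialized as JSON, and `discounted_returns` spreads a single end-of-episode success signal back over the steps, the kind of sparse reward that RL-trained GUI agents often have to learn from. The prompt layout, action schema, and function names are hypothetical, not those of any specific paper.

```python
import json

def trajectory_to_sft(goal, steps):
    """Convert one demonstration into per-step supervised examples.

    steps: list of (screen_text, action_dict) pairs in execution order.
    """
    examples, history = [], []
    for screen_text, action in steps:
        prompt = (
            f"Goal: {goal}\n"
            f"Previous actions: {'; '.join(history) or 'none'}\n"
            f"Screen:\n{screen_text}\n"
            "Next action (JSON):"
        )
        target = json.dumps(action, sort_keys=True)  # stable key order
        examples.append({"prompt": prompt, "target": target})
        history.append(target)
    return examples

def discounted_returns(num_steps, success, gamma=0.9):
    """Sparse reward: 1.0 at the final step only if the task succeeded.
    Returns G_t = gamma**(T-1-t) * R for each step t."""
    final_reward = 1.0 if success else 0.0
    return [final_reward * gamma ** (num_steps - 1 - t) for t in range(num_steps)]

demo = [
    ("[0] button: Search", {"type": "tap", "id": 0}),
    ("[0] box: Search", {"type": "input", "text": "latte"}),
]
for ex in trajectory_to_sft("order a latte", demo):
    print(ex["target"])
print(discounted_returns(3, success=True, gamma=0.5))  # [0.25, 0.5, 1.0]
```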

5. Data sets and benchmarks

5.1 Relevant data sets

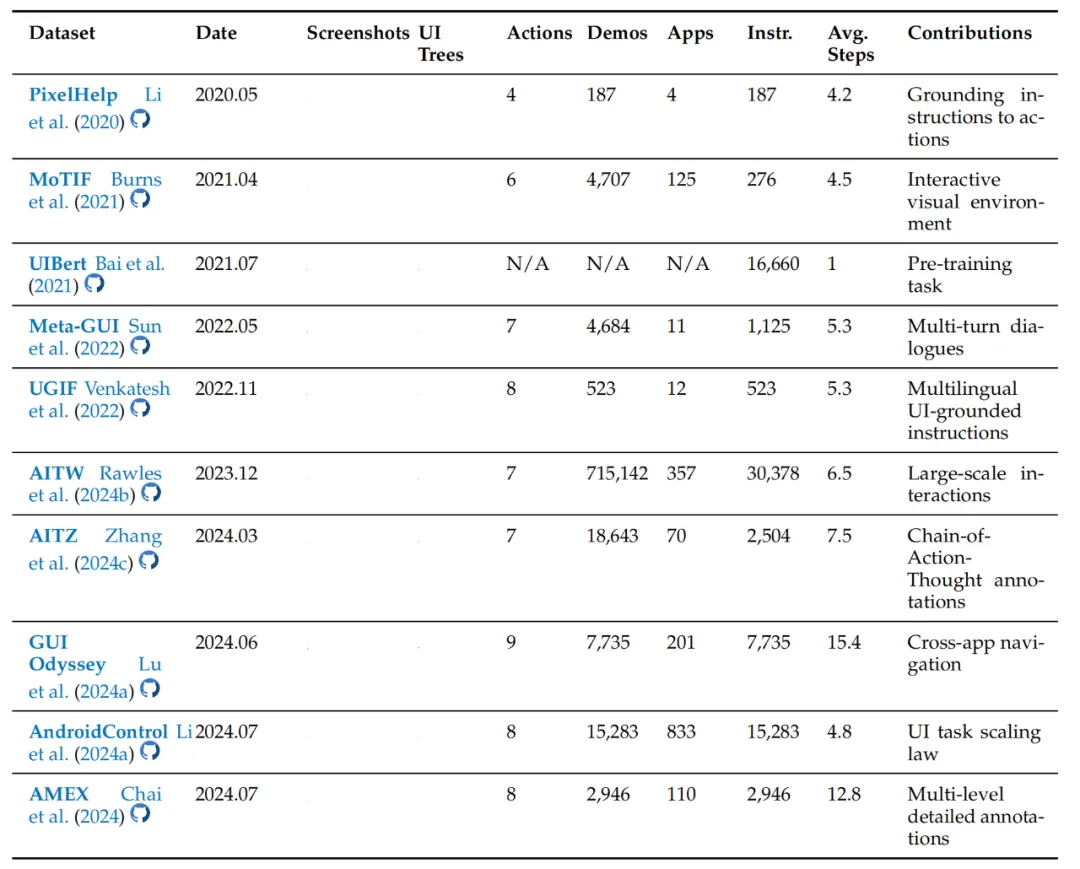

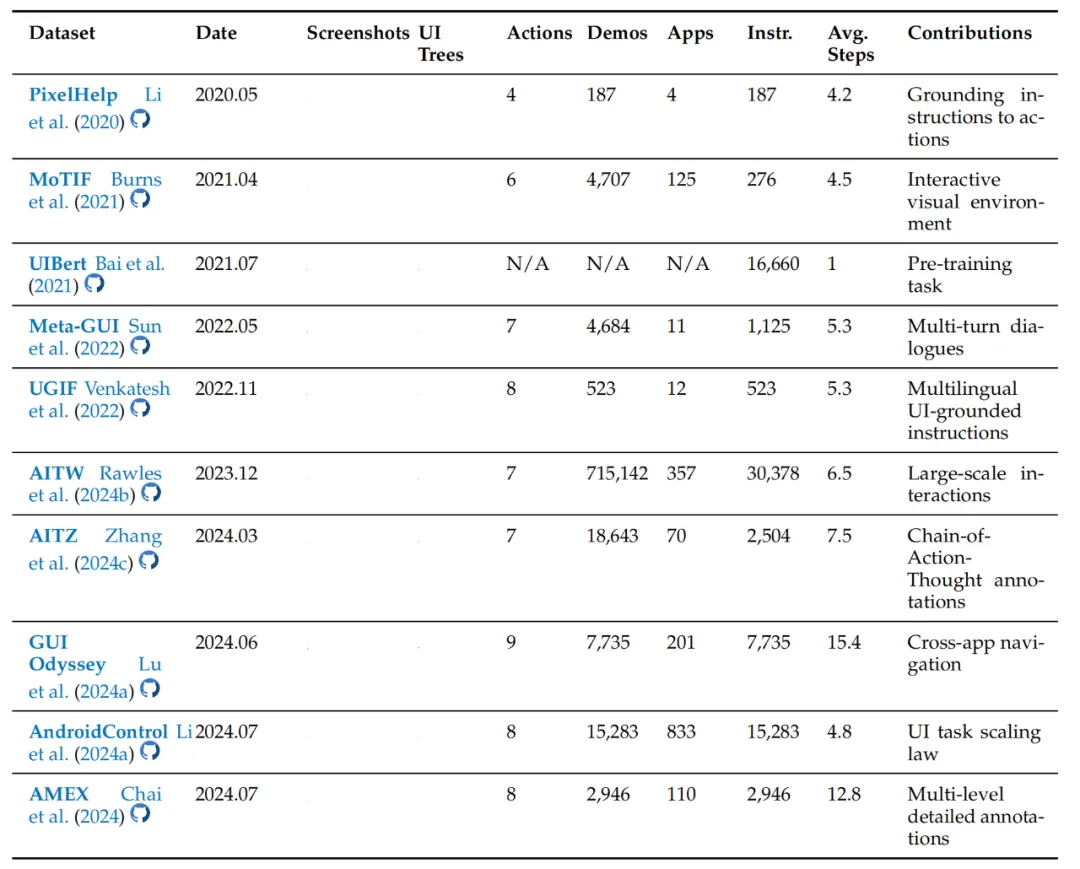

Table 1 Data sets

- Early datasets: PixelHelp maps natural language instructions to UI actions, UIBert improves UI understanding through pre-training, Meta-GUI collects dialogues and GUI operation traces, UGIF addresses multilingual UI instruction following, and MoTIF introduces task feasibility and uncertainty.

- Large-scale datasets: Android In The Wild (AITW) and Android In The Zoo (AITZ) provide large volumes of device interaction data, GUI Odyssey supports cross-app navigation training and evaluation, AndroidControl studies how data scale affects agent performance, and AMEX provides detailed annotations that strengthen an agent's understanding of UI elements.

5.2 Benchmarks

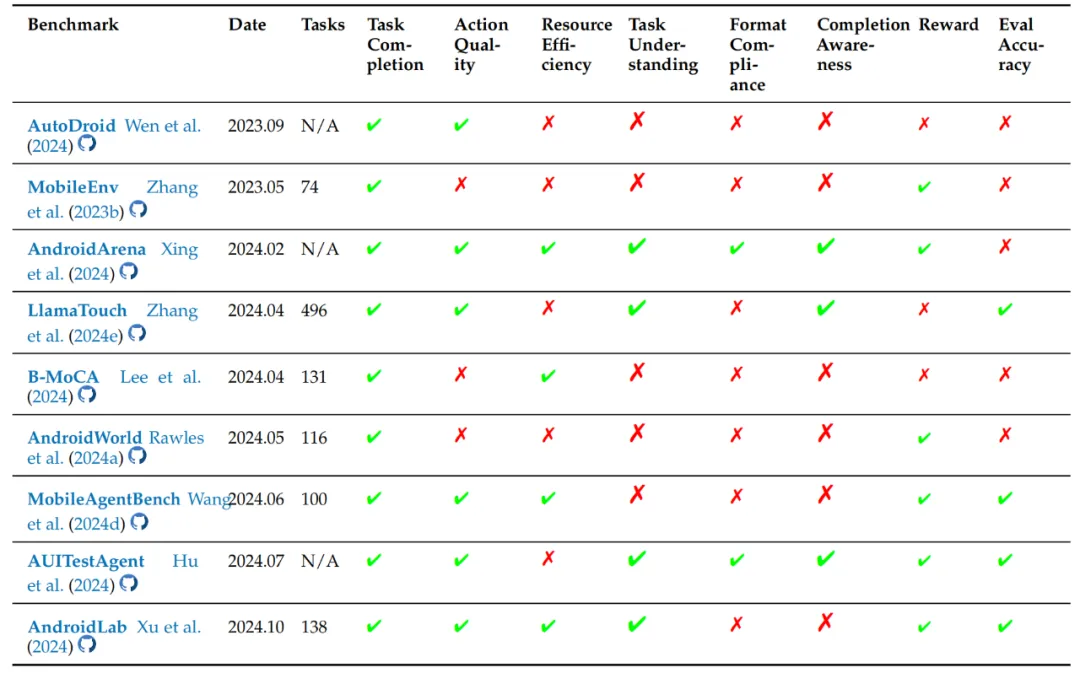

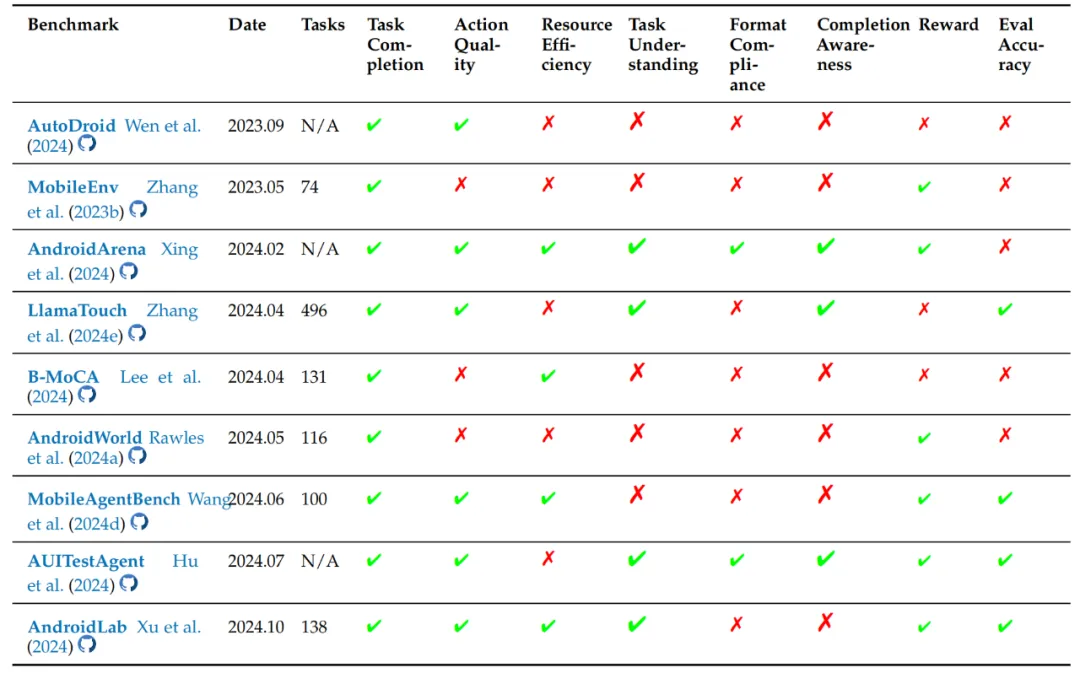

Table 2 Benchmarks

- Evaluation Pipelines: MobileEnv provides a general-purpose training and evaluation platform; AndroidArena evaluates LLM agents in complex Android environments; LlamaTouch enables on-device execution and evaluation of mobile UI tasks; B-MoCA evaluates mobile device control agents across different configurations; AndroidWorld provides a dynamic, parameterized task environment; MobileAgentBench offers efficient benchmarking for mobile LLM agents; AUITestAgent performs automated GUI testing; and AndroidLab provides a systematic framework and benchmark.

- Evaluation Metrics

- Task completion metrics: Task completion rate, subgoal success rate, and end-to-end task completion rate evaluate how effectively the agent completes tasks.

- Action execution quality metrics: Action accuracy, correct steps, correct trajectories, operation logic, and reasoning accuracy measure how accurate and logical the agent's actions are.

- Resource utilization and efficiency metrics: Resource consumption, step efficiency, and the reverse redundancy ratio evaluate how efficiently the agent uses resources.

- Task understanding and reasoning metrics: Oracle accuracy, point accuracy, reasoning accuracy, and key-information mining ability examine the agent's understanding and reasoning.

- Format and compliance metrics: Verify that the output of the agent complies with format constraints.

- Completion awareness and reflection metrics: Assess the agent's ability to recognize task boundaries, such as when a task is finished, and to learn from them.

- Evaluation accuracy and reliability metrics: Ensure consistency and reliability of the evaluation process.

- Reward and overall performance metrics: Task reward and average reward provide an overall evaluation of agent performance.
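Several of these metric families reduce to simple ratios. The sketch below computes three of them over a hypothetical `Episode` record. The definitions are simplified assumptions: action accuracy compares predicted and reference actions step by step, and step efficiency is optimal steps divided by steps taken, averaged over successful episodes. Individual benchmarks define their own versions differently.

```python
from dataclasses import dataclass

@dataclass
class Episode:
    success: bool       # did the task finish correctly?
    predicted: list     # agent's actions, in order
    reference: list     # annotated ground-truth actions, in order
    optimal_steps: int  # length of the shortest known solution

def task_success_rate(episodes):
    return sum(e.success for e in episodes) / len(episodes)

def action_accuracy(episodes):
    """Fraction of aligned steps where the predicted action matches the reference.
    zip() stops at the shorter sequence, so extra steps are not scored here."""
    correct = total = 0
    for e in episodes:
        for pred, ref in zip(e.predicted, e.reference):
            correct += pred == ref
            total += 1
    return correct / total if total else 0.0

def step_efficiency(episodes):
    """Mean of optimal_steps / steps_taken over successful episodes."""
    ratios = [e.optimal_steps / len(e.predicted)
              for e in episodes if e.success and e.predicted]
    return sum(ratios) / len(ratios) if ratios else 0.0

episodes = [
    Episode(True, ["tap:1", "type:latte", "tap:5"], ["tap:1", "type:latte", "tap:5"], 3),
    Episode(False, ["tap:2", "back"], ["tap:1", "tap:4"], 2),
    Episode(True, ["tap:1", "back", "tap:1", "tap:3"], ["tap:1", "tap:3"], 2),
]
print(task_success_rate(episodes), action_accuracy(episodes), step_efficiency(episodes))
```

The third episode shows why the families are complementary: it succeeds, yet its detour lowers both action accuracy and step efficiency.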

6. Challenges and future directions

6.1 Data set development and fine-tuning scalability

- The lack of diversity in existing datasets requires the development of large-scale, multimodal datasets that cover a wide range of applications, user behaviors, languages, and device types.

- Address the weak out-of-domain performance of fine-tuned models by exploring hybrid training methods, unsupervised learning, transfer learning, and auxiliary tasks that reduce reliance on large-scale data.

6.2 Lightweight and efficient on-device deployment

- Overcome the compute and memory limits of mobile devices with techniques such as model pruning, quantization, and efficient Transformer architectures, as in Octopus v2 and Lightweight Neural App Control.

- Use specialized hardware accelerators and edge computing to reduce reliance on the cloud, enhance privacy, and improve responsiveness.
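Quantization, one of the techniques named above, can be illustrated with generic symmetric per-tensor int8 quantization. This is a textbook scheme shown for intuition, not the method used by Octopus v2 or Lightweight Neural App Control; real deployments typically use per-channel or group-wise scales and dedicated kernels. `weight_memory_gb` is a hypothetical back-of-the-envelope helper that counts weight storage only, ignoring activations and runtime overhead.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: w ~= scale * q with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    return [scale * v for v in q]

def weight_memory_gb(num_params, bits_per_weight):
    """Storage for the weights alone, in decimal gigabytes."""
    return num_params * bits_per_weight / 8 / 1e9

weights = [0.5, -1.27, 0.0, 1.27]
q, scale = quantize_int8(weights)
print(q)                                                    # [50, -127, 0, 127]
print(weight_memory_gb(3e9, 16), weight_memory_gb(3e9, 4))  # 6.0 1.5
```

Each weight then costs one byte instead of two (fp16) or four (fp32), at the price of a rounding error of at most half a quantization step per weight.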

6.3 User-centric adaptation: interaction and personalization

- Improve the agent's ability to understand user intent, reduce manual intervention, and support voice commands, gestures, and continuous learning from user feedback.

- Personalize agents by combining multiple learning techniques so they can quickly adapt to new tasks and user-specific contexts without extensive retraining.

6.4 Improving model grounding and reasoning

- Improve the precise mapping of language instructions to UI elements by integrating advanced vision models, large-scale annotations, and effective fusion techniques, strengthening multimodal grounding.

- Enhance agents' reasoning, long-horizon planning, and adaptability in complex scenarios by developing new architectures, memory mechanisms, and reasoning algorithms that go beyond current LLM capabilities.

6.5 Standardized assessment criteria

- Establish a common benchmark covering multiple tasks, application types and interaction modes, and provide standardized metrics, scenarios and evaluation protocols to facilitate fair comparison and comprehensive evaluation.

6.6 Ensuring reliability and security

- Develop robust security protocols, error-handling techniques, and privacy-preserving methods that guard against attacks, data leaks, and unintended actions, protecting user information and trust.

- Implement continuous monitoring and validation processes to detect and mitigate risks in real time, ensuring that agents behave predictably, respect privacy, and maintain stable performance under a variety of conditions.
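One simple form of the error handling and protection against unintended actions described above is a confirmation gate in front of the action module. In the sketch below, the list of sensitive terms and the `execute` and `ask_user` callbacks are hypothetical; whole-word matching is a crude stand-in for the risk classifiers or policies a production agent would need.

```python
import re

# Hypothetical terms that mark an action as high-risk.
SENSITIVE_TERMS = ("pay", "transfer", "delete", "send", "confirm order")

def needs_confirmation(action_description, terms=SENSITIVE_TERMS):
    """True if any sensitive term appears as a whole word or phrase."""
    text = action_description.lower()
    return any(re.search(rf"\b{re.escape(term)}\b", text) for term in terms)

def guarded_execute(action_description, execute, ask_user):
    """Run a risky action only after explicit user approval."""
    if needs_confirmation(action_description) and not ask_user(action_description):
        return "blocked"
    return execute(action_description)

print(guarded_execute("Tap Pay now", execute=lambda a: f"ran: {a}",
                      ask_user=lambda a: False))  # blocked
```

Word boundaries keep "display settings" from matching "pay"; the gate fails closed, so a risky action runs only when `ask_user` explicitly returns a truthy answer.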

7. Summary

- Reviews developments in LLM-driven phone automation, including frameworks (single-agent, multi-agent, plan-then-act), modeling approaches (prompt engineering, training-based methods), and datasets and benchmarks.

- Analyzes how LLMs improve the efficiency, intelligence, and adaptability of phone automation, along with the remaining challenges and future directions.

- Emphasizes the importance of standardized benchmarks and evaluation metrics for advancing the field and enabling fair comparison of models and methods.

Looking ahead, with better model architectures, optimized on-device inference, and multimodal data integration, LLM-based phone GUI agents are expected to achieve greater autonomy in complex tasks, incorporate more AI paradigms, and give users a seamless, personalized, and secure experience.