
# News · 2025-01-07

This article is reprinted with authorization from the AIGC Studio WeChat official account; please contact the original source for permission to reprint.

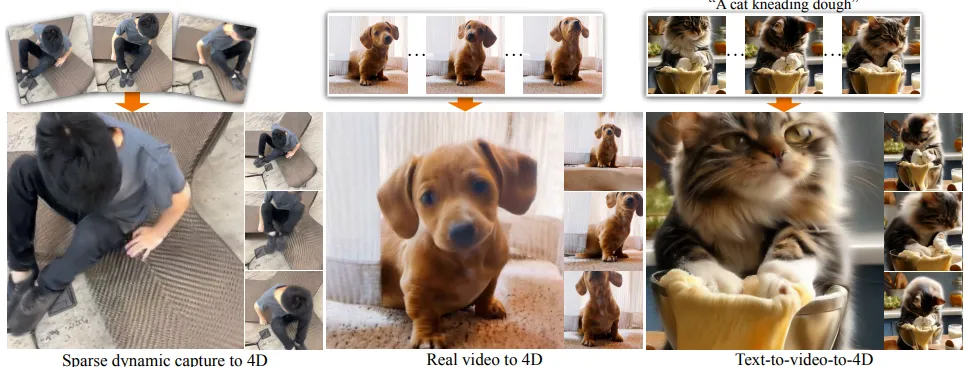

A breakthrough in 4D reconstruction from monocular video! Google DeepMind and collaborating teams have released CAT4D, a multi-view video diffusion model that takes a single-view (monocular) video as input and turns it into a dynamic 3D scene whose viewpoint can be freely dragged and explored.

The model can control viewpoint and time independently, producing three kinds of output: time changing while the viewpoint stays fixed, the viewpoint changing while time stays fixed, and both viewpoint and time changing together.

Paper: https://arxiv.org/pdf/2411.18613

Project page: https://cat-4d.github.io/

CAT4D: Create Anything in 4D with Multi-View Video Diffusion Models

Given a monocular video as input, we use a multi-view video diffusion model to generate multi-view videos from novel viewpoints. These generated videos are then used to reconstruct the dynamic 3D scene as a set of deformable 3D Gaussians.
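To make "deformable 3D Gaussians" a little more concrete, here is a minimal Python sketch of a single such primitive. It assumes the simplest possible deformation, a center that moves linearly with time, and leaves out rotation and color. The class name, its fields and the linear motion model are hypothetical illustrations, not CAT4D's actual representation, which is described in the paper.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class DeformableGaussian:
    """Hypothetical deformable 3D Gaussian (illustration only)."""
    mean: np.ndarray      # canonical 3D center, shape (3,)
    velocity: np.ndarray  # assumed linear motion per unit time, shape (3,)
    scale: np.ndarray     # per-axis standard deviations, shape (3,)
    opacity: float

    def center_at(self, t: float) -> np.ndarray:
        # Assumed deformation model: the center drifts linearly with time t.
        return self.mean + t * self.velocity

    def density(self, x: np.ndarray, t: float) -> float:
        # Axis-aligned Gaussian falloff around the time-dependent center
        # (rotation omitted for brevity).
        d = (x - self.center_at(t)) / self.scale
        return self.opacity * float(np.exp(-0.5 * np.dot(d, d)))


g = DeformableGaussian(
    mean=np.zeros(3),
    velocity=np.array([1.0, 0.0, 0.0]),
    scale=np.ones(3),
    opacity=0.8,
)
print(g.center_at(1.0))                          # [1. 0. 0.]
print(g.density(np.array([1.0, 0.0, 0.0]), 1.0))  # 0.8
```

The point of the design is that one set of primitives represents every moment: evaluating the same Gaussian at a different `t` moves it, so a dynamic scene needs no separate reconstruction per frame.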

At the heart of CAT4D is the multi-view video diffusion model, which enables separate control over camera motion and scene motion. We demonstrate this by giving it 3 input images (with camera poses) and generating three types of output sequences: 1) fixed viewpoint, changing time; 2) changing viewpoint, fixed time; and 3) changing viewpoint and changing time.

We compare our approach with baselines across different tasks and scenes.

Given 3 input images, we generate three types of output sequences:

1. Fixed viewpoint, changing time

2. Changing viewpoint, fixed time

3. Changing viewpoint, changing time
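These three modes can be thought of as different paths through a grid of (viewpoint, time) conditions, where each generated frame is requested for one camera pose and one moment. The Python sketch below uses hypothetical integer indices for viewpoints and times. It only illustrates the sampling patterns and is not CAT4D's interface.

```python
from typing import List, Tuple

# A frame request is a (viewpoint index, time index) pair.
# These index schemes are hypothetical illustrations, not CAT4D's API.
Frame = Tuple[int, int]


def fixed_view_changing_time(view: int, num_times: int) -> List[Frame]:
    """Type 1: the camera stays put while the scene moves."""
    return [(view, t) for t in range(num_times)]


def changing_view_fixed_time(num_views: int, time: int) -> List[Frame]:
    """Type 2: the camera moves through one frozen moment."""
    return [(v, time) for v in range(num_views)]


def changing_view_changing_time(n: int) -> List[Frame]:
    """Type 3: camera and scene both advance one step per frame."""
    return [(i, i) for i in range(n)]


print(fixed_view_changing_time(0, 3))   # [(0, 0), (0, 1), (0, 2)]
print(changing_view_fixed_time(3, 0))   # [(0, 0), (1, 0), (2, 0)]
print(changing_view_changing_time(3))   # [(0, 0), (1, 1), (2, 2)]
```

The second pattern corresponds to the "bullet time" effect: time is frozen while the camera moves around the scene.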

Given just a few posed images of a dynamic scene, we can create a "bullet time" effect by reconstructing a static 3D scene corresponding to the moment captured in one of the input views. The three input images are shown on the left; the first is the target bullet-time frame.

Comparison of dynamic scene reconstruction from monocular video on the DyCheck dataset.
