
#News ·2025-01-07

For a long time, NIO (Weilai) has run several similar knowledge base systems inside the company. Because these systems do not interoperate, they form data silos, which makes knowledge hard to find and inconvenient to use. Users do not know where to look for knowledge, and even when they find relevant data, they cannot be sure it is complete and accurate.

Against this background, we set up a knowledge project to build a company-level knowledge platform, with the following goals:

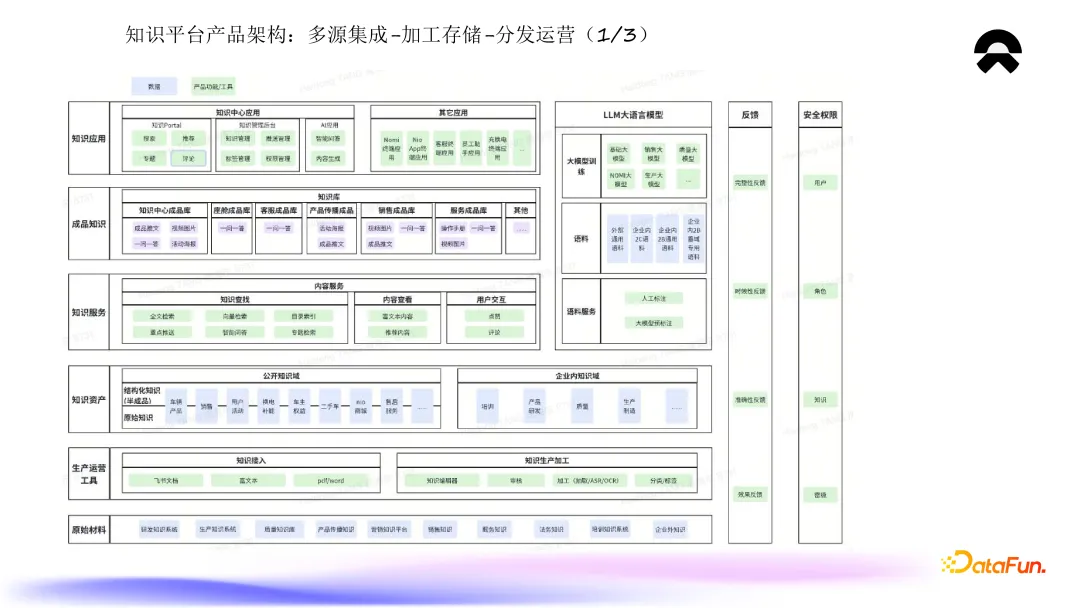

The first part is the overall architecture of the knowledge platform, as shown in the figure below. It may look complex, but it becomes clear once you understand the layers.

Start at the bottom, with what we call the "primary material layer." This layer holds the original corpus provided by the various knowledge base systems within the company. As mentioned earlier, these systems used to exist as data silos with little interoperability. They serve as the basic data source of the knowledge platform and cover knowledge in production, research and development, quality, and other fields.

On top of these raw materials, we have built a unified set of knowledge production and operation tools that cover knowledge access, production, and processing. Through this tool suite, a variety of original materials (such as Feishu documents, rich text documents, and PDF and Word files) are integrated and connected to the knowledge platform. The knowledge production process includes editing, auditing, processing, and analysis, and aims to turn fragmented source materials into unified knowledge assets.

According to its confidentiality level, we divide corporate knowledge assets into two categories: public knowledge and internal knowledge. Public knowledge is further subdivided into structured and unstructured knowledge. Internal knowledge covers training, product research and development, sales, quality management, and manufacturing.

On this basis, we build a series of knowledge services, including content services and corpus services for large models. The content services cover knowledge search, viewing, and related interaction functions. We provide several search methods, such as full-text search, content search, vector search, directory index, and focus push, and we support rich text viewing and recommended content display. The knowledge service platform also includes a user interaction component to ensure that knowledge is effectively transferred and applied.

After this process, finished knowledge bases take shape, such as those for customer service and sales, containing Q&A databases, finished posts, and operation manuals. Based on this finished knowledge, we can build diverse knowledge applications, such as knowledge portals, a knowledge management backend, and AI applications. The knowledge platform also underpins company-wide intelligent services, such as NIO APP, Nomi, and the customer service systems, which rely on it to build knowledge-related intelligent applications.

In addition, the knowledge platform serves large models by providing corpus for training and fine-tuning, so that professional models for various fields can be built on top of base large models. This work includes providing corpus services for different internal and external needs of the enterprise and promoting the application and development of large models.

Finally, we set up a feedback link to handle problems found while knowledge is being used, such as errors or expired content. These problems are fed back to the original production stage, where the knowledge is reproduced and reprocessed to resolve them. We also have a comprehensive permission management system, including user role settings and other security measures, to keep the knowledge platform secure and prevent information leakage.

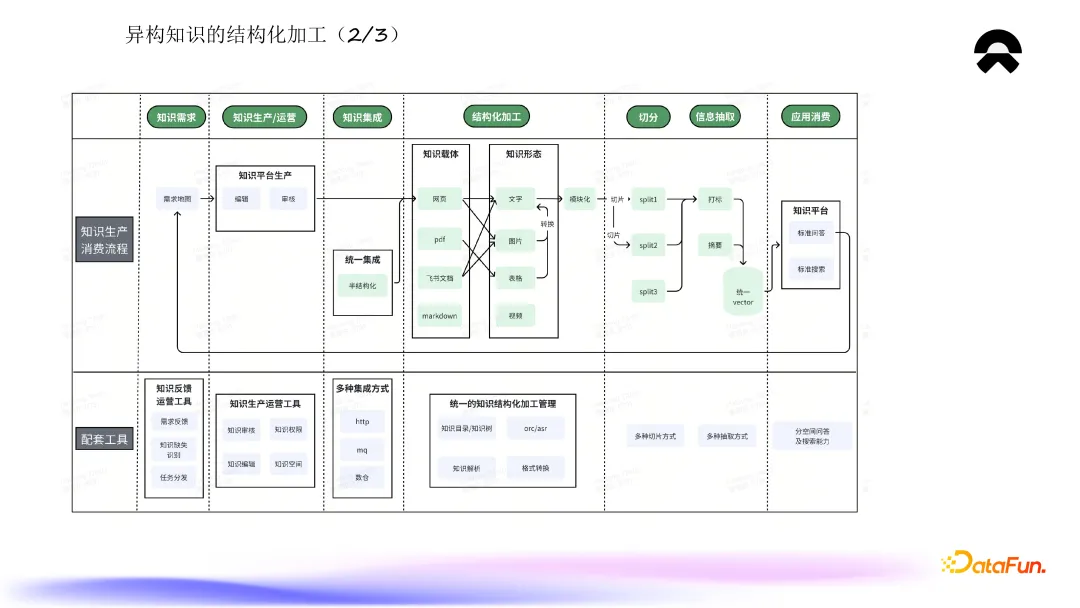

The second part is the architecture of the structured processing system for heterogeneous knowledge. Knowledge takes many forms, and turning unstructured information of all kinds into knowledge that can be applied directly requires a long and complex processing pipeline.

In the figure above, the detailed steps of knowledge processing are listed vertically, while the processing flow and the tools built for it are shown horizontally. The flow starts with knowledge demand analysis, which defines the required knowledge content and builds a demand map accordingly. It then enters the knowledge production and operation stage, in which the platform provides functions such as editing and auditing. We have developed corresponding production and operation tools to implement these functions.

The knowledge produced through this process, together with knowledge integrated from elsewhere, is brought into the knowledge platform. To achieve this, we use a variety of integration methods, such as HTTP, MR, and the data warehouse, to integrate the knowledge resources of every knowledge silo within the company. The carrier of knowledge can be a PDF document, a Feishu document, code, or a web page. Forms include text, pictures, tables, and videos. To support structured processing, we have developed a unified set of structured processing tools. These tools convert knowledge into text, modularize it, and then perform operations such as segmentation and information extraction. Specifically, the text is divided into fragments according to defined rules, the fragments are annotated and summarized, and the results are stored in the database in a unified form. Various segmentation methods and information extraction techniques are used.
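The segmentation, annotation, and summary steps can be illustrated with a minimal Python sketch. Everything here is an assumption made for illustration: the `Fragment` type, the paragraph-packing rule, the `max_chars` limit, the vocabulary-based tagging, and the first-sentence summary are hypothetical stand-ins, not the platform's actual rules or tools.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Fragment:
    doc_id: str
    index: int
    text: str
    tags: list[str] = field(default_factory=list)
    summary: str = ""


def split_into_fragments(doc_id: str, text: str, max_chars: int = 200) -> list[Fragment]:
    """Split on blank lines, then pack paragraphs into fragments of up to max_chars.

    A single paragraph longer than max_chars is kept whole in this sketch.
    """
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    fragments: list[Fragment] = []
    buffer = ""
    for para in paragraphs:
        candidate = f"{buffer}\n{para}" if buffer else para
        if buffer and len(candidate) > max_chars:
            fragments.append(Fragment(doc_id, len(fragments), buffer))
            buffer = para
        else:
            buffer = candidate
    if buffer:
        fragments.append(Fragment(doc_id, len(fragments), buffer))
    return fragments


def annotate(fragment: Fragment, vocabulary: set[str]) -> Fragment:
    """Tag the fragment with vocabulary terms it contains and add a naive summary."""
    words = set(re.findall(r"[a-z]+", fragment.text.lower()))
    fragment.tags = sorted(words & vocabulary)
    # Hypothetical stand-in for summary generation: keep the first sentence.
    fragment.summary = re.split(r"(?<=[.!?])\s", fragment.text, maxsplit=1)[0]
    return fragment


text = "Battery swap takes minutes. It is automated.\n\nQuality checks run daily."
frags = [annotate(f, {"battery", "quality"}) for f in split_into_fragments("d1", text, max_chars=50)]
```

In a real pipeline the summary and tags would more likely come from a model or a curated taxonomy; the point of the sketch is only the order of operations: split, annotate, then store.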

Finally, the knowledge platform supports various consumption functions, such as knowledge search and knowledge question answering, which operate on the knowledge space. Feedback generated during consumption flows back to the knowledge demand module and updates the demand map, forming a complete closed loop.

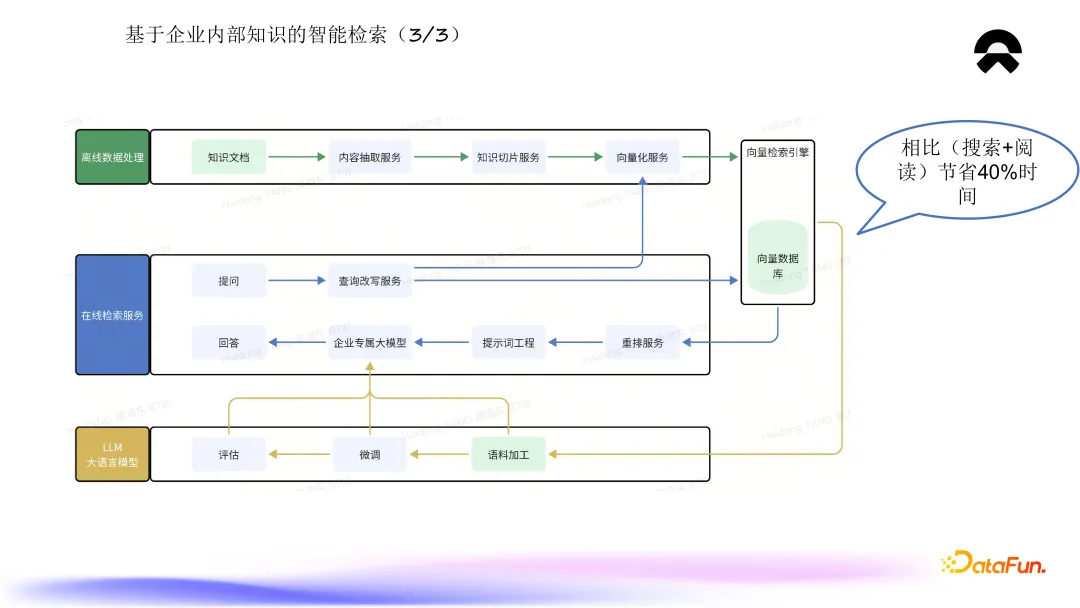

The third part is the architecture of intelligent retrieval for internal enterprise knowledge, that is, RAG (Retrieval-Augmented Generation) in the conventional sense.

As shown in the figure above, NIO's knowledge platform implements RAG as follows. First, whenever a knowledge document is updated, the content extraction service extracts the text of the document. The knowledge slicing service then splits the text into slices, which are vectorized and stored in the vector database.
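The offline indexing path (extract, slice, vectorize, store) might look roughly like the sketch below. It is a toy under stated assumptions: `embed` is a hashed bag-of-words stand-in for a real embedding model, `slice_text` uses a hypothetical fixed word count, and `VectorStore` is an in-memory list rather than a real vector database.

```python
import hashlib
import math

DIM = 64  # hypothetical embedding size for this toy example


def embed(text: str) -> list[float]:
    """Toy embedding: hash each token into a bucket, then L2-normalize."""
    vec = [0.0] * DIM
    for token in text.lower().split():
        bucket = int(hashlib.md5(token.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def slice_text(text: str, size: int = 8) -> list[str]:
    """Split text into slices of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


class VectorStore:
    """In-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self.rows: list[tuple[str, str, list[float]]] = []  # (doc_id, slice, vector)

    def delete_document(self, doc_id: str) -> None:
        self.rows = [row for row in self.rows if row[0] != doc_id]

    def upsert_document(self, doc_id: str, text: str) -> int:
        """Re-index a document on every update: drop old slices, store new ones."""
        self.delete_document(doc_id)
        slices = slice_text(text)
        self.rows.extend((doc_id, s, embed(s)) for s in slices)
        return len(slices)


store = VectorStore()
first = store.upsert_document("d1", "a b c d e f g h i j")  # ten words, two slices
second = store.upsert_document("d1", "a b c")  # the update replaces the old slices
```

Deleting a document's old slices before inserting the new ones keeps stale content from being retrieved after an update.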

In the online service stage, when a user asks a question, the system first rewrites the query, then vectorizes the rewritten question and matches it against the knowledge slices in the vector database. The matched slices are reranked to identify the most relevant information. Finally, through prompt engineering, the enterprise's large model generates the final answer, which includes the answer text and the knowledge points it references, and the complete answer is returned to the user.
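The online path (rewrite, vectorize, match, rerank, prompt) can be sketched in the same spirit. All names are hypothetical: `REWRITES` is an illustrative rewrite table, term-count cosine similarity stands in for real vector matching, the reranker is a simple term-overlap sort, and the sketch stops at building the prompt rather than calling any model.

```python
import math
import re
from collections import Counter

REWRITES = {"ev": "electric vehicle"}  # hypothetical query rewrite table


def tokens(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def rewrite_query(question: str) -> str:
    """Normalize the question and expand known abbreviations."""
    return " ".join(REWRITES.get(t, t) for t in tokens(question))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, slices: list[dict], top_k: int = 3) -> list[dict]:
    """Match the vectorized query against every slice and keep the best hits."""
    qv = Counter(tokens(query))
    scored = [(cosine(qv, Counter(tokens(s["text"]))), s) for s in slices]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [s for score, s in scored[:top_k] if score > 0]


def rerank(query: str, candidates: list[dict]) -> list[dict]:
    """Stand-in reranker: order by number of distinct query terms in the slice."""
    q = set(tokens(query))
    return sorted(candidates, key=lambda s: len(q & set(tokens(s["text"]))), reverse=True)


def build_prompt(question: str, ranked: list[dict]) -> str:
    """Assemble numbered context so the model can cite the slices it used."""
    context = "\n".join(f"[{i}] ({s['id']}) {s['text']}" for i, s in enumerate(ranked, 1))
    return (
        "Answer using only the context and cite sources by number.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


slices = [
    {"id": "s1", "text": "Electric vehicle charging policy for employees"},
    {"id": "s2", "text": "Cafeteria opening hours"},
    {"id": "s3", "text": "Vehicle quality inspection policy"},
]
query = rewrite_query("What is the EV charging policy?")
ranked = rerank(query, retrieve(query, slices))
prompt = build_prompt(query, ranked)
```

Numbering the context slices in the prompt is one simple way to let the generated answer carry the referenced knowledge points back to the user.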

Throughout knowledge corpus processing, the data is not only stored in the vector database but also used to support large model services, such as fine-tuning and evaluation, so that we can build our own large model.

In the traditional approach, after entering a question, the user has to search for and read the relevant knowledge documents one by one to find the required information. With the RAG process, users get answers and the related knowledge points directly after asking a question, which saves significant time. The saving comes mainly from the fact that large models give highly relevant answers, which reduces the need for users to dig further into detailed knowledge. Because only a small proportion of users are dissatisfied with the initial answer and need to search further or open the linked documents, we estimate an overall time saving of about 40%, which significantly improves the efficiency of knowledge search.

In this chapter, we introduce the problems and challenges encountered in actual operation, along with their solutions.

The first challenge is the accuracy of the question answering system. At present, our knowledge question answering system has reached an accuracy of more than 90% in internal company tests. We achieved this by dividing the questions asked within the company into three main categories (professional questions, sensitive questions, and general questions) and developing a different strategy for each, as shown in the figure below.

For professional and sensitive questions, we use keyword search. RAG (Retrieval-Augmented Generation) may have a limited understanding of professional terms, which can lead to inaccurate or incorrect answers. For highly professional questions, it is therefore more effective to guide users directly to specific knowledge points through keyword retrieval. Similarly, for sensitive questions, such as sales policies and other scenarios with zero fault tolerance, keyword search is used to ensure accurate answers.

All questions outside these two categories are classified as ordinary questions and handled in one of three ways. When vector retrieval finds sufficient relevant information in the knowledge base, the system generates an answer as normal. When the relevant information is insufficient, that is, the matching degree or score is low, the system adds a risk warning telling the user that the answer may contain errors and should be judged carefully. When no relevant information can be retrieved from the knowledge base at all, the system falls back to a general answer from the large model and explicitly tells the user that the answer is based on the model's own capability rather than on the content of the knowledge base.

With this classification-based handling, we have built a complete knowledge question answering system that aims to improve answer accuracy and user satisfaction while ensuring that sensitive information is handled to a high standard.
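The routing described above can be sketched as a small decision function. The term lists, the `SUFFICIENT_SCORE` threshold, and the route names are assumptions for illustration only; the source does not state how questions are classified or what score counts as sufficient.

```python
from typing import Optional

# Hypothetical term lists; a real system would use a maintained classifier or dictionary.
PROFESSIONAL_TERMS = {"torque spec", "bms firmware"}
SENSITIVE_TERMS = {"sales policy", "discount"}
SUFFICIENT_SCORE = 0.75  # hypothetical similarity threshold


def route(question: str, best_score: Optional[float]) -> str:
    """Pick a handling strategy.

    best_score is the top vector-retrieval score, or None when nothing
    relevant was retrieved from the knowledge base.
    """
    q = question.lower()
    if any(term in q for term in PROFESSIONAL_TERMS | SENSITIVE_TERMS):
        return "keyword_search"  # professional or sensitive: no generated answer
    if best_score is None:
        return "general_llm_with_notice"  # answer from the model alone, labeled as such
    if best_score < SUFFICIENT_SCORE:
        return "rag_with_risk_warning"  # weak match: answer plus a warning
    return "rag"  # sufficient match: normal generated answer


examples = [
    route("What is the current sales policy?", 0.9),
    route("How do I book a meeting room?", 0.82),
    route("How do I book a meeting room?", 0.5),
    route("What is the weather like?", None),
]
```

Checking the professional and sensitive categories first means a high retrieval score can never override the zero-fault-tolerance path.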

The second challenge is controlling access to knowledge points. Unlike open large-model Q&A (such as ChatGPT), access to knowledge points inside an enterprise must be subject to strict permission management. A publicly available large model might give the same or similar answers to 1,000 users asking the same question. Inside an enterprise, because each employee may have different access to knowledge, 1,000 employees may get 1,000 different answers to the same question.

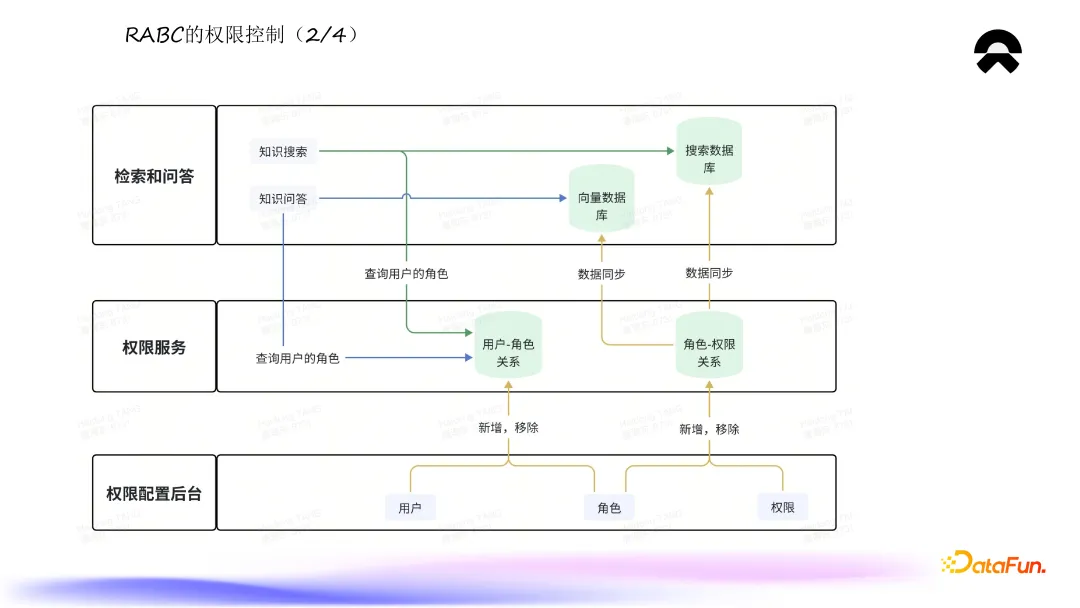

As shown in the figure above, we adopted a Role-Based Access Control (RBAC) scheme to address this challenge. We set up a permission configuration backend that manages access control through user roles and permission settings. Each user is assigned a role based on their position and responsibilities, and each role is associated with a defined scope of authority.

On this basis, we built a permission service that looks up the user's current role and the permissions it carries. In knowledge search and question answering, the system matches only the knowledge content in the vector database that falls within the permissions of the user's current role. Only knowledge the user is allowed to access is used for intelligent retrieval and question answering, so the content of an answer is strictly limited to what the user may see, which protects the security and confidentiality of the data.

In this way, the permission control system both supports personalized knowledge access and effectively prevents the disclosure of sensitive information, keeping the enterprise's internal information secure.
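A minimal sketch of permission-filtered retrieval, assuming each knowledge slice carries a `scope` label and each role maps to a set of allowed scopes. The role names, scopes, and term-overlap matching are hypothetical; the source only states that retrieval is restricted to content within the user's role.

```python
# Hypothetical role and permission tables, as a permission backend might expose them.
ROLE_PERMISSIONS = {
    "rd_engineer": {"public", "rd"},
    "sales_advisor": {"public", "sales"},
}
USER_ROLES = {"alice": "rd_engineer", "bob": "sales_advisor"}


def allowed_scopes(user: str) -> set[str]:
    """Look up the user's current role and its scopes; unknown users get nothing."""
    role = USER_ROLES.get(user)
    return ROLE_PERMISSIONS.get(role, set())


def search(user: str, query_terms: set[str], slices: list[dict]) -> list[dict]:
    """Filter by permission first, then match only within the visible slices."""
    scopes = allowed_scopes(user)
    visible = [s for s in slices if s["scope"] in scopes]
    return [s for s in visible if query_terms & set(s["text"].lower().split())]


slices = [
    {"id": "k1", "scope": "rd", "text": "battery pack design review"},
    {"id": "k2", "scope": "sales", "text": "battery lease pricing"},
    {"id": "k3", "scope": "public", "text": "battery swap station hours"},
]
alice_hits = [s["id"] for s in search("alice", {"battery"}, slices)]
bob_hits = [s["id"] for s in search("bob", {"battery"}, slices)]
```

Applying the permission filter before matching, rather than filtering generated answers afterwards, means restricted content never reaches the model's context.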

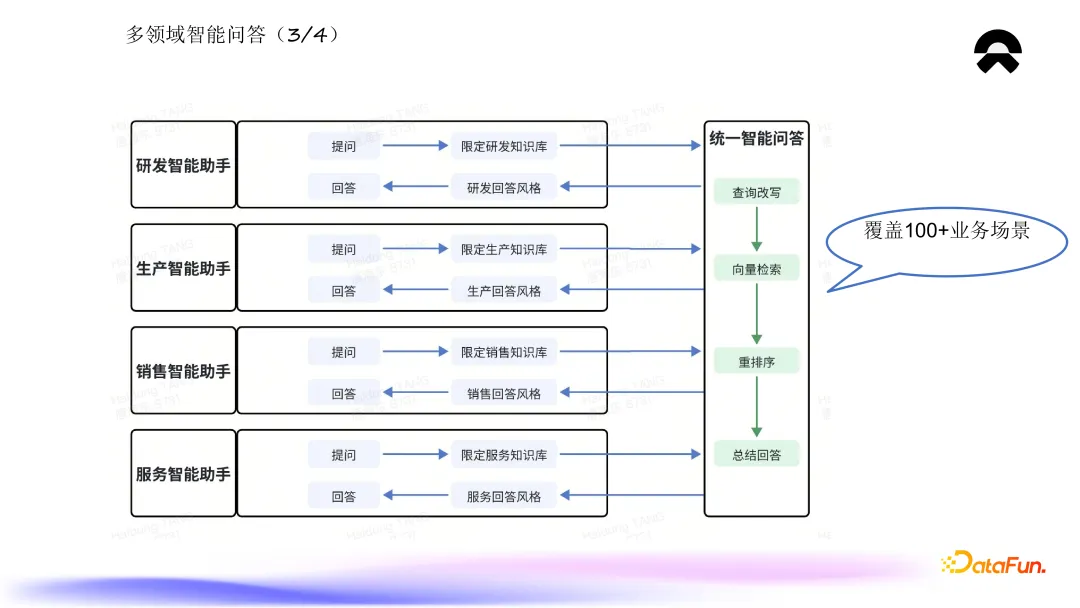

The third challenge is multi-domain intelligent question answering. The core mission of NIO's company-level knowledge platform is to support knowledge usage scenarios across the whole company. Each scenario, including each question answering scenario, has its own individual needs. How can a single intelligent question answering system meet the needs of almost all scenarios across the company? We use a dedicated pipeline to achieve this, illustrated below with intelligent question answering as an example.

As shown in the figure above, for each question, the asker's scenario limits the scope of the knowledge bases that are searched. As mentioned above, a number of independent knowledge bases have been established on the knowledge platform, and fields such as research and development, production, and sales each have their own. For example, when a question comes from an intelligent assistant for research and development, search and answering are limited to the research and development knowledge base. The question then enters the company-wide unified intelligent answering pipeline. Finally, before the answer is returned to the user, we adjust its style according to the characteristics of the specific domain so that it meets the requirements of the scenario. In research and development, for instance, we set a response style that suits developers, so that the final answer is accurate and relevant to actual needs.

Limiting the knowledge base scope and customizing the answer style meets the individual requirements of different fields. At the same time, a unified intelligent question answering service gives us broad reuse and avoids duplicated construction, which improves efficiency and resource utilization.
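One way to picture this design is a per-scenario configuration wrapped around a single shared pipeline. The scenario names, knowledge base labels, and style instructions below are invented for illustration, and `shared_answer_pipeline` is a placeholder for the company-wide answering service.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    knowledge_bases: tuple[str, ...]  # which knowledge bases this scenario may search
    style_instruction: str  # how the final answer should be phrased


# Hypothetical scenario registry.
SCENARIOS = {
    "rd_assistant": Scenario(("rd",), "Be concise and include technical detail."),
    "sales_assistant": Scenario(("sales",), "Be friendly and avoid jargon."),
}


def shared_answer_pipeline(question: str, knowledge_bases: tuple[str, ...]) -> str:
    """Placeholder for the unified retrieval and generation pipeline."""
    return f"draft answer to {question!r} from {', '.join(knowledge_bases)}"


def answer(scenario_name: str, question: str) -> dict:
    """Scope the search, run the shared pipeline, then attach the scenario style."""
    scenario = SCENARIOS[scenario_name]
    draft = shared_answer_pipeline(question, scenario.knowledge_bases)
    return {"style": scenario.style_instruction, "draft": draft}


rd_result = answer("rd_assistant", "How is the motor tested?")
sales_result = answer("sales_assistant", "How is the motor tested?")
```

Only the two configuration fields differ between scenarios; the pipeline in the middle is shared, which is what allows reuse across domains.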

The fourth challenge is high concurrency. Intelligent question answering consumes significant GPU resources, and because the amount of computation is large, each answer usually takes several seconds, and particularly complex questions may take tens of seconds. This makes it hard to embed intelligent Q&A services in key systems for production, sales, and other fields, since most scenarios cannot accept such long response times. In addition, in some scenarios the volume of requests to intelligent question answering tools is very large, which strains a service that already consumes a lot of resources.

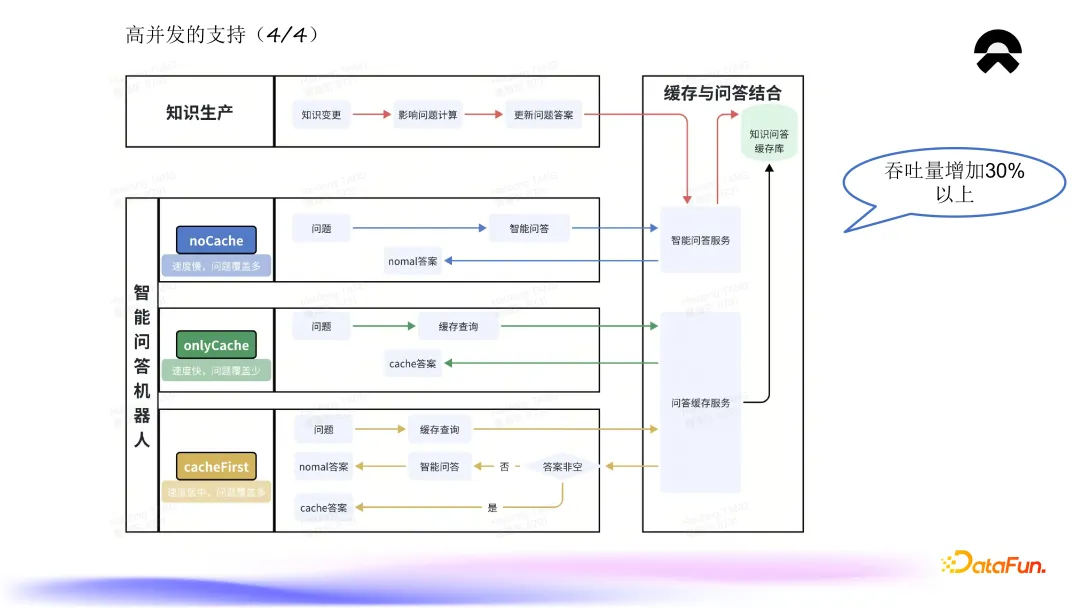

In view of this, we added high-concurrency handling so that the intelligent Q&A service can cover more scenarios and support more users, as shown in the picture below:

First, we extend the knowledge production process with a step that computes which questions are affected by a knowledge change. When knowledge is updated, we work out which questions are affected and update their answers together. This update follows the conventional RAG (Retrieval-Augmented Generation) process, which keeps the answers accurate and consistent. The updated results are stored in the Q&A cache database, which holds each question and its corresponding answer. Once a question hits the cache, the user gets the answer in a very short time, with no need to compute it again. Because of this real-time update mechanism, the answers in the cache stay essentially up to date, with update latency kept at the level of seconds.
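The cache refresh on knowledge change can be sketched as follows. The source does not say how affected questions are identified; this sketch assumes, purely for illustration, that each cached answer records the documents it was generated from, so a reverse index from document to questions gives the affected set. `answer_fn` is a hypothetical stand-in for the full RAG pipeline.

```python
from collections import defaultdict
from typing import Callable


class AnswerCache:
    """Q&A cache that recomputes answers when a source document changes."""

    def __init__(self, answer_fn: Callable[[str], tuple[str, list[str]]]) -> None:
        self.answer_fn = answer_fn  # question -> (answer, ids of documents used)
        self.answers: dict[str, str] = {}
        self.questions_by_doc: dict[str, set[str]] = defaultdict(set)

    def refresh(self, question: str) -> None:
        """Recompute one answer and rebuild its document index entries."""
        for questions in self.questions_by_doc.values():
            questions.discard(question)
        answer, doc_ids = self.answer_fn(question)
        self.answers[question] = answer
        for doc_id in doc_ids:
            self.questions_by_doc[doc_id].add(question)

    def on_knowledge_change(self, doc_id: str) -> list[str]:
        """Find the questions whose answers used doc_id and refresh them."""
        affected = sorted(self.questions_by_doc.get(doc_id, set()))
        for question in affected:
            self.refresh(question)
        return affected


knowledge = {"d1": "v1"}
cache = AnswerCache(lambda q: (f"{q} -> {knowledge['d1']}", ["d1"]))
cache.refresh("q1")
before = cache.answers["q1"]
knowledge["d1"] = "v2"
affected = cache.on_knowledge_change("d1")
after = cache.answers["q1"]
```

A reverse index like this would miss questions that should start using a brand-new document, so a real system would need an additional matching step for newly added knowledge.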

Based on this service, we designed three kinds of intelligent Q&A robots: No Cache, Only Cache, and Cache First.

No Cache: In this mode, the intelligent Q&A service generates every answer directly. It covers a wide range of questions but is slow and does not support high concurrency, so its application scenarios are limited.

Only Cache: In this mode, only the cache service is queried. If an answer is found, it is returned immediately. If not, a null value is returned to indicate that the question cannot be answered. This mode is fast but covers fewer questions, and it suits scenarios with strict response-time and performance requirements.

Cache First: This is the mode we mainly recommend, and it suits most scenarios. When a question is asked, the cache is queried first. If the cache holds an answer, it is returned immediately. If not, the question goes through the normal intelligent Q&A process. This mode combines fast response with wide coverage. Average throughput can increase by more than 30%, and on a cache hit the response time is almost negligible, which significantly improves the system's concurrent processing capability.
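The three modes differ only in how they combine a cache lookup with the full pipeline, which a short sketch makes concrete. The `Mode` enum, the plain dictionary cache, and the `full_pipeline` callable are assumptions for illustration, not the service's actual interface.

```python
from enum import Enum
from typing import Callable, Optional


class Mode(Enum):
    NO_CACHE = "no_cache"
    ONLY_CACHE = "only_cache"
    CACHE_FIRST = "cache_first"


def answer(
    question: str,
    mode: Mode,
    cache: dict[str, str],
    full_pipeline: Callable[[str], str],
) -> Optional[str]:
    """Answer a question under the given mode; None means it cannot be answered."""
    if mode is Mode.NO_CACHE:
        return full_pipeline(question)  # always compute: wide coverage, slow
    cached = cache.get(question)
    if cached is not None:
        return cached  # cache hit: returned without any model computation
    if mode is Mode.ONLY_CACHE:
        return None  # cache miss is final in this mode
    return full_pipeline(question)  # CACHE_FIRST falls back to the full process


calls: list[str] = []


def slow_pipeline(question: str) -> str:
    calls.append(question)  # record how often the expensive path runs
    return f"generated answer for {question}"


cache = {"known question": "cached answer"}
hit = answer("known question", Mode.CACHE_FIRST, cache, slow_pipeline)
miss_only = answer("new question", Mode.ONLY_CACHE, cache, slow_pipeline)
miss_first = answer("new question", Mode.CACHE_FIRST, cache, slow_pipeline)
```

Here the expensive pipeline runs once, for the Cache First miss; the hit and the Only Cache miss never touch it.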

In summary, by building a knowledge cache database and intelligent Q&A robots that support different modes, we support high concurrency, which improves the response speed and efficiency of the system while preserving wide question coverage.

Finally, we look ahead to future work. Building on the current platform, we plan to advance the following areas.

At present, the knowledge platform uses the RAG (Retrieval-Augmented Generation) process to provide intelligent knowledge retrieval and Q&A, so that users can easily get the information they need. However, when the direct answer does not fully meet the user's needs, the user still has to read the detailed text. To improve this, we will add AI-assisted knowledge understanding. Specifically, when users browse knowledge details, the system will use AI to generate document summaries automatically, which reduces reading time, and to explain terms, which helps users understand the professional terminology in the document. This capability is designed to improve the experience of browsing and consuming knowledge.

Knowledge production is still mainly manual, but we plan to introduce AI-assisted knowledge creation tools. These tools can help write new documents or extend and continue existing knowledge, which speeds up knowledge creation and expands the knowledge reserve of the whole platform.

Beyond traditional text, we also plan to research and develop multimodal knowledge processing. Data storage already supports formats such as pictures and videos, but in intelligent question answering we want to go further, for example by letting users upload a picture with their question and generating answers based on the picture's content. We will also explore multimodal interaction, such as mixed text and images, to make the system more intelligent.

The existing knowledge types cover a variety of formats, such as PDF and web pages, but not every possible form. In the future, we will consider supporting more types of knowledge resources so that any type of knowledge can be brought into the platform for processing, governance, and management, further enriching the platform's knowledge reserve and improving its comprehensiveness and applicability.

To sum up, by introducing AI-assisted knowledge understanding, AI-assisted knowledge creation, multimodal capabilities, and support for more knowledge types, we will continue to improve the functions and services of the knowledge platform to better meet user needs and increase the platform's overall value.

A1: Yes, for professional and sensitive questions, the system falls back to full-text search. These questions are not suited to inference by a language model, so full-text search is used to ensure the accuracy and security of the answer.

A2: The answers in the cache are accurate because they depend on three factors: the question asked by the user, the content of the knowledge base, and the large model behind the service. When the large model and the question itself have not changed, the only thing that can affect the accuracy of the answer is an update to the knowledge base. Whenever knowledge changes, we update the affected answers in the cache to keep them accurate. There is therefore no need to rely on human expert annotation every time; the accuracy of cached answers is maintained by an automated mechanism.
