
#News ·2025-01-06

BERT was released in 2018, which is a thousand years ago in AI terms! After all these years it is still widely used: in fact, it is currently the second most downloaded model on the HuggingFace hub, with over 68 million downloads per month.

The good news is that now, six years later, we finally have a replacement!

Recently, Answer.AI, a new AI research and development laboratory, and Nvidia released ModernBERT.

ModernBERT is a new model family with two models: a base version with 149M parameters and a large version with 395M parameters. It is significantly faster and more accurate than BERT and similar models. The model draws on dozens of advances made in large language models (LLMs) in recent years, including updates to the architecture and the training process.

In addition to being faster and more accurate, ModernBERT also increases the context length to 8k tokens, compared to 512 tokens for most encoders, and is the first encoder-only model to include a large amount of code in the training data.

Jeremy Howard, co-founder of Answer.AI, said ModernBERT is not about generative AI (GenAI) hype, but is a real workhorse model that can be used for retrieval, classification, and other genuinely useful work. It is also faster, more accurate, longer in context, and more useful.

Recently, models such as GPT, Llama, and Claude have risen rapidly. These are decoder-only, or generative, models. Their emergence has given rise to amazing new areas of GenAI, such as generative art and interactive chat. What the ModernBERT paper has done, in essence, is port these advances back to encoder-only models.

Why would you do that? Because many practical applications need a leaner model, and it doesn't need to be a generative one.

To put it more bluntly, decoder-only models are too big, too slow, too private, and too expensive for many jobs. Consider that the original GPT-1 was a 117 million parameter model. The Llama 3.1 model, by comparison, has 405 billion parameters, built with an approach that is too complex and expensive for most companies to replicate.

GenAI's popularity has overshadowed the role of encoder-only models. These models have played a huge role in many scientific and commercial applications.

The output of an encoder-only model is a list of values (an embedding vector) that encodes the "answer" directly in a compressed numerical form. This vector is a compressed representation of the model's input, which is why encoder-only models are sometimes called representation models.

While decoder-only models (such as GPT) can do the job of encoder-only models (such as BERT), they suffer from a key constraint: since they are generative models, they are mathematically "not allowed to peek" at the tokens that come later in the sequence. Encoder-only models, in contrast, are trained so that each token can look both backward and forward (bidirectionally). They are built for this, which makes them very efficient at what they do.
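The "no peeking" constraint can be pictured as an attention mask. The following is a minimal sketch, not code from ModernBERT or any library; the function names are made up for illustration. It prints which positions each token may look at under a decoder-style (causal) mask and under an encoder-style (bidirectional) mask.

```python
def causal_mask(n):
    """Decoder-style mask: token i may attend only to tokens 0..i."""
    return [[j <= i for j in range(n)] for i in range(n)]


def bidirectional_mask(n):
    """Encoder-style mask: every token may attend to every other token."""
    return [[True] * n for _ in range(n)]


def show(mask):
    """Render a mask as rows of 'x' (visible) and '.' (hidden)."""
    return "\n".join("".join("x" if v else "." for v in row) for row in mask)


print("causal (decoder-only):")
print(show(causal_mask(4)))
print()
print("bidirectional (encoder-only):")
print(show(bidirectional_mask(4)))
```

In the causal case the upper triangle is hidden, so the representation of an early token cannot depend on anything that follows it. In the bidirectional case every token's representation can use the whole input.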

Basically, cutting-edge models like OpenAI's o1 are like the Ferrari SF-23: clearly a triumph of engineering, designed to win races. But just changing a tire takes a dedicated pit crew, and you can't buy one for yourself. By comparison, the BERT model is like the Honda Civic: also a triumph of engineering, but a subtler one, designed to be affordable, fuel-efficient, reliable, and extremely practical. That is why it's everywhere.

We can look at this problem from different angles.

The first is encoder-based systems: before GPT, there were already content recommendations on platforms like social media and Netflix. These systems are not built on generative models, but on representation models (such as encoder-only models). All of these systems still exist and still operate at scale.

Then there are the downloads: on HuggingFace, RoBERTa, one of the leading BERT-based models, has more downloads than the 10 most popular LLMs on HuggingFace combined. In fact, encoder-only models now total more than 1 billion downloads per month, almost three times as many as decoder-only models (397 million downloads per month).

Finally, there is the inference cost: many times more inference per year is performed on encoder-only models than on decoder-only or generative models. An interesting example is FineWeb-Edu, where the team chose to generate annotations using the decoder-only model Llama-3-70b-Instruct and to perform most of the filtering using a fine-tuned BERT-based model. This filtering took 6,000 H100 hours, for a total of $60,000 at $10 per hour. On the other hand, even with the lowest-cost option, Google Gemini Flash, and its low inference cost ($0.075 per million tokens), feeding 15 trillion tokens to a popular decoder-only model would cost over a million dollars!
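The cost comparison is simple arithmetic and can be checked directly. The sketch below uses only the figures quoted in the paragraph above; the variable names are illustrative.

```python
# Figures quoted in the text above.
H100_HOURS = 6_000
USD_PER_H100_HOUR = 10
TOKENS = 15_000_000_000_000        # 15 trillion tokens
USD_PER_MILLION_TOKENS = 0.075     # quoted Gemini Flash price

encoder_cost = H100_HOURS * USD_PER_H100_HOUR
decoder_cost = TOKENS / 1_000_000 * USD_PER_MILLION_TOKENS

print(f"encoder-based filtering: ${encoder_cost:,.0f}")
print(f"decoder-based filtering: ${decoder_cost:,.0f}")
print(f"ratio: {decoder_cost / encoder_cost:.1f}x")
```

Under these numbers the decoder-based route comes to about $1.1 million, roughly 19 times the $60,000 spent on the encoder-based filter.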

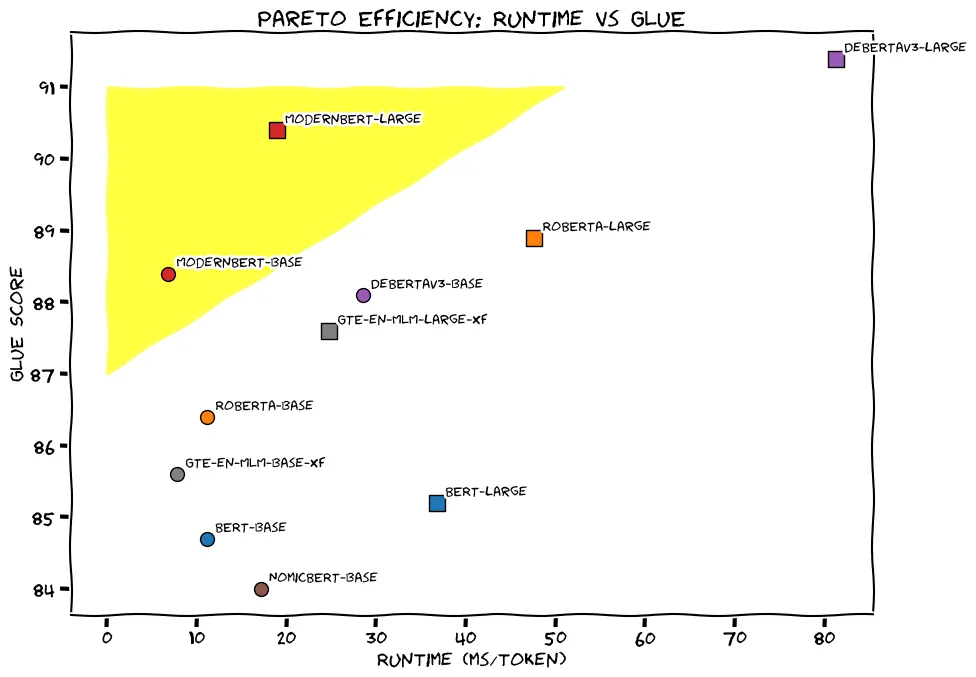

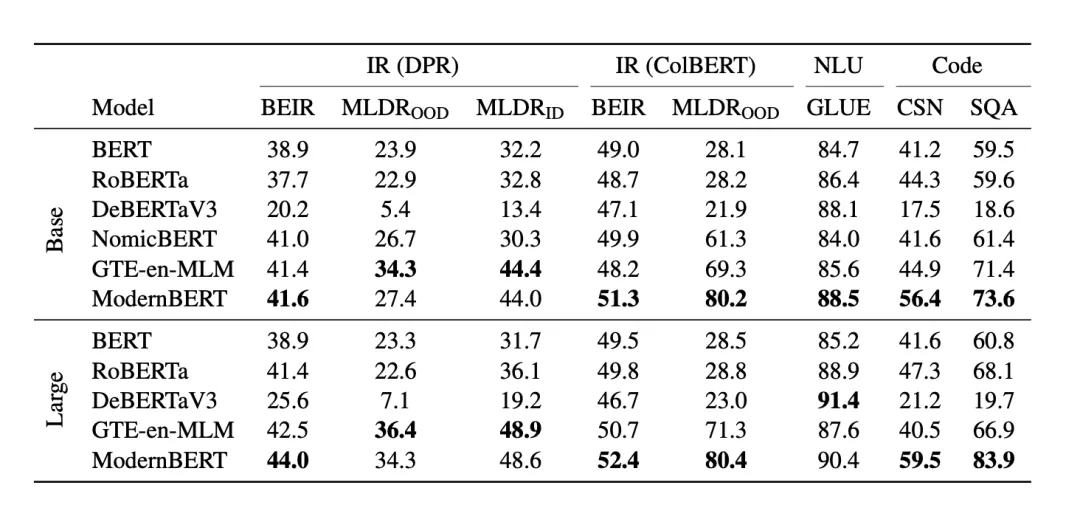

Here's how ModernBERT's accuracy compares to other models across a range of tasks. ModernBERT is the only model that is a top scorer in every category.

If you know about NLP competitions on Kaggle, then you know that DeBERTaV3 has been in the top spot for many years. But things have changed: ModernBERT is not only the first model to beat DeBERTaV3 on GLUE, it also uses less than 1/5 of DeBERTa's memory.

Of course, ModernBERT is also fast: it is twice as fast as DeBERTa, and up to four times faster on inputs of mixed length. Its long-context inference is nearly 3 times faster than other high-quality models such as NomicBERT and GTE-en-MLM.

The context length of ModernBERT is 8,192 tokens, which is 16 times longer than that of most existing encoders.

For code retrieval, ModernBERT's performance is unique because no encoder model has ever been trained on large amounts of code data before. For example, on the StackOverflow-QA dataset (SQA), a hybrid dataset that mixes code and natural language, ModernBERT's expert code understanding and long context made it the only model to score over 80 on this task.

Compared to mainstream models, ModernBERT excels in three broad categories of tasks: retrieval, natural language understanding, and code retrieval. While ModernBERT is slightly behind DeBERTaV3 in natural language understanding tasks, it is many times faster.

Compared to domain-specific models, ModernBERT is equal or better on most tasks. In addition, ModernBERT is faster than most models on most tasks and can handle inputs of up to 8,192 tokens, 16 times longer than mainstream models.

Efficiency

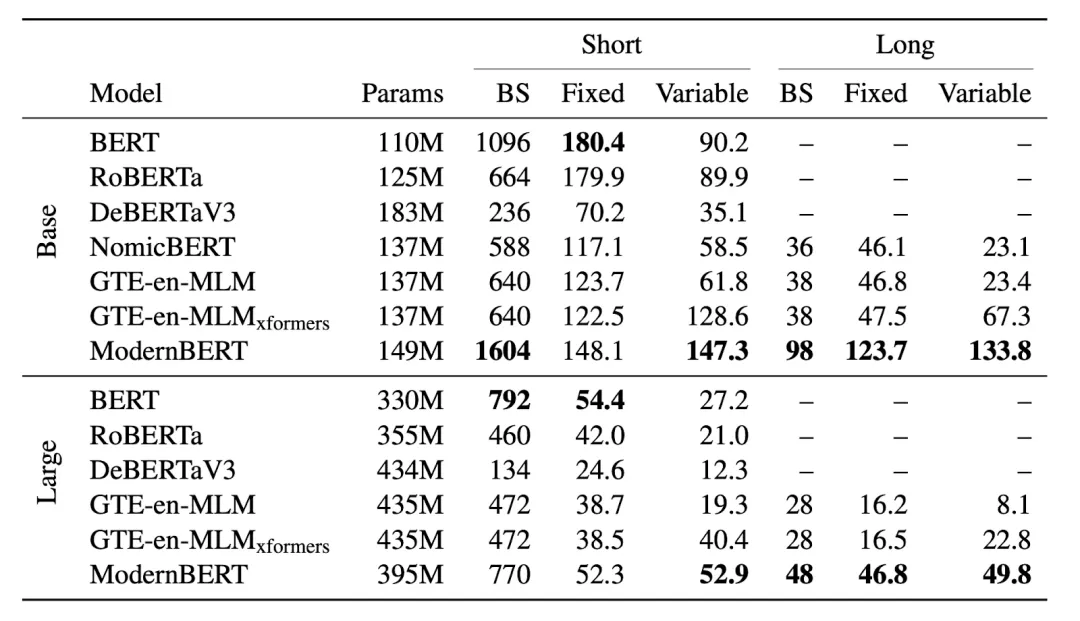

Here is a comparison of the memory efficiency (max batch size, BS) and inference efficiency (in thousands of tokens per second) of ModernBERT and other encoder models on an NVIDIA RTX 4090:

For variable length inputs, ModernBERT is much faster than other models.

For long-context inputs, ModernBERT is 2-3 times faster than the next fastest model. In addition, thanks to its efficiency, ModernBERT can use larger batch sizes than almost any other model and can be used efficiently on smaller, cheaper GPUs. The efficiency of the base model in particular may enable new applications that run directly in browsers, on mobile phones, and so on.

The sections above explain why encoder models deserve more attention. As trusted yet underrated workhorse models, they have been surprisingly slow to update since BERT's release in 2018!

Even more surprising: no encoder since RoBERTa has been able to offer an overall improvement. DeBERTaV3 has better GLUE and classification performance, but at the expense of efficiency and retrieval. Other models (such as ALBERT) and newer models (such as GTE-en-MLM) all improve on the original BERT and RoBERTa in some ways, but regress in others.

The ModernBERT project has three core points:

The Transformer architecture has gone mainstream and is used by the vast majority of models today. But it's worth noting that there's not just one Transformer, but many. The main thing they have in common is that they all believe that the attention mechanism is all that is needed, so they build various improvements around it.

Replace the old positional encoding with rotary positional embeddings (RoPE): this gives the model a better understanding of where words sit relative to one another and allows scaling to longer sequence lengths.
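To make the "relative position" idea concrete, here is a minimal pure-Python sketch of RoPE in its interleaved-pair form. It is an illustration under stated assumptions, not ModernBERT's implementation: the `base` of 10000 is a commonly used default, and the vectors and positions are arbitrary. Each pair of dimensions is rotated by an angle proportional to the token's position, so the dot product between a query and a key depends only on how far apart they are.

```python
import math


def rope(vec, position, base=10000.0):
    """Rotate consecutive pairs of `vec` by angles proportional to `position`."""
    dim = len(vec)
    assert dim % 2 == 0, "RoPE needs an even dimension"
    out = []
    for i in range(0, dim, 2):
        theta = position * base ** (-i / dim)   # earlier pairs rotate faster
        cos_t, sin_t = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out += [x * cos_t - y * sin_t, x * sin_t + y * cos_t]
    return out


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


q = [1.0, 0.5, -0.3, 2.0]   # arbitrary query vector
k = [0.2, -1.0, 0.7, 0.4]   # arbitrary key vector

# Same relative offset (3 positions apart), very different absolute positions.
near = dot(rope(q, 5), rope(k, 2))
far = dot(rope(q, 105), rope(k, 102))
print("scores match:", abs(near - far) < 1e-9)
```

Because the attention score is a function of the offset alone, nothing in the formulation ties the model to a fixed maximum position, which is part of why RoPE lends itself to longer contexts.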

Global and local attention

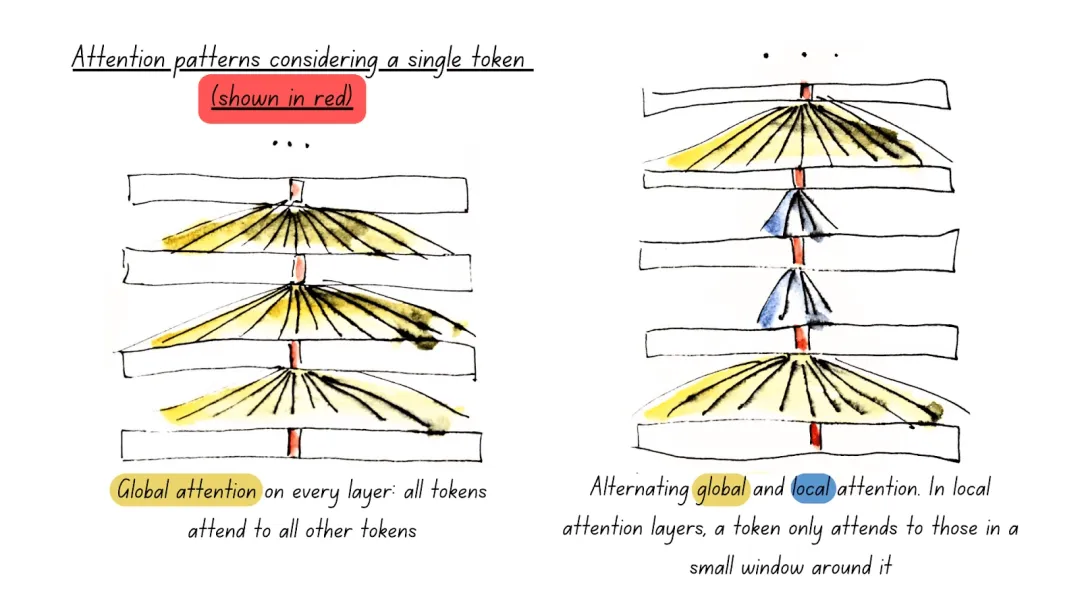

One of the most impactful features of ModernBERT is its alternating attention mechanism, used in place of global attention in every layer: some layers attend over the whole input, while the others attend only to a local window of nearby tokens.

Because the computational cost of attention balloons with each additional token, this means ModernBERT can process long input sequences much faster than other models.

Unpadding and sequence packing
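A rough way to see the saving is to count how many token pairs each layer has to score. The sketch below is illustrative only: the window size, the sequence lengths, and the choice of making every third layer global are assumptions picked for readability, and the function names are made up.

```python
def local_mask(n, window):
    """Sliding-window mask: token i sees tokens within window // 2 on each side."""
    half = window // 2
    return [[abs(i - j) <= half for j in range(n)] for i in range(n)]


def global_mask(n):
    """Full mask: every token sees every token."""
    return [[True] * n for _ in range(n)]


def layer_mask(layer, n, window=4, global_every=3):
    """Alternate: every `global_every`-th layer is global, the rest are local."""
    if layer % global_every == 0:
        return global_mask(n)
    return local_mask(n, window)


def attended_pairs(mask):
    """Number of (query, key) pairs the layer must score."""
    return sum(sum(row) for row in mask)


for n in (16, 32, 64):
    per_layer = [attended_pairs(layer_mask(layer, n)) for layer in range(3)]
    print(f"n={n}: global={per_layer[0]}, local={per_layer[1]}")
```

Doubling the sequence length quadruples the work in a global layer but only about doubles it in a local layer, so the more layers are local, the cheaper long inputs become.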

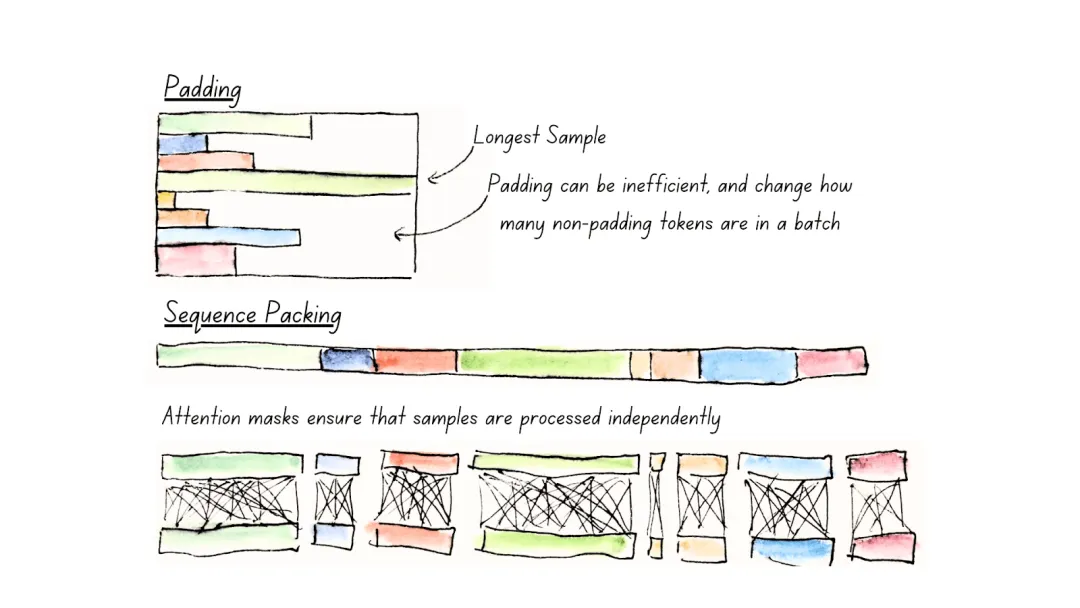

Another core mechanism that helps ModernBERT improve efficiency is unpadding combined with sequence packing.

In order to be able to process multiple sequences in the same batch, the encoder model requires that the sequences have the same length so that parallel calculations can be performed. The traditional way to do this is to rely on padding: find out which sentence is the longest and add meaningless tokens to fill the sequence.

While padding solves this problem, it's not elegant: a lot of computation ends up being wasted on padding tokens that don't provide any semantic information.

Comparison of padding and sequence packing. Sequence packing (unpadding) avoids wasting computation on padding tokens in the model.

Unpadding solves this problem: instead of keeping the padding tokens, it removes them all and concatenates the remaining tokens into mini-batches with a batch size of 1, thus avoiding unnecessary computation. With Flash Attention, this unpadding implementation is even faster, 10-20% faster than previous methods.

Training
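The difference between the two layouts can be shown on a toy batch. This is a minimal sketch with hypothetical token ids and a hypothetical `PAD` id, not the ModernBERT implementation: padding stretches every sequence to the longest one, while unpadding concatenates the real tokens into a single row and records the boundaries so that attention can be kept within each sequence.

```python
PAD = 0  # hypothetical padding token id


def pad_batch(seqs):
    """Pad every sequence to the length of the longest one."""
    longest = max(len(s) for s in seqs)
    return [s + [PAD] * (longest - len(s)) for s in seqs]


def unpad_batch(seqs):
    """Concatenate sequences into one row and record where each one ends."""
    flat, boundaries = [], [0]
    for s in seqs:
        flat.extend(s)
        boundaries.append(len(flat))   # cumulative sequence lengths
    return flat, boundaries


batch = [[5, 6, 7, 8, 9, 10], [11, 12], [13, 14, 15]]

padded = pad_batch(batch)
flat, boundaries = unpad_batch(batch)

print("tokens processed with padding:", sum(len(row) for row in padded))
print("tokens processed unpadded:", len(flat))
print("sequence boundaries:", boundaries)
```

For this batch the padded layout processes 18 tokens, 7 of which are padding, while the unpadded layout processes only the 11 real ones. The boundary list is what lets a variable-length attention kernel treat the single row as three separate sequences.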

One area where encoders lag behind is training data. This is often taken to mean the scale of the training data alone, but that is not the case: previous encoders (such as DeBERTaV3) were trained for long enough that they may even have passed the trillion-token mark.

The real problem is data diversity: many older models were trained on a limited corpus, often consisting of Wikipedia and Wikibooks. These data mixtures clearly cover a single text modality: they contain only high-quality natural-language text.

ModernBERT's training data, by contrast, comes from a variety of English-language sources, including web documents, code, and scientific articles. The model was trained on 2 trillion tokens, most of which are unique, instead of repeating the same data 20 to 40 times as was often the case with previous encoders. The impact of this is obvious: ModernBERT achieves state-of-the-art results on coding tasks among all open-source encoders.

Process

The team stuck with the original BERT training recipe and made some minor upgrades inspired by subsequent work. These include removing the next-sentence prediction objective, which added overhead with no clear benefit, and increasing the masking rate from 15% to 30%.
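The effect of the higher masking rate is easy to see on a toy input. The sketch below is a simplified illustration, not the actual training code: it always substitutes a literal `[MASK]` string (real BERT-style recipes may treat selected tokens in other ways as well), and the token list, seed, and function name are made up.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, rate, rng):
    """Replace a fixed fraction of tokens with [MASK]; return inputs and targets."""
    n_masked = round(len(tokens) * rate)
    positions = set(rng.sample(range(len(tokens)), n_masked))
    inputs = [MASK if i in positions else t for i, t in enumerate(tokens)]
    targets = {i: tokens[i] for i in positions}   # what the model must predict
    return inputs, targets


tokens = [f"tok{i}" for i in range(20)]
rng = random.Random(0)   # fixed seed so the run is repeatable

for rate in (0.15, 0.30):
    inputs, targets = mask_tokens(tokens, rate, rng)
    print(f"rate={rate:.2f}: {len(targets)} of {len(tokens)} tokens masked")
```

At 30% the model gets twice as many prediction targets from the same 20 tokens as it does at 15%, at the price of seeing less of the surrounding context.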

Both models adopt a three-stage training process. First, the model is trained on 1.7T tokens at a sequence length of 1024. It then goes through a long-context adaptation phase, training on 250B tokens at a sequence length of 8192, with the batch size reduced so that the total number of tokens per batch stays roughly the same. Finally, the model is annealed on 50B tokens sampled with a different mix, following the long-context extension approach highlighted in ProLong.

As a result, three-stage training ensures that the model performs well across the board: it is competitive on long-context tasks without losing its ability to handle short contexts.

There is another benefit as well: for the first two stages, the team trained with a constant learning rate once the warm-up phase was complete, and applied learning rate decay only over the last 50B tokens, following a trapezoidal (warm-up, stable, decay) learning rate schedule. What's more, inspired by Pythia, the team can release every intermediate checkpoint from these stable phases. The main reason for this is to support future research and applications: anyone can restart training from one of the team's pre-decay checkpoints and anneal on domain data suited to their intended use.

Tricks
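The token budget and the batch-size adjustment can be checked with a few lines of arithmetic. The stage sizes and sequence lengths below are the ones quoted above; the starting batch size is a hypothetical value chosen only to show the scaling.

```python
# Token budgets per stage, as quoted in the text.
STAGE_TOKENS = {
    "pretraining": 1_700_000_000_000,
    "long-context adaptation": 250_000_000_000,
    "annealing": 50_000_000_000,
}

total_tokens = sum(STAGE_TOKENS.values())
print(f"total: {total_tokens / 1e12:.1f}T tokens")

# Keeping tokens per batch constant means the batch size shrinks
# by the same factor that the sequence length grows.
SHORT_SEQ_LEN, LONG_SEQ_LEN = 1024, 8192
short_batch_size = 4096                     # hypothetical value
tokens_per_batch = short_batch_size * SHORT_SEQ_LEN
long_batch_size = tokens_per_batch // LONG_SEQ_LEN

print("tokens per batch:", tokens_per_batch)
print("batch size at 8192 tokens:", long_batch_size)
```

The three stages add up to the 2 trillion tokens mentioned earlier, and moving from 1024 to 8192 tokens per sequence means dividing the batch size by 8.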
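A warm-up, stable, decay schedule can be sketched as a small function. This is an illustration under assumptions, not the schedule actually used: the decay here is linear to zero, and the step counts and peak learning rate in the example are made up.

```python
def wsd_lr(step, peak_lr, warmup_steps, decay_start, total_steps):
    """Warm-up, stable, decay: linear ramp, flat plateau, then linear decay."""
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    if step < decay_start:
        return peak_lr                      # constant-rate plateau
    remaining = (total_steps - step) / (total_steps - decay_start)
    return peak_lr * max(0.0, remaining)


# Hypothetical run: 100 steps, 10 of warm-up, decay over the last 20.
for step in (0, 5, 10, 50, 79, 80, 90, 100):
    lr = wsd_lr(step, peak_lr=1.0, warmup_steps=10, decay_start=80, total_steps=100)
    print(step, round(lr, 3))
```

Every checkpoint saved on the plateau has the same learning rate, so any of them is a reasonable place to branch off and run a separate decay phase on different data.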

Finally, the team used two tricks to speed up the implementation.

The first trick is common: because the initial training steps are updating random weights, batch size warm-up is used. Training starts with a smaller batch size, so that the same number of tokens updates the model weights more often, and the batch size is gradually increased until the final training size is reached. This greatly speeds up the initial phase of training, where the model learns its most basic understanding of language.

The second trick is less common: initializing the weights of larger models by tiling, inspired by Microsoft's Phi series of models. It rests on the following realization: why initialize ModernBERT-large with random numbers when we already have a very good set of ModernBERT-base weights? Indeed, it turns out that tiling ModernBERT-base weights across ModernBERT-large works better than initializing from random weights. In addition, this trick stacks well with batch size warm-up, resulting in even faster initial training.

Taken together, these changes make ModernBERT a new family of small, efficient, state-of-the-art encoder-only models and give BERT a much-needed overhaul. It also shows that encoder-only models can be improved with modern methods, still deliver very strong performance on some tasks, and achieve very attractive size/performance ratios.
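Batch size warm-up amounts to a schedule that maps training progress to a batch size. The sketch below assumes a linear ramp measured in tokens seen; the shape of the ramp and every number in it are hypothetical.

```python
def batch_size_at(tokens_seen, start_bs, final_bs, warmup_tokens):
    """Linearly grow the batch size from start_bs to final_bs over warmup_tokens."""
    if tokens_seen >= warmup_tokens:
        return final_bs
    frac = tokens_seen / warmup_tokens
    return int(start_bs + frac * (final_bs - start_bs))


SEQ_LEN = 1024
for tokens_seen in (0, 250_000, 500_000, 1_000_000, 2_000_000):
    bs = batch_size_at(tokens_seen, start_bs=64, final_bs=1024, warmup_tokens=1_000_000)
    print(f"{tokens_seen:>9} tokens seen: batch size {bs}, {bs * SEQ_LEN} tokens per update")
```

Early on each update consumes far fewer tokens, so the same amount of data produces many more weight updates, which is the effect the paragraph above describes.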
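As a rough picture of what tiling means, the sketch below fills a larger weight matrix by repeating a smaller one in both directions. It is a simplified assumption, not the procedure used for ModernBERT-large: how the tiles are aligned and how additional layers are filled is not described here, and the function name and matrices are made up.

```python
def tile_weights(small, rows, cols):
    """Fill a rows x cols matrix by repeating `small` in both directions."""
    r, c = len(small), len(small[0])
    return [[small[i % r][j % c] for j in range(cols)] for i in range(rows)]


base = [[1, 2],
        [3, 4]]

large = tile_weights(base, rows=3, cols=5)
for row in large:
    print(row)
```

The larger matrix starts out carrying the structure the smaller model already learned, instead of noise, which is the intuition behind the faster start.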
