
#News ·2025-01-03

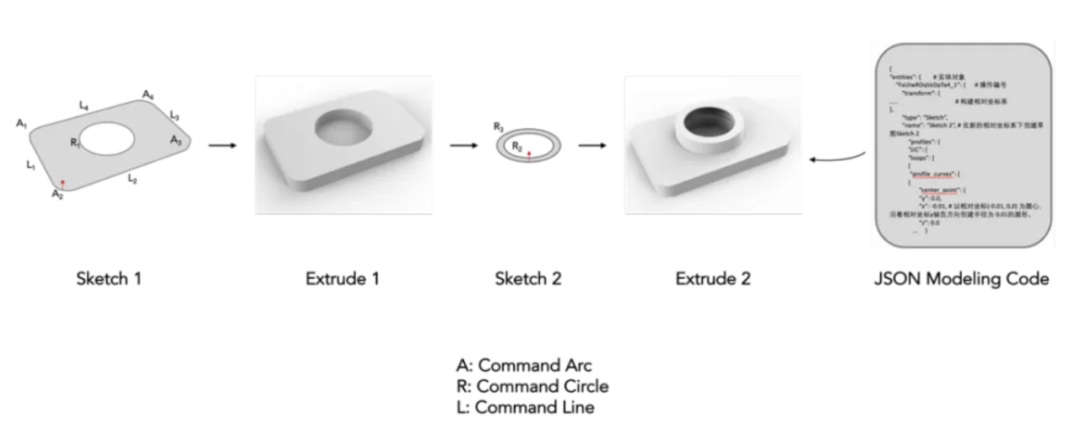

Computer-aided design (CAD) has become a standard method of design, drafting, and modeling in many industries. Today, almost every manufactured object begins as a parametric CAD model. A CAD construction sequence is one type of CAD model representation. Unlike a triangular mesh, or the B-rep format that describes a model through its points, lines, and faces, it describes the model as a series of modeling operations. The complete parameters and procedure are stored in JSON format: determining the 3D origin and orientation of the sketch plane, drawing the 2D sketch, and extruding the sketch into a 3D solid. This representation is the closest to the way professional modeling engineers build CAD models, and it can be imported directly into modeling software such as AutoDesk and ProE. Building these CAD models requires domain expertise and spatial reasoning skills, and the learning cost is high.
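To make the idea of a construction sequence concrete, here is a minimal sketch in Python. The field names (`operations`, `plane`, `x_axis`, `loop`, and so on) are assumptions chosen for illustration; they are not the schema used by the paper or by any particular modeling software. The sketch stores a sketch plane, a rectangular 2D loop, and an extrusion, round-trips them through JSON, and shows how a 2D sketch point is placed in 3D using the plane's origin and axes.

```python
import json

# Hypothetical schema for illustration only; the real JSON layout may differ.
sequence = {
    "operations": [
        {
            "type": "sketch",
            # The sketch plane: a 3D origin plus two orthonormal in-plane axes.
            "plane": {
                "origin": [0.0, 0.0, 1.0],
                "x_axis": [1.0, 0.0, 0.0],
                "y_axis": [0.0, 1.0, 0.0],
            },
            # A closed rectangular loop drawn in the plane's 2D coordinates.
            "loop": [
                {"curve": "line", "start": [0.0, 0.0], "end": [2.0, 0.0]},
                {"curve": "line", "start": [2.0, 0.0], "end": [2.0, 1.0]},
                {"curve": "line", "start": [2.0, 1.0], "end": [0.0, 1.0]},
                {"curve": "line", "start": [0.0, 1.0], "end": [0.0, 0.0]},
            ],
        },
        # Extrude the sketch along the plane normal to obtain a solid.
        {"type": "extrude", "distance": 0.5},
    ]
}


def sketch_point_to_3d(plane, point_2d):
    """Place a 2D sketch point in 3D: origin + u * x_axis + v * y_axis."""
    u, v = point_2d
    return [
        o + u * x + v * y
        for o, x, y in zip(plane["origin"], plane["x_axis"], plane["y_axis"])
    ]


# Serialize to JSON text and read it back, as a modeling tool would.
encoded = json.dumps(sequence, indent=2)
decoded = json.loads(encoded)

plane = decoded["operations"][0]["plane"]
corner = sketch_point_to_3d(plane, [2.0, 1.0])
print(corner)
```

Because every step is an explicit operation with numeric parameters, a language model that emits this kind of text is in effect emitting the modeling procedure itself.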

Figure 1. Schematic diagram of CAD modeling code

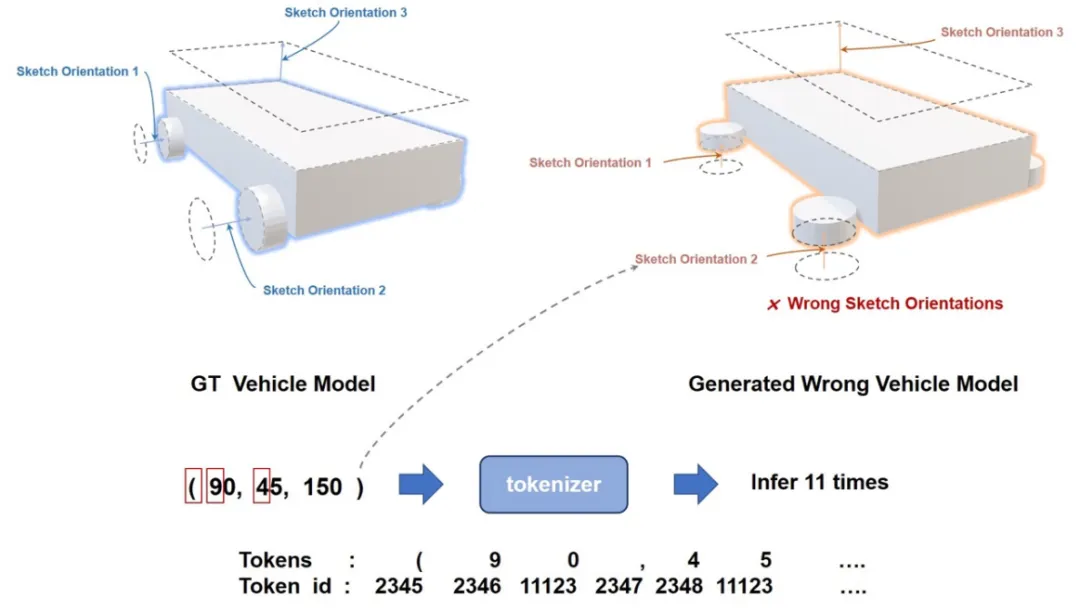

As one of the key capabilities of spatial intelligence, spatial modeling poses a serious challenge to multimodal large language models (MLLMs). Although MLLMs perform well at generating 2D web layout code, they still struggle with 3D modeling, for example by generating a car whose four wheels lie parallel to, and underneath, the car body. The reason is that, when reasoning about 3D sketch angles and 3D spatial positions, an MLLM is constrained by the one-dimensional reasoning habits of large language models and has difficulty grasping the real spatial meaning behind the complicated numerical values.

Figure 2. Analysis of why the original multimodal large model performs poorly at 3D modeling

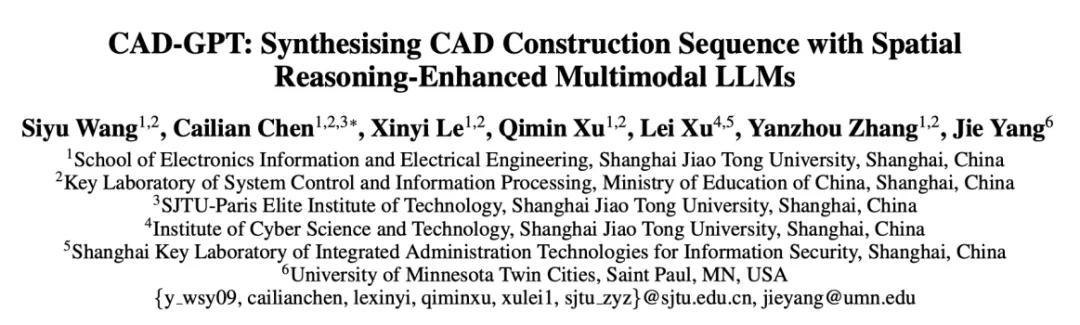

Recently, the i-WiN research team at Shanghai Jiao Tong University proposed CAD-GPT, a multimodal large language model designed specifically for CAD modeling. It incorporates a spatial positioning mechanism tailored to 3D modeling that maps 3D parameters into the 1D language feature space, which improves the spatial reasoning ability of the MLLM. This enables accurate generation of CAD construction sequences from a single image or a one-sentence description. The study, titled CAD-GPT: Synthesising CAD Construction Sequence with Spatial Reasoning-Enhanced Multimodal LLMs, was accepted by AAAI 2025.

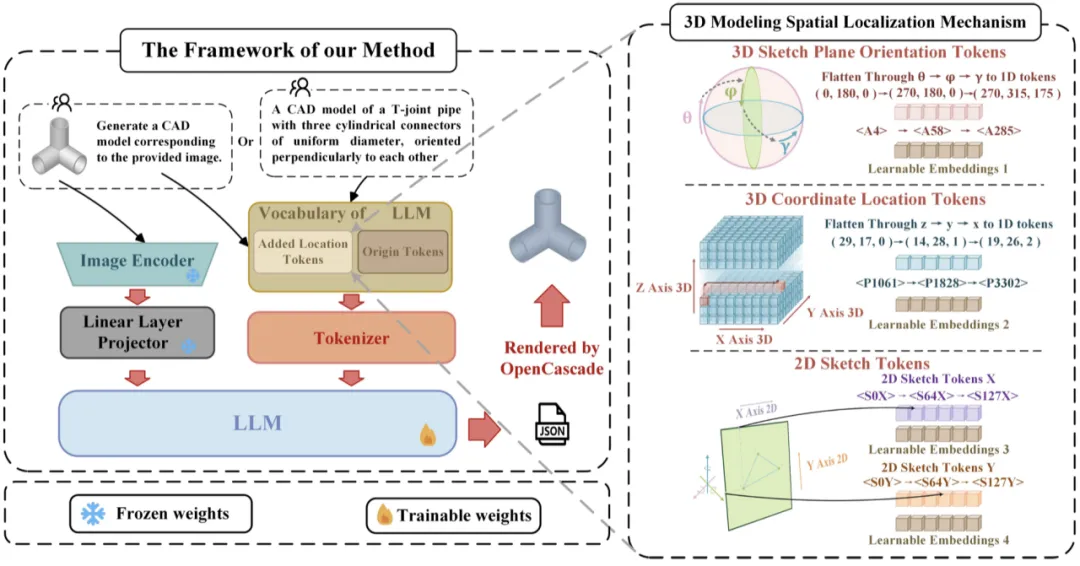

We define the key 3D and 2D modeling parameters as a modeling language that large language models can understand and generate. Specifically, three series of positioning tokens replace the parameters for the 3D sketch plane origin coordinates, the 3D sketch plane angles, and the 2D sketch curve coordinates. The global 3D coordinates and the 3D rotation angles of the sketch plane are unfolded into the one-dimensional language feature space, yielding two different types of 1D positioning tokens. In addition, 2D sketches are discretized and converted into special 2D tokens. These tokens are added to the original LLM vocabulary. Three classes of custom learnable positional embeddings, one for each token type, are also introduced to bridge the gap between language and spatial position.
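The mapping from continuous spatial parameters to 1D tokens can be sketched roughly as follows. This is an illustration under stated assumptions, not the CAD-GPT implementation: the bin counts, the value ranges, and the token names (`<origin_k>`, `<angle_k>`, `<sketch_k>`) are all hypothetical. Each value is quantized into a bin, and the per-axis bin indices are flattened into a single index, so that one token stands for one cell of the 3D grid, the angle grid, or the 2D sketch grid.

```python
# Illustrative sketch only: bin counts, value ranges, and token names are
# assumptions, not the settings used by CAD-GPT.

def quantize(value, low, high, bins):
    """Map a value in [low, high] to an integer bin index in [0, bins - 1]."""
    clamped = min(max(value, low), high)
    index = int((clamped - low) / (high - low) * bins)
    return min(index, bins - 1)  # value == high falls into the last bin


def flatten(indices, bins):
    """Unfold per-axis bin indices into one 1D index (row-major order)."""
    flat = 0
    for i in indices:
        flat = flat * bins + i
    return flat


def origin_token(xyz, bins=8):
    """Token for the 3D origin of the sketch plane, coordinates in [-1, 1]."""
    idx = [quantize(c, -1.0, 1.0, bins) for c in xyz]
    return f"<origin_{flatten(idx, bins)}>"


def angle_token(angles_deg, bins=8):
    """Token for the three rotation angles of the sketch plane, in degrees."""
    idx = [quantize(a, -180.0, 180.0, bins) for a in angles_deg]
    return f"<angle_{flatten(idx, bins)}>"


def sketch_token(uv, bins=64):
    """Token for a 2D sketch coordinate normalized to [0, 1]."""
    idx = [quantize(c, 0.0, 1.0, bins) for c in uv]
    return f"<sketch_{flatten(idx, bins)}>"


def new_vocabulary(bins_3d=8, bins_2d=64):
    """All extra tokens that would be appended to the LLM vocabulary."""
    tokens = [f"<origin_{k}>" for k in range(bins_3d ** 3)]
    tokens += [f"<angle_{k}>" for k in range(bins_3d ** 3)]
    tokens += [f"<sketch_{k}>" for k in range(bins_2d ** 2)]
    return tokens


print(origin_token([0.0, 0.0, 0.0]))
print(angle_token([0.0, 90.0, -180.0]))
print(sketch_token([0.5, 0.25]))
print(len(new_vocabulary()))
```

In a real model, each of the three token families would additionally receive its own learnable embedding table, as the paragraph above describes; that part is omitted here to keep the sketch free of framework dependencies.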

Based on the DeepCAD dataset, we generated 160k CAD model images rendered from a fixed viewing angle and 18k natural language descriptions, building a CAD modeling dataset intended specifically for training multimodal large language models. The dataset makes it easier for subsequent work to train large models that generate CAD modeling sequences.

We used the 7B version of LLaVA-1.5 as the base model. Training consists of two phases: the model is first trained on the image2CAD task and then fine-tuned on the text2CAD task with a reduced learning rate. In addition, because CAD modeling sequences are long, we extended the context window of the LLM to 8192 through extrapolation-based hyperparameter adjustment.
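The two-phase schedule can be written down as a small configuration sketch. Only the task order, the lower learning rate in the second phase, and the 8192 window length come from the text above; the learning-rate values and the names `Phase`, `build_schedule`, and `fits_context` are placeholders introduced here for illustration.

```python
from dataclasses import dataclass

MAX_CONTEXT = 8192  # context window length stated in the article


@dataclass
class Phase:
    task: str
    learning_rate: float  # placeholder value, not taken from the paper


def build_schedule(base_lr, finetune_scale):
    """Phase 1 trains on image2CAD; phase 2 fine-tunes on text2CAD at a lower rate."""
    if not 0 < finetune_scale < 1:
        raise ValueError("finetune_scale must lie strictly between 0 and 1")
    return [
        Phase("image2CAD", base_lr),
        Phase("text2CAD", base_lr * finetune_scale),
    ]


def fits_context(num_tokens, max_context=MAX_CONTEXT):
    """Check whether a tokenized modeling sequence fits in the context window."""
    return num_tokens <= max_context


# Both numbers below are hypothetical and serve only to exercise the functions.
schedule = build_schedule(base_lr=2e-5, finetune_scale=0.1)
for phase in schedule:
    print(phase.task, phase.learning_rate)
```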

Figure 3. CAD-GPT schematic diagram

Figure 4. Display of various CAD models generated by CAD-GPT

The models in Figure 4 demonstrate the ability to generate sketches with precise semantics (such as hearts and the letter "E"), CAD models of specific categories (such as tables, chairs, and keys), spatial reasoning (such as tables and perpendicular cylinders), and the same model at different sizes (such as three connectors of different sizes, each with two circular holes).

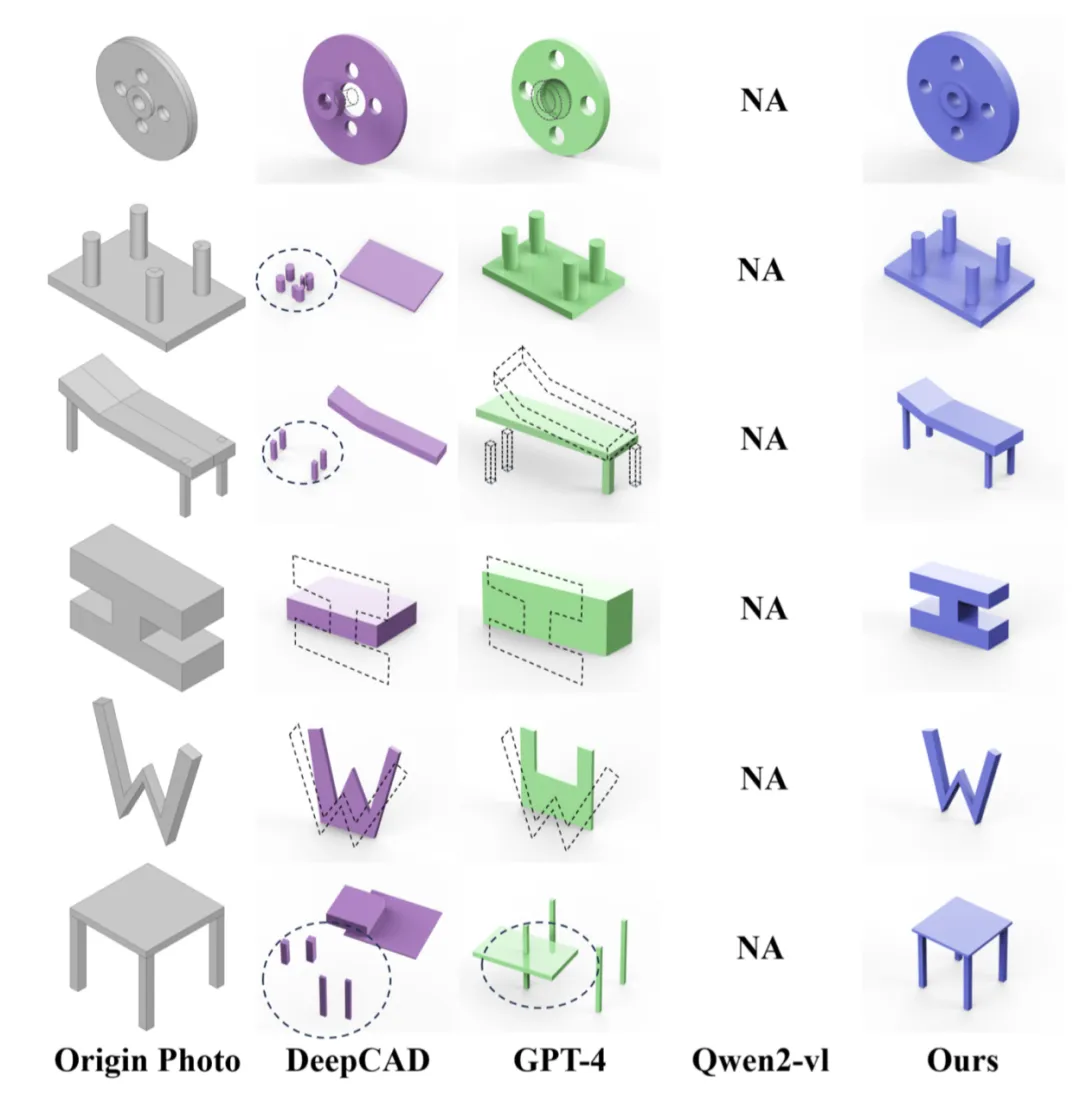

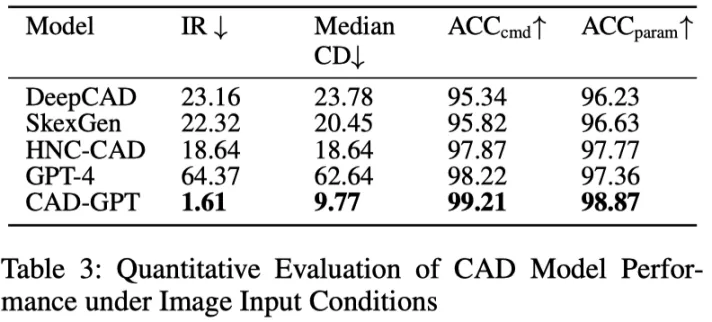

For image-based generation, CAD-GPT was compared with three representative methods. The first is DeepCAD, which demonstrates advanced generative techniques in CAD modeling. The second, GPT-4, represents the cutting edge of closed-source multimodal large models. The third is Qwen2-VL-Max, one of the leading open source multimodal large models. Compared with these methods, the output produced by CAD-GPT is both accurate and visually clean.

Figure 5. Comparison of image-based CAD generation effects

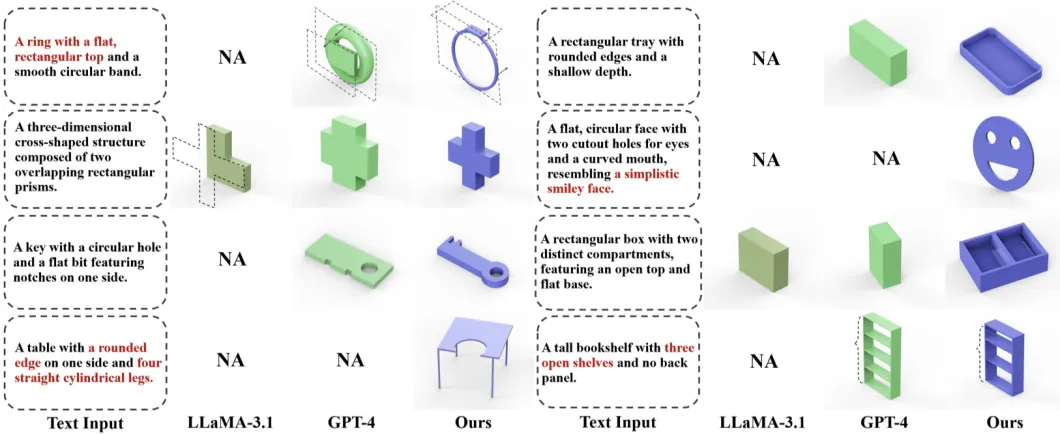

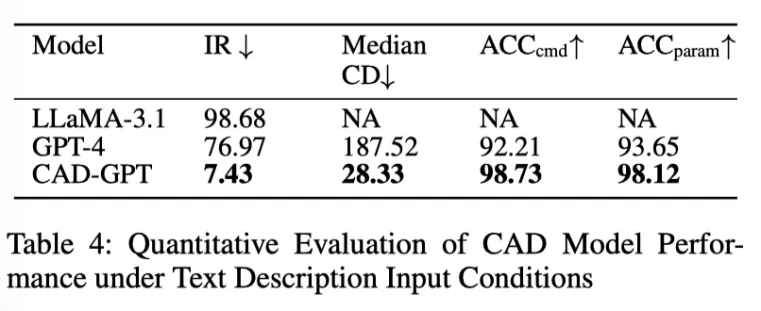

For text-based generation, two representative large language models were selected for comparison: the leading closed-source model GPT-4 and the most advanced open source model LLaMA-3.1 (405B). As shown in Figure 6, our model consistently produces highly accurate, aesthetically pleasing output that reflects the semantic information in the text description.

Figure 6. Comparison of CAD generation results based on text description

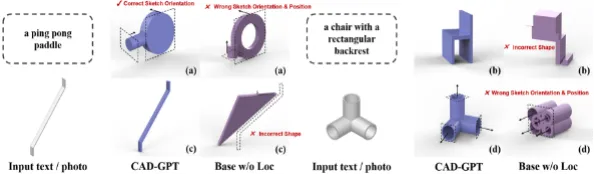

Figure 7 compares models trained with and without the 3D modeling spatial positioning mechanism. As the figure shows, with the positioning mechanism CAD-GPT can accurately reason about spatial angles and position changes and generate accurate 2D sketches.

Figure 7. Ablation experiment results

This work proposes CAD-GPT, a multimodal large model equipped with a 3D modeling spatial positioning mechanism that improves its spatial reasoning ability. The model is good at inferring changes in the 3D orientation and 3D spatial position of the sketch, and at accurately rendering the 2D sketch. With these capabilities, CAD-GPT demonstrates excellent performance in generating accurate CAD models from a single image or from text input.
