
News · 2025-01-03

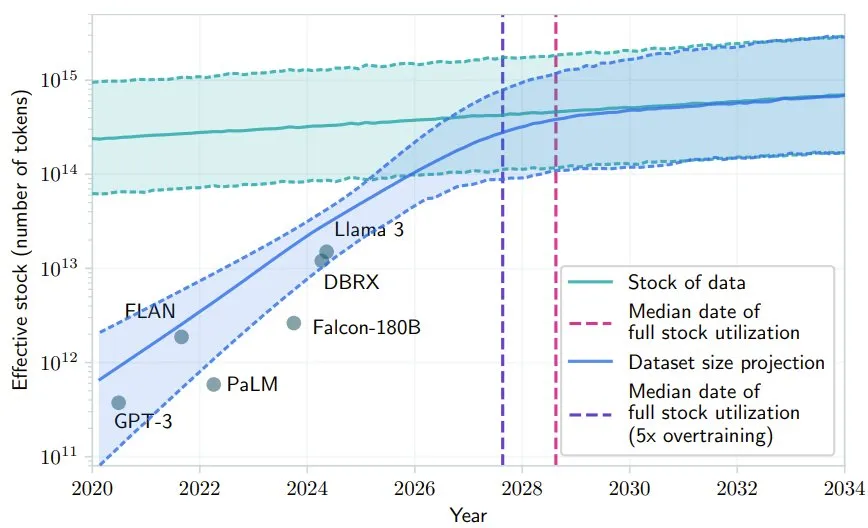

There has been a lot of discussion in the AI community lately about whether scaling laws are hitting a wall. One reason given is that AI is close to running out of high-quality data. One study predicts that if LLM development continues on its current trajectory, the available stock of such data will be used up around 2028.

Image Source: Will we run out of data? Limits of LLM scaling based on human-generated data

A study by Carnegie Mellon University (CMU) and Google DeepMind, titled "VLM Agents Generate their Own Memories: Distilling Experiences into Embodied Mind Programs", suggests that this shortage of high-quality data might be addressed with low-quality data plus feedback. The proposed method, ICAL, lets LLMs and VLMs build effective prompts from suboptimal demonstrations and human feedback. This improves decision-making and reduces reliance on expert demonstrations. The paper was selected as a NeurIPS 2024 Spotlight, and the project code has been released.

Humans are remarkably good at few-shot learning. By combining observed behavior with an internal model of the world, they can quickly generalize from a single task demonstration to related situations. They can tell which factors do or do not contribute to success, and they can anticipate likely failures. Through repeated practice and feedback, humans quickly find the right abstractions for imitating a task and adapting it to new situations. This process supports continual improvement and the transfer of knowledge across tasks and environments.

Recent research has explored using large language models (LLMs) and vision-language models (VLMs) to extract high-level insights from trajectories and experiences. The models generate these insights through introspection. Appending the insights to the prompt improves performance by exploiting the models' strong in-context learning abilities.

Existing approaches typically focus on linguistic task-reward signals, on storing human corrections after failures, on having domain experts hand-write or hand-pick examples (without introspection), or on using language to shape policies and rewards. Crucially, these methods are usually text-based and include no visual cues or demonstrations, or they apply introspection only after failures. Introspecting on failure is just one of several ways that humans and machines can consolidate experience and extract insights.

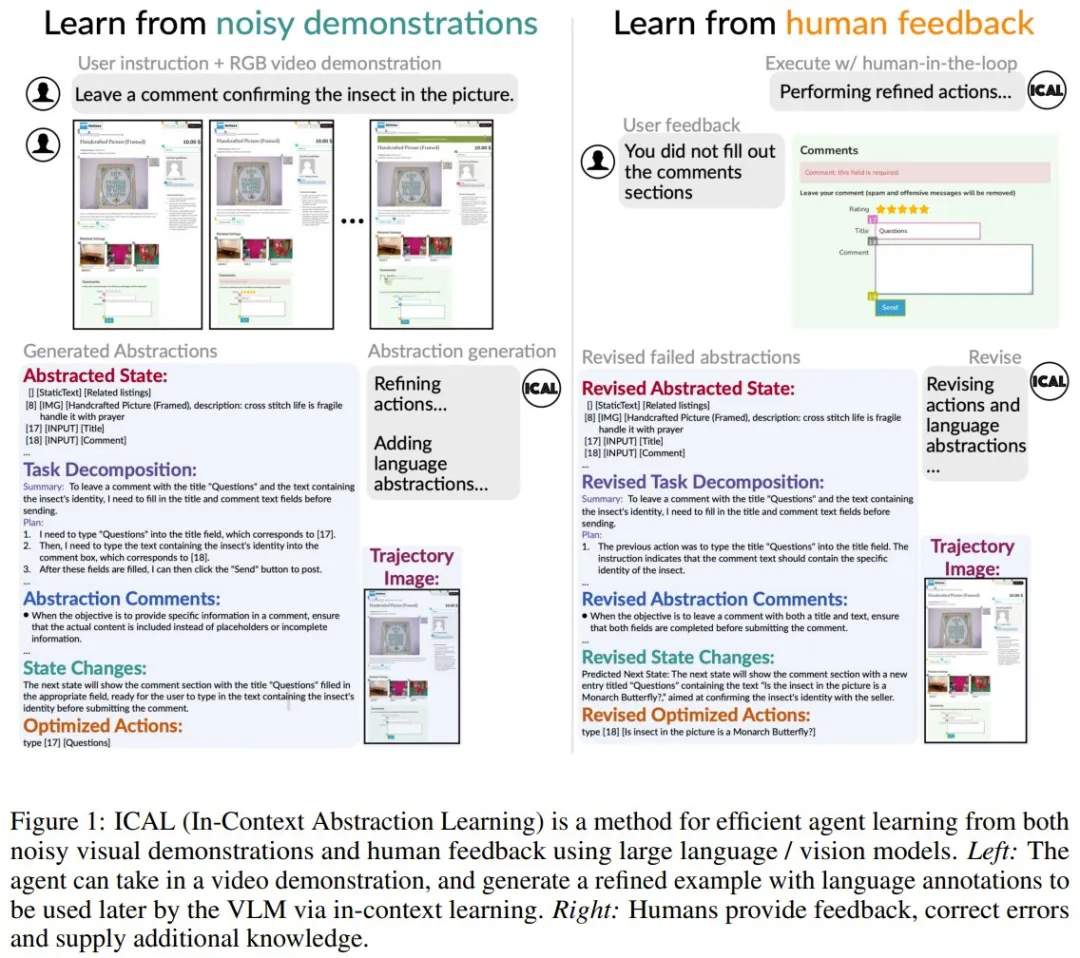

The CMU and DeepMind team's approach works as follows. Given suboptimal demonstrations and human natural-language feedback, a VLM learns to solve new tasks by learning in-context abstractions of its experience. The method is called In-Context Abstraction Learning (ICAL).

ICAL prompts VLMs to create multimodal abstractions for unfamiliar domains.

Previous work usually stores and retrieves only successful action plans or trajectories. ICAL differs by emphasizing learned abstractions that capture the dynamics of a task and the knowledge critical to it, as shown in Figure 1.

Specifically, ICAL handles four types of cognitive abstraction:

Given optimal or suboptimal demonstrations, ICAL prompts a VLM to turn them into optimized trajectories and to generate the corresponding linguistic and visual abstractions. These abstractions are then refined by executing the trajectories in the environment, guided by natural-language feedback from humans.

Each step of abstraction generation draws on previously derived abstractions. As a result, the model improves both its task execution and its ability to form abstractions.
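The loop described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' released code. The `abstract`, `execute`, and `revise` callables are hypothetical stand-ins for prompting the VLM, running the trajectory in the environment, and revising it from human feedback. `max_feedback_rounds` is an illustrative parameter.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Example:
    """Hypothetical record: a corrected trajectory plus its abstractions."""
    instruction: str
    actions: List[str]
    abstractions: List[str] = field(default_factory=list)


def ical_iteration(
    instruction: str,
    noisy_actions: List[str],
    library: List[Example],
    abstract: Callable[[str, List[str], List[Example]], Example],
    execute: Callable[[List[str]], Optional[str]],
    revise: Callable[[Example, str, List[Example]], Example],
    max_feedback_rounds: int = 3,
) -> Optional[Example]:
    """One illustrative learning iteration (all callables are hypothetical)."""
    # Abstraction step: the VLM (here `abstract`) corrects the noisy trajectory
    # and writes abstractions, conditioned on previously learned examples.
    example = abstract(instruction, noisy_actions, library)
    for _ in range(max_feedback_rounds):
        # Execute in the environment: `execute` returns None on success,
        # or a natural-language feedback string on failure.
        feedback = execute(example.actions)
        if feedback is None:
            library.append(example)  # archive only successful examples
            return example
        # Feedback step: revise the trajectory and abstractions using the feedback.
        example = revise(example, feedback, library)
    return None  # give up after the feedback budget is spent
```

Passing the growing `library` into both `abstract` and `revise` is how the sketch models the point that each step builds on earlier abstractions.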

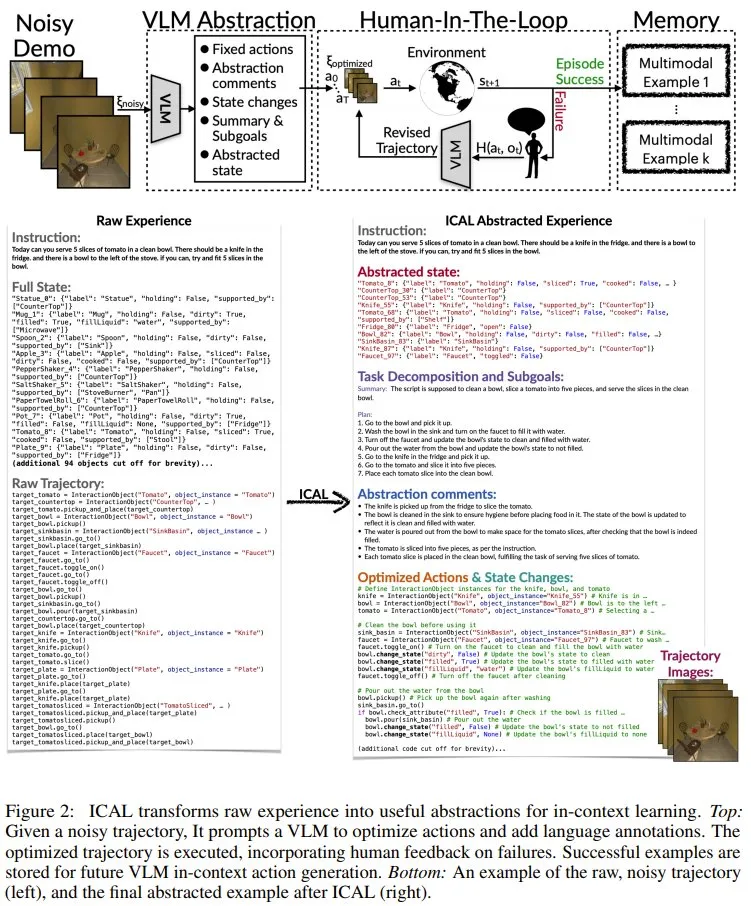

Figure 2 gives an overview of ICAL.

Each iteration begins with a noisy trajectory. ICAL abstracts this in two stages:

Once a trajectory executes successfully, it is archived in a growing library of examples. During learning and inference, these examples serve as in-context references that help the agent handle previously unseen instructions and environments.

Overall, the learned abstractions summarize key information about action sequences, state transitions, rules, and points that need attention. They are expressed explicitly in free-form natural language and visual representations.
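One way to hold the kind of abstraction described above is a small record that renders to free-form prompt text. The class and field names here (`LearnedExample`, `focus_areas`, `image_paths`, and so on) are hypothetical. They mirror the categories listed in the text, and the paper's actual format may differ.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class LearnedExample:
    """Hypothetical record for one learned example (field names are illustrative)."""
    instruction: str
    action_sequence: List[str]
    state_transitions: List[str] = field(default_factory=list)
    rules: List[str] = field(default_factory=list)
    focus_areas: List[str] = field(default_factory=list)  # "points that need attention"
    image_paths: List[str] = field(default_factory=list)  # visual part of the abstraction

    def to_prompt(self) -> str:
        """Render the example as free-form text for an in-context prompt."""
        lines = [f"Instruction: {self.instruction}"]
        sections = [
            ("Actions", self.action_sequence),
            ("State changes", self.state_transitions),
            ("Rules", self.rules),
            ("Pay attention to", self.focus_areas),
            ("Images", self.image_paths),
        ]
        for title, items in sections:
            if items:  # skip empty sections to keep the prompt short
                lines.append(f"{title}:")
                lines.extend(f"- {item}" for item in items)
        return "\n".join(lines)
```

Keeping the abstractions as named fields rather than one string makes it easy to drop or reorder sections when a prompt has a tight length budget.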

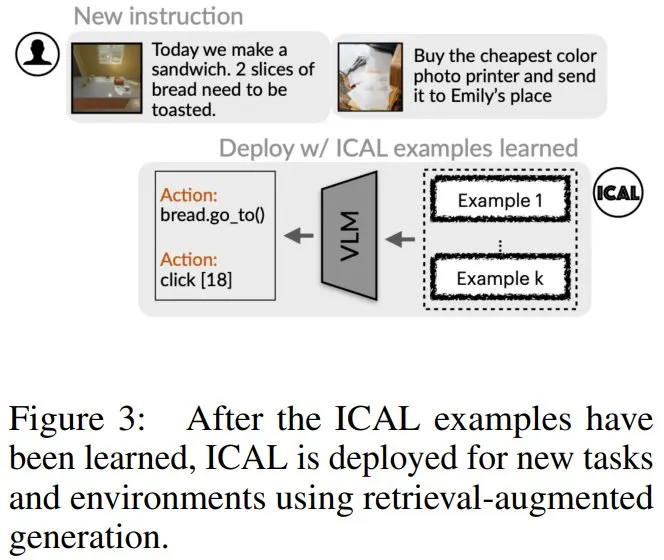

Once ICAL examples have been learned, they can be deployed on new tasks and in new environments using retrieval-augmented generation.
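Retrieval-augmented deployment can be sketched in three steps: embed the new instruction, pick the most similar stored examples, and prepend them to the prompt. In this sketch the bag-of-words `embed` function is a deliberately simple stand-in for whatever embedding model a real system would use. `retrieve`, `build_prompt`, and `k` are hypothetical names.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Counter:
    # Stand-in embedding: bag-of-words counts. A real system would use a
    # learned text and/or image embedding model instead (assumption).
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a if k in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, library: Dict[str, str], k: int = 2) -> List[Tuple[str, str]]:
    """Return the k (instruction, example text) pairs most similar to the query."""
    q = embed(query)
    ranked = sorted(library.items(), key=lambda item: cosine(q, embed(item[0])), reverse=True)
    return ranked[:k]


def build_prompt(query: str, library: Dict[str, str], k: int = 2) -> str:
    # Retrieved examples are prepended as in-context demonstrations.
    shots = [f"Example task: {instr}\n{text}" for instr, text in retrieve(query, library, k)]
    return "\n\n".join(shots + [f"New task: {query}\nPlan:"])
```

For example, with a library containing "put the apple in the fridge", "put the mug in the sink", and "water the plant", the query "put the bread in the fridge" retrieves the fridge example first.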

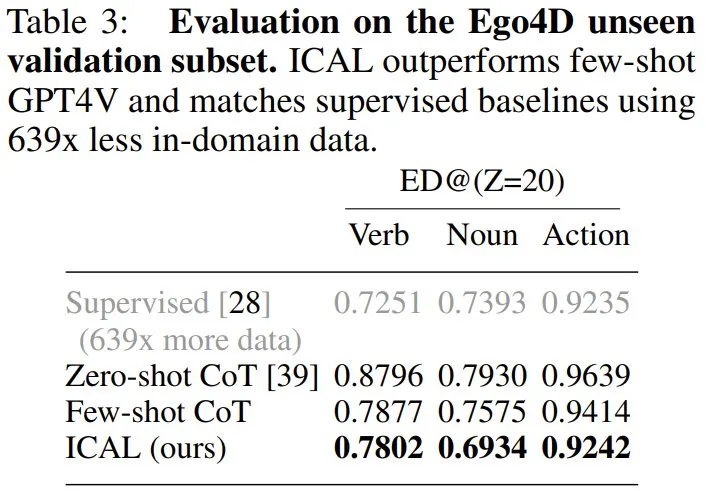

The researchers evaluated ICAL's task planning on TEACh and VisualWebArena, and its action forecasting on the Ego4D benchmark. TEACh targets dialogue-based instruction following in household environments, VisualWebArena covers multimodal autonomous web tasks, and Ego4D is used for action forecasting from video.

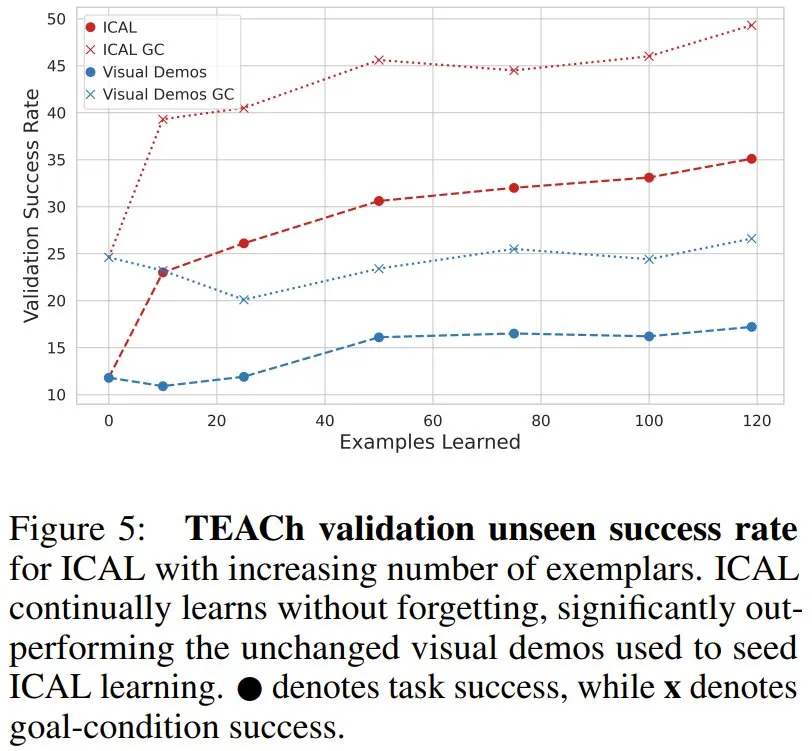

First, the team found that ICAL outperformed fixed demonstrations on instruction-following tasks in household environments.

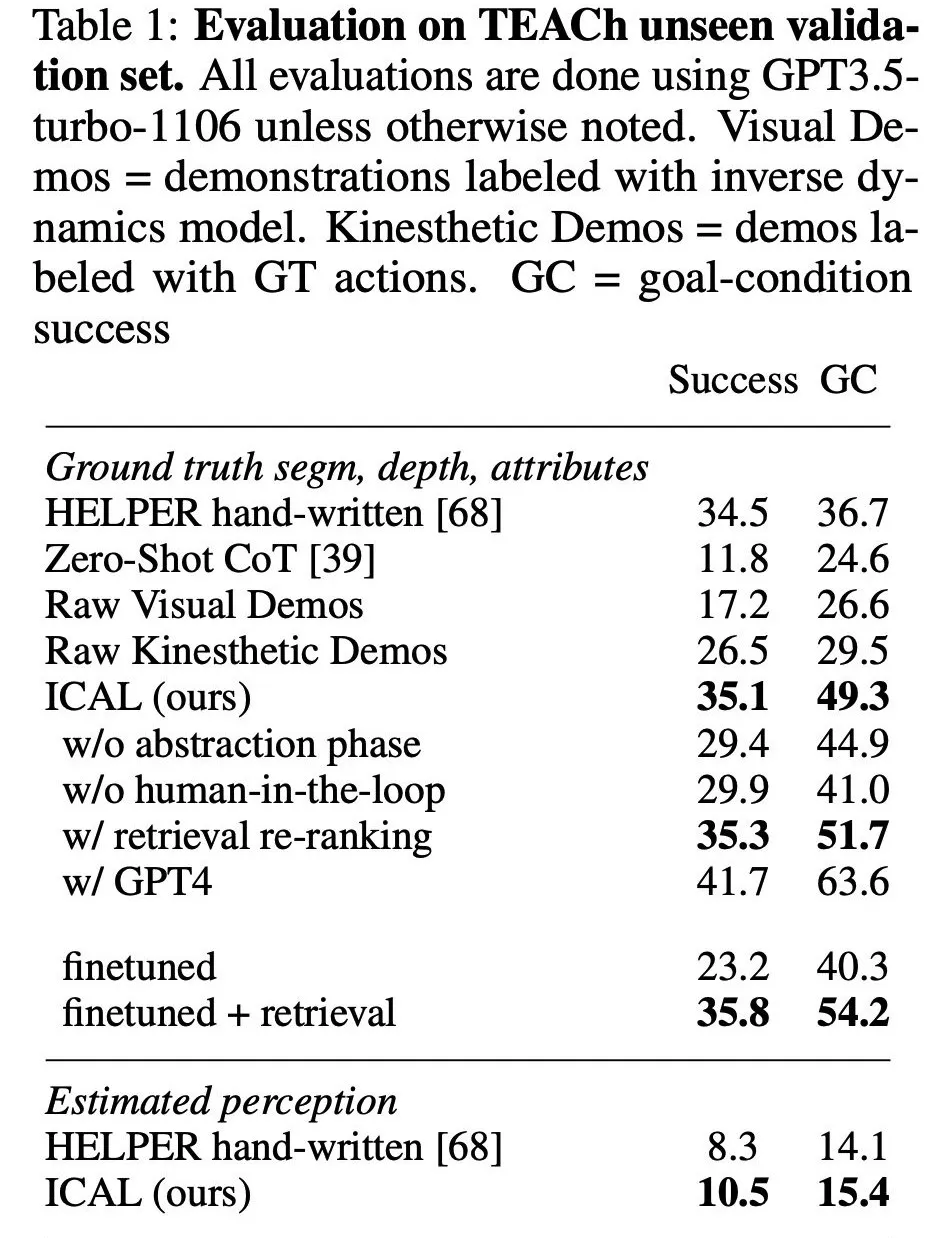

Table 1 reports results on the unseen TEACh validation set, which evaluates performance on new instructions, houses, and objects.

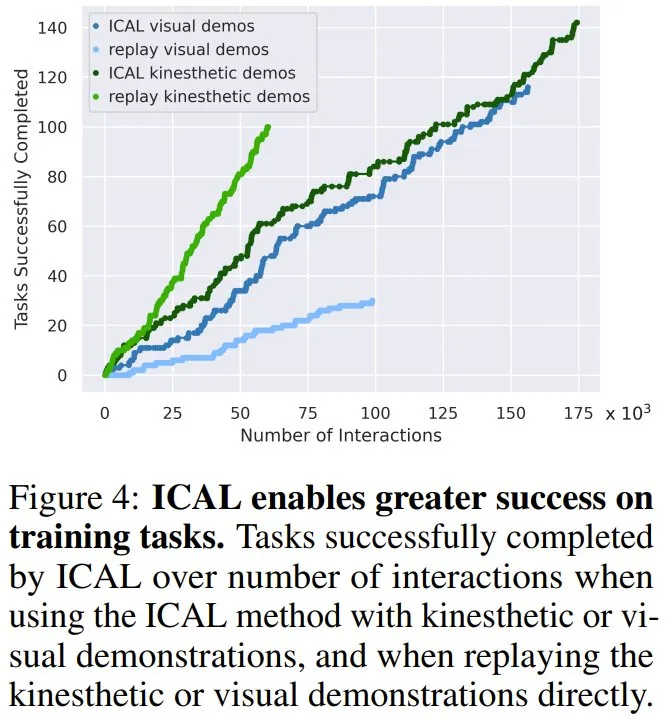

As shown in Figure 4, ICAL's revision of the noisy trajectories raised the success rate on the training tasks. Compared with imitating the original trajectories, success improved by 42% for kinesthetic demonstrations and by 86% for visual demonstrations. This shows that ICAL not only adds useful abstractions but also corrects errors in passive video demonstrations, improving success in the environments where the demonstrations were originally recorded.

As shown in Table 1, ICAL outperformed raw demonstrations used as in-context examples on unseen tasks. It improved the success rate by 17.9% over raw demonstrations with predicted actions and by 8.6% over demonstrations with ground-truth action annotations. This highlights how the proposed abstraction approach improves example quality and therefore in-context learning. By contrast, the previously dominant approach saves and retrieves successful action plans or trajectories without abstracting them.

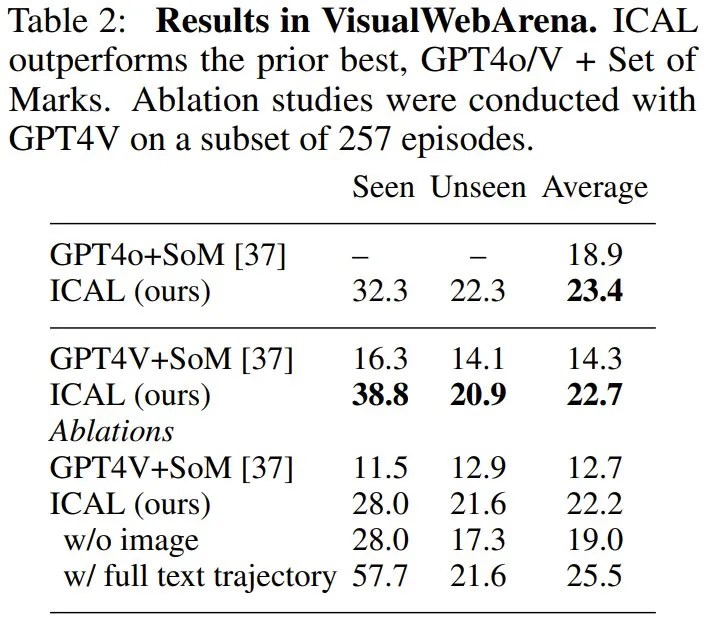

ICAL also achieved state-of-the-art performance on visual web tasks. On VisualWebArena, the new agent outperforms GPT-4 + Set of Marks. Its success rate rises from 14.3% to 22.7% with GPT-4V and from 18.9% to 23.4% with GPT-4o.

In the Ego4D setting, ICAL outperformed few-shot GPT-4V with chain-of-thought prompting, reducing noun and action edit distance by 6.4 and 1.7 respectively. It also performed comparably to fully supervised methods while using 639 times less in-domain training data.
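The edit distance mentioned above compares a predicted sequence of labels (nouns or actions) with the ground-truth sequence, and lower is better. Below is a standard Levenshtein distance over label sequences. This article does not cover whether the benchmark uses exactly this variant, how it normalizes scores, or how it aggregates over multiple predictions. The normalization shown is one common choice and is an assumption here.

```python
from typing import Sequence


def edit_distance(pred: Sequence[str], gold: Sequence[str]) -> int:
    """Levenshtein distance: minimum insertions, deletions, and substitutions."""
    m, n = len(pred), len(gold)
    prev = list(range(n + 1))  # distances from an empty prediction
    for i in range(1, m + 1):
        curr = [i] + [0] * n
        for j in range(1, n + 1):
            cost = 0 if pred[i - 1] == gold[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # delete pred[i-1]
                curr[j - 1] + 1,     # insert gold[j-1]
                prev[j - 1] + cost,  # substitute or match
            )
        prev = curr
    return prev[n]


def normalized_edit_distance(pred: Sequence[str], gold: Sequence[str]) -> float:
    # Assumed normalization: divide by the longer sequence length.
    return edit_distance(pred, gold) / max(len(pred), len(gold), 1)
```

For example, predicting `["take", "cut", "wash"]` against the ground truth `["take", "wash"]` gives a distance of 1, because one deletion suffices.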

Overall, the new approach significantly reduces reliance on expert examples. It also consistently outperforms in-context learning from action plans and trajectories that lack such abstractions.

ICAL also improves significantly as the number of examples grows, which suggests that the method scales well.

13004184443

Room 607, 6th Floor, Building 9, Hongjing Xinhuiyuan, Qingpu District, Shanghai

gcfai@dongfangyuzhe.com
