#News ·2025-01-03

This AI writing powerhouse turns out to be open source, and it comes from Stanford!

While OpenAI and Perplexity were fighting over Google's share of the search market, a Stanford research team quietly pulled off something big: it launched the STORM & Co-STORM system, which helps users avoid information blind spots, integrates reliable information from many sources, and writes long Wikipedia-style articles from scratch.

Under the hood, the system relies on Bing Search and is powered by GPT-4o mini.

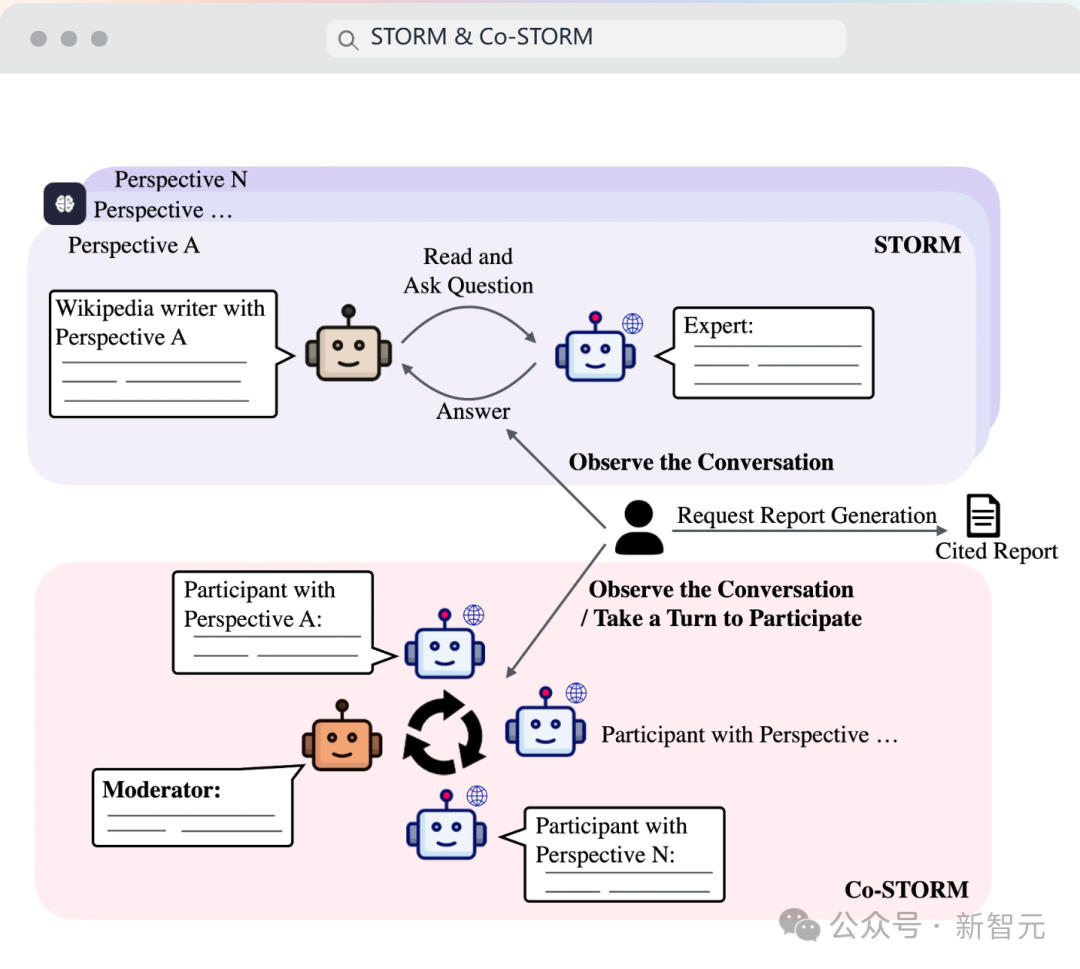

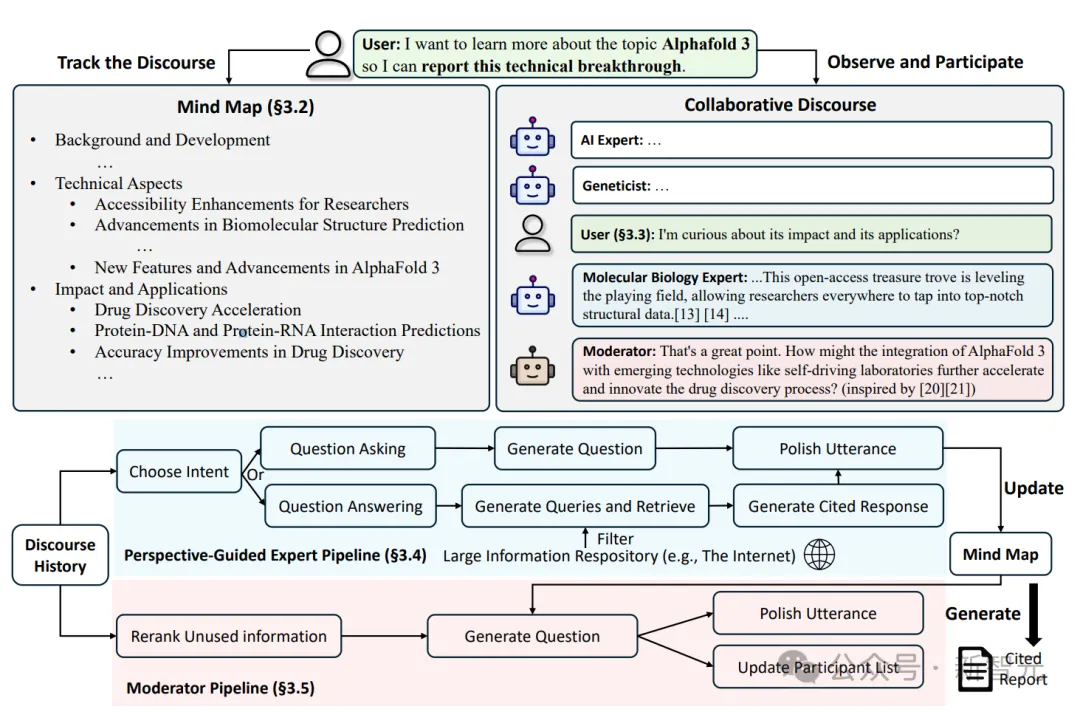

In simple terms, the STORM & Co-STORM system consists of two parts.

STORM has LLM "experts" and LLM "facilitators" ask and answer questions from multiple angles, iterating from an outline to paragraphs to a full article.

Co-STORM lets multiple agents converse with one another and builds an interactive, dynamic mind map, so that information needs the user has not noticed are not overlooked.

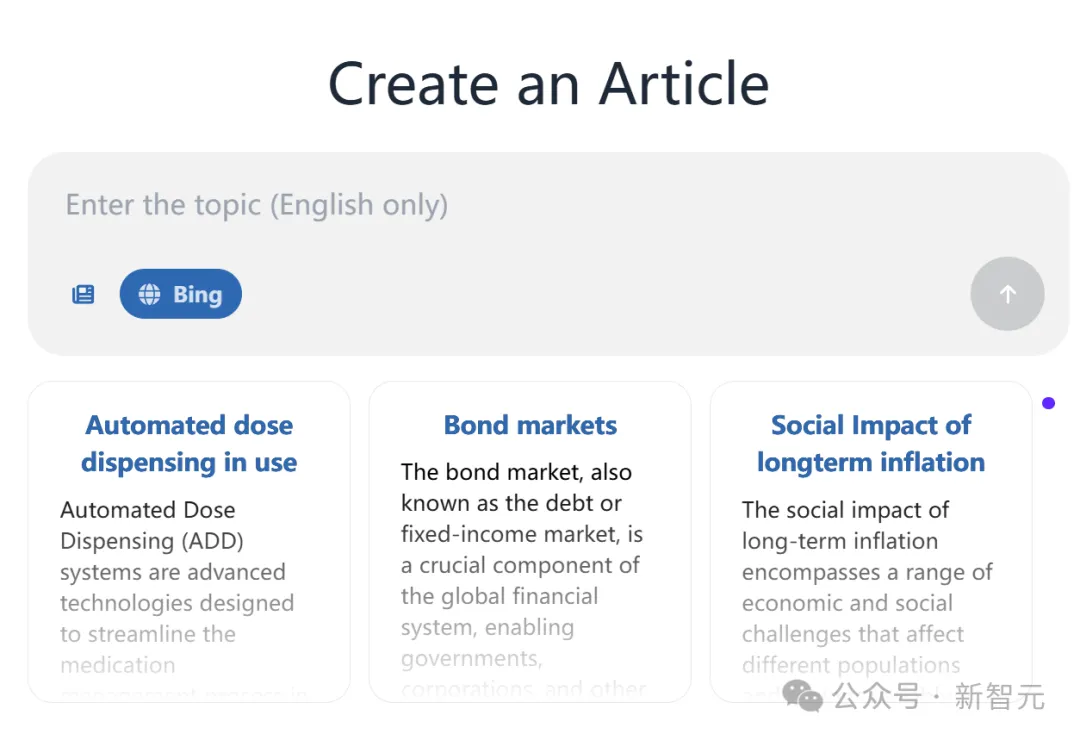

Given just an English topic, the system generates long, high-quality articles (such as Wikipedia-style articles) that effectively integrate information from multiple sources.

Try it here: https://storm.genie.stanford.edu/

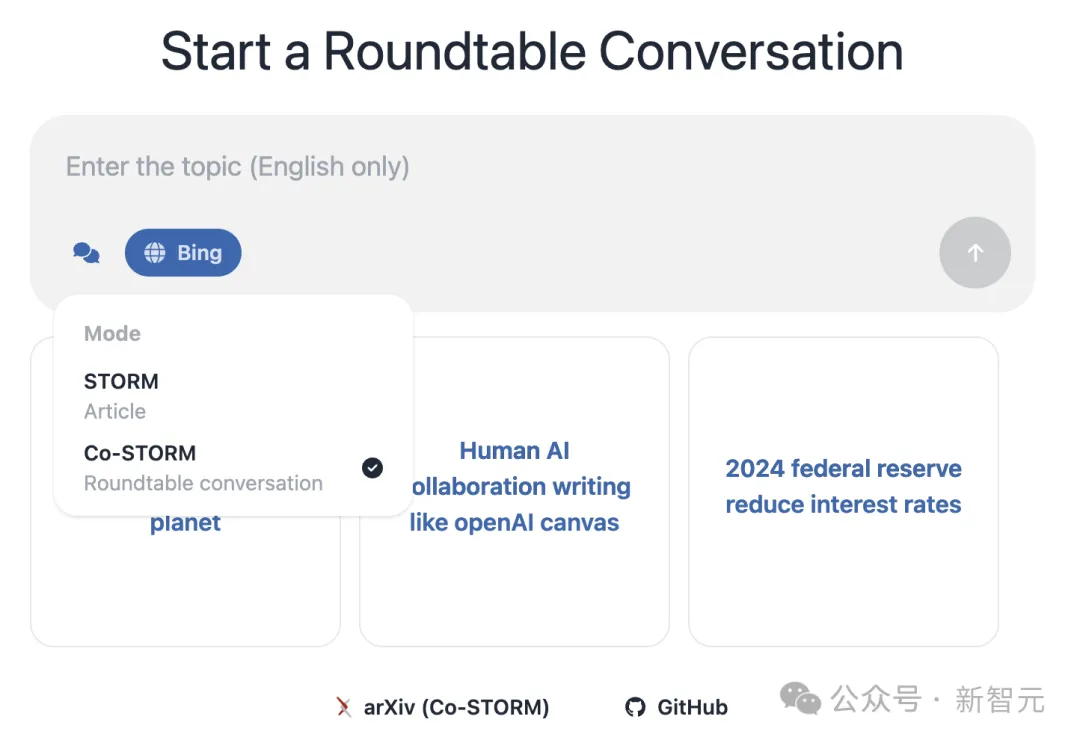

On the home page, you can choose between STORM and Co-STORM modes.

Given a topic, STORM can produce a long, well-structured article that is solid in both form and substance in 3 minutes.

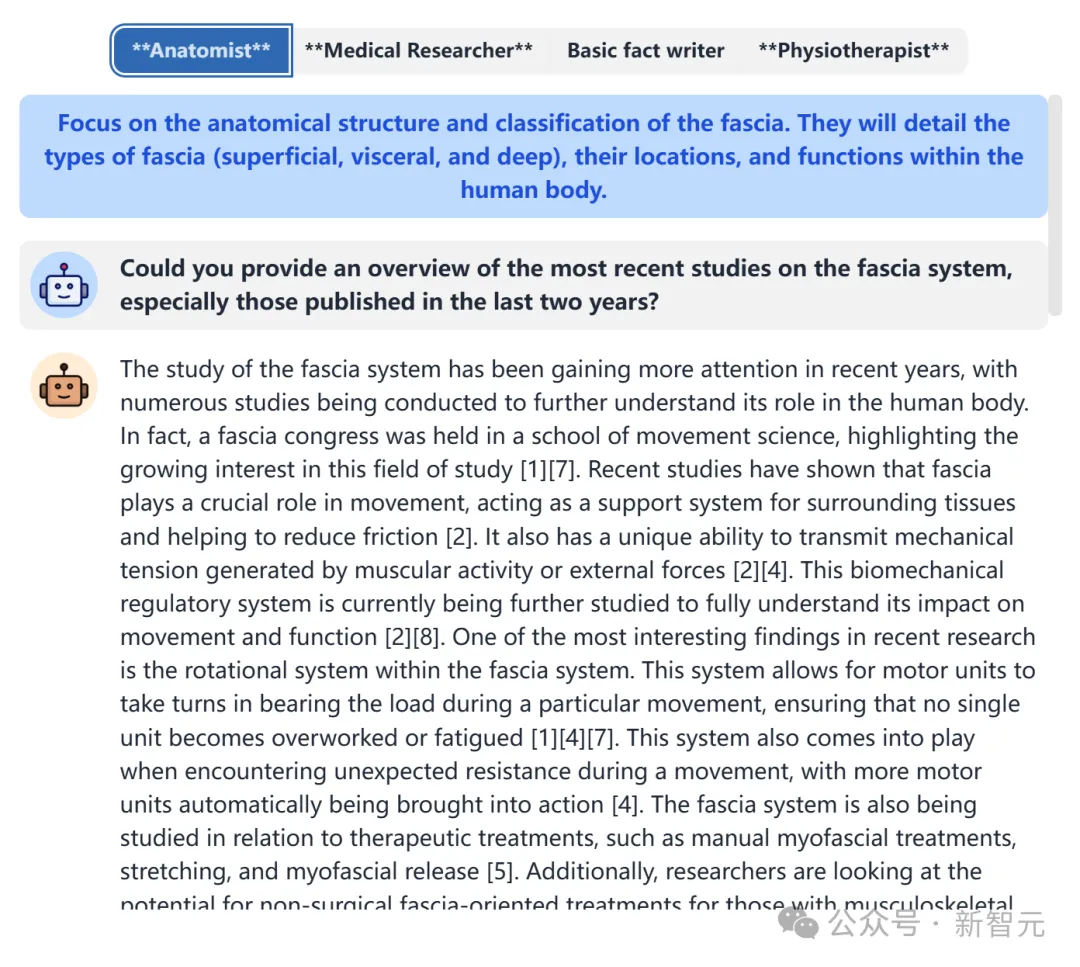

You can also click "See BrainSTORMing Process" on a generated article to view how the different LLM roles brainstormed, as shown in the image below.

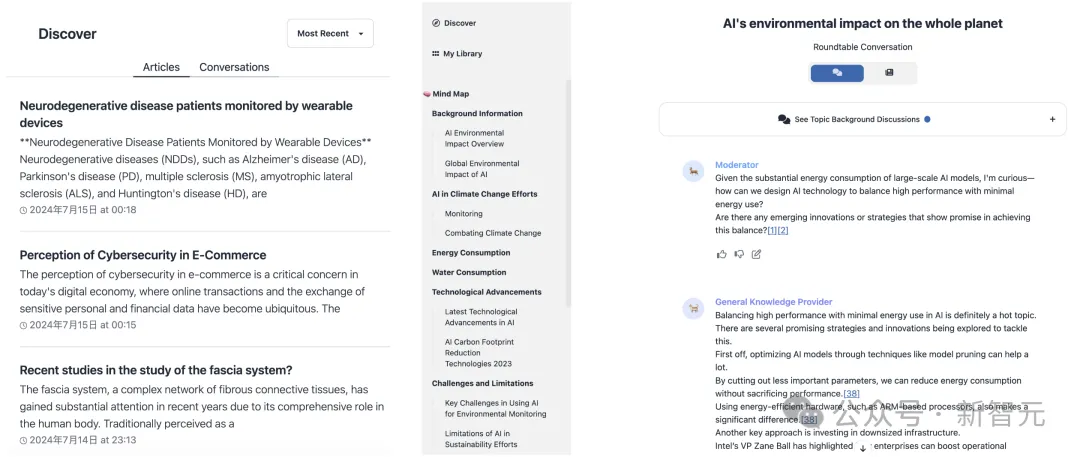

In the "Discovery" section, you can also browse articles that other scholars have generated, as well as example chats.

Your own generated articles and chat logs are saved under "My Library" in the sidebar.

As soon as the system was released, people rushed to try it, and many marveled at how brilliant STORM & Co-STORM is.

"You just type in a topic and it searches hundreds of sites and turns the main findings into an article. Best of all, it's free for everyone!"

User Josh Peterson also, for the first time, combined STORM with NotebookLM to automatically generate a podcast.

The process goes like this: use STORM to generate 4 articles, then give 2 of them to GPT-4o to analyze and propose follow-up topics. Finally, add them to NotebookLM, and you have an audio podcast.

Pavan Kumar, for his part, thinks STORM reveals a major trend: "You no longer need a PhD to produce what you would expect from a freshly minted PhD. What you can learn in the coming year will be comparable to what you would expect from a 4-7 year program today."

Paper link: https://arxiv.org/pdf/2402.14207

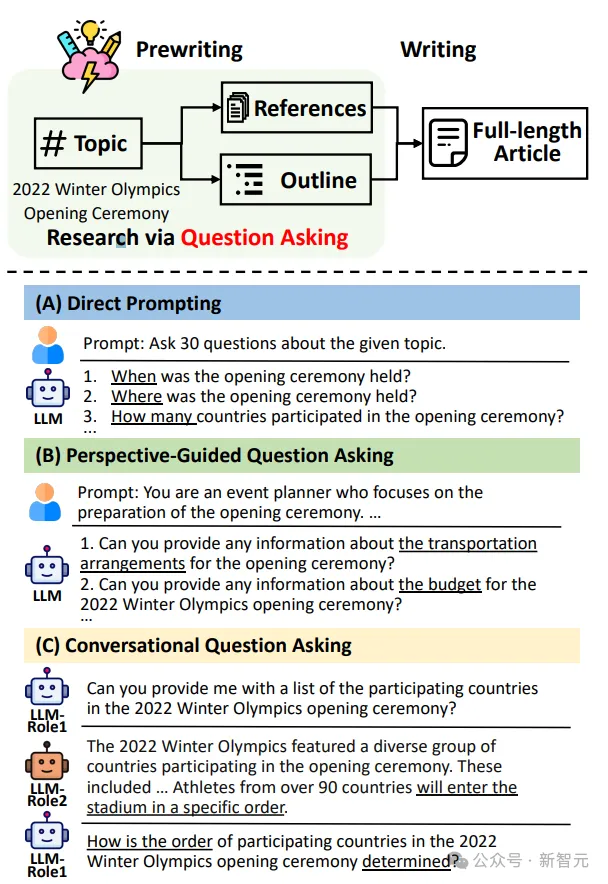

Long-form writing (such as a Wikipedia article) traditionally requires extensive manual preparation, including gathering material and building an outline, yet current generative writing methods often skip these steps.

As a result, generated articles often cover too few perspectives and lack substance.

STORM addresses this by having multiple LLM roles ask and answer each other's questions, making the article more detailed and comprehensive.

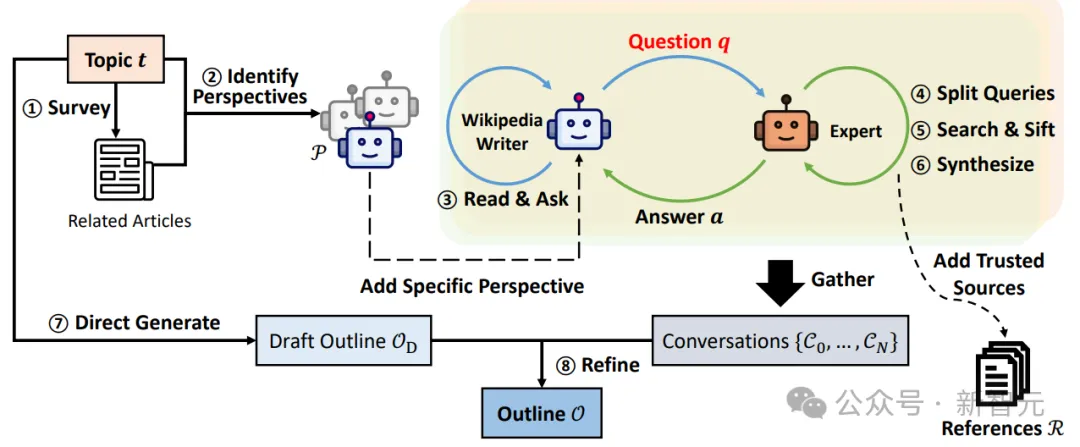

As the figure above illustrates, the STORM system works in three phases:

- Perspective discovery: to cover different aspects of the topic, the system simulates multiple roles (e.g., experts, ordinary users) and generates perspective-guided questions. Figure (A) shows the limited effect of simple question generation, while Figure (B) shows how perspective-guided questions increase question diversity.

- Outline building: the system drafts a preliminary outline from the model's built-in knowledge, then asks questions through simulated conversations (Figure (C)) and uses what it learns to refine the outline and give it more depth.

- Article writing: the article is generated section by section following the outline, with retrieved information used to ground the content and add citations.
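To make the three phases concrete, here is a minimal Python sketch of how they could fit together. It is not STORM's actual code: `toy_llm` and `toy_search` are hypothetical deterministic stubs standing in for a real LLM (such as GPT-4o mini) and a search engine (such as Bing), and all function names and prompt strings are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical interfaces: an LLM maps a prompt to text; a search maps a query to snippets.
LLM = Callable[[str], str]
Search = Callable[[str], list[str]]


@dataclass
class Article:
    topic: str
    outline: list[str]
    sections: dict[str, str] = field(default_factory=dict)


def perspective_guided_questions(topic: str, roles: list[str], llm: LLM) -> list[str]:
    """Phase 1: ask one question per simulated role instead of one generic prompt."""
    return [llm(f"Role: {role}. Ask one question about {topic}.") for role in roles]


def build_outline(topic: str, notes: list[str], llm: LLM) -> list[str]:
    """Phase 2: draft an outline from built-in knowledge, then refine it with research notes."""
    draft = llm(f"Outline for {topic}")
    refined = llm(f"Refine outline [{draft}] using notes {notes}")
    return [heading.strip() for heading in refined.split(";") if heading.strip()]


def write_article(topic: str, outline: list[str], llm: LLM, search: Search) -> Article:
    """Phase 3: write each section from its own retrieved sources and attach numbered citations."""
    article = Article(topic, outline)
    for heading in outline:
        sources = search(f"{topic} {heading}")
        citations = "".join(f"[{i}]" for i in range(1, len(sources) + 1))
        body = llm(f"Write {heading} from {sources}")
        article.sections[heading] = f"{body} {citations}"
    return article


# Toy stubs (hypothetical) so the sketch runs end to end.
def toy_llm(prompt: str) -> str:
    if prompt.startswith("Role: "):
        role = prompt.removeprefix("Role: ").split(".")[0]
        return f"What matters most to a {role}?"
    if prompt.startswith(("Outline for", "Refine outline")):
        return "Background; Design; Evaluation"
    return "Section text."


def toy_search(query: str) -> list[str]:
    return [f"snippet about {query}"]


topic = "STORM"
questions = perspective_guided_questions(topic, ["domain expert", "regular user"], toy_llm)
notes = [snippet for q in questions for snippet in toy_search(q)]
outline = build_outline(topic, notes, toy_llm)
article = write_article(topic, outline, toy_llm, toy_search)
print(outline)  # ['Background', 'Design', 'Evaluation']
print(article.sections["Design"])  # Section text. [1]
```

Keeping the LLM and search as plain callables makes each phase easy to swap or test on its own; a real system would replace the stubs with model and search-engine clients.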

Starting from a given topic, STORM identifies perspectives that cover it by surveying Wikipedia articles on related topics (steps 1-2).

It then simulates conversations in which a Wikipedia writer asks questions from a given perspective and an expert answers them based on trustworthy online sources (steps 3-6).

Finally, drawing on the LLM's inherent knowledge and the conversations collected from the different perspectives, it curates a writing outline (steps 7-8).
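Steps 3-6 can be pictured as a loop: the writer asks, the expert searches and answers only from sources that pass a reliability filter, and each exchange is appended to the conversation history. The Python sketch below is hypothetical throughout: `toy_writer`, `toy_search`, and the `BLOCKED_DOMAINS` set stand in for the LLM writer, the search engine, and whatever source-reliability rules a real system applies, which are likely more involved than a domain blocklist.

```python
from typing import Callable
from urllib.parse import urlparse

# Hypothetical interfaces for the two simulated roles.
Writer = Callable[[str, list[tuple[str, str]]], str]  # (perspective, history) -> next question, "" to stop
Search = Callable[[str], list[tuple[str, str]]]       # query -> [(url, snippet), ...]

BLOCKED_DOMAINS = {"spam.example"}  # hypothetical stand-in for source-reliability rules


def expert_answer(question: str, search: Search) -> str:
    """Answer using only snippets from domains that are not blocked, citing each URL."""
    kept = [(url, text) for url, text in search(question)
            if urlparse(url).netloc not in BLOCKED_DOMAINS]
    if not kept:
        return "No reliable source found."
    return " ".join(f"{text} [{url}]" for url, text in kept)


def simulate_conversation(perspective: str, writer: Writer, search: Search,
                          max_turns: int = 3) -> list[tuple[str, str]]:
    """Alternate writer questions and grounded expert answers for up to max_turns rounds."""
    history: list[tuple[str, str]] = []
    for _ in range(max_turns):
        question = writer(perspective, history)
        if not question:  # the writer has nothing left to ask
            break
        history.append((question, expert_answer(question, search)))
    return history


# Toy stubs (hypothetical) so the sketch runs.
SCRIPT = {"historian": ["When was it introduced?", "Who built it?"]}


def toy_writer(perspective: str, history: list[tuple[str, str]]) -> str:
    questions = SCRIPT.get(perspective, [])
    return questions[len(history)] if len(history) < len(questions) else ""


def toy_search(query: str) -> list[tuple[str, str]]:
    return [("https://en.wikipedia.org/wiki/Example", f"Fact for: {query}"),
            ("https://spam.example/ad", "Buy now!")]


research = {p: simulate_conversation(p, toy_writer, toy_search) for p in ["historian", "engineer"]}
for question, answer in research["historian"]:
    print(question, "->", answer)
```

The resulting `research` dictionary, one conversation per perspective, is the kind of material steps 7-8 would then condense into an outline.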

The STORM system automates the entire writing process

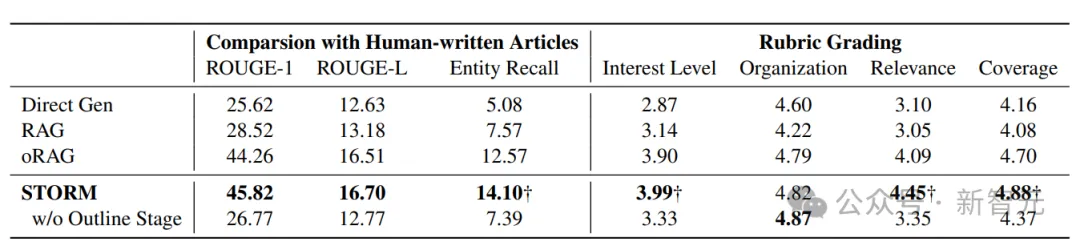

Because earlier studies used different settings and did not use large language models (LLMs), direct comparison with them is difficult.

The researchers therefore compared STORM against three LLM-based baselines:

1. Direct Gen: directly prompts the LLM to generate an outline, which is then used to generate the full article.

2. RAG: a retrieval-augmented generation baseline that searches with the topic and uses the search results, together with the topic, to generate an outline or the entire article.

3. oRAG (Outline-driven RAG): identical to RAG in outline creation, but additionally retrieves information using each section heading and generates the article section by section.
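The three baselines differ mainly in when and how often retrieval happens. The following Python sketch uses hypothetical `toy_llm` and `toy_search` stubs and illustrative prompts, and counts search calls to make that difference visible; it is an illustration of the descriptions above, not the researchers' baseline code.

```python
from typing import Callable

# Hypothetical interfaces: an LLM maps a prompt to text; a search maps a query to documents.
LLM = Callable[[str], str]
Search = Callable[[str], list[str]]


def outline_from(text: str) -> list[str]:
    """Split a 'Heading; Heading' response into a list of section headings."""
    return [h.strip() for h in text.split(";") if h.strip()]


def direct_gen(topic: str, llm: LLM) -> dict[str, str]:
    """Direct Gen: outline and sections come from the model's own knowledge only."""
    outline = outline_from(llm(f"Outline: {topic}"))
    return {h: llm(f"Write {h} on {topic}") for h in outline}


def rag(topic: str, llm: LLM, search: Search) -> dict[str, str]:
    """RAG: one topic-level search feeds both the outline and every section."""
    context = search(topic)
    outline = outline_from(llm(f"Outline: {topic} {context}"))
    return {h: llm(f"Write {h} on {topic} {context}") for h in outline}


def orag(topic: str, llm: LLM, search: Search) -> dict[str, str]:
    """oRAG: same outline step as RAG, then a fresh search per section heading."""
    context = search(topic)
    outline = outline_from(llm(f"Outline: {topic} {context}"))
    sections = {}
    for h in outline:
        section_context = search(f"{topic} {h}")
        sections[h] = llm(f"Write {h} on {topic} {section_context}")
    return sections


# Toy stubs (hypothetical): the LLM echoes writing prompts, the search logs its queries.
queries: list[str] = []


def toy_llm(prompt: str) -> str:
    return "Intro; History" if prompt.startswith("Outline:") else prompt


def toy_search(query: str) -> list[str]:
    queries.append(query)
    return [f"doc:{query}"]


runs = {
    "Direct Gen": lambda: direct_gen("STORM", toy_llm),
    "RAG": lambda: rag("STORM", toy_llm, toy_search),
    "oRAG": lambda: orag("STORM", toy_llm, toy_search),
}
for name, run in runs.items():
    queries.clear()
    run()
    print(name, "search calls:", len(queries))
```

With a two-section outline this prints 0, 1, and 3 search calls respectively: oRAG pays for one extra retrieval per section in exchange for section-specific evidence.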

As the paper's results table shows, STORM-generated articles hold up against human-level writing and outperform articles produced by the other LLM-based approaches, including oRAG, the strongest baseline.

That said, STORM-generated articles still lag behind carefully revised, human-written articles in neutrality and verifiability.

Although STORM discovers different perspectives when researching a topic, the information it gathers may still be biased toward mainstream Internet sources and may include promotional content.

Another limitation is that, although the researchers focused on generating Wikipedia-like articles from scratch, they considered only free-form text, whereas high-quality, human-written Wikipedia articles often contain structured data and multimodal content.

The key remaining challenge for LLM-based article generation is therefore producing high-quality, fact-grounded articles with structured and multimodal content.

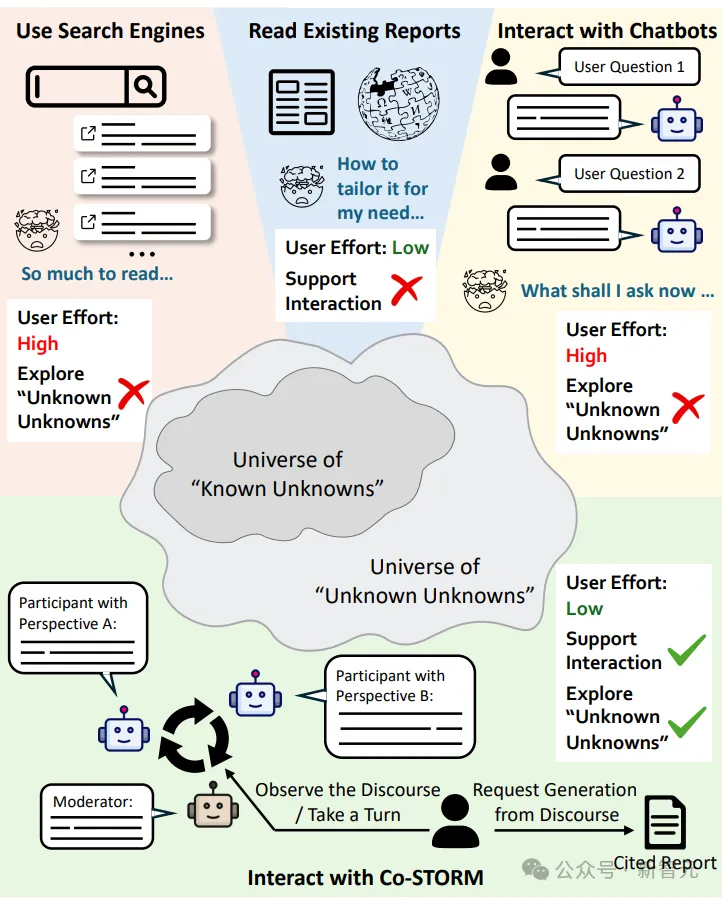

In many learning tasks, collecting and integrating information tends to miss things because of personal or search-engine biases, so information blind spots (i.e., information needs the learner has not yet recognized) go unreached.

Co-STORM, proposed by the research team in the paper below, aims to address this and substantially improve learning efficiency.

Paper link: https://www.arxiv.org/abs/2408.15232

In study and work, search engines make us wade through a lot of redundant information, and when chatting with chatbots we often don't know how to ask precise questions. Neither approach reaches our "information blind spots", and both carry a considerable cognitive burden.

What about reading existing reports? That lowers the cognitive burden, but reports are not interactive and don't let us dig deeper.

Unlike these approaches, Co-STORM's agents can ask questions on the user's behalf, gather new information in many directions, and explore the user's "information blind spots". The information is then organized in a dynamic mind map, from which a comprehensive report is finally generated.

As shown below, Co-STORM consists of the following modules:

- Multi-agent dialogue: a simulated dialogue between "experts" and a "facilitator" explores various aspects of the topic.

- Dynamic mind map: tracks the conversation in real time and organizes information hierarchically to help users follow along and engage.

- Report generation: the system produces a well-cited, informative summary report based on the mind map.
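The dynamic mind map can be thought of as a concept tree that grows as the conversation proceeds, with the report later generated from it. The Python sketch below illustrates only that idea. The word-overlap rule used to place new information is a deliberately crude stand-in, not the method from the paper, and `Node`, `insert`, `render`, and `report` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Iterator


@dataclass
class Node:
    """One concept in the mind map, holding the dialogue snippets filed under it."""
    name: str
    children: list["Node"] = field(default_factory=list)
    snippets: list[str] = field(default_factory=list)

    def walk(self) -> Iterator["Node"]:
        yield self
        for child in self.children:
            yield from child.walk()


def overlap(a: str, b: str) -> int:
    """Number of shared lowercase words (a crude, hypothetical relevance score)."""
    return len(set(a.lower().split()) & set(b.lower().split()))


def insert(root: Node, snippet: str) -> Node:
    """File a snippet under the best-matching concept; ties go to the earliest node, root first."""
    best = max(root.walk(), key=lambda node: overlap(node.name, snippet))
    best.snippets.append(snippet)
    return best


def render(node: Node, depth: int = 0) -> str:
    """Indented outline view with a snippet count per concept."""
    lines = ["  " * depth + f"- {node.name} ({len(node.snippets)})"]
    lines += [render(child, depth + 1) for child in node.children]
    return "\n".join(lines)


def report(node: Node, level: int = 1) -> str:
    """Turn the tree into a report skeleton: one heading per concept, its snippets as text."""
    parts = ["#" * level + " " + node.name] + node.snippets
    parts += [report(child, level + 1) for child in node.children]
    return "\n".join(parts)


root = Node("Solar energy", [Node("Photovoltaic cells"), Node("Energy storage batteries")])
for utterance in ["Perovskite photovoltaic cells keep improving",
                  "Grid batteries smooth out supply",
                  "Policy incentives drive adoption"]:
    insert(root, utterance)
print(render(root))
```

Running it files each utterance under the matching concept (the policy remark has no match and stays at the root), and `report(root)` then walks the same tree to lay out one section per concept.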

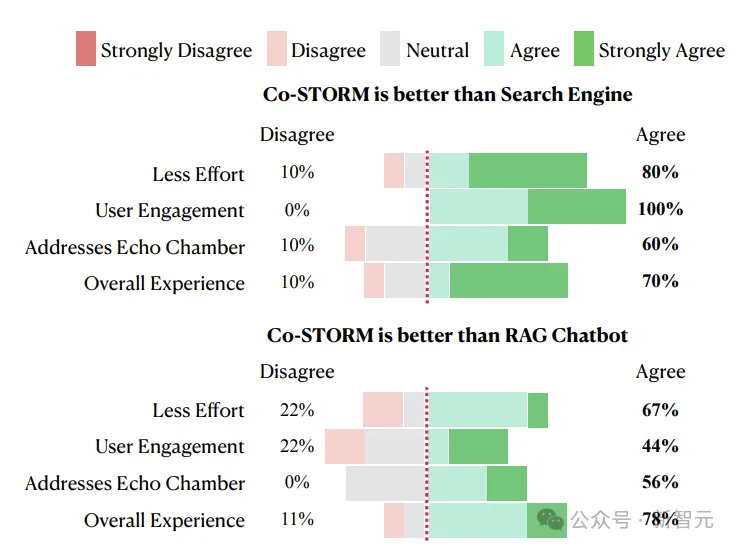

To capture the real user experience, the researchers ran a human evaluation with 20 volunteers, comparing Co-STORM against traditional search engines and RAG chatbots. The results show that:

- Co-STORM significantly improves the depth and breadth of the information obtained.

- Users find it effective at guiding them to explore their blind spots.

- 70% of users prefer Co-STORM, saying it significantly reduces their cognitive burden.

- Users especially value the dynamic mind map for tracking and understanding information.

For now, STORM & Co-STORM supports only English interaction; the team may extend it to support multiple languages in the future.

Finally, as user TSLA put it: "We are living in an extraordinary era. Today, not only is all information within reach, but even the way you access it can be tailored completely to your level, making it possible to learn anything."

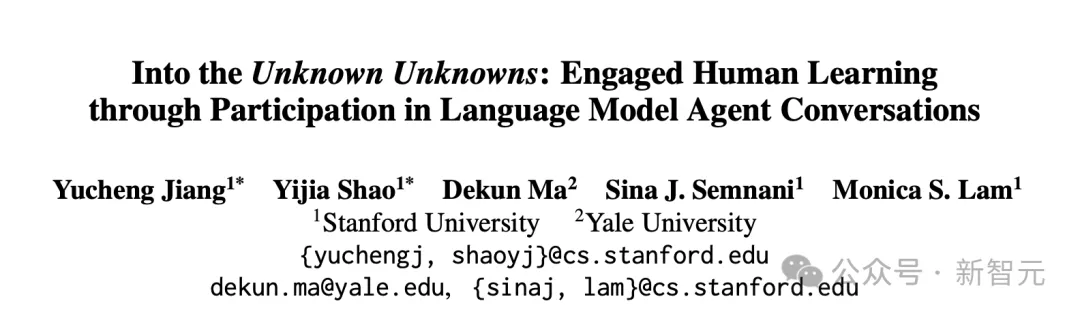

About the main authors

Yucheng Jiang is a master's student in computer science at Stanford University.

His research aims to improve learning, decision making, and productivity by creating systems that work seamlessly with users.

Yijia Shao is a second-year PhD student in Stanford University's Natural Language Processing (NLP) lab, advised by Professor Diyi Yang.

She was previously an undergraduate at Yuanpei College, Peking University, where she began research in machine learning and natural language processing through her collaboration with Professor Bing Liu.

https://x.com/dr_cintas/status/1874123834070360343

https://storm.genie.stanford.edu/
