#News ·2025-01-03

According to a report on January 2, the Institute for AI Industry Research (AIR) at Tsinghua University released a paper on December 24, 2024, introducing AutoDroid-V2, an AI model that uses a small language model on mobile devices to significantly improve automation controlled through natural language.

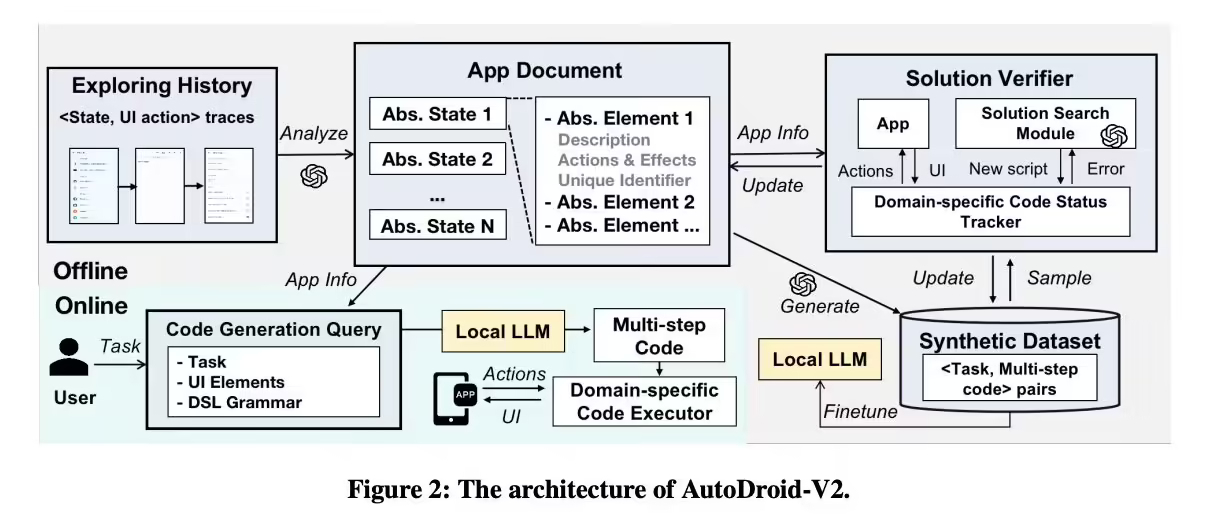

The system takes a script-based approach, using the coding capability of an on-device small language model (SLM) to execute user instructions efficiently. Compared with traditional approaches that rely on cloud-based large language models (LLMs), AutoDroid-V2 offers significant advantages in efficiency, privacy, and security.

Large language models (LLMs) and vision-language models (VLMs) have transformed the automation of mobile device control through natural-language commands, offering solutions for complex user tasks.

Mainstream approaches to automated device control follow the "step-wise GUI agent" paradigm: at each GUI state the agent queries the LLM, which makes a dynamic decision and reflects on it, and the agent keeps working on the user's task and observing the GUI state until the task is complete.

However, this approach relies heavily on cloud-based models. Sharing personal GUI pages with them poses privacy and security risks, and the approach also incurs heavy data traffic on the user side and high centralized serving costs on the server side, all of which hinder large-scale deployment of GUI agents.

Unlike traditional step-by-step operations, AutoDroid-V2 generates multi-step scripts based on user instructions to perform multiple GUI operations at once, significantly reducing query frequency and resource consumption.

Using a small language model on the device for script generation and execution avoids reliance on a powerful cloud model, effectively protects user privacy and data security, and reduces server-side costs.
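The difference in query frequency can be illustrated with a minimal sketch. It is not AutoDroid-V2's code: `CountingModel`, `run_stepwise`, and `run_scripted` are hypothetical names, and the "model" only counts how many times it is queried rather than running any real inference.

```python
from dataclasses import dataclass


@dataclass
class CountingModel:
    """Hypothetical stand-in for a language model; it only counts queries."""
    queries: int = 0

    def next_action(self, task: str, gui_state: str) -> str:
        # Step-wise mode: the model is queried once per GUI state.
        self.queries += 1
        return f"action for {gui_state}"

    def full_script(self, task: str, screens: list[str]) -> list[str]:
        # Script mode: a single query returns every step of the task.
        self.queries += 1
        return [f"action for {screen}" for screen in screens]


def run_stepwise(model: CountingModel, task: str, screens: list[str]) -> list[str]:
    """Ask the model for one action at each screen the task passes through."""
    return [model.next_action(task, screen) for screen in screens]


def run_scripted(model: CountingModel, task: str, screens: list[str]) -> list[str]:
    """Ask the model once for the whole multi-step script."""
    return model.full_script(task, screens)
```

For a task that passes through three screens, the step-wise runner issues three queries and the scripted runner issues one, while both produce the same list of actions in this toy setup.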

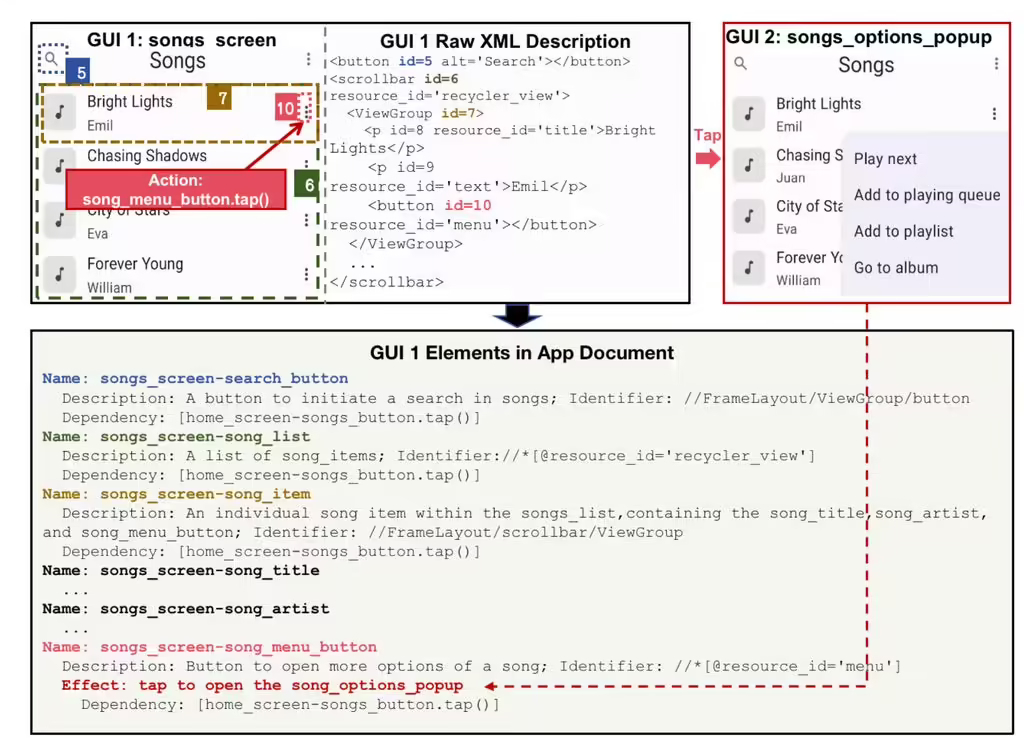

During the offline phase, the system builds a document for each application through AI-guided GUI state compression, automatic generation of element XPaths, and GUI dependency analysis, laying the foundation for script generation.
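As a rough illustration of what automatic XPath generation for a GUI element can look like, the sketch below derives a path for an element in a simplified XML view hierarchy using Python's standard `xml.etree.ElementTree`. The tree layout, the `id` attribute, and the `build_xpath` helper are assumptions made for this example; the article does not describe the method AutoDroid-V2 actually uses.

```python
import xml.etree.ElementTree as ET


def build_xpath(root, target):
    """Return an XPath (ElementTree subset) that selects `target` under `root`."""
    if target is root:
        return "."
    # Prefer a short attribute-based path when the id is unique in the tree.
    elem_id = target.get("id")
    if elem_id:
        candidate = f".//{target.tag}[@id='{elem_id}']"
        if root.findall(candidate) == [target]:
            return candidate
    # Otherwise fall back to a positional path from the root.
    parent_of = {child: parent for parent in root.iter() for child in parent}
    steps = []
    node = target
    while node is not root:
        parent = parent_of[node]
        same_tag = [c for c in parent if c.tag == node.tag]
        # XPath positions are 1-based among siblings with the same tag.
        steps.append(f"{node.tag}[{same_tag.index(node) + 1}]")
        node = parent
    return "./" + "/".join(reversed(steps))


# Hypothetical, simplified GUI hierarchy used only for this example.
GUI_XML = """
<screen>
  <layout>
    <button id="save" text="Save"/>
    <button text="Cancel"/>
  </layout>
  <layout>
    <button text="Cancel"/>
  </layout>
</screen>
"""
root = ET.fromstring(GUI_XML)
save_path = build_xpath(root, root[0][0])    # element with a unique id
cancel_path = build_xpath(root, root[1][0])  # element without an id
```

Here the Save button resolves to an id-based path, while the second Cancel button, which has no id, falls back to a positional path.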

At runtime, when a user submits a task request, the on-device language model generates a multi-step script, which a domain-specific interpreter executes to ensure reliable and efficient operation.
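The idea of executing a generated script through a dedicated interpreter can be sketched as follows. The script format (tuples of operation, XPath, and optional argument), the `tap` and `set_text` operations, and the `run_script` and `ScriptError` names are all hypothetical; this is not AutoDroid-V2's script language. The sketch also works on a single static XML tree, whereas a real GUI changes between steps.

```python
import xml.etree.ElementTree as ET


class ScriptError(Exception):
    """Raised when a script step cannot be executed."""


def run_script(script, gui_root):
    """Execute each step against the GUI tree and return a log of actions."""
    log = []
    for step_no, (op, xpath, *args) in enumerate(script, start=1):
        # Resolve the target first so a bad step fails with a clear error.
        element = gui_root.find(xpath)
        if element is None:
            raise ScriptError(f"step {step_no}: no element matches {xpath!r}")
        if op == "tap":
            log.append(f"tap {element.get('text')}")
        elif op == "set_text":
            element.set("text", args[0])
            log.append(f"set_text {args[0]}")
        else:
            raise ScriptError(f"step {step_no}: unknown operation {op!r}")
    return log


# Hypothetical screen and generated script, for illustration only.
gui = ET.fromstring(
    '<screen><input id="title" text=""/><button id="save" text="Save"/></screen>'
)
script = [
    ("set_text", ".//input[@id='title']", "Groceries"),
    ("tap", ".//button[@id='save']"),
]
log = run_script(script, gui)
```

Checking that each XPath resolves before acting is one simple way an interpreter can stop on a faulty step instead of acting on the wrong element.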

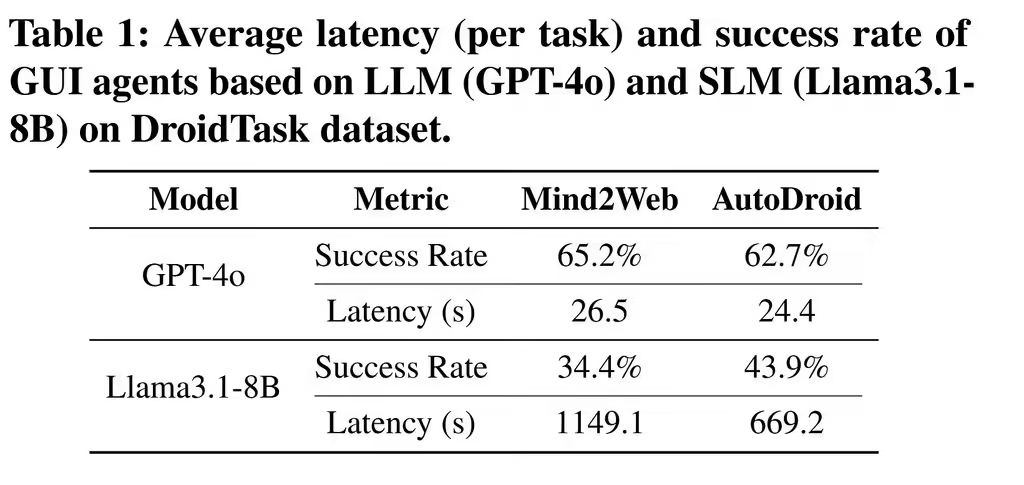

In benchmark tests covering 226 tasks across 23 mobile apps, AutoDroid-V2 improved the task completion rate by 10.5% to 51.7% over baselines such as AutoDroid, SeeClick, CogAgent, and Mind2Web.

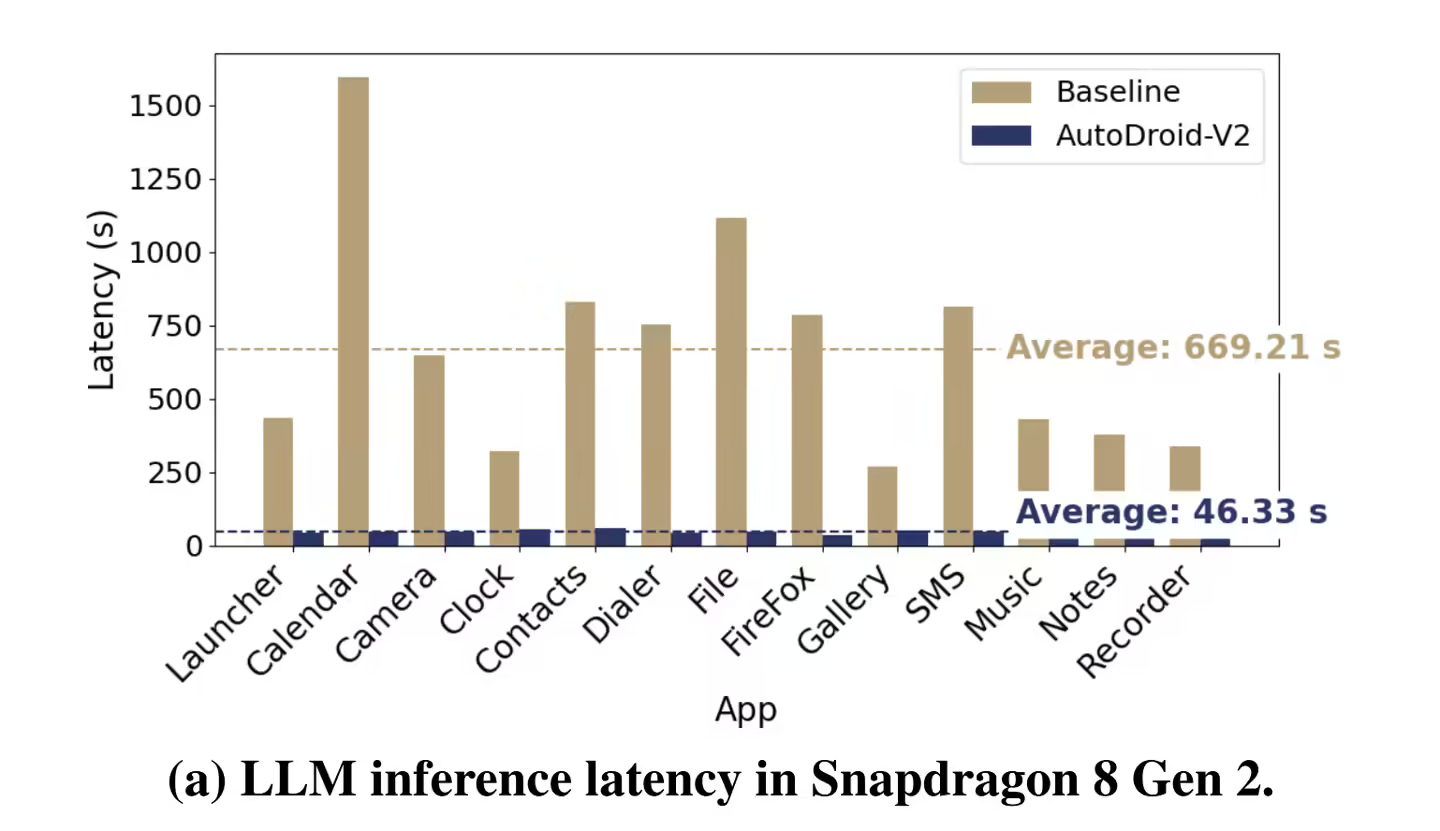

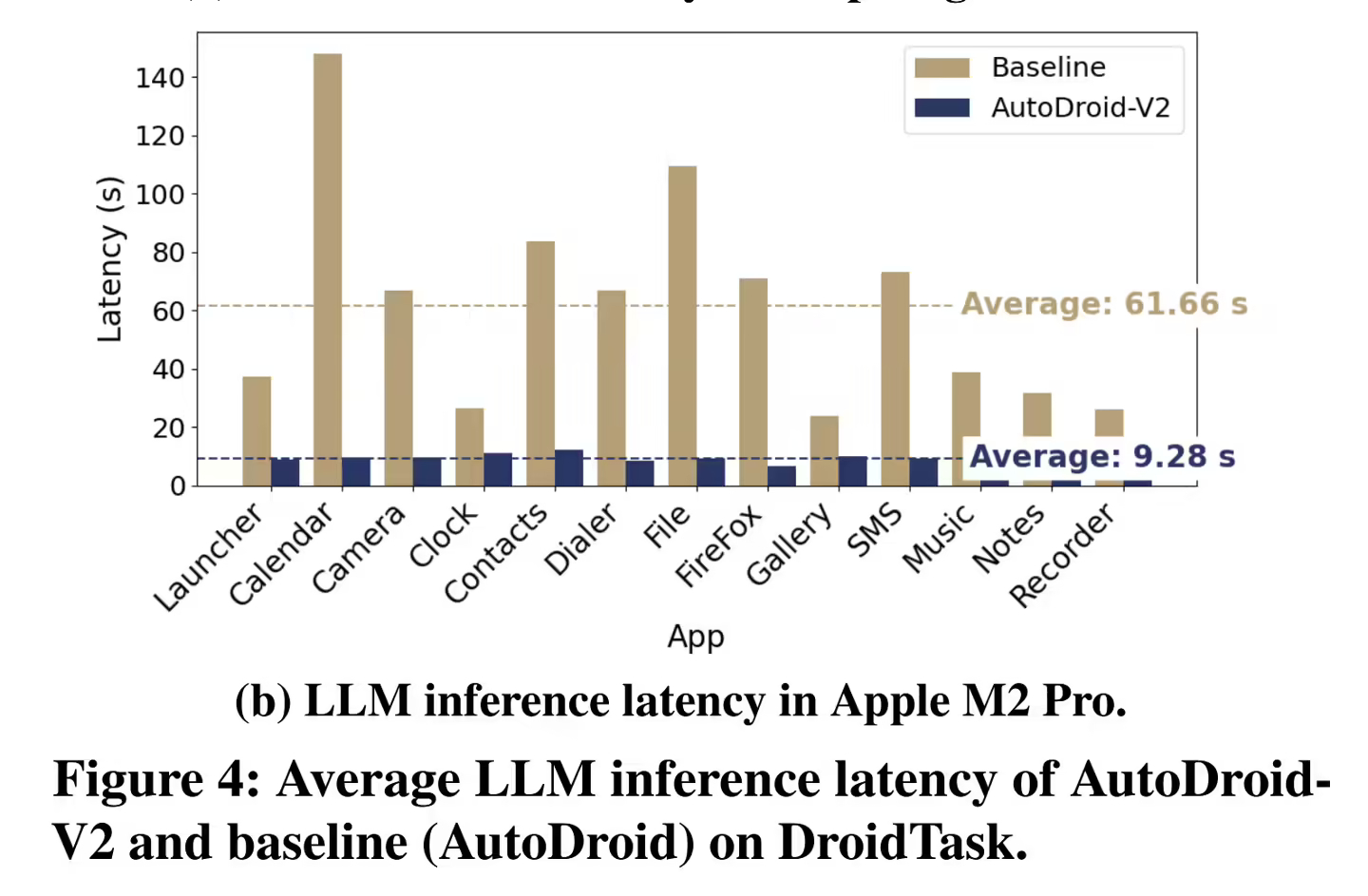

In terms of resource consumption, input and output token consumption fall to 1/43.5 and 1/5.8 of the baseline levels, respectively, and LLM inference latency falls to between 1/5.7 and 1/13.4 of the baseline.

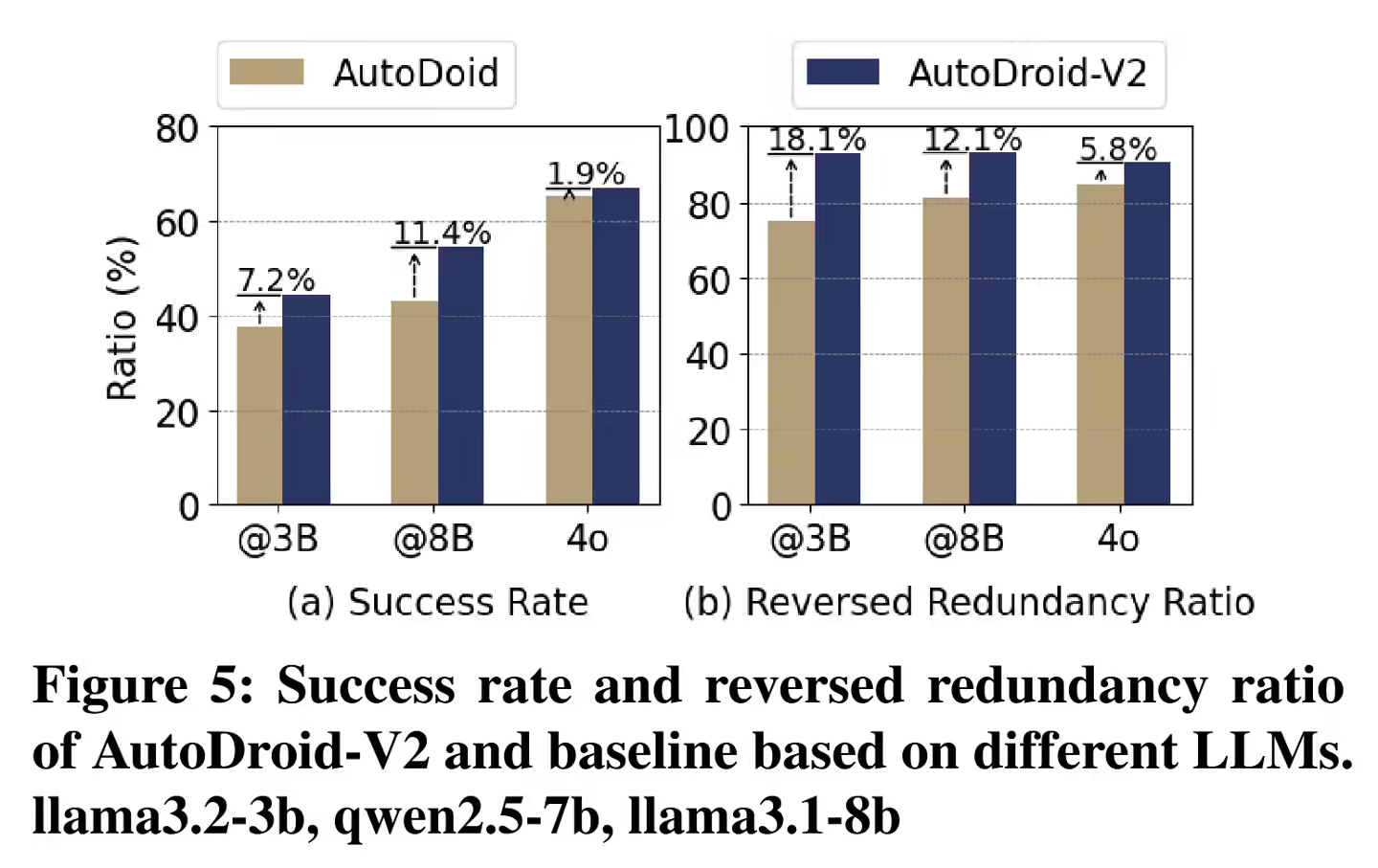

In cross-LLM tests, Llama 3.2-3B, Qwen 2.5-7B, and Llama 3.1-8B performed consistently, with success rates of 44.6% to 54.4% and reversed redundancy ratios of 90.5% to 93.0%.

