#News ·2025-01-09

Enabling robots to plan future actions from task instructions and real-time observations has long been a core scientific problem in embodied intelligence. Achieving this goal, however, is constrained by two key challenges:

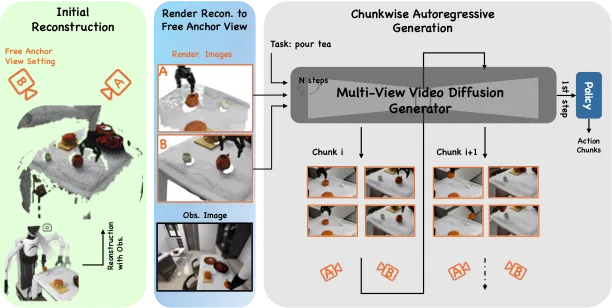

To address these challenges, the Zhiyuan Robotics team proposed the EnerVerse architecture. EnerVerse uses an autoregressive diffusion model to generate the future embodied space, and uses that generated space to guide the robot through complex tasks. Rather than simply applying a video generation model, as existing methods do, EnerVerse is built around the requirements of embodied tasks and introduces a Sparse Memory mechanism and Free Anchor Views (FAV). Together, these improve 4D generation capability and deliver a significant breakthrough in motion planning performance. Experimental results show that EnerVerse not only generates future space with high quality but also achieves state-of-the-art (SOTA) performance on robot motion planning tasks.

The project homepage and paper are now online, and the model and related datasets will be open-sourced soon:

The core of robot motion planning is to predict and carry out a series of complex future operations based on real-time observations and task instructions. However, existing methods have the following limitations when handling complex embodied tasks:

EnerVerse addresses these bottlenecks with a chunk-wise autoregressive diffusion framework, combined with a sparse memory mechanism and the Free Anchor View (FAV) approach.

Chunk-by-chunk diffusion generation: Next Chunk Diffusion

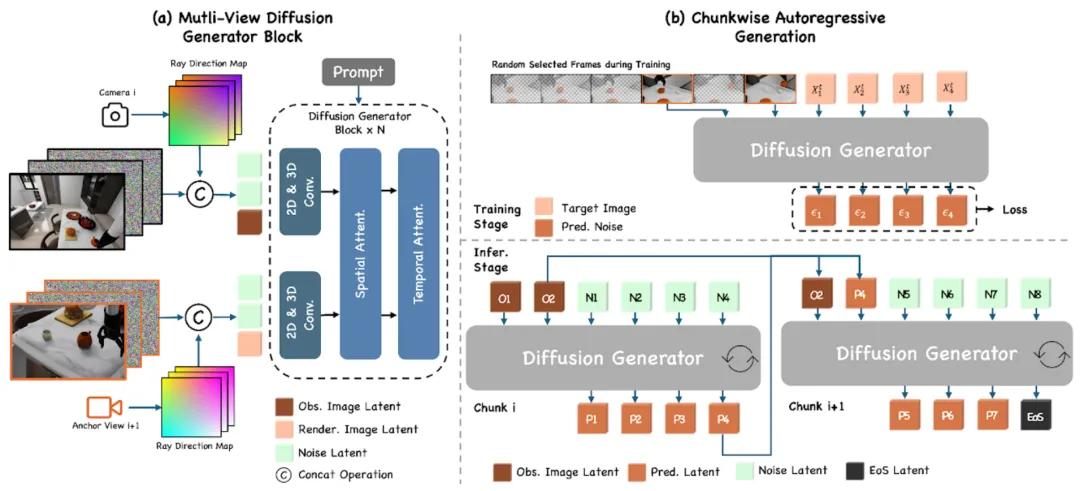

EnerVerse uses an autoregressive diffusion model that generates the future embodied space chunk by chunk, and uses this progressively generated space to guide robot motion planning. Key designs include:

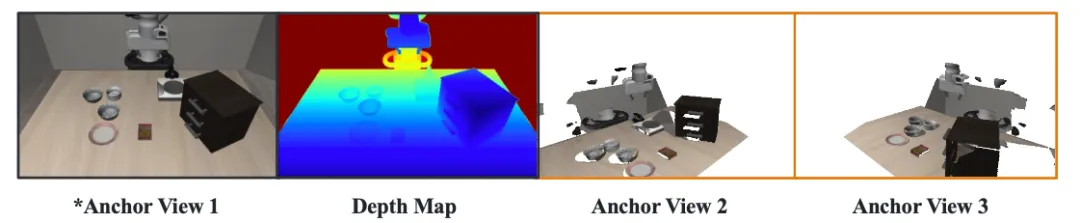
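The chunk-by-chunk loop described above can be illustrated with a minimal Python sketch. It shows only the autoregressive structure: each new chunk of frames is generated from a context of earlier frames, appended to the sequence, and then serves as context for the next chunk. The frame type (a single float), the `generate_chunk` placeholder (a linear extrapolator standing in for EnerVerse's video diffusion model), and the parameters `chunk_len` and `context_len` are all hypothetical and not taken from the EnerVerse code.

```python
from typing import Callable, List, Sequence

# Hypothetical toy frame: a real system would use image or latent tensors.
Frame = float
ChunkGenerator = Callable[[Sequence[Frame], int], List[Frame]]


def generate_chunk(context: Sequence[Frame], chunk_len: int) -> List[Frame]:
    """Placeholder for a diffusion model that produces a whole chunk at once.

    Here it simply extrapolates the last observed velocity so the example runs.
    """
    last = context[-1]
    velocity = context[-1] - context[-2] if len(context) >= 2 else 0.0
    return [last + velocity * (k + 1) for k in range(chunk_len)]


def rollout(
    observed: Sequence[Frame],
    num_chunks: int,
    chunk_len: int,
    context_len: int,
    generator: ChunkGenerator = generate_chunk,
) -> List[Frame]:
    """Autoregressively generate `num_chunks` chunks of `chunk_len` frames each."""
    frames = list(observed)
    for _ in range(num_chunks):
        # Condition on the most recent frames; a sparse memory would select differently.
        context = frames[-context_len:]
        frames.extend(generator(context, chunk_len))
    return frames


if __name__ == "__main__":
    print(rollout([0.0, 1.0], num_chunks=2, chunk_len=3, context_len=2))
    # → [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
```

Passing the generator in as a parameter keeps the chunking logic separate from whatever model produces each chunk.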

Flexible 4D generation: Free Anchor View (FAV)

Embodied manipulation often takes place in heavily occluded environments and calls for multiple viewing angles. To meet these needs, EnerVerse proposes the Free Anchor View (FAV) method to represent 4D space flexibly. Its core advantages include:

Efficient action planning: Diffusion Policy Head

By attaching a Diffusion Policy Head downstream of the generation network, EnerVerse connects future space generation and robot action planning into a single pipeline. Key designs include:

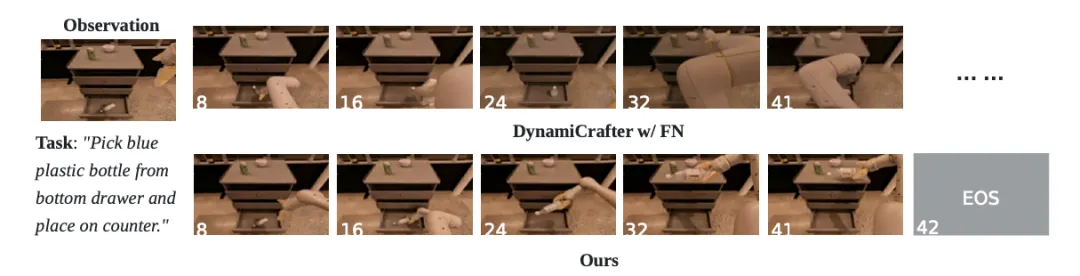
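To make the idea of a diffusion policy head concrete, here is a minimal DDIM-style deterministic sampler (eta = 0) for a single scalar action, conditioned on a feature taken from the generated future space. The article does not say which sampler or schedule EnerVerse's head uses, so everything model-specific here is hypothetical: the linear noise schedule, the scalar action, and especially `make_oracle`, which stands in for a trained network by returning the exact noise for a made-up target action (twice the conditioning feature). The sketch shows the sampling procedure only, not EnerVerse's architecture.

```python
import math
import random
from typing import Callable, List

# eps_model(x_t, t, cond) -> predicted noise; a trained network in practice.
NoisePredictor = Callable[[float, int, float], float]


def linear_alpha_bars(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> List[float]:
    """Cumulative products alpha_bar_t for t = 0..num_steps, with alpha_bar_0 = 1."""
    alpha_bars = [1.0]
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / max(num_steps - 1, 1)
        alpha_bars.append(alpha_bars[-1] * (1.0 - beta))
    return alpha_bars


def ddim_sample(eps_model: NoisePredictor, cond: float, num_steps: int = 50, seed: int = 0) -> float:
    """Deterministic DDIM sampling of one scalar action given a conditioning feature."""
    alpha_bars = linear_alpha_bars(num_steps)
    x = random.Random(seed).gauss(0.0, 1.0)  # start from pure noise x_T
    for t in range(num_steps, 0, -1):
        ab_t, ab_prev = alpha_bars[t], alpha_bars[t - 1]
        eps = eps_model(x, t, cond)
        x0_hat = (x - math.sqrt(1.0 - ab_t) * eps) / math.sqrt(ab_t)  # predicted clean action
        x = math.sqrt(ab_prev) * x0_hat + math.sqrt(1.0 - ab_prev) * eps  # step to t - 1
    return x


def make_oracle(alpha_bars: List[float]) -> NoisePredictor:
    """Hypothetical stand-in for a trained head whose target action is 2 * cond.

    `alpha_bars` must be the same schedule that ddim_sample uses.
    """
    def predict(x_t: float, t: int, cond: float) -> float:
        target = 2.0 * cond
        return (x_t - math.sqrt(alpha_bars[t]) * target) / math.sqrt(1.0 - alpha_bars[t])
    return predict


schedule = linear_alpha_bars(50)
action = ddim_sample(make_oracle(schedule), cond=0.3, num_steps=50)
print(round(action, 6))  # → 0.6
```

With a perfect noise predictor, DDIM recovers the target exactly, which makes the sketch easy to check; a learned predictor would only approximate it.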

1. Video generation performance

EnerVerse performs strongly in video generation for both short- and long-horizon tasks:

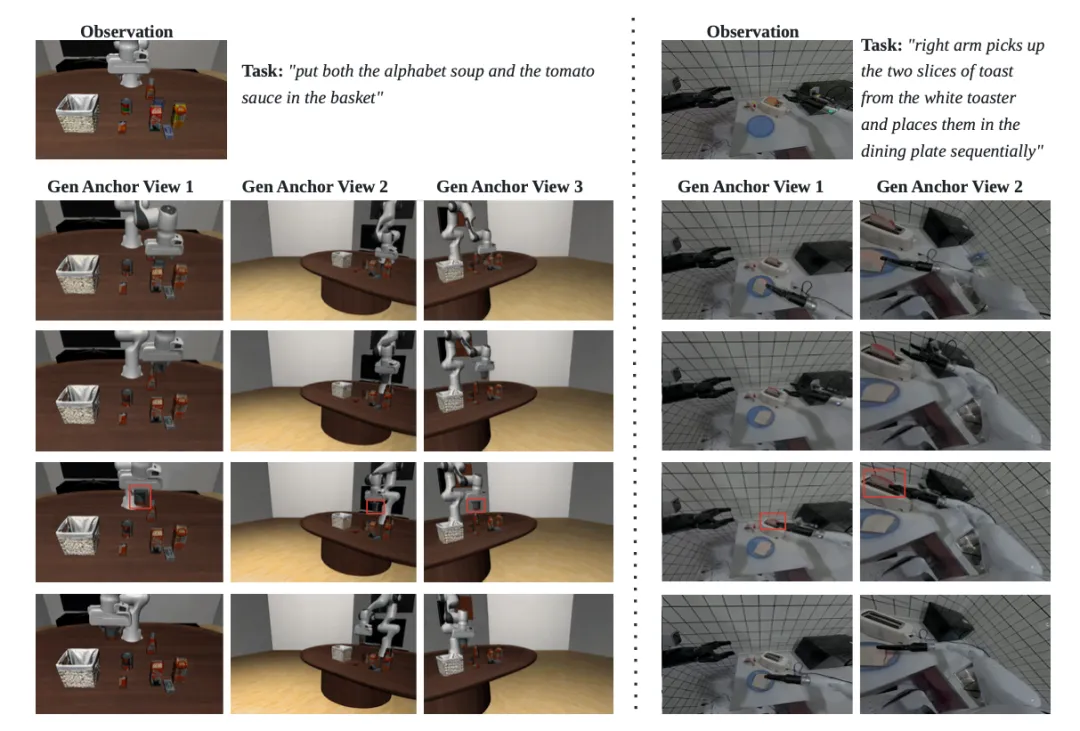

The quality of EnerVerse's multi-view video generation has also been verified in LIBERO simulation scenes and in real-world AgiBot World scenes.

The corresponding generated videos are shown below:

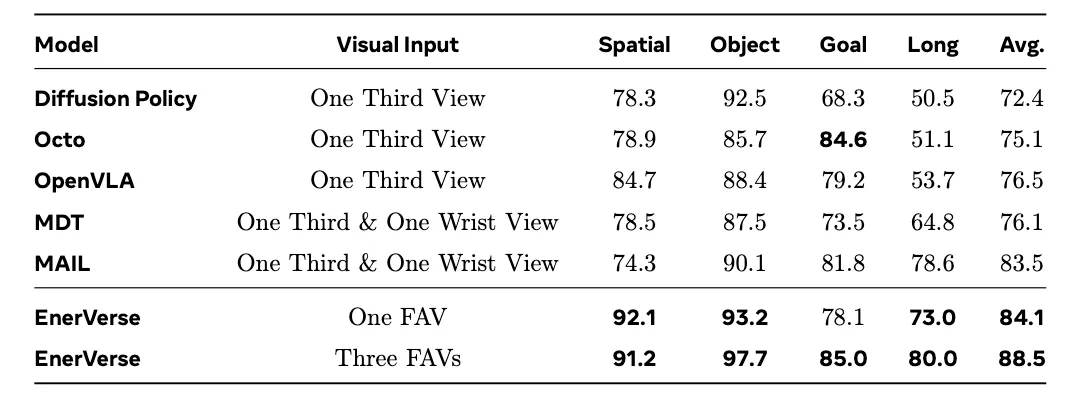

2. Motion planning performance

On the LIBERO benchmark, EnerVerse shows significant advantages in robot motion planning tasks:

Notably, every task in LIBERO-Long requires the robot to perform multiple steps, as the following video shows:

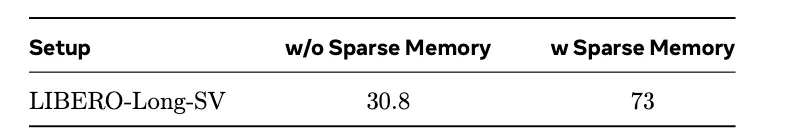

3. Ablation studies and training strategy analysis

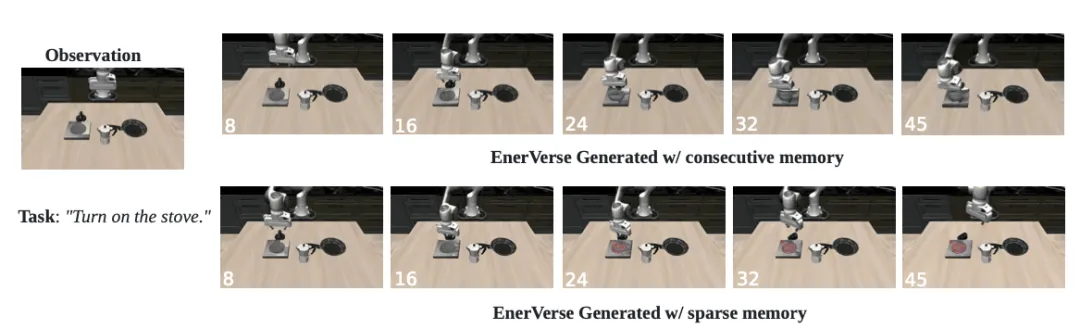

Sparse memory mechanism: Ablation experiments show that sparse memory is crucial both for the logical coherence of long-horizon sequence generation and for the accuracy of long-horizon action prediction.
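One way to picture a sparse memory is as a context selector: instead of feeding the model every past frame, keep the most recent frames densely and only a thinned-out subset of older ones, so the context stays short as a long-horizon rollout grows. The article does not specify how EnerVerse chooses its memory frames; the rule below (a fixed stride over older frames plus a dense recent window) and the names `select_sparse_memory`, `recent`, and `stride` are assumptions for illustration.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def select_sparse_memory(history: Sequence[T], recent: int, stride: int) -> List[T]:
    """Return every `stride`-th older frame followed by the last `recent` frames.

    Hypothetical selection rule for illustration; not EnerVerse's actual policy.
    """
    if recent < 0 or stride < 1:
        raise ValueError("recent must be >= 0 and stride must be >= 1")
    split = max(len(history) - recent, 0)
    older = history[:split:stride]  # sparse, evenly spaced long-range memory
    dense = history[split:]         # full-resolution short-range context
    return list(older) + list(dense)


# 20 past frames -> 4 sparse long-range frames + 4 recent frames.
print(select_sparse_memory(list(range(20)), recent=4, stride=5))
# → [0, 5, 10, 15, 16, 17, 18, 19]
```

Under this rule the context grows by roughly one frame per `stride` new frames, rather than one frame per frame.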

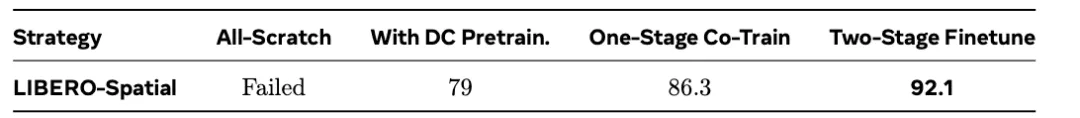

Two-stage training strategy: Training future space generation first and action prediction second significantly improves action planning performance.
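The two-stage schedule can be sketched with scalar linear models standing in for the two networks: stage 1 fits a "generator" that predicts the next frame from the current one, and stage 2 fits an "action head" on the generator's predictions. The toy data, learning rate, models, and the choice to freeze the generator during stage 2 are all assumptions; the article only states that generation training comes before action prediction training.

```python
from typing import List


def fit_scalar(inputs: List[float], targets: List[float], lr: float = 0.5, steps: int = 300) -> float:
    """Fit y ≈ w * x by full-batch gradient descent on mean squared error."""
    w = 0.0
    n = len(inputs)
    for _ in range(steps):
        grad = sum(2.0 * (w * x - y) * x for x, y in zip(inputs, targets)) / n
        w -= lr * grad
    return w


# Hypothetical toy data: next frame = 0.9 * frame, action = 2 * next frame.
frames = [0.1 * i for i in range(1, 11)]
next_frames = [0.9 * x for x in frames]
actions = [2.0 * y for y in next_frames]

# Stage 1: train the "generator" on future-frame prediction only.
w_gen = fit_scalar(frames, next_frames)

# Stage 2: keep the generator frozen and train the "action head" on its outputs.
predicted_future = [w_gen * x for x in frames]
w_act = fit_scalar(predicted_future, actions)

print(round(w_gen, 4), round(w_act, 4))  # → 0.9 2.0
```

Freezing the generator in stage 2 means the action head learns from the same kind of predicted future it will see at inference time; whether EnerVerse freezes or fine-tunes the generator is not stated in the article.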

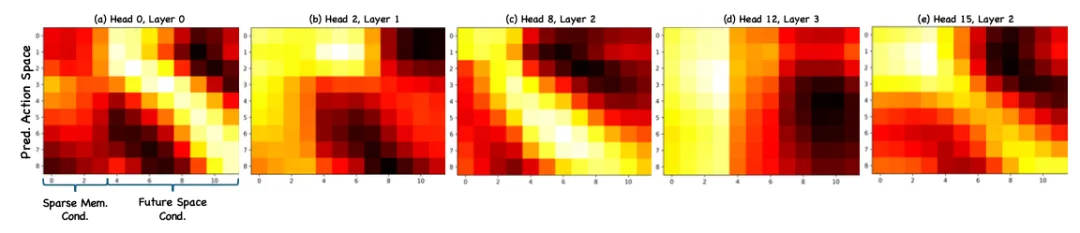

4. Attention visualization

Visualizing the cross-attention module in the Diffusion Policy Head shows that the future space generated by EnerVerse is strongly temporally consistent with the predicted action space. This gives an intuitive demonstration of how closely EnerVerse's future space generation is tied to its motion planning, and of the advantages that link brings.
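The visualization described above inspects cross-attention weights. As a reminder of what those weights are, the sketch below computes standard scaled dot-product attention weights, softmax(Q K^T / sqrt(d)), between hypothetical action-step queries and generated-frame keys, then reports which frame each action step attends to most. The embeddings are made-up toy values; in a real policy head, Q and K come from learned projections.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention_weights(queries: Matrix, keys: Matrix) -> Matrix:
    """Rows: action-step queries; columns: generated-frame keys; each row sums to 1."""
    scale = 1.0 / math.sqrt(len(keys[0]))
    return [
        softmax([scale * sum(q_i * k_i for q_i, k_i in zip(q, k)) for k in keys])
        for q in queries
    ]


# Hypothetical toy embeddings: 3 action steps attending over 3 generated frames.
action_queries = [[1.0, 0.0], [0.7, 0.7], [0.0, 1.0]]
frame_keys = [[1.0, 0.0], [0.7, 0.7], [0.0, 1.0]]

weights = cross_attention_weights(action_queries, frame_keys)
# Index of the most-attended frame for each action step.
focus = [max(range(len(row)), key=row.__getitem__) for row in weights]
print(focus)  # → [0, 1, 2]
```

In a map like this, a roughly diagonal pattern (action step i attending mostly to frame i) is one way temporal alignment between generated frames and predicted actions can show up.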

With the EnerVerse architecture, Zhiyuan Robotics opens a new direction for embodied intelligence. By using generated future space to guide motion planning, EnerVerse not only breaks through a technical bottleneck in robot task planning but also offers a new paradigm for research on multimodal and long-horizon tasks.

About the authors

The core researchers behind EnerVerse are from the embodied algorithm team of the Zhiyuan Robotics Research Institute. Huang Siyuan is a PhD student in a joint program between Shanghai Jiao Tong University and the Shanghai Artificial Intelligence Laboratory, supervised by Professor Li Hongsheng of CUHK-MMLab. His doctoral research focuses on embodied intelligence and efficient agents built on multimodal large models, and he has published multiple papers as first or co-first author at top conferences including CoRL, MM, IROS, and ECCV. Another co-author, Chen Liliang, is an embodied algorithm expert at Zhiyuan Robotics, mainly responsible for research on embodied spatial intelligence and world models.

