
#News ·2025-01-09

December 2024 paper from USC, U Wisconsin, U Michigan, Tsinghua University and the University of Hong Kong: "SafeDrive: Knowledge- and Data-Driven Risk-Sensitive Decision-Making for Autonomous Vehicles with Large Language Models."

Recent advances in autonomous vehicles (AVs) leverage large language models (LLMs) to perform well in normal driving scenarios. However, ensuring safety in dynamic, high-risk environments and managing safety-critical long-tail events remains a major challenge. To address these issues, the paper proposes SafeDrive, a knowledge- and data-driven risk-sensitive decision-making framework that improves AV safety and adaptability. The framework is a modular system consisting of: (1) a risk module for comprehensively quantifying multi-factor coupled risks involving driver, vehicle and road interactions; (2) a memory module for storing and retrieving typical scenarios to improve adaptability; (3) an LLM-driven reasoning module for context-aware safety decisions; (4) a reflection module for improving decisions through iterative learning.

By combining knowledge-driven insight with adaptive learning mechanisms, the framework aims for robust decision making under uncertain conditions. On real-world traffic datasets featuring dynamic and high-risk scenarios, including highways (HighD), intersections (InD), and roundabouts (RounD), extensive evaluation validated the framework's ability to improve decision safety (achieving a 100% safety rate), replicate human-like driving behavior (more than 85% decision consistency), and adapt effectively to unpredictable scenarios.

Risk quantification. Risk quantification is crucial for AV collision avoidance. Classical metrics based on vehicle dynamics, such as time to collision (TTC) [12], time headway (THW) [13], time to react (TTR) [14] and time to lane crossing (TLC) [15], are widely used in traffic scenarios because of their simplicity. However, these metrics are often inadequate in dynamic, multi-dimensional environments where risk factors change rapidly and interact in complex ways [13]. To address these limitations, Shalev-Shwartz of Mobileye proposed Responsibility-Sensitive Safety (RSS) [16], a model that aims to provide an interpretable, white-box safety guarantee. Difficulties remain, however, such as the large number of parameters that must be determined.
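As a rough illustration of why the classical metrics are considered simple, the sketch below computes TTC and THW for a single car-following pair. The function names, the one-dimensional setup and the sample values are assumptions made for this example and are not taken from the paper.

```python
import math

def time_to_collision(gap_m: float, ego_speed: float, lead_speed: float) -> float:
    """TTC: gap divided by closing speed; infinite if the ego is not closing in."""
    closing = ego_speed - lead_speed
    return gap_m / closing if closing > 0 else math.inf

def time_headway(gap_m: float, ego_speed: float) -> float:
    """THW: time the ego vehicle needs to cover the current gap at its own speed."""
    return gap_m / ego_speed if ego_speed > 0 else math.inf

# Illustrative values: 30 m gap, ego at 25 m/s, lead vehicle at 20 m/s.
ttc = time_to_collision(gap_m=30.0, ego_speed=25.0, lead_speed=20.0)
thw = time_headway(gap_m=30.0, ego_speed=25.0)
print(f"TTC = {ttc:.1f} s, THW = {thw:.1f} s")
```

Both metrics look only at the vehicle directly ahead in the same lane, which is one reason they struggle when several risk factors interact.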

To overcome these limitations, more advanced methods have been proposed. The Artificial Potential Field (APF) method [17] uses potential fields to model vehicle risk and thus achieve basic collision avoidance. Gerdes [18] extended the APF by incorporating lane markings to create detailed risk maps. Wang [19][20] combined the road potential field with vehicle dynamics and driver behavior to improve the accuracy of risk modeling and reduce collision risk in complex scenarios. However, these methods tend to focus on current traffic conditions, rely on numerous parameters, and lack adaptability in uncertain environments. Kolekar [21] introduced the driver risk field (DRF), a two-dimensional model that captures the driver's subjective risk perception based on probabilistic beliefs. By integrating subjective risk assessment, the improved APF and DRF methods better model traffic system dynamics and enhance multi-dimensional risk assessment. However, the DRF proposed by Kolekar [22] only considers risk in the driving direction (the forward semicircle of the vehicle) and cannot provide all-round risk quantification.

LLMs in decision making. Decision making is critical to autonomous driving because it directly determines whether a vehicle can safely and efficiently navigate complex, dynamic, and high-conflict traffic scenarios [23]. Traditional data-driven decision methods have inherent limitations. These algorithms are often seen as black boxes: they are sensitive to data bias, handle data-scarce long-tail scenarios poorly, and lack interpretability, which makes it difficult to provide human-understandable explanations for their decisions [24][25].

Advances in LLMs provide valuable insights for addressing decision-making challenges in autonomous driving. LLMs demonstrate human-level perception, prediction, and planning capabilities [26]. When LLMs are combined with vector databases as memory, their analytical capabilities are significantly enhanced in some areas [27]. Li proposed the concept of knowledge-driven autonomous driving, indicating that LLMs can enhance real-world decision making through common-sense knowledge and driving experience [28]. Wen proposed the DiLu framework, which combines reasoning and reflection to achieve knowledge-driven, evolving decision making and outperforms reinforcement learning methods [29]. Building on DiLu, Jiang developed a knowledge-driven multi-agent framework for autonomous driving and demonstrated its efficiency and accuracy in various driving tasks [30]. Fang [31] focused on using LLMs as agents for cooperative driving in different scenarios.

Recent advances have also highlighted the potential of LLMs for multimodal reasoning. Hwang [32] introduced EMMA, an end-to-end multimodal model for motion planning built on a pre-trained LLM, which achieves state-of-the-art results on nuScenes and WOMD. However, its reliance on image input and its high computational cost pose challenges. Sinha [33] proposed a two-stage framework that combines a fast anomaly classifier with fallback reasoning for real-time anomaly detection and reactive planning, and demonstrated its robustness in simulation. These studies highlight the potential of LLMs in AV decision making, where real-time reasoning and adaptability are critical. However, most research has focused on simple scenarios and lacks adaptability in high-conflict environments.

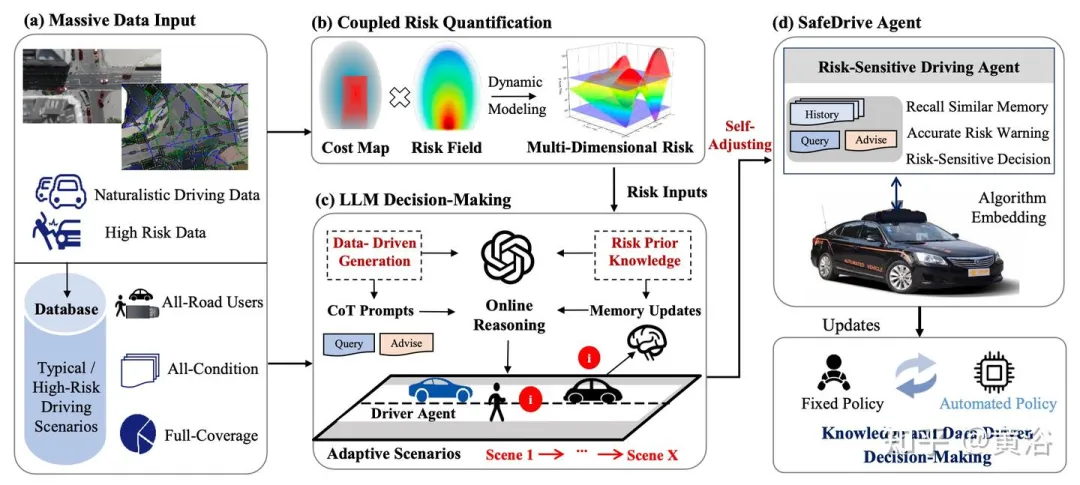

SafeDrive is a knowledge- and data-driven risk-sensitive decision-making framework based on LLMs, as shown in the figure. SafeDrive combines naturalistic driving data with high-risk scenarios to enable AVs to make adaptive safety decisions in complex, dynamic environments.

The framework starts with large-scale data input (Figure a), combining data on all road users, all condition scenarios, and full coverage into a comprehensive database of typical and high-risk driving scenarios. In the coupled risk quantification module (Figure b), advanced risk modeling, including cost maps and multidimensional risk fields, dynamically quantifies risk to provide detailed input for decision making. The LLM decision module (Figure c) uses data-driven generation, prior knowledge of risk, and Chain-of-Thought (CoT) reasoning to generate real-time risk-sensitive decisions. In addition, adaptive memory updating ensures that similar experiences can be recalled to improve the decision-making process. These decisions are embodied in the risk-sensitive driving agent (Figure d), which provides accurate risk warnings, reviews past experiences and makes adaptive decisions. The self-adjusting system identifies risks in real time and continually updates driving strategies through a closed-loop reflection mechanism.

Overall, SafeDrive enhances real-time responsiveness, decision safety, and adaptability to address challenges in high-risk, unpredictable scenarios.

The concept of perceived risk is defined by Naatanen & Summala [34] as the product of the subjective probability of an event occurring and the consequences of that event. This paper uses a dynamic driver risk field (DRF) model, inspired by Kolekar [21][22], that adapts to vehicle speed and steering dynamics. The DRF represents the driver's subjective belief about the vehicle's future position: it assigns higher risk close to the ego vehicle and decreases with increasing distance. Event consequences are quantified by assigning experimentally determined costs to objects in the scene according to how dangerous they are, independent of subjective assessment. The overall quantified perceived risk (QPR) is calculated as the sum, over all grid points, of the product of the event cost and the DRF. This approach captures uncertainty in drivers' perceptions and actions, providing a comprehensive measure of driving risk.

Driver risk field. This work extends the DRF so that it accounts for dynamic changes in vehicle speed and steering angle. The DRF is calculated using a kinematic car model, in which the predicted path depends on the vehicle's position $(x_{car}, y_{car})$, heading $\phi_{car}$, and steering angle $\delta$. Assuming a constant steering angle, the radius of the predicted travel arc is $R_{car} = L / \tan(\delta)$, where $L$ is the wheelbase of the car. From the vehicle's position and the arc radius, the center of the turn $(x_c, y_c)$ can be found, and then the arc length $s$, which represents the distance along the path.
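The geometry described above can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: it assumes a counter-clockwise-positive steering angle, a heading measured from the x-axis, and a turn center placed perpendicular to the heading, all of which are conventions chosen for this example.

```python
import math

def turning_geometry(x, y, heading, steering, wheelbase):
    """Return (signed radius, turn center) for a constant steering angle.

    Assumed convention: positive steering turns left, so the center lies
    to the left of the heading direction. Straight driving has no center.
    """
    if abs(steering) < 1e-9:
        return math.inf, None
    radius = wheelbase / math.tan(steering)   # R_car = L / tan(delta)
    cx = x - radius * math.sin(heading)
    cy = y + radius * math.cos(heading)
    return radius, (cx, cy)

def arc_length(radius, swept_angle):
    """Distance travelled along the arc for a given swept angle in radians."""
    return abs(radius) * abs(swept_angle)

# Illustrative values: 2.5 m wheelbase, steering angle with tan(delta) = 0.5.
radius, center = turning_geometry(0.0, 0.0, 0.0, math.atan(0.5), 2.5)
print(radius, center, arc_length(radius, math.pi / 2))
```

With these values the radius is about 5 m and the turn center sits about 5 m to the left of the vehicle.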

The DRF is modeled as a torus with a Gaussian cross-section. Each object in the environment is assigned a cost, creating a cost map. The cost map is combined with the DRF by element-wise multiplication and summed over the grid to calculate the quantified perceived risk (QPR).

This index reflects the driver's perception of the likelihood and severity of a potential accident, combining subjective perception with objective risk quantification.
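The cost-times-field summation can be illustrated with a deliberately simplified field. The sketch below assumes a straight predicted path along the x-axis, a Gaussian cross-section that widens with distance, and a height that fades to zero at a look-ahead limit. The field shape, parameter values and function names are all assumptions for illustration, not the paper's calibrated model.

```python
import math

def drf_value(s, d, lookahead=40.0, sigma0=1.0, widen=0.05):
    """Simplified risk field: Gaussian across the path, fading along it.

    s is the distance along the path, d the lateral offset (both in metres).
    """
    if s < 0 or s > lookahead:
        return 0.0
    height = (1.0 - s / lookahead) ** 2
    sigma = sigma0 + widen * s
    return height * math.exp(-d * d / (2.0 * sigma * sigma))

def quantified_perceived_risk(cost_map, cell=1.0):
    """Sum cost * field over the grid; cost_map holds only non-zero cells,
    keyed by (ix, iy) grid indices, so empty cells contribute nothing."""
    total = 0.0
    for (ix, iy), cost in cost_map.items():
        total += cost * drf_value(s=ix * cell, d=iy * cell)
    return total

# One obstacle of cost 100, directly ahead versus 3 m to the side.
ahead = quantified_perceived_risk({(10, 0): 100.0})
offset = quantified_perceived_risk({(10, 3): 100.0})
print(ahead, offset)
```

The same obstacle contributes far less risk when it is laterally offset from the predicted path, which is the behavior the Gaussian cross-section is meant to capture.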

All-round risk quantification. The traditional driver risk field (DRF) focuses only on the forward-facing semicircle. To achieve a realistic risk assessment for autonomous driving, this model extends it to a 360-degree perspective that incorporates the risks from both the front and rear vehicles. Including the DRF of the rear vehicle and its collision cost with the ego vehicle enhances situational awareness and safety and creates a unified risk profile from all angles.

The method not only calculates the overall risk, but also assesses the specific risk attributes of each participant. This can identify those that pose a greater danger, allowing for more targeted identification and warning of risks.
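One way to picture per-participant attribution with rear coverage is sketched below. It assumes every vehicle heads along the x-axis, scores vehicles ahead with the ego's field, and scores vehicles behind with their own forward field evaluated at the ego's position. The toy field, the uniform cost and all names are hypothetical choices for this example.

```python
import math

def forward_field(dx, dy, lookahead=40.0, sigma=1.5):
    """Toy forward field of a vehicle at the origin heading along +x."""
    if dx <= 0 or dx > lookahead:
        return 0.0
    return (1.0 - dx / lookahead) ** 2 * math.exp(-dy * dy / (2 * sigma * sigma))

def per_participant_risk(ego, others, cost=100.0):
    """Return {vehicle_id: risk} for positions given as (x, y) tuples."""
    risks = {}
    for vid, (x, y) in others.items():
        dx, dy = x - ego[0], y - ego[1]
        if dx > 0:
            # Vehicle ahead: it lies inside the ego's own forward field.
            risks[vid] = cost * forward_field(dx, dy)
        else:
            # Vehicle behind: the ego lies inside that vehicle's forward field.
            risks[vid] = cost * forward_field(-dx, -dy)
    return risks

risks = per_participant_risk(
    ego=(0.0, 0.0),
    others={"front": (10.0, 0.0), "rear": (-5.0, 0.0), "side": (10.0, 6.0)},
)
most_dangerous = max(risks, key=risks.get)
print(risks, most_dangerous)
```

In this toy scene the close rear vehicle dominates the risk profile, something a forward-only field would miss entirely.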

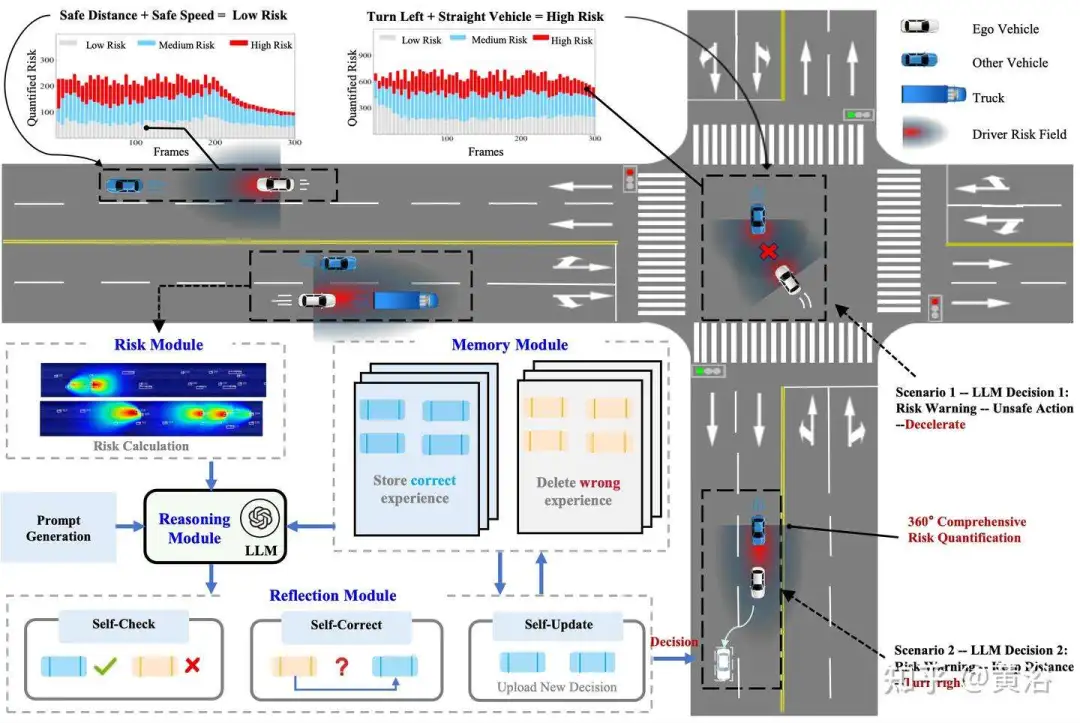

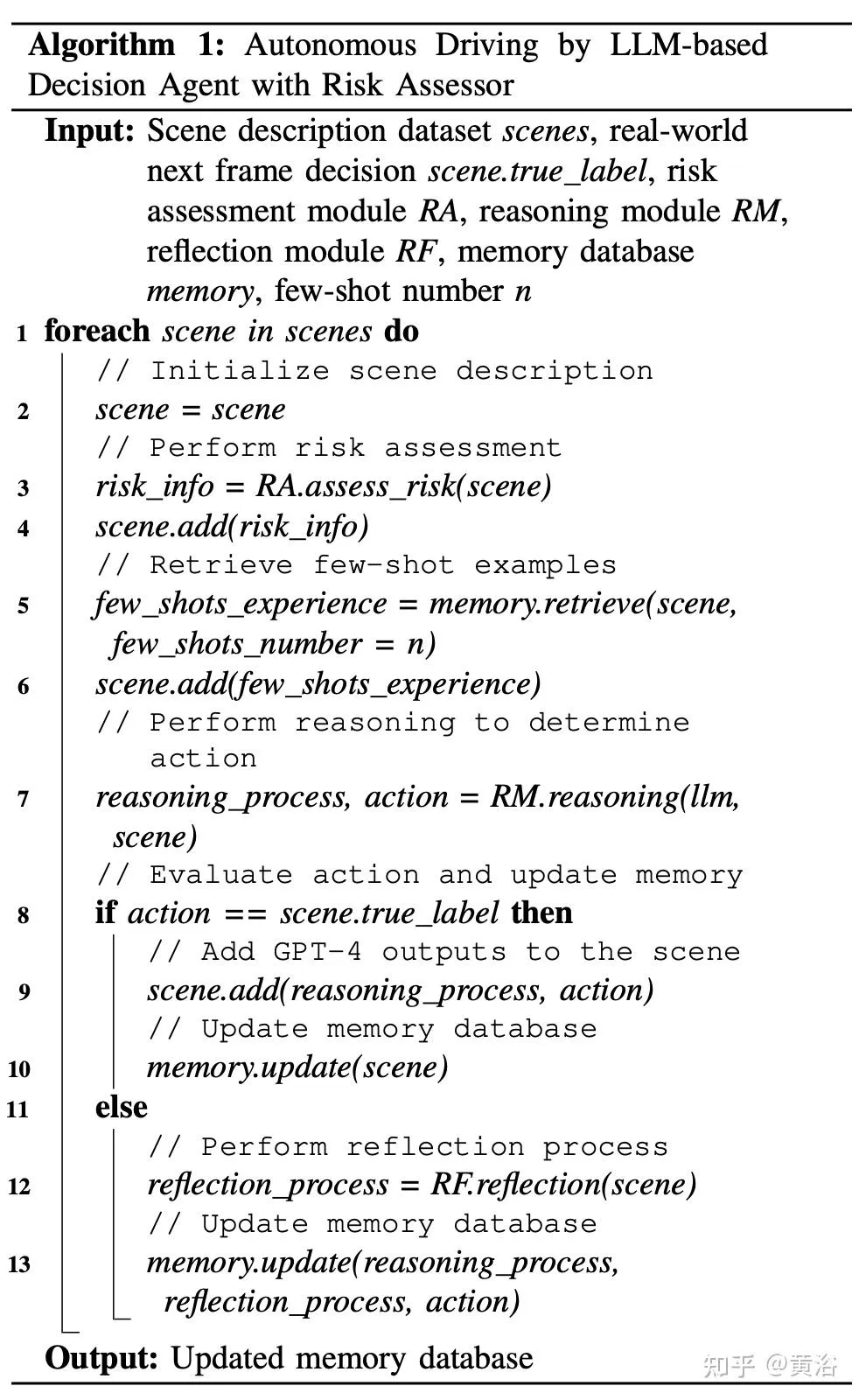

Building on the risk quantification introduced above and on earlier knowledge-driven paradigms for autonomous driving systems, SafeDrive is proposed as a knowledge- and data-driven framework that uses the reasoning power of large models, as shown in the figure. In this paper, GPT-4 acts as the decision agent, driving the reasoning process and generating actions. Manually annotated scene descriptions from three real-world datasets, HighD (highway), InD (urban intersection), and RounD (roundabout), are paired with the next-frame action as ground-truth labels. These descriptions provide environmental context, such as the ID, position, and speed of surrounding vehicles, allowing GPT-4 to interpret the environment and support reasoning and decision making.

The SafeDrive architecture consists of four core modules: a risk module, a reasoning module, a memory module and a reflection module. The process is iterative: the reasoning module makes decisions based on system messages, scenario descriptions, risk assessments and retrieved similar memories; the reflection module evaluates decisions and provides a self-reflection process; the memory module stores the correct decision for future retrieval. Using three real-world datasets as inputs, this self-learning loop improves decision accuracy and adaptability across diverse and complex scenarios. The overall LLM-based decision algorithm is shown in Algorithm 1.
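The iterative loop can be sketched with stubs standing in for the LLM calls. In the example below, `reason` and `reflect` are placeholders that do not call GPT-4, memory lookup is an exact string match rather than vector retrieval, and all names are hypothetical. It only shows how the reasoning, reflection and memory steps feed one another.

```python
from dataclasses import dataclass, field

@dataclass
class Memory:
    items: list = field(default_factory=list)

    def retrieve(self, scene):
        # Placeholder lookup: exact match instead of similarity search.
        return [m for m in self.items if m["scene"] == scene]

    def store(self, scene, action, reasoning):
        self.items.append({"scene": scene, "action": action, "reasoning": reasoning})

def reason(scene, risk_text, memories):
    """Stub for the LLM reasoning step: reuse a recalled action if any."""
    if memories:
        return memories[-1]["action"], "recalled from a similar past scene"
    return "idle", "no relevant experience; keep current state"

def reflect(scene, wrong_action, truth):
    """Stub for the reflection step: return the corrected action and a note."""
    return truth, f"'{wrong_action}' did not match the label; '{truth}' does"

def run_episode(samples, memory):
    correct = 0
    for scene, risk_text, truth in samples:
        action, reasoning = reason(scene, risk_text, memory.retrieve(scene))
        if action == truth:
            correct += 1
        else:
            action, reasoning = reflect(scene, action, truth)
        memory.store(scene, action, reasoning)   # keep only corrected decisions
    return correct

memory = Memory()
samples = [("lead vehicle brakes hard", "high risk ahead", "decelerate")] * 2
n_correct = run_episode(samples, memory)
print(n_correct, len(memory.items))
```

The first encounter is wrong and gets corrected by reflection; the second encounter recalls the stored correction and succeeds, which is the self-learning effect the loop is designed to produce.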

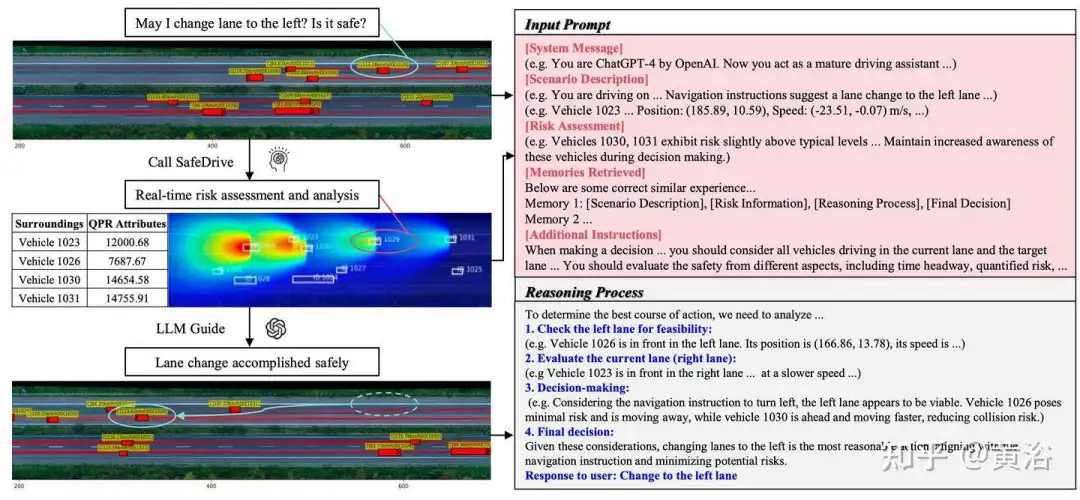

As shown in the figure, in a dynamic scenario, SafeDrive receives user navigation instructions and scene descriptions to assess the risk attributes (such as QPR values), position, and speed of surrounding vehicles in real time. The system then uses LLM reasoning and historical memory for feasibility checks, lane assessments, and decisions to determine the safest course of action, such as lane changes. Overall, by combining multidimensional risk quantification with GPT-4's reasoning, SafeDrive delivers real-time, risk-sensitive decisions. In high-risk scenarios such as highways and intersections, it identifies unsafe behavior and makes adaptive decisions (such as slowing down or turning). The closed-loop reflection mechanism ensures continuous optimization and enhances responsiveness, adaptability and safety.

Risk module. The risk module generates a detailed textual risk assessment for each participant based on the risk quantification model described above and on defined thresholds. These thresholds are determined experimentally, taking into account the risk distribution and common safety criteria, and address both longitudinal and lateral risks. This integration encourages greater caution in decision making, guiding the GPT-4 driving agent to avoid or mitigate unsafe behavior.
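A threshold-based textual assessment of this kind might look like the sketch below. The threshold values, risk labels and wording are placeholders invented for illustration; the paper's experimentally determined thresholds are not given in this summary.

```python
def describe_risk(vehicle_id, qpr, low=10.0, high=50.0):
    """Turn a numeric QPR value into a short text line for the LLM prompt.

    The `low` and `high` thresholds are illustrative placeholders.
    """
    if qpr >= high:
        level, advice = "high", "avoid moving toward this vehicle"
    elif qpr >= low:
        level, advice = "medium", "proceed with caution"
    else:
        level, advice = "low", "no immediate action needed"
    return f"Vehicle {vehicle_id}: QPR {qpr:.1f}, {level} risk, {advice}."

report = "\n".join(
    describe_risk(vid, qpr) for vid, qpr in {"12": 76.6, "7": 23.0, "31": 0.4}.items()
)
print(report)
```

Converting numbers into short sentences lets the risk assessment be placed directly in the prompt alongside the scene description.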

Reasoning module. The reasoning module supports the system's decision process through three key components. It begins with a system message that defines the role of the GPT-4 driving agent, outlines the expected response format, and emphasizes the safety principles of decision making. After receiving inputs consisting of scenario descriptions and risk assessments, the module interacts with the memory module to retrieve similar successful past samples and their correct reasoning processes. Finally, the action decoder translates the decision into a specific action for the ego vehicle, such as accelerating, decelerating, turning, changing lanes, or idling. This structured approach ensures that decisions are informed and safety-conscious.
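An action decoder can be as simple as a pattern match on the model's reply. The sketch below assumes a hypothetical response format ending in a line such as `Final action: decelerate` and falls back to decelerating when nothing parses. Both the format and the fallback are assumptions for this example, since the summary does not specify them.

```python
import re
from enum import Enum

class Action(Enum):
    ACCELERATE = "accelerate"
    DECELERATE = "decelerate"
    TURN = "turn"
    CHANGE_LANE = "change_lane"
    IDLE = "idle"

# Hypothetical response convention: "Final action: <name>".
_PATTERN = re.compile(r"final action\s*:\s*([a-z_ ]+)", re.IGNORECASE)

def decode_action(response: str, fallback: Action = Action.DECELERATE) -> Action:
    """Extract the action named in an LLM reply, or return the fallback."""
    match = _PATTERN.search(response)
    if not match:
        return fallback
    name = match.group(1).strip().lower().replace(" ", "_")
    try:
        return Action(name)
    except ValueError:
        return fallback

reply = "Reasoning: the gap to vehicle 12 is closing.\nFinal action: Change lane"
print(decode_action(reply))
```

Mapping free text onto a closed set of actions keeps malformed or unexpected replies from reaching the vehicle controller.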

Memory module. The memory module is a core component of the system that enhances decision making by drawing on past driving experiences. It uses GPT embeddings to store vectorized scenarios in a vector database. The database is initialized with a set of manually created examples, each containing a scenario description, a risk assessment, a template reasoning process, and the correct action. When a new scenario is encountered, the system retrieves relevant experiences by matching vectorized descriptions using similarity scores. After the decision process, new samples are added to the database. This dynamic framework supports continuous learning, enabling the system to adapt to different driving conditions.
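Similarity-based retrieval can be illustrated without an embedding service. The sketch below swaps in a bag-of-words vector for the GPT embeddings and a plain list for the vector database, so it shows only the store-and-rank mechanics; the class and function names are hypothetical.

```python
import math
from collections import Counter

def embed(text):
    """Stand-in for a learned embedding: word counts of the description."""
    return Counter(text.lower().split())

def cosine(a, b):
    dot = sum(a[k] * b[k] for k in a if k in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

class SceneMemory:
    def __init__(self):
        self.entries = []   # (vector, description, action)

    def add(self, description, action):
        self.entries.append((embed(description), description, action))

    def top_k(self, query, k=1):
        """Return the k stored (description, action) pairs most similar to query."""
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[0]), reverse=True)
        return [(desc, act) for _, desc, act in ranked[:k]]

store = SceneMemory()
store.add("lead vehicle brakes hard on highway", "decelerate")
store.add("empty roundabout entry", "accelerate")
best = store.top_k("vehicle ahead brakes on highway", k=1)
print(best)
```

A real deployment would replace `embed` with an embedding model and the list with a vector index, but the ranking step stays the same.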

Reflection module. The reflection module evaluates and corrects wrong decisions made by the driving agent by initiating a reasoning process about why the agent chose the wrong action. The corrected decision and its reasoning are stored in the memory module as a reference to prevent similar mistakes in the future. This module not only allows the system to evolve continuously, but also provides developers with detailed logs, enabling them to analyze and refine system messages to improve the agent's decision logic.
