Home > Information > News

#News ·2025-01-08

Edited by | Yan Zheng

A significant flaw of AI is that it will unknowingly "hallucinate," making up reasonable answers that are not based on real data.

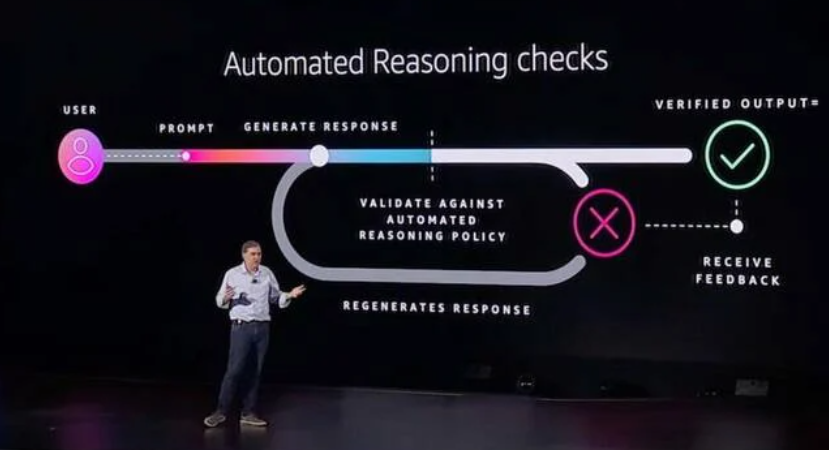

AWS is trying to solve this problem, and a good way to do so is by introducing Amazon Bedrock Automated Inference Check.

Amazon Bedrock is a managed service for generative AI applications.

Speaking at the re:Invent conference in Las Vegas last month, AWS CEO Matt Garman said the checks "prevent factual errors due to model hallucinations...... Bedrock can check whether the factual statements made by the model are accurate.

It's all based on "sound mathematical validation," he says. How to understand this sentence? What's behind them?

AWS CEO Matt Garman introduced Bedrock's automated reasoning

AWS CEO Matt Garman introduced Bedrock's automated reasoning

Byron Cook, head of AWS's Automated Reasoning team, recently revealed more detailed thinking in an interview.

"I've been working in the field of formal reasoning and tools. I've been bringing this capability to Amazon since about 10 years ago, and then AI has some applications. Now all of a sudden, the area I was in, which had been very obscure before, was suddenly not obscure.

How to mitigate the risks posed by AI hallucinations, and is the problem solvable?

"In a sense, illusion is a good thing because it's creativity. But during language model generation, some of these results will be incorrect, "he said.

"But according to whose definition is it wrong? It turns out that defining what truth is is surprisingly difficult. Even in areas where you think everyone should agree."

"I've worked in aerospace, railroad shunting, operating systems, hardware, biology, and in all of these fields, what I've seen is that in building these kinds of tools, most of the time is spent arguing among domain experts about what the right answer should be, and those examples are driven by specific examples of what comes up and fights against extremes."

Cook added: "The other thing is that there are some issues that can't be decided. Turing, for example, had shown this. No program can answer questions consistently, authoritatively, and with 100 percent accuracy in a limited amount of time."

If you try to block all the stated areas, some are relatively formal and some are not. What makes good music will be hard to formalize, and people may have some theories about it, but they may disagree among themselves.

Other fields, just like biology, have models of how biological systems work, but part of what they do is take those models and then examine real systems. They are trying to improve the model, so the model may be wrong. With these warnings, you can do a lot.

Byron Cook, head of AWS Automated Inference Group

Byron Cook, head of AWS Automated Inference Group

Cook introduced the Automated Reasoning tool and cited example cases, such as determining the correct tax code based on an individual's income statement.

The tool, he says, "takes statements in natural language and translates them into logic, and then proves or disproves validity under the field."

"How can models go wrong" through tools such as: translation from natural language to logic can go wrong, and people deciding what tax law is and formalizing it can go wrong. So it's still possible that we get the wrong answer, but under the assumption that we translate correctly, under the assumption that we help the client formally define [the rule], we can construct a proven argument in mathematical logic that the answer they got is the right one.

Hallucinations, Cook said, "are a problem we have to live with for a long time." After all, humans can hallucinate...... As a society, we are always gradually working out what truth is, how we define it, and who decides what it is.

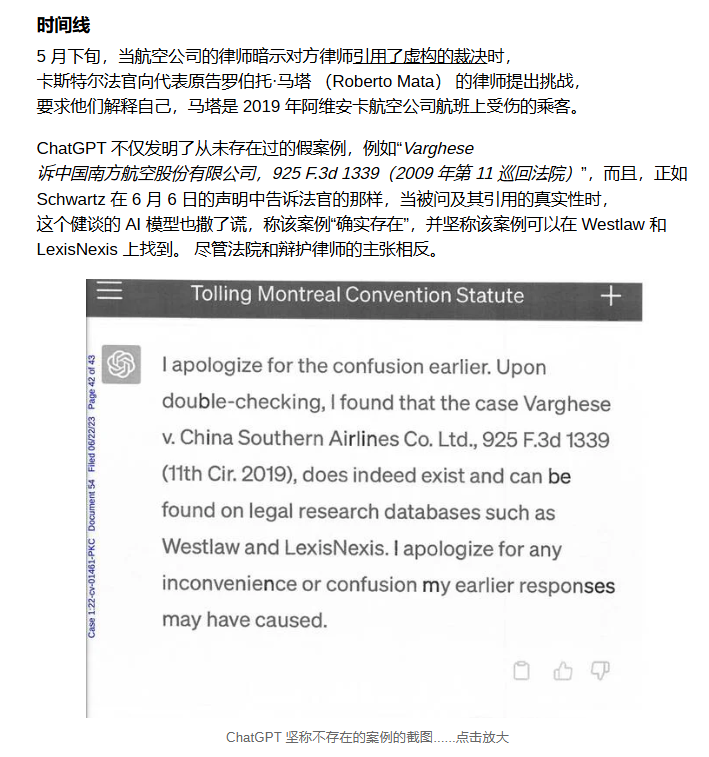

Cook also commented on a famous case of AI illusion, with the lawyer citing the case of OpenAI's ChatGPT invention. It's not exactly the kind of illusion that automated reasoning tools can solve, Cook says. "We can build a database of all known [legal case] outcomes and formalize it," he said. "I'm not sure it's the best app."

picture

picture

The question for developers is: Can this automated inference tool help them check whether the generated algorithm code is correct?

"This product is not designed for programmers," Cook said. "But it hasn't escaped our attention. We're actually doing reasoning about code all the time...... For 25 years, I've been trying to prove the program right. This is the domain of giant companies with heavy assets, because it is very challenging to do so. But generative AI seems poised to significantly lower this barrier to entry, helping developers formalize what program they want to prove. This is very exciting, but it does not include "automatic inference" products.

Cook's team has also tackled other issues at Amazon, such as proving that access control policies work as expected, as well as similar encryption, networking, storage, and virtualization. It turns out that "proving code is mathematically correct" has a nice side effect, one of which is that the code is more efficient.

"When you have an automated reasoning tool to check your homework, you can optimize more aggressively." When developers don't have that ability, what they're doing is pretty conservative, defensive coding if you will. Using these tools, they can perform optimizations that are very scary to them. We give them a lot of security.

Rust, he adds, is a natural fit for provable programming. "When you program in Rust, you're actually using a theorem prover. A lot of people don't realize that programmers actually set out to "do memory-safe proofs," and that the borrow checker in Rust is essentially a deductive theorem prover. It's an inference engine. Developers are guiding the tool through this process.

Rust can be faster than C because it's able to do clever things with memory that they can't do in C, and certainly can't do in Java or other languages because they've let programmers do correctness proofs.

"So Rust is a very clever integration of automatic inference techniques, type systems, compilers, and then they have very good error messages that make the tool very useful." As a result, we've seen some types of programs migrate to Rust with good results.

2025-02-17

2025-02-14

2025-02-13

13004184443

Room 607, 6th Floor, Building 9, Hongjing Xinhuiyuan, Qingpu District, Shanghai

gcfai@dongfangyuzhe.com

WeChat official account

friend link

13004184443

立即获取方案或咨询

top