
#News ·2025-01-08

In addition to improving data efficiency, the MeCo method proposed in this paper adds almost no computational overhead or complexity.

Danqi Chen, assistant professor of computer science at Princeton University, and her team have released a new paper, this time focusing on "using metadata to accelerate pre-training."

Language models achieve excellent generality by being trained on large web corpora. The diversity of this training data, however, highlights a fundamental challenge: unlike people, who naturally adapt their understanding to the source of the data, language models process all content as equivalent samples.

Treating heterogeneous sources identically creates two problems: it ignores important contextual signals that aid understanding, and it prevents the model from reliably displaying appropriate behaviors, such as humor or factuality, in specialized downstream tasks.

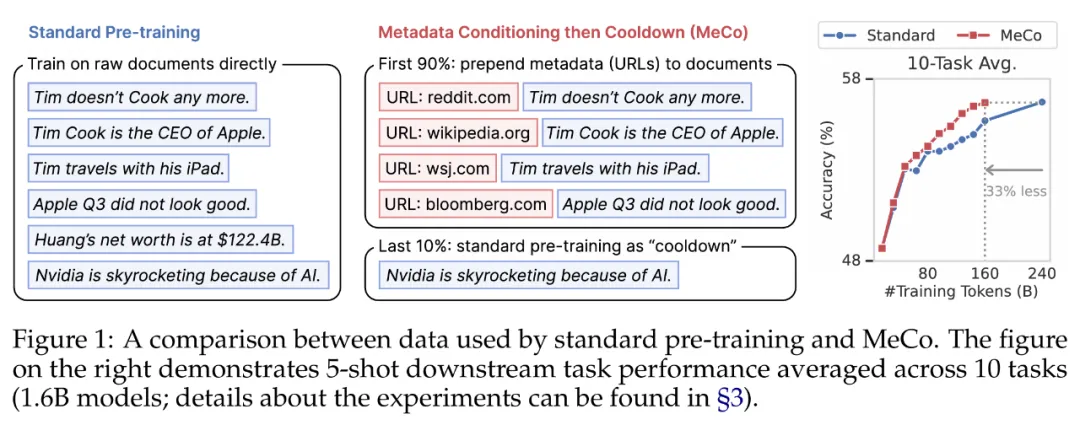

To address these challenges and give the model more information about each document's source, Danqi Chen's team proposes using each document's metadata during pre-training by prepending the widely available source URL to the document. To ensure that the model works well with or without metadata at inference time, a cooldown is applied in the last 10% of training. They call this pre-training method Metadata Conditioning then Cooldown (MeCo).

Metadata conditioning has been used in previous work to steer model generation and to improve model robustness against malicious prompts, but the researchers establish the general utility of the approach in two key respects. First, they demonstrate that this paradigm can directly accelerate the pre-training of language models and improve downstream task performance. Second, unlike previous approaches, MeCo's cooldown phase ensures that the model can perform inference without metadata.

The main contributions of this paper include the following:

First, MeCo greatly accelerates the pre-training process. The researchers demonstrated that MeCo enables a 1.6B model to achieve the same average downstream performance as a standard pre-trained model while using 33% less training data. MeCo showed consistent gains across model sizes (600M, 1.6B, 3B, and 8B) and data sources (C4, RefinedWeb, and DCLM).

Second, MeCo opens up a new way to steer models. During inference, prepending an appropriate real or synthetic URL to the prompt can induce the desired model behavior. For example, using "factquizmaster.com" (not a real URL) can improve performance on general knowledge tasks, such as a 6% absolute improvement on zero-shot general knowledge question answering. Conversely, using "wikipedia.org" (a real URL) can make toxic generations several times less likely than with standard unconditional inference.
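The URL-prefixed prompting described above can be illustrated with a minimal sketch. The helper name `with_url_prefix` and the blank-line separator between the URL line and the prompt are assumptions made for illustration; the article only states that a URL is placed before the prompt.

```python
from typing import Optional


def with_url_prefix(prompt: str, url: Optional[str] = None) -> str:
    """Return the prompt with a 'URL: ...' line in front of it.

    The blank-line separator is an assumption for this sketch; if url is
    None, the prompt is returned unchanged (unconditional inference).
    """
    if url is None:
        return prompt
    return f"URL: {url}\n\n{prompt}"


question = "What is the capital of France?"

# Conditional inference with a synthetic URL taken from the article.
conditioned = with_url_prefix(question, "factquizmaster.com")

# Unconditional inference, which remains usable after the cooldown.
unconditioned = with_url_prefix(question)
```

The resulting string would then be passed to whatever generation interface the model exposes; that part is deliberately left out here.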

Ablation experiments on MeCo's design choices show that it is compatible with different types of metadata. Ablations using hashed URLs and model-generated topics indicate that the primary role of the metadata is to group documents by source. Thus, even without URLs, MeCo can effectively incorporate other types of metadata, including more fine-grained options.
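One way to see why a hashed URL can still work as metadata: hashing removes the readable meaning of the URL but keeps the grouping, because documents from the same source map to the same opaque key. The sketch below uses SHA-256 truncated to 12 hex characters; both the hash function and the truncation length are assumptions for illustration, not details taken from the paper.

```python
import hashlib


def hashed_source(url: str, length: int = 12) -> str:
    """Map a URL to an opaque but deterministic key (illustrative choice of hash)."""
    return hashlib.sha256(url.encode("utf-8")).hexdigest()[:length]


docs = [
    ("en.wikipedia.org", "Paris is the capital of France."),
    ("en.wikipedia.org", "The Nile is a river in Africa."),
    ("example.com", "Ten tips for a better morning routine."),
]

# Group documents by hashed source: same URL, same key.
groups = {}
for url, text in docs:
    groups.setdefault(hashed_source(url), []).append(text)
```

Here the two documents from the same source land in one group even though the key itself carries no semantic information.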

The results show that MeCo can significantly improve the data efficiency of language models, while hardly increasing the computational overhead and complexity of the pre-training process. In addition, MeCo offers enhanced controllability, promising the creation of more controllable language models, and its general compatibility with more fine-grained and creative metadata is worth further exploration.

In short, as a simple, flexible and effective training paradigm, MeCo can improve the practicality and controllability of language models at the same time.

Tianyu Gao, one of the paper's authors, also interacted with readers in the comments section and answered the question "Does MeCo need to balance overfitting and underfitting?" He stated that one hypothesis of the paper is that MeCo performs implicit data-mixture optimization (in the manner of DoReMi and ADO), upsampling domains that are underfit and more useful.

Lucas Beyer, an OpenAI researcher, said he had done similar work on vision-language models (VLMs) a long time ago, which was interesting but ultimately not very useful.

Methodology overview

The method in this paper includes the following two training stages, as shown in Figure 1 below.

Pre-training with metadata conditioning (first 90%): The model is trained on the concatenation of metadata and document, using the template "URL: en.wikipedia.org [document]". When other types of metadata are used, "URL" is replaced with the corresponding metadata name. The researchers compute the cross-entropy loss on document tokens only, ignoring tokens from the template or metadata. In their initial experiments, they found that training on these tokens hurt downstream task performance.

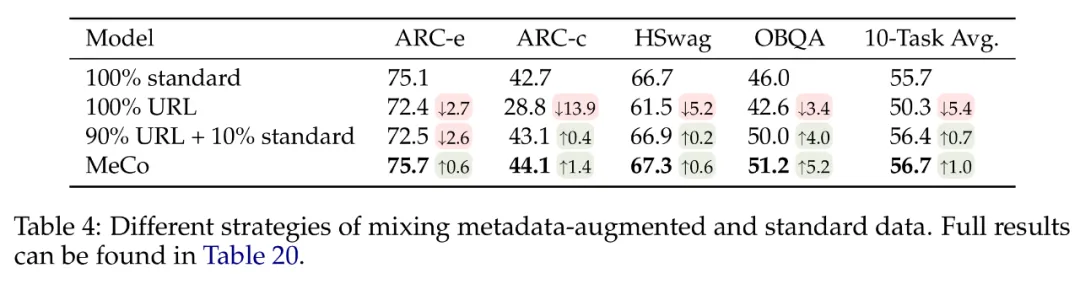

Cooldown with standard data (last 10%): For models trained only on metadata-augmented data, performance deteriorates when no metadata is provided (see Table 4 below). To ensure generality, the researchers train the model on standard pre-training documents without any metadata during the cooldown phase, which covers the last 10% of the pre-training process.
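The conditioning template and the document-only loss from the first stage can be sketched as follows. This is a minimal illustration, not the authors' implementation: it uses whitespace splitting in place of a real tokenizer, and the function names `build_example` and `masked_mean_loss` are hypothetical.

```python
from typing import List, Tuple


def build_example(url: str, document: str) -> Tuple[List[str], List[int]]:
    """Concatenate 'URL: <url>' with the document and build a loss mask.

    Mask value 0 marks template/metadata tokens (excluded from the loss),
    1 marks document tokens (included). Whitespace tokenization is a
    stand-in for a real tokenizer.
    """
    prefix_tokens = f"URL: {url}".split()
    doc_tokens = document.split()
    tokens = prefix_tokens + doc_tokens
    loss_mask = [0] * len(prefix_tokens) + [1] * len(doc_tokens)
    return tokens, loss_mask


def masked_mean_loss(per_token_loss: List[float], loss_mask: List[int]) -> float:
    """Average the per-token losses over document tokens only."""
    total = sum(loss * m for loss, m in zip(per_token_loss, loss_mask))
    count = sum(loss_mask)
    return total / count if count else 0.0


tokens, mask = build_example("en.wikipedia.org", "Paris is the capital")
# Made-up per-token losses; the first two belong to the URL prefix.
loss = masked_mean_loss([5.0, 5.0, 1.0, 2.0, 3.0, 2.0], mask)
```

In the cooldown stage the same pipeline would simply skip the prefix, so every token is a document token and the mask is all ones.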

The cooldown phase inherits the learning rate schedule and optimizer state from the metadata conditioning phase. That is, it initializes the learning rate, model parameters, and optimizer state from the last checkpoint of the previous phase and continues to adjust the learning rate according to the same schedule.
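A rough sketch of how the two phases share one schedule: the learning rate follows a single cosine curve over the whole run, and only the data format changes at the 90% mark. Warmup is omitted for brevity, and the peak learning rate and step count below are placeholder values, not the paper's hyperparameters.

```python
import math


def cosine_lr(step: int, total_steps: int, peak_lr: float, min_lr: float = 0.0) -> float:
    """Cosine decay from peak_lr to min_lr over the whole run (no warmup)."""
    progress = step / total_steps
    return min_lr + 0.5 * (peak_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


def uses_metadata(step: int, total_steps: int, cooldown_fraction: float = 0.1) -> bool:
    """True during the metadata conditioning phase, False during the cooldown."""
    cooldown_steps = round(total_steps * cooldown_fraction)
    return step < total_steps - cooldown_steps


TOTAL_STEPS = 1000  # placeholder
PEAK_LR = 3e-3      # placeholder

# The schedule is not restarted at the phase boundary: step 900 simply
# continues the curve that step 899 was on, but switches to plain documents.
last_conditioned = (uses_metadata(899, TOTAL_STEPS), cosine_lr(899, TOTAL_STEPS, PEAK_LR))
first_cooldown = (uses_metadata(900, TOTAL_STEPS), cosine_lr(900, TOTAL_STEPS, PEAK_LR))
```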

The researchers also used the following two techniques in all experiments, and preliminary experiments showed that they improved the performance of the baseline pre-trained model:

For all experiments, the researchers used the Transformer architecture and the Llama-3 tokenizer from the Llama model series, with four model sizes: 600M, 1.6B, 3B, and 8B. They used standard optimization settings for language models, namely the AdamW optimizer and a cosine learning rate schedule.

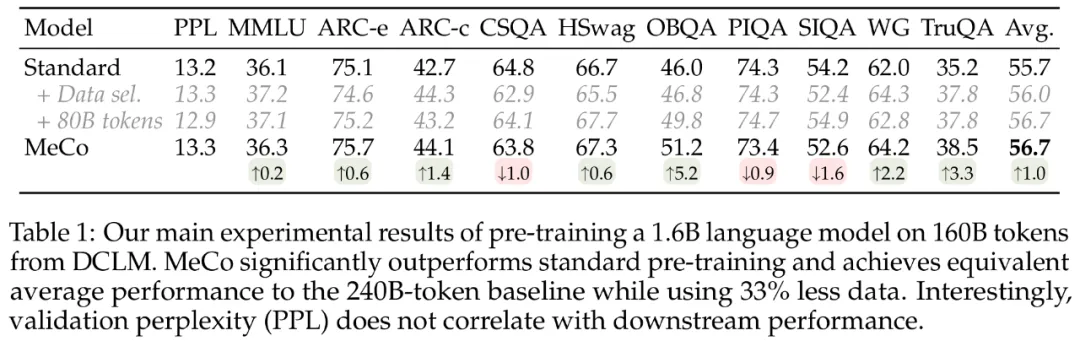

Table 1 below shows the main results of pre-training a 1.6B language model on 160B tokens from DCLM. The researchers first observed that MeCo performed significantly better than standard pre-training on most tasks. MeCo also outperforms the data selection baselines. And unlike data selection methods, MeCo does not incur any computational overhead; it takes advantage of the URL information that is readily available in the pre-training data.

More importantly, MeCo achieved performance comparable to standard pre-training methods while using 33% less data and computation, representing a significant increase in data efficiency.

Table 1 also reports perplexity, which indicates that validation perplexity does not correlate with downstream performance. It is worth noting that when comparing the 240B baseline model to the 160B MeCo model, the baseline exhibits much lower perplexity because of the larger amount of data, yet the two models achieve similar average performance.

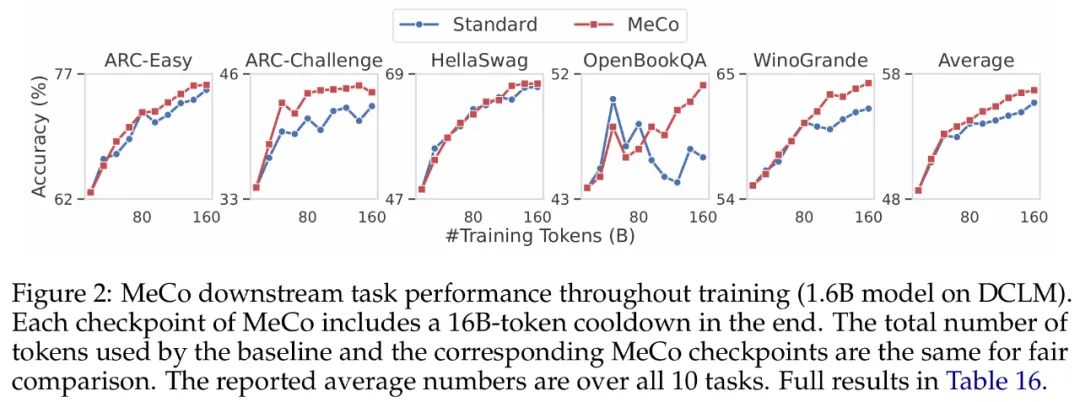

In Figure 2, the researchers show how downstream task performance changes throughout pre-training. For MeCo, each checkpoint in the figure includes a cooldown phase of 16B tokens (10% of the total training tokens). For example, the 80B checkpoint consists of 64B tokens of conditional training followed by 16B tokens of cooldown. They observed that MeCo consistently outperformed the baseline model, especially late in training.
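The checkpoint accounting above is simple arithmetic: every reported MeCo checkpoint ends with the same 16B-token cooldown, and the remainder is conditional training. A small sketch, with the hypothetical helper `split_checkpoint` working in billions of tokens:

```python
from typing import Tuple


def split_checkpoint(total_tokens_b: float, cooldown_tokens_b: float = 16.0) -> Tuple[float, float]:
    """Split a checkpoint's token budget into (conditional, cooldown), in billions."""
    if total_tokens_b < cooldown_tokens_b:
        raise ValueError("checkpoint is shorter than the cooldown phase")
    return total_tokens_b - cooldown_tokens_b, cooldown_tokens_b


mid_run = split_checkpoint(80.0)    # the 80B checkpoint from the text
full_run = split_checkpoint(160.0)  # the full 160B run
```

For the full 160B run this gives 144B conditional tokens and 16B cooldown tokens, which matches the 90%/10% split of the two training stages.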

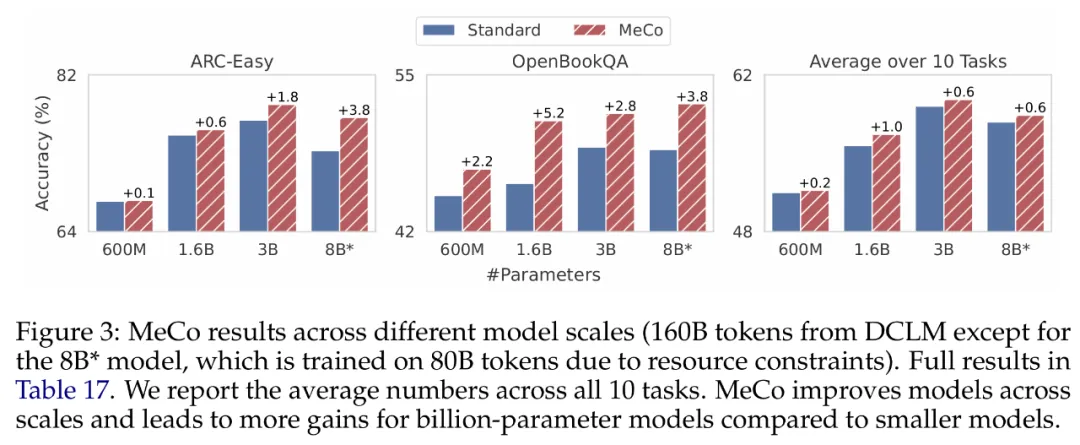

Figure 3 below shows the results for different model sizes (600M, 1.6B, 3B, and 8B). The researchers used the same optimization hyperparameters and the same amount of data (160B tokens from DCLM) to train all models. The 8B model is the exception: because of resource constraints and training instability, it was trained on 80B tokens with a lower learning rate.

The researchers observed that MeCo improved model performance at all scales. MeCo also appears to bring larger improvements to larger models, with the billion-parameter models showing more significant gains than the 600M model. Note, however, that this is a qualitative observation, and downstream task performance scales less smoothly than the pre-training loss.

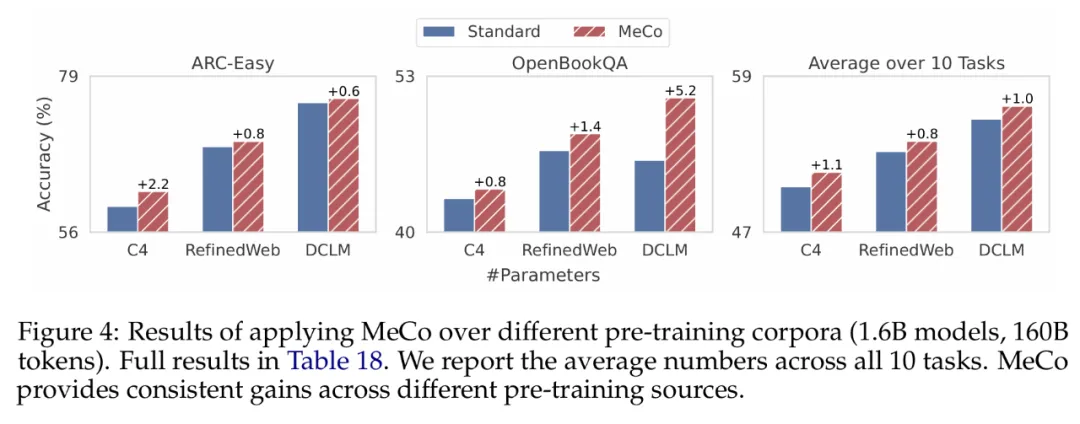

The researchers trained a 1.6B model on 160B tokens based on three different data sources (C4, RefinedWeb, and DCLM), and the results are shown in Figure 4 below. If average downstream performance is used as a data quality metric, the three data sources are sorted as DCLM > RefinedWeb > C4. They observed that MeCo achieved consistent and significant gains across different data sources, both in average accuracy and for individual tasks.

Please refer to the original paper for more technical details.
