#News ·2025-01-08

This article is reprinted with the authorization of the AIGC Studio public account. Please contact the source before reprinting.

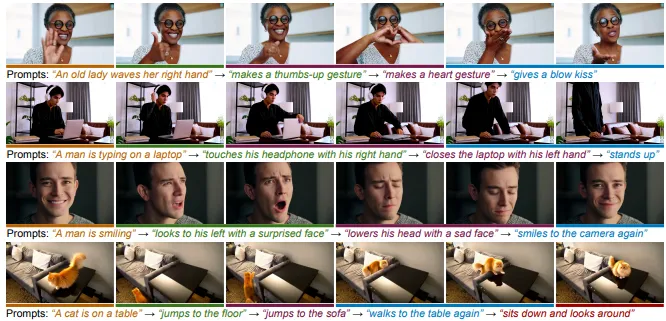

MinT is the first text-to-video model capable of generating sequential events and controlling their timestamps. Given a sequence of event text prompts and their desired start and end timestamps, MinT synthesizes smoothly connected events with a consistent subject and background, and it can flexibly control the time span of each event. The image below shows results for sequential gestures, daily activities, facial expressions, and cat movements.

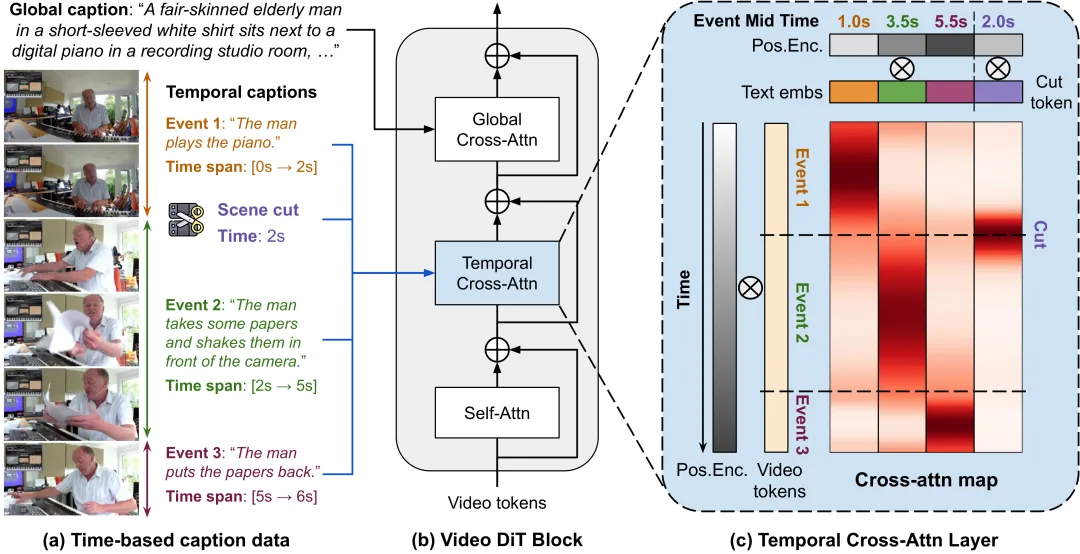
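
The input described above, a series of event prompts with start and end timestamps, can be sketched as a small data structure. This is only an illustration: the `Event` class, its field names, the `validate_events` helper, and the rule that events must not overlap are all assumptions, not MinT's actual interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """One event: a text prompt bound to a time span in seconds (hypothetical schema)."""
    caption: str
    start: float
    end: float


def validate_events(events, duration):
    """Check that events are ordered, non-overlapping, and inside the video.

    The non-overlap rule is an assumption made for this sketch.
    """
    previous_end = 0.0
    for event in events:
        if not (0.0 <= event.start < event.end <= duration):
            raise ValueError(f"bad time span for {event.caption!r}")
        if event.start < previous_end:
            raise ValueError(f"{event.caption!r} overlaps the previous event")
        previous_end = event.end
    return True


# Three consecutive events in a 6-second video (made-up captions).
events = [
    Event("a man raises both arms", 0.0, 2.0),
    Event("he claps his hands", 2.0, 4.5),
    Event("he waves at the camera", 4.5, 6.0),
]
validate_events(events, duration=6.0)
```

Keeping the time span next to each caption makes it easy to check a request before it is sent to a generator.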

A real-world video consists of a sequence of events. Existing video generators cannot produce such sequences with precise temporal control because they take a single paragraph of text as input. When asked to generate multiple events from a single prompt, these methods often ignore some of the events or fail to arrange them in the correct order. To address this limitation, we present MinT, a multi-event video generator with temporal control. Our key insight is to bind each event to a specific time period in the generated video, which allows the model to focus on one event at a time. To enable time-aware interaction between event captions and video tokens, we design a time-based positional encoding method called ReRoPE, which guides the cross-attention operation. By fine-tuning a pre-trained video diffusion transformer on temporally grounded data, our approach generates coherent videos with smoothly connected events. For the first time in the literature, our model offers control over the timing of events in generated videos. Extensive experiments show that MinT outperforms existing open-source models by a large margin.
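
The idea of binding each event to a time period can be illustrated with a hard frame-to-event mask. Note that this is not ReRoPE: the article describes ReRoPE as a positional encoding that guides cross-attention and does not give its formula, so the sketch below substitutes a much simpler binary mask. The function name, the frame rate, and the half-open interval convention are assumptions.

```python
def event_frame_mask(spans, num_frames, fps):
    """Return mask[f][e] = True when frame f falls inside event e's time span.

    spans: list of (start_sec, end_sec) tuples, one per event.
    Intervals are treated as half-open [start, end); this is an assumption.
    A hard mask is a simplified stand-in, not the ReRoPE encoding itself.
    """
    mask = []
    for frame in range(num_frames):
        t = frame / fps  # timestamp of this frame in seconds
        mask.append([start <= t < end for start, end in spans])
    return mask


# Two one-second events in a 4-frame clip sampled at 2 frames per second.
spans = [(0.0, 1.0), (1.0, 2.0)]
mask = event_frame_mask(spans, num_frames=4, fps=2)
# Frames at 0.0 s and 0.5 s attend only to event 0;
# frames at 1.0 s and 1.5 s attend only to event 1.
```

A mask like this switches abruptly at event boundaries, while a positional encoding can bias attention without blocking it outright.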

Here we present some high-resolution videos (1024x576). Colored borders and captions indicate the time period of each event. We first play each video with a pause before every event, and then play it again continuously. You can find more 512x288 videos here.

Existing video generators have difficulty generating sequential events. We compare MinT with the SOTA open-source models CogVideoX-5B and Mochi 1 and the commercial models Kling 1.5 and Gen3-Alpha. For these models, we concatenate all temporal captions into one long prompt and run their online APIs to generate videos. The prompts we use for the SOTA models can be found in Prompts.
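
The baseline setup, where all temporal captions are joined into one long prompt, can be sketched as follows. The exact wording used for each model is not reproduced in this article, so the joining rule here (sentences separated by a period and a space) and the function name `build_single_prompt` are assumptions.

```python
def build_single_prompt(captions):
    """Join ordered event captions into one long prompt for a single-prompt model.

    Timestamps are dropped, because a single-prompt model has no input for them.
    """
    cleaned = [c.strip().rstrip(".") for c in captions if c.strip()]
    if not cleaned:
        return ""
    return ". ".join(cleaned) + "."


# Made-up captions, listed in the order the events should happen.
captions = [
    "A man raises both arms.",
    "He claps his hands",
    " He waves at the camera. ",
]
prompt = build_single_prompt(captions)
# "A man raises both arms. He claps his hands. He waves at the camera."
```

The joined prompt keeps the order of the events but carries no timing information, so a single-prompt model has to infer the timing on its own.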

Existing models often omit events, or merge multiple events and confuse their order. In contrast, MinT seamlessly synthesizes all events within their desired time spans. For more analysis of SOTA model behavior, see Appendix C.6 of the paper. See more comparisons here.

MinT is fine-tuned on temporally captioned videos that mainly depict human-centric events. However, we show that our model retains the base model's ability to generate novel concepts. Here, we show videos that MinT generates when conditioned on out-of-distribution prompts.

We use an LLM to expand a short prompt into a detailed global caption and temporal captions, from which MinT can generate more interesting videos with richer motion. This allows ordinary users to use our model without the hassle of specifying events and timestamps. The instructions we give the LLM can be found in Prompts. For comparison, we also show videos generated by our base model from the original short prompt (called Short) and from the detailed global caption (called Global).

Long videos tend to contain a wealth of events, but they also come with many scene cuts. Training a video generator on them directly leads to unwanted abrupt shot transitions in the generated results. Instead, we propose to explicitly condition the model on the scene cut timestamps during training. Once the model has learned this conditioning, we can set it to zero at inference time to generate cut-free videos. Here, we compare videos generated under different scene cut conditions. We pause each video at the input scene cut time (highlighted with a cyan border). Our model introduces the requested shot transitions while still preserving the subject identity and scene background.
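
A minimal sketch of the scene cut conditioning, assuming the cut timestamps are turned into a per-frame 0/1 signal. The article does not say how MinT encodes this condition, so the representation, the function name `scene_cut_signal`, and the frame rate are all hypothetical.

```python
def scene_cut_signal(cut_times, num_frames, fps):
    """Encode scene cut timestamps (in seconds) as a per-frame 0/1 list.

    A per-frame binary signal is an assumed encoding, chosen for illustration.
    """
    signal = [0] * num_frames
    for t in cut_times:
        frame = round(t * fps)  # nearest frame index for this timestamp
        if 0 <= frame < num_frames:
            signal[frame] = 1
    return signal


# Training: the clip has a scene cut at 1.0 s, so the model sees where it happens.
training_signal = scene_cut_signal([1.0], num_frames=6, fps=2)

# Inference: pass no cuts, so the condition is all zeros and no cut is requested.
inference_signal = scene_cut_signal([], num_frames=6, fps=2)
```

Because the cuts are given as an explicit input during training, leaving that input empty at inference asks for a video without shot transitions.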

We demonstrate MinT's fine-grained control over event timing. In each example, we offset the start and end times of all events by a specific value, so each row shows a smooth progression of the events.
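
The timing experiment above amounts to shifting every event by the same offset. The sketch below shows one way to do that; clamping to the video duration and dropping events that fall completely outside it are assumptions made for this example, as is the `(caption, start, end)` tuple layout.

```python
def shift_events(events, offset, duration):
    """Shift every (caption, start, end) by `offset` seconds.

    Times are clamped to [0, duration]; events pushed entirely out of the
    video are dropped. Both rules are assumptions for this sketch.
    """
    shifted = []
    for caption, start, end in events:
        new_start = min(max(start + offset, 0.0), duration)
        new_end = min(max(end + offset, 0.0), duration)
        if new_end > new_start:
            shifted.append((caption, new_start, new_end))
    return shifted


# Made-up events in a 4-second video, delayed by half a second.
events = [("raise arms", 0.0, 2.0), ("clap", 2.0, 4.0)]
shifted = shift_events(events, offset=0.5, duration=4.0)
# [("raise arms", 0.5, 2.5), ("clap", 2.5, 4.0)]
```

Generating one video per offset value gives a row of results in which the same events start progressively earlier or later.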

MinT is a multi-event video generation framework with event timing control. The method uses a unique positional encoding to guide the temporal dynamics of the video, producing smoothly connected events and consistent subjects. With the help of LLMs, we further design a prompt enhancer that can generate motion-rich videos from simple prompts.
