#News ·2025-01-06

The release of OpenAI's o1 and o3 models has shown that reinforcement learning can give large models higher-order reasoning abilities: rapid iterative trial and error and deep thinking, much as humans do. The scaling law based on imitation learning is increasingly being questioned, and exploration-based reinforcement learning is expected to bring a new scaling law.

Recently, the NLP Lab of Tsinghua University, Shanghai AI Lab, the Department of Electronics of Tsinghua University, the OpenBMB community, and other teams proposed a new reinforcement learning method that incorporates process rewards: PRIME (Process Reinforcement through IMplicit REwards).

With PRIME, the researchers used no distillation data and no imitation learning. Using only 8 A100 GPUs, at a cost of about 10,000 yuan and in less than 10 days, they trained a 7B model, Eurus-2-7B-PRIME, whose mathematical ability exceeds that of GPT-4o and Llama 3.1-70B.

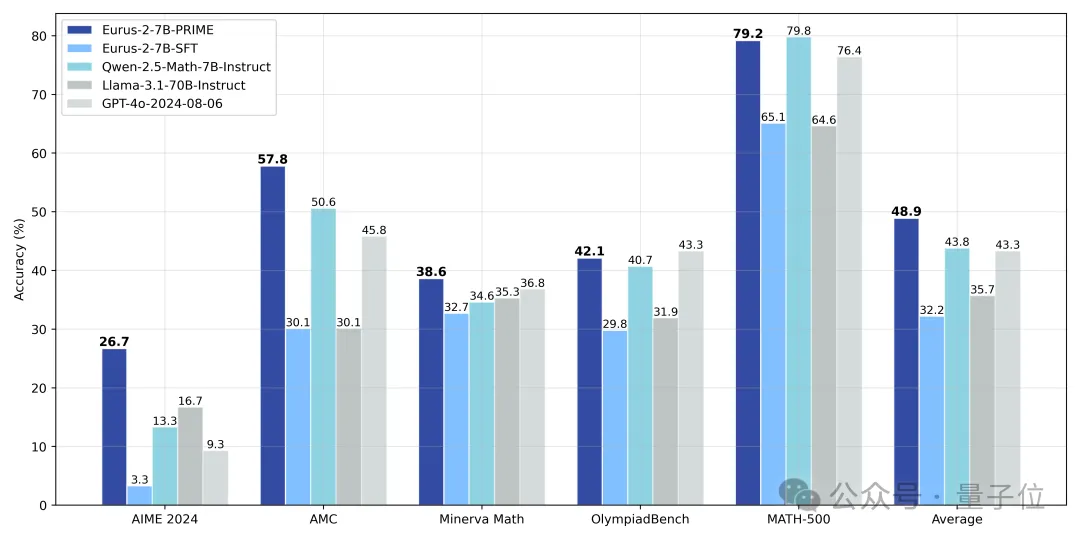

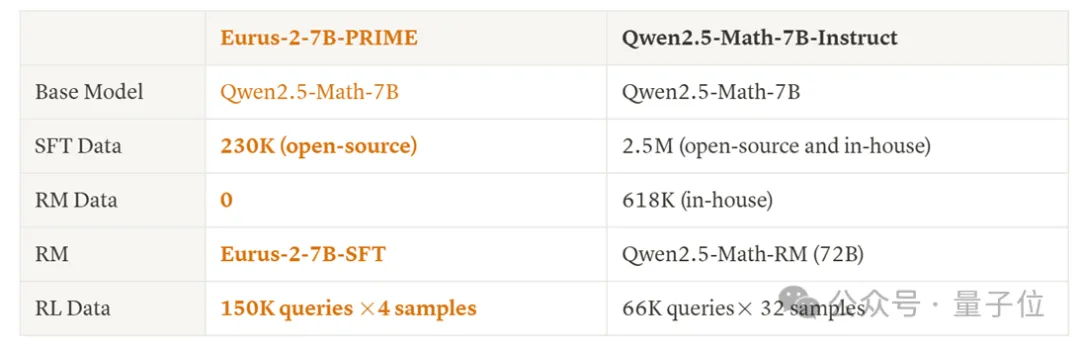

Specifically, starting from Qwen2.5-Math-7B-Base as the base model, the researchers trained a new model, Eurus-2-7B-PRIME, which reaches 26.7% accuracy on AIME 2024, an exam in the US IMO selection process. This significantly surpasses GPT-4o, Llama 3.1-70B, and Qwen2.5-Math-7B-Instruct, while using only 1/10 of the data used for Qwen Math. Of this result, the PRIME reinforcement learning method contributed an absolute improvement of 16.7 percentage points, far more than any known open-source solution.

Once the project was open-sourced, it drew wide attention in the overseas AI community and gained nearly 300 stars on GitHub in just a few days.

Looking ahead, PRIME combined with stronger base models has the potential to train models that approach OpenAI o1.

For a long time, the open-source community has relied heavily on data-driven imitation learning to improve model reasoning. The limitation of this approach is obvious: stronger reasoning requires higher-quality data, which is always scarce, so imitation and distillation are hard to sustain.

Although the success of OpenAI o1 and o3 shows that reinforcement learning has a higher ceiling, it faces two key challenges: (1) how to obtain precise and scalable dense rewards; (2) how to design reinforcement learning algorithms that take full advantage of these rewards.

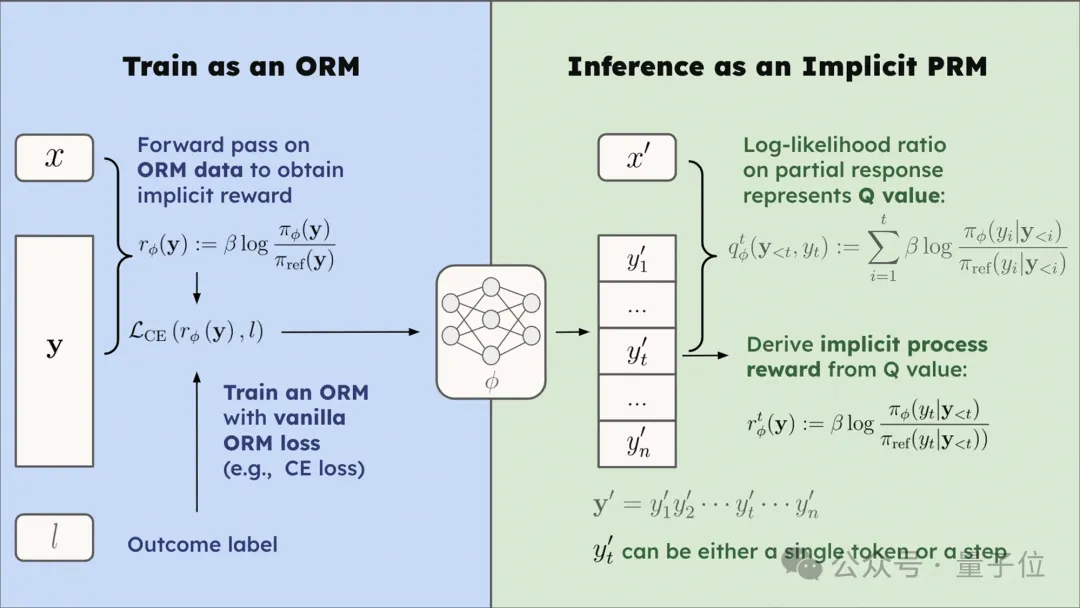

The PRIME algorithm starts from the idea of implicit process rewards to solve these two problems. An implicit process reward model needs only outcome reward model (ORM) data for training, that is, whether the final answer is right or wrong. It nevertheless models process rewards implicitly, so a process reward model is obtained automatically, and the whole procedure has strict theoretical guarantees.

For the detailed derivation, see: https://huggingface.co/papers/2412.01981
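As an illustration of the idea, here is a minimal sketch based on our reading of the linked paper, in which the reward is expressed as a scaled log-likelihood ratio between the trained reward model and a reference model, and each token's process reward is that token's share of the ratio. The function names, the toy log-probabilities, and the value of `beta` are hypothetical and are not taken from the PRIME code base.

```python
import math


def implicit_token_rewards(logp_model, logp_ref, beta=0.05):
    """Per-token implicit process rewards.

    logp_model[t] and logp_ref[t] are the log-probabilities that the
    reward model and the reference model assign to token t of a response.
    beta is a scaling coefficient (the value here is an assumption).
    """
    if len(logp_model) != len(logp_ref):
        raise ValueError("both log-prob sequences must have the same length")
    return [beta * (lm - lr) for lm, lr in zip(logp_model, logp_ref)]


def implicit_outcome_reward(logp_model, logp_ref, beta=0.05):
    """Sequence-level reward: beta * log(pi_model(y) / pi_ref(y))."""
    return beta * (sum(logp_model) - sum(logp_ref))


# Toy log-probabilities for a 4-token response (made-up numbers).
model_lp = [-0.2, -1.0, -0.5, -0.1]
ref_lp = [-0.4, -0.9, -1.5, -0.3]

token_rewards = implicit_token_rewards(model_lp, ref_lp)
total_reward = implicit_outcome_reward(model_lp, ref_lp)

# The token-level rewards add up to the sequence-level reward, which is
# why training on outcome labels alone also yields per-token rewards.
print(math.isclose(sum(token_rewards), total_reward))
```

Because the sequence-level reward decomposes into per-token terms, a model trained only on right-or-wrong outcome labels can still be queried for a reward at every token.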

Based on this nature of the implicit process reward model, the researchers point to three major advantages of applying it to reinforcement learning:

Implicit process rewards address three major problems of using a PRM in large-model reinforcement learning: how to use it, how to train it, and how to scale it. Reinforcement learning can even be started without training an additional reward model, which makes the approach easy to use and to scale.

The PRIME algorithm flow is shown below. It is an online reinforcement learning algorithm that seamlessly applies token-level process rewards within the reinforcement learning loop.

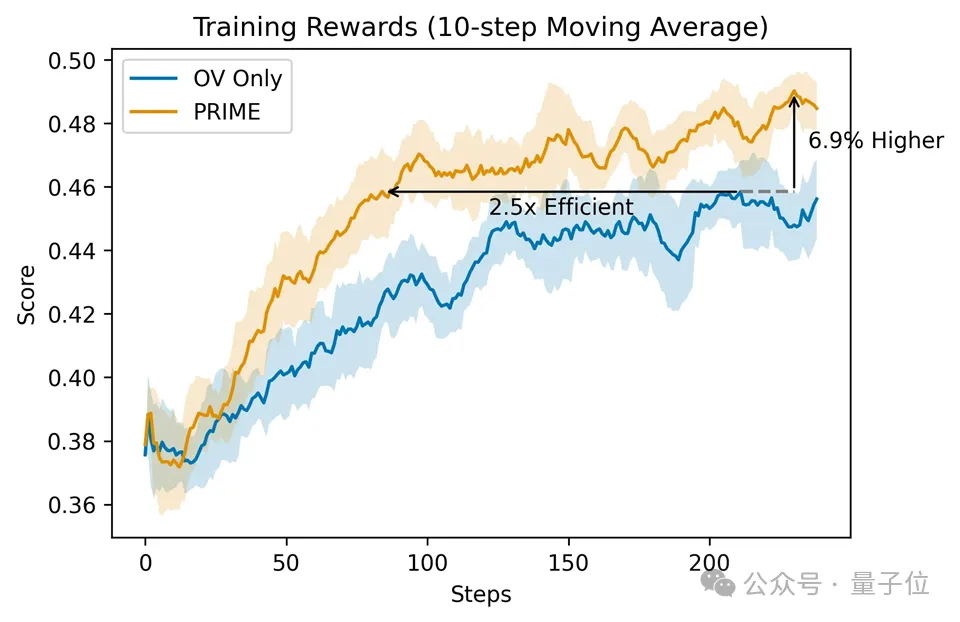
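The article does not spell out how the token-level rewards enter the policy update. As a generic illustration only, and not PRIME's actual advantage estimator, the sketch below adds a sparse outcome reward to the last token of a response and computes a discounted return-to-go for every token. The function name `token_returns` and the discount `gamma` are assumptions made for this example.

```python
import math


def token_returns(process_rewards, outcome_reward, gamma=1.0):
    """Return-to-go for each token of one response.

    process_rewards: dense per-token rewards (e.g. implicit process rewards).
    outcome_reward: sparse reward for the final answer, credited to the
                    last token.
    gamma: discount factor (an assumption; 1.0 means no discounting).
    """
    if not process_rewards:
        raise ValueError("response must contain at least one token")
    rewards = list(process_rewards)
    rewards[-1] += outcome_reward  # the outcome signal arrives at the end
    returns = [0.0] * len(rewards)
    running = 0.0
    # Accumulate from the last token backwards.
    for t in range(len(rewards) - 1, -1, -1):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


# Toy example: three tokens with dense rewards and a correct final answer.
returns = token_returns([0.1, -0.2, 0.3], outcome_reward=1.0)
print(returns)
```

With outcome supervision alone, every token would see the same single signal. With dense rewards, each token's return also reflects the rewards of the tokens that follow it.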

The researchers compared PRIME with baseline methods in detail.

Compared with outcome supervision alone, PRIME improves sample efficiency by 2.5x and brings a significant improvement on downstream tasks.

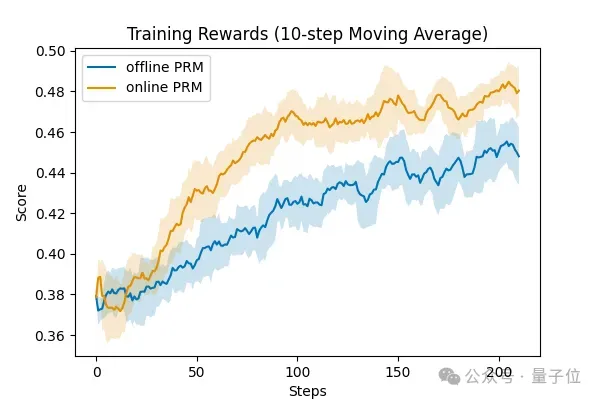

The researchers also verified the importance of updating the PRM online. An online-updated PRM performs significantly better than a fixed PRM, which supports the soundness of PRIME's algorithm design.

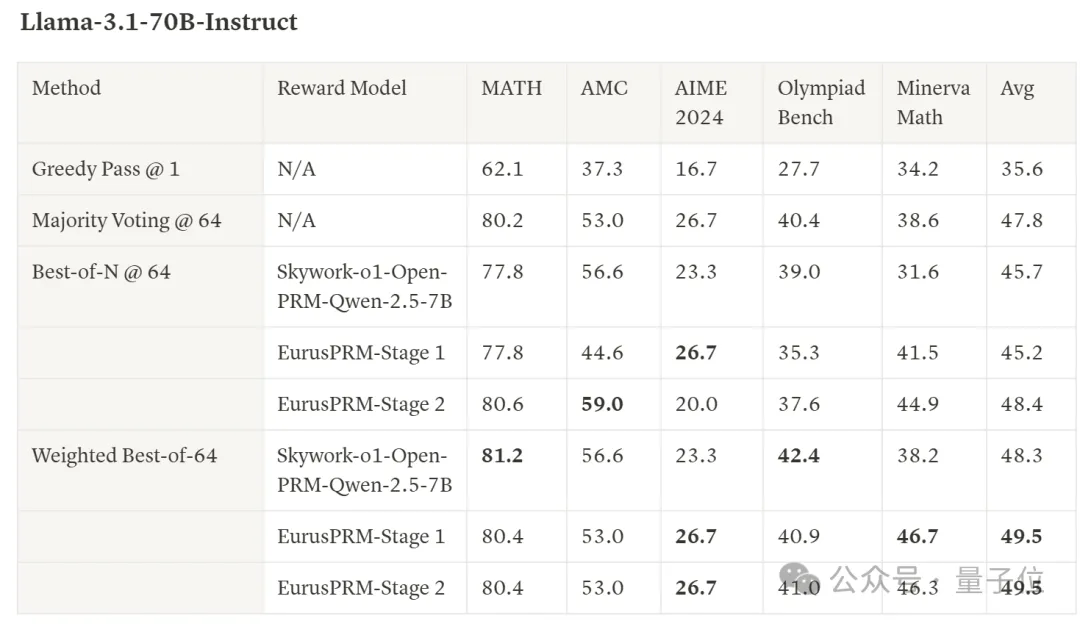

In addition, the researchers collected additional data and, based on Qwen2.5-Math-Instruct, trained EurusPRM, a state-of-the-art PRM that leads open-source models in Best-of-N sampling.

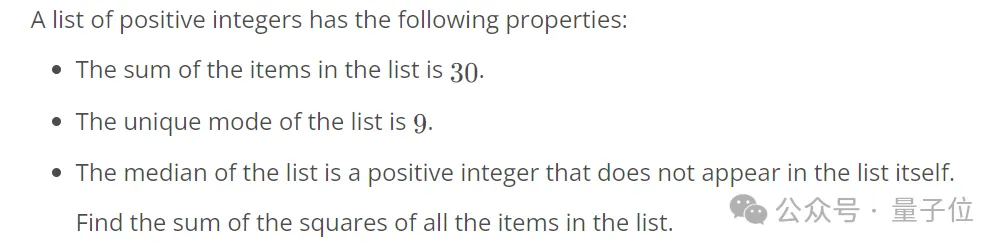

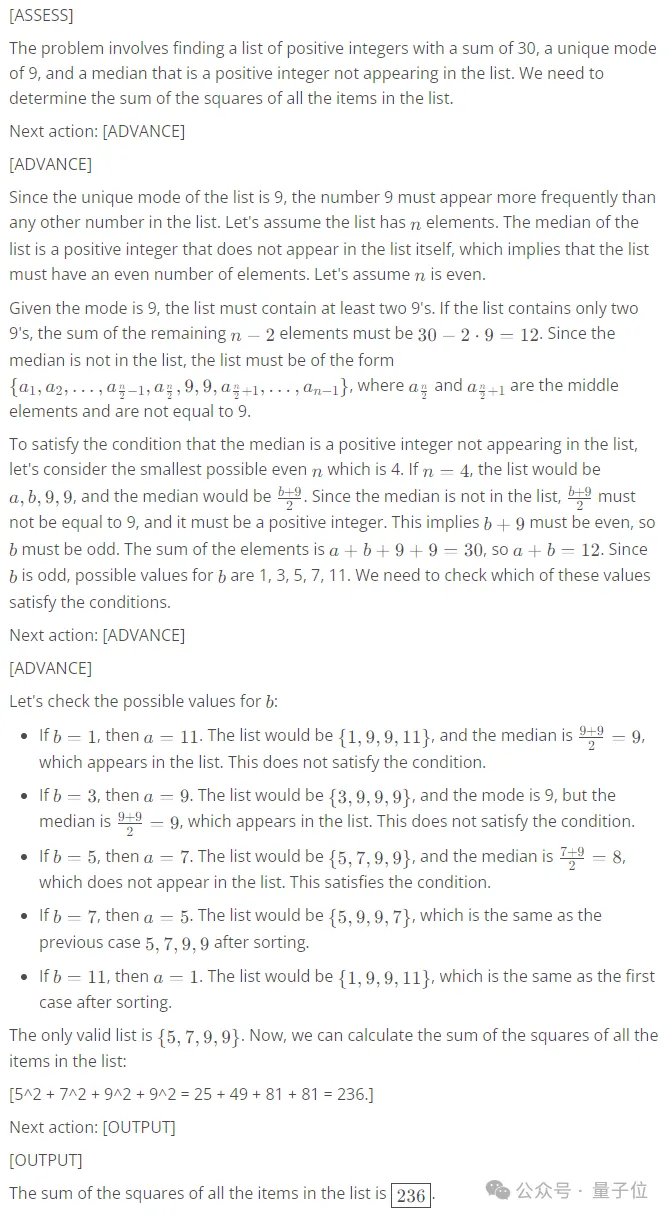
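Best-of-N sampling, the setting in which EurusPRM is evaluated, can be sketched in a few lines: sample N candidate answers, score each with a reward model, and keep the highest-scoring one. The scorer below is a hypothetical stand-in and does not call EurusPRM.

```python
from typing import Callable, Sequence


def best_of_n(candidates: Sequence[str], score: Callable[[str], float]) -> str:
    """Return the candidate with the highest reward-model score."""
    if not candidates:
        raise ValueError("need at least one candidate")
    return max(candidates, key=score)


def toy_score(answer: str) -> float:
    """Hypothetical scorer: rewards answers that end with '9.9'."""
    return 1.0 if answer.strip().endswith("9.9") else 0.0


samples = [
    "9.11 has more digits, so the larger number is 9.11",
    "Comparing 9.90 with 9.11, the larger number is 9.9",
    "They are equal",
]
chosen = best_of_n(samples, toy_score)
print(chosen)
```

In practice the scorer would be a trained reward model, and the quality of the selected answer depends on how well that model ranks the candidates.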

Question (AIME 2024 question, Claude-3.5-Sonnet error)

Answer

Question

Which number is larger? 9.11 or 9.9?

Answer

Reinforcement learning is a bridge between existing agents (large models) and the real world (world models, embodied intelligence), and a way to internalize feedback from the world into model intelligence. It will play an important role in the development of the next generation of artificial intelligence. The PRIME algorithm combines implicit process rewards with reinforcement learning, addresses the sparse-reward problem in large-model reinforcement learning, and is expected to further improve the complex reasoning ability of large models.

Blog link: https://curvy-check-498.notion.site/Process-Reinforcement-through-Implicit-Rewards-15f4fcb9c42180f1b498cc9b2eaf896f

GitHub link: https://github.com/PRIME-RL/PRIME