
#News · 2025-01-06

Today's article is a reader contribution to our WeChat official account. Tsinghua University and ByteDance have jointly proposed a new virtual dressing method that customizes a character from reference garments and a text prompt. Its core goal is to solve three problems: combining multiple garments, responding faithfully to the text prompt, and preserving garment details.

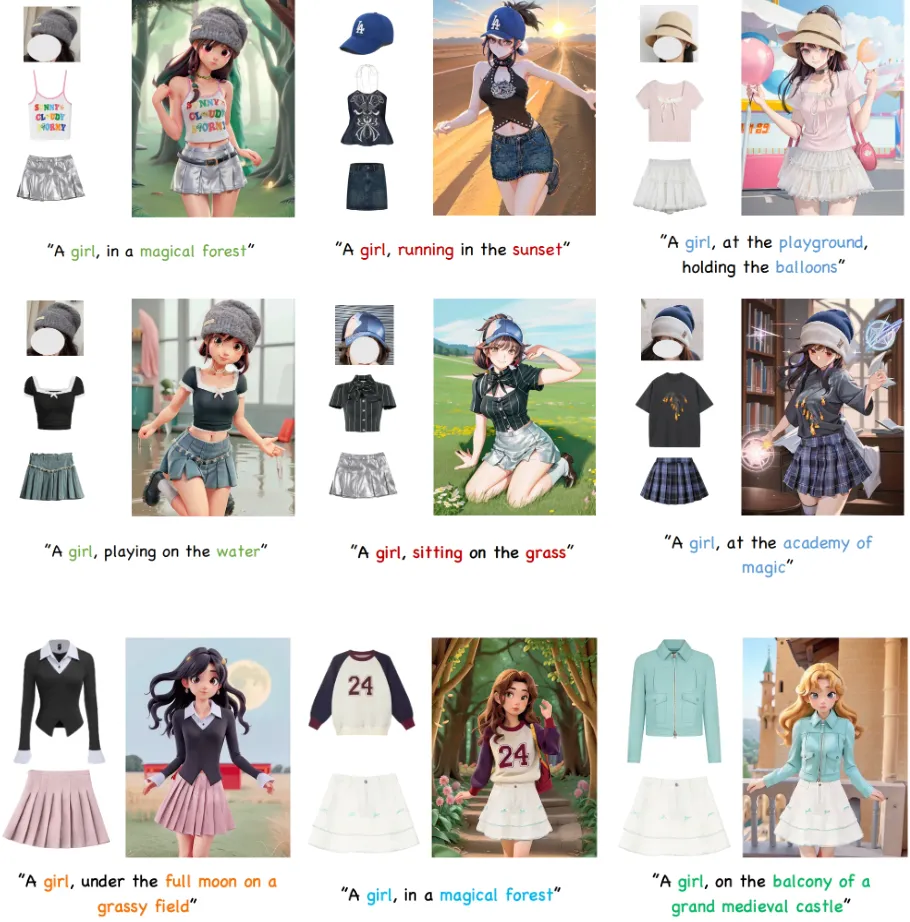

• Reliability: AnyDressing is suitable for a variety of scenes and complex clothing.

• Compatibility: AnyDressing is compatible with LoRA and plugins such as ControlNet and FaceID.

• Project page: https://crayon-shinchan.github.io/AnyDressing/

• Huggingface: https://huggingface.co/papers/2412.04146

• Code: https://github.com/Crayon-Shinchan/AnyDressing

• Paper: https://arxiv.org/abs/2412.04146

AnyDressing: Customizable Multi-Garment Virtual Dressing via Latent Diffusion Models

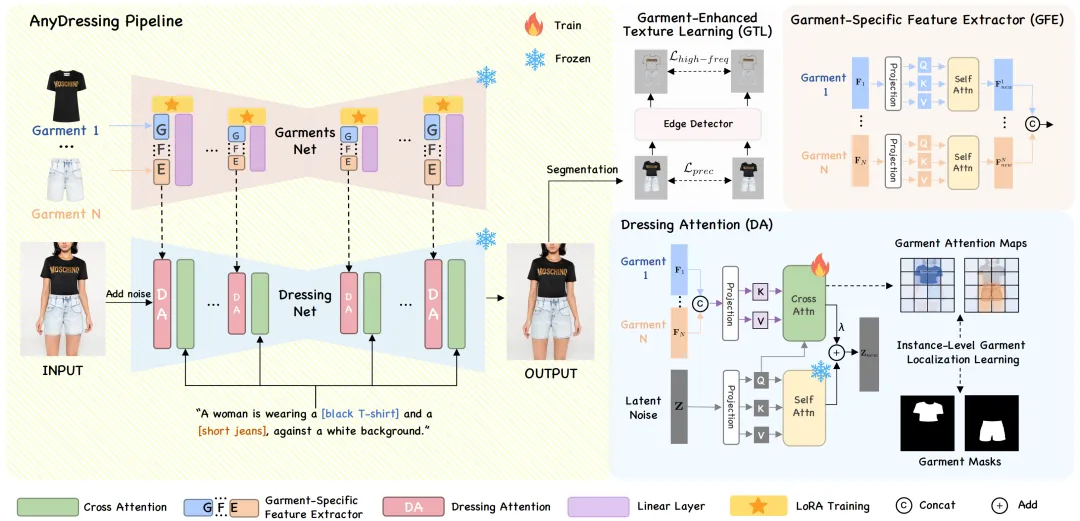

Recent advances in garment-centric image generation from text and image prompts based on diffusion models are impressive. However, existing methods lack support for varied garment combinations and struggle to preserve garment details while staying faithful to the text prompt, which limits their performance across different scenes. In this paper, we focus on a new task, multi-garment virtual dressing, and propose a novel method, AnyDressing, for customizing characters conditioned on any combination of garments and any personalized text prompt. AnyDressing consists of two main networks, GarmentsNet and DressingNet, which extract detailed garment features and generate the customized images, respectively. Specifically, we propose an efficient and scalable module in GarmentsNet, the Garment-Specific Feature Extractor, which encodes each garment's texture individually and in parallel. This design prevents garment confusion while keeping the network efficient. In DressingNet, we design an adaptive Dressing-Attention mechanism and a novel Instance-Level Garment Localization Learning strategy to inject the features of each garment accurately into its corresponding region. This effectively integrates the texture cues of multiple garments into the generated image and further improves text-image consistency. In addition, we introduce a Garment-Enhanced Texture Learning strategy to improve the fine-grained texture details of garments. Thanks to this design, AnyDressing can serve as a plug-in module that integrates easily with any community control extension for diffusion models, increasing the diversity and controllability of the synthesized images. Extensive experiments show that AnyDressing achieves state-of-the-art results.
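The idea of keeping each garment's features separate and injecting them through attention can be illustrated with a small NumPy sketch. This is not the authors' implementation: the function `dressing_attention_sketch`, the token shapes, and the choice to simply add one cross-attention branch per garment to the self-attention output are all assumptions made for illustration, and the learned projection matrices a real attention layer would have are omitted.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(q, k, v):
    # Plain scaled dot-product attention (no learned projections).
    d = q.shape[-1]
    scores = q @ k.T / np.sqrt(d)
    return softmax(scores) @ v

def dressing_attention_sketch(image_tokens, garment_tokens_list):
    # Hypothetical fusion rule: self-attention over the image tokens,
    # plus one independent cross-attention branch per garment.
    # Each garment stays in its own branch, so its features are never
    # mixed with another garment's before injection.
    out = attention(image_tokens, image_tokens, image_tokens)
    for garment_tokens in garment_tokens_list:
        out = out + attention(image_tokens, garment_tokens, garment_tokens)
    return out

rng = np.random.default_rng(0)
image_tokens = rng.normal(size=(16, 8))        # 16 latent positions, dim 8
garments = [rng.normal(size=(4, 8)),           # e.g. features of a top
            rng.normal(size=(6, 8))]           # e.g. features of trousers
fused = dressing_attention_sketch(image_tokens, garments)
print(fused.shape)  # (16, 8)
```

Because each garment has its own branch, adding a third garment only means appending another array to `garments`; nothing else in the sketch changes.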

Given N target garments, AnyDressing customizes a character wearing all of them. GarmentsNet uses the Garment-Specific Feature Extractor (GFE) module to extract detailed features from multiple garments. DressingNet uses the Dressing-Attention (DA) module and the Instance-Level Garment Localization Learning mechanism to integrate these features for virtual dressing. In addition, the Garment-Enhanced Texture Learning (GTL) strategy further enhances texture detail.
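One way to picture instance-level localization is as a penalty that pushes each garment's attention map toward the region where that garment should appear. The sketch below assumes a mean-squared-error penalty between per-garment attention maps and binary region masks. The function name `localization_loss_sketch`, the MSE form, and the toy 2×2 maps are illustrative assumptions, not details taken from the article.

```python
import numpy as np

def localization_loss_sketch(attn_maps, masks):
    # attn_maps, masks: arrays of shape (num_garments, H, W).
    # masks are binary: 1 where the garment should appear, 0 elsewhere.
    # Hypothetical penalty: mean squared difference between each
    # garment's attention map and its region mask.
    attn_maps = np.asarray(attn_maps, dtype=float)
    masks = np.asarray(masks, dtype=float)
    return float(np.mean((attn_maps - masks) ** 2))

# Toy example: a top occupies the upper row, trousers the lower row.
masks = np.array([[[1, 1], [0, 0]],
                  [[0, 0], [1, 1]]])

aligned = masks.astype(float)   # each garment attends to its own region
swapped = aligned[::-1]         # each garment attends to the other's region

loss_aligned = localization_loss_sketch(aligned, masks)
loss_swapped = localization_loss_sketch(swapped, masks)
print(loss_aligned, loss_swapped)  # 0.0 1.0
```

Under this assumed penalty, attention that lands on the wrong garment's region is the most expensive case, which matches the stated goal of injecting each garment's features only into its corresponding area.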

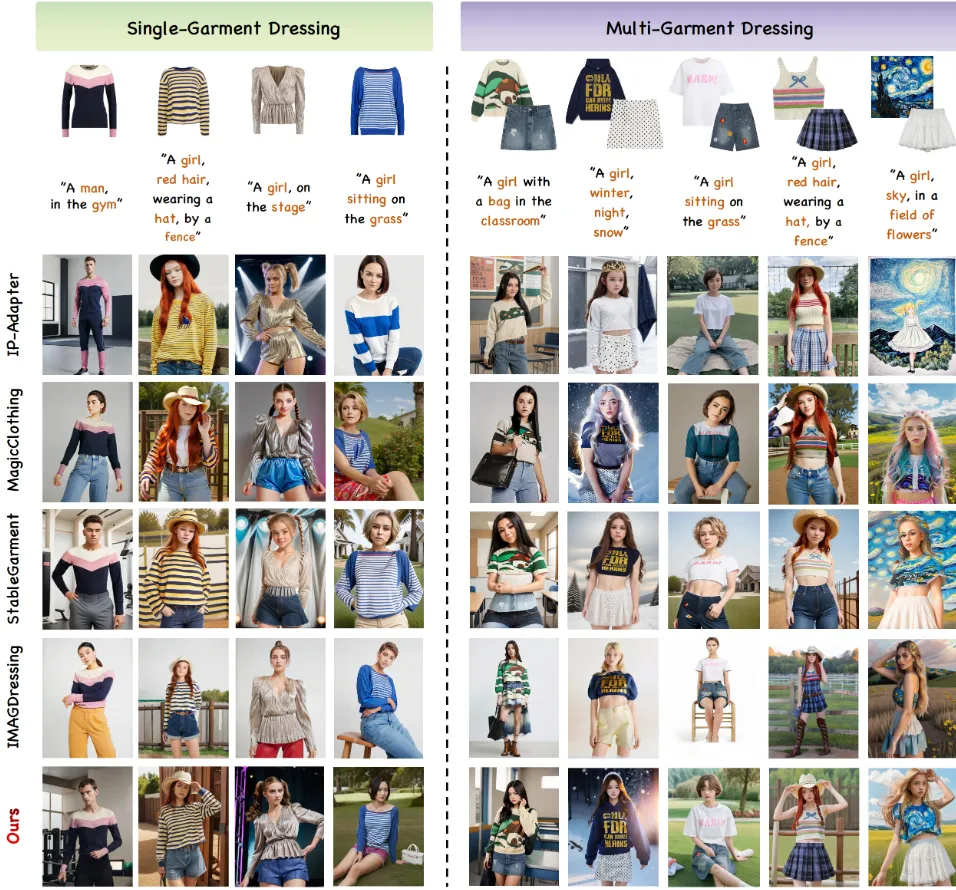

Qualitative comparison with state-of-the-art single-garment and multi-garment dressing methods.

This paper introduces AnyDressing, a method for the new task of multi-garment virtual dressing built on two core networks, GarmentsNet and DressingNet. GarmentsNet uses the Garment-Specific Feature Extractor module to efficiently encode the features of multiple garments in parallel. DressingNet uses the Dressing-Attention module and the Instance-Level Garment Localization Learning mechanism to integrate these features for virtual dressing. In addition, a Garment-Enhanced Texture Learning strategy further improves texture detail. The approach integrates seamlessly with any community control plug-in, and extensive experiments show that AnyDressing achieves state-of-the-art results.
