
#News ·2025-01-06

This article is reprinted with authorization from the AIGC Studio WeChat public account; please contact the original source for reprint permission.

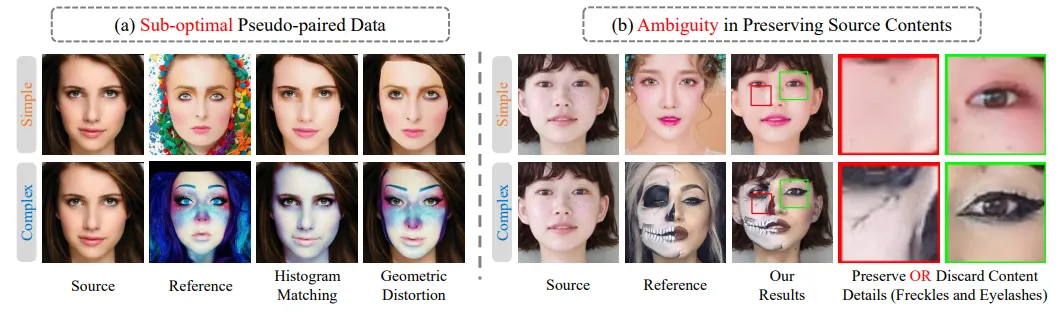

Current makeup transfer technology faces two main challenges:

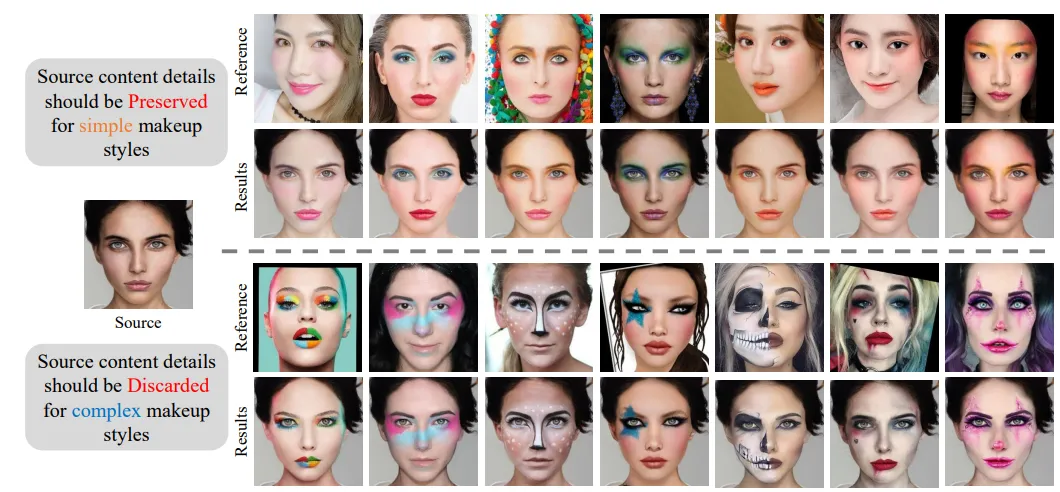

The method introduced today is Self-supervised Hierarchical Makeup Transfer (SHMT), proposed by Alibaba and Wuhan University of Technology. It applies a wide variety of makeup styles to a given face image naturally and accurately. By adopting a "decoupling and reconstruction" self-supervised learning strategy, SHMT avoids being misled by pseudo-paired data. It also uses a Laplacian pyramid to decompose texture details into hierarchical layers, which lets it flexibly control which details are retained or discarded for each makeup style. Beyond color matching, the method can therefore keep or drop the texture details of different makeup styles without changing the shape of the face.

This paper studies the challenging task of makeup transfer, which aims to apply various makeup styles to a given face image accurately and naturally. Because paired data are unavailable, current methods typically synthesize sub-optimal pseudo ground truths to guide model training, which results in low makeup fidelity. Moreover, different makeup styles affect the face in different ways, and existing methods struggle to handle this diversity. To address these issues, we propose a novel Self-supervised Hierarchical Makeup Transfer (SHMT) method built on a latent diffusion model. Following a "decoupling and reconstruction" paradigm, SHMT is trained in a self-supervised manner and is therefore free from the misguidance of imprecise pseudo-paired data. To accommodate various makeup styles, hierarchical texture details are decomposed with a Laplacian pyramid and selectively introduced into the content representation. Finally, we design a novel Iterative Dual Alignment (IDA) module that dynamically adjusts the injection condition of the diffusion model, correcting alignment errors caused by the domain gap between the content and makeup representations. Extensive quantitative and qualitative analyses demonstrate the effectiveness of our approach.
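To make the Laplacian-pyramid idea concrete, here is a minimal pure-Python sketch that decomposes a grayscale image into high-frequency layers h_1 (finest) to h_L plus a low-frequency residual, then rebuilds it while keeping only some of those layers. It is an illustration under simplifying assumptions, not SHMT's code: a 2x2 box average stands in for the usual Gaussian blur, upsampling is nearest-neighbour, and the hypothetical `keep` parameter (which drops the finest layers first) is just a stand-in for the knob that controls how much texture detail survives.

```python
from typing import List, Tuple

Image = List[List[float]]  # grayscale image as rows of pixel values


def downsample(img: Image) -> Image:
    """Halve resolution by averaging each 2x2 block (box filter stand-in for Gaussian blur)."""
    h, w = len(img), len(img[0])
    return [
        [
            (img[2 * r][2 * c] + img[2 * r][2 * c + 1]
             + img[2 * r + 1][2 * c] + img[2 * r + 1][2 * c + 1]) / 4.0
            for c in range(w // 2)
        ]
        for r in range(h // 2)
    ]


def upsample(img: Image) -> Image:
    """Double resolution by repeating each pixel into a 2x2 block (nearest neighbour)."""
    out: Image = []
    for row in img:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def subtract(a: Image, b: Image) -> Image:
    return [[x - y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def add(a: Image, b: Image) -> Image:
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def laplacian_pyramid(img: Image, levels: int) -> Tuple[List[Image], Image]:
    """Return ([h_1, ..., h_L], low-frequency residual).

    Each layer is the detail lost by one downsample/upsample round trip.
    Assumes both image sides are divisible by 2 ** levels.
    """
    highs: List[Image] = []
    current = img
    for _ in range(levels):
        low = downsample(current)
        highs.append(subtract(current, upsample(low)))
        current = low
    return highs, current


def reconstruct(highs: List[Image], low: Image, keep: int) -> Image:
    """Rebuild the image, adding back only the `keep` coarsest detail layers.

    keep == len(highs) gives exact reconstruction; keep == 0 keeps only the residual.
    """
    img = low
    n = len(highs)
    for i in reversed(range(n)):  # walk from the coarsest layer to the finest
        img = upsample(img)
        if i >= n - keep:
            img = add(img, highs[i])
    return img


if __name__ == "__main__":
    img = [
        [10, 12, 30, 34],
        [14, 16, 38, 42],
        [50, 54, 70, 70],
        [58, 62, 70, 70],
    ]
    highs, low = laplacian_pyramid(img, levels=2)
    full = reconstruct(highs, low, keep=2)    # identical to img
    smooth = reconstruct(highs, low, keep=0)  # every pixel equals the global mean
    print(full)
    print(smooth)
```

Keeping all layers reproduces the input exactly, while `keep=0` leaves only the coarse structure (for this 4x4 example, the global mean). Which layers SHMT actually feeds into its content representation for a given makeup style is a design choice of the method itself and is not reproduced here; the sketch only shows the decomposition mechanism.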

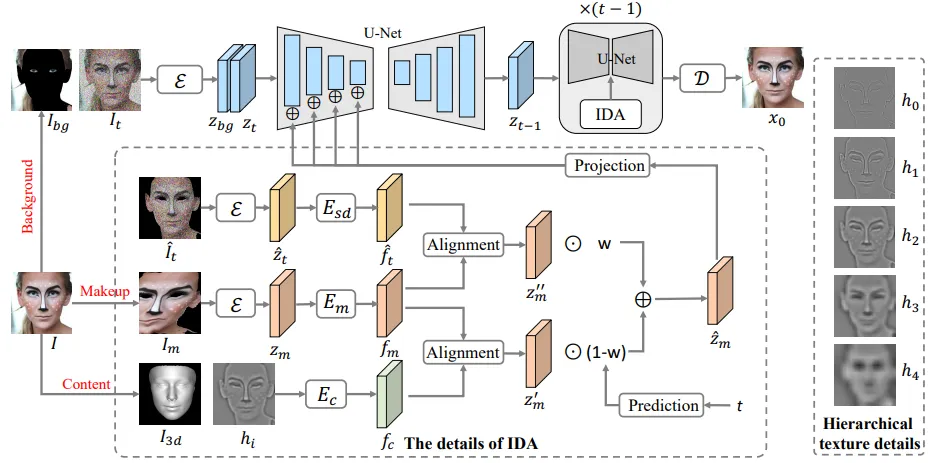

Framework of SHMT. The face image I is decomposed into the background region I_bg, the makeup representation I_m, and the content representation (I_3d, h_i). Makeup transfer is simulated by reconstructing the original image from these components. The hierarchical texture details h_i are constructed to accommodate different makeup styles. At each denoising step t, IDA uses the noisy intermediate result to dynamically adjust the injection condition and correct alignment errors.
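The caption says IDA works from the noisy intermediate result at each denoising step t. The article does not spell out how that result is used. One standard way a DDPM-style sampler turns a noisy latent z_t into a usable estimate is to invert the forward process with the predicted noise: z0_hat = (z_t - sqrt(1 - alpha_bar_t) * eps_hat) / sqrt(alpha_bar_t). The sketch below shows only that standard step, assuming the linear beta schedule from the original DDPM paper (1e-4 to 0.02 over 1000 steps). Whether SHMT's IDA consumes exactly this estimate is an assumption, and the alignment itself is not modeled.

```python
import math
import random
from typing import List


def linear_alpha_bars(num_steps: int = 1000,
                      beta_start: float = 1e-4,
                      beta_end: float = 0.02) -> List[float]:
    """Cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s) for a linear beta schedule."""
    alpha_bars: List[float] = []
    prod = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def add_noise(z0: List[float], noise: List[float], alpha_bar: float) -> List[float]:
    """Forward process: z_t = sqrt(alpha_bar) * z0 + sqrt(1 - alpha_bar) * noise."""
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [a * z + b * n for z, n in zip(z0, noise)]


def predict_z0(zt: List[float], eps_hat: List[float], alpha_bar: float) -> List[float]:
    """Clean-latent estimate from a noisy intermediate and the predicted noise."""
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [(z - b * e) / a for z, e in zip(zt, eps_hat)]


if __name__ == "__main__":
    random.seed(0)
    alpha_bars = linear_alpha_bars()
    z0 = [0.5, -0.2, 0.1]  # toy stand-in for a latent
    noise = [random.gauss(0.0, 1.0) for _ in z0]
    t = 500
    zt = add_noise(z0, noise, alpha_bars[t])
    # Oracle: reuse the true noise instead of a trained network's prediction,
    # so the estimate matches z0 up to floating-point rounding.
    z0_hat = predict_z0(zt, noise, alpha_bars[t])
    print(max(abs(a - b) for a, b in zip(z0_hat, z0)))
```

With a trained denoiser, eps_hat is only an approximation, so the estimate is rough at large t and improves as t decreases. This is one plausible reason to re-adjust the condition at every step rather than aligning once up front.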

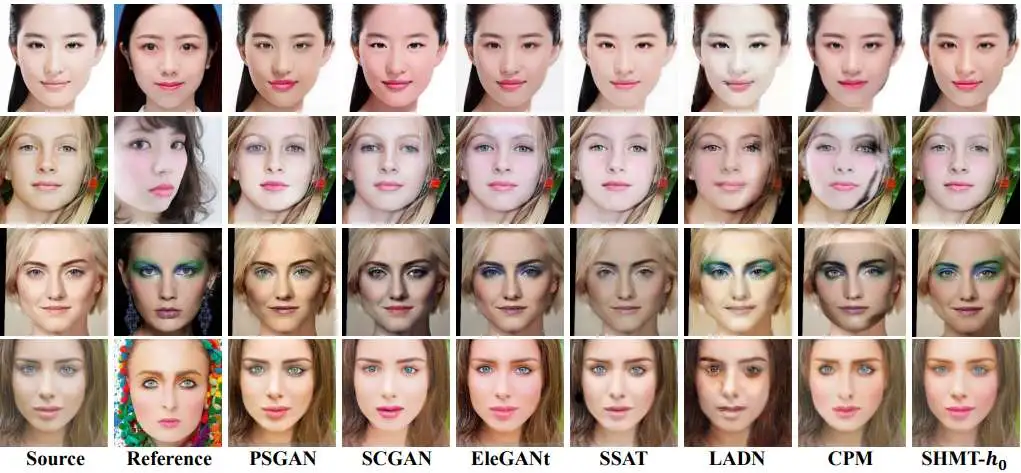

Qualitative comparison with GAN-based baselines on simple makeup styles.

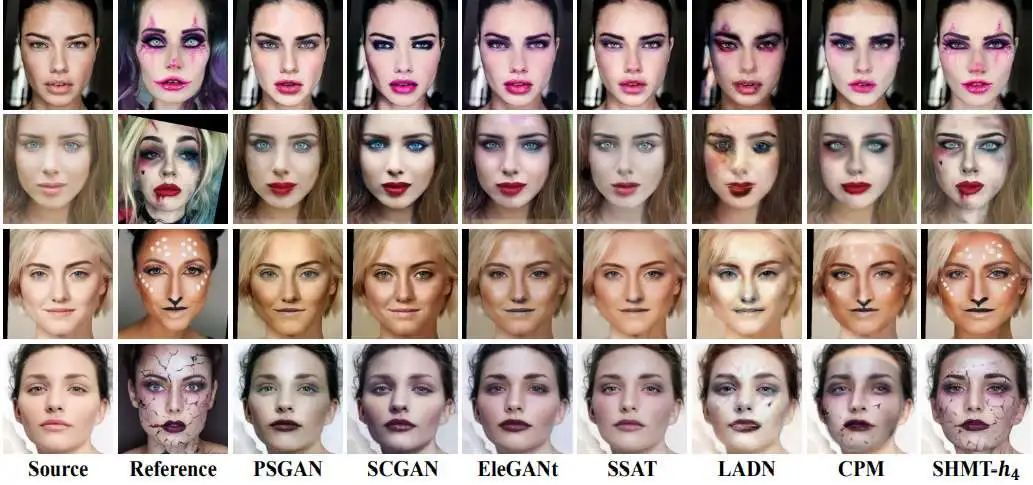

Qualitative comparison with GAN-based baselines on complex makeup styles.

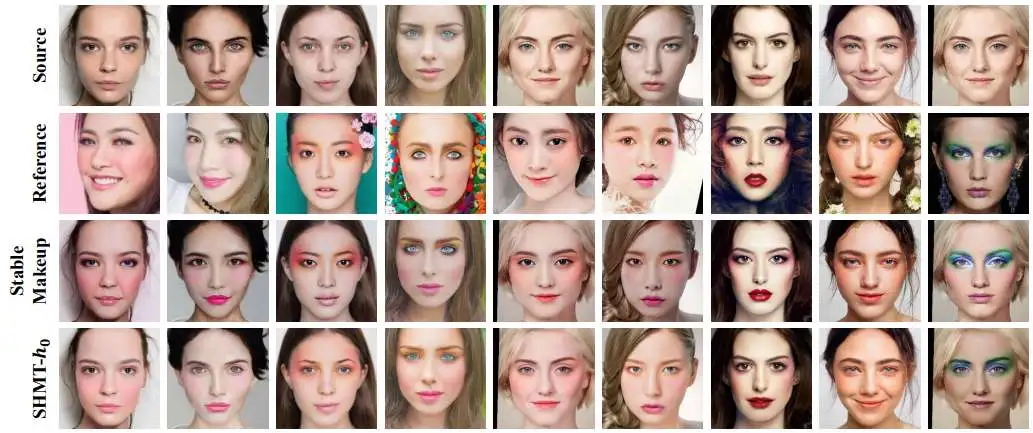

Qualitative comparison with the Stable-Makeup baseline on simple makeup styles.

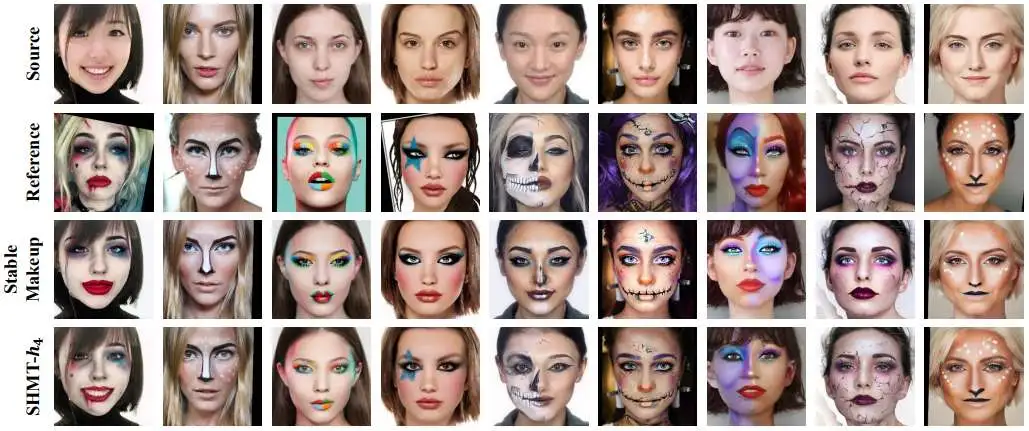

Qualitative comparison with the Stable-Makeup baseline on complex makeup styles.

In this paper, we propose a Self-supervised Hierarchical Makeup Transfer (SHMT) method. It trains the model with a self-supervised strategy, avoiding the misguidance of pseudo-paired data that affected previous methods. Thanks to its hierarchical texture details, SHMT can flexibly control how much texture detail is retained or discarded, which makes it adaptable to a variety of makeup styles. In addition, the proposed IDA module effectively corrects alignment errors, thereby improving makeup fidelity. Both quantitative and qualitative analyses demonstrate the effectiveness of SHMT.
