
#News ·2025-01-03

In the movie "Iron Man," Tony Stark's assistant Jarvis (J.A.R.V.I.S.) helps him control various systems and complete tasks automatically, which has left countless viewers envious.

Now, such a super intelligent assistant has finally become a reality!

With the rapid evolution of multimodal large language models, OS Agents have emerged: they can control computers and phones and automatically handle tedious tasks for you.

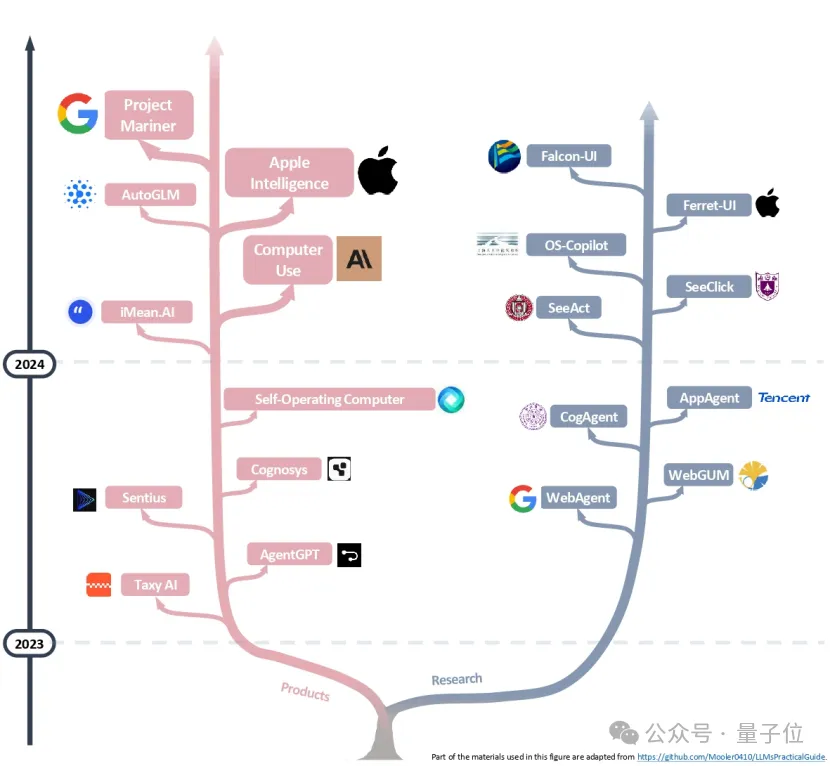

From Anthropic's Computer Use, to Apple's Apple Intelligence, to Zhipu AI's AutoGLM and Google DeepMind's Project Mariner, the ambitions of tech giants all point to the same goal: building a true operating-system-level intelligent assistant.

OS Agents are no longer just "assistants"; they are rewriting the rules of "human-computer interaction."

Recently, Zhejiang University, together with OPPO and ten other institutions, jointly published a survey, "OS Agents: A Survey on MLLM-based Agents for General Computing Devices Use," which not only explains the core technical structure of OS Agents in detail, but also takes stock of their evaluation methods and future challenges.

Could the next big thing in tech be OS Agents?

Super AI assistants like Jarvis, commonly referred to as OS Agents, are capable of automating various tasks on computing devices such as computers or mobile phones through environments and interfaces (such as graphical user interfaces, GUIs) provided by operating systems (OS).

OS Agents have enormous potential to improve the lives of billions of users around the world. Imagine a world where everyday activities such as online shopping and booking travel are handled seamlessly by these agents, dramatically increasing people's efficiency and productivity.

In the past, AI assistants such as Siri[1], Cortana[2], and Google Assistant[3] have demonstrated this potential. However, because model capabilities were limited at the time, these products could handle only a limited range of tasks.

Fortunately, the development of multimodal large language models, including the Gemini[4], GPT[5], Grok[6], Yi[7] and Claude[8] series (ranked according to the Chatbot Arena LLM Leaderboard as updated on December 22, 2024 [9]), has opened up new possibilities in this field.

(M)LLMs demonstrate an impressive ability to enable OS Agents to better understand complex tasks and execute them on computing devices.

Foundation model companies and handset makers have been making many moves in this area recently. Examples include Computer Use[10] by Anthropic, Apple Intelligence[11] by Apple, AutoGLM[12] by Zhipu AI, and Project Mariner[13] by Google DeepMind.

Among them, Computer Use uses Claude[14] to interact directly with the user's computer to achieve seamless task automation.

At the same time, academia has proposed various ways to build OS Agents based on (M)LLMs.

For example, OS-Atlas[15] proposes a foundation GUI model. By synthesizing GUI operation data across multiple platforms, it greatly improves the model's ability to operate GUIs and its performance on out-of-distribution (OOD) tasks.

OS-Copilot[16] is an OS Agents framework that enables agents to automate a wide range of computer tasks with little supervision, and has demonstrated its generalization and self-improvement capabilities in a variety of applications.

This paper is a comprehensive review of OS Agents.

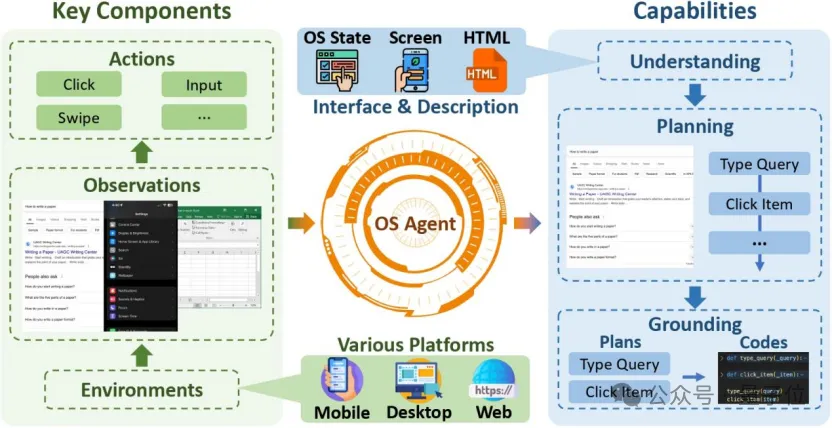

First, it lays out the fundamentals of OS Agents, discussing their key elements (environment, observation space and action space) and outlining core capabilities such as understanding, planning and performing operations.

Then, it examines the methods for building OS Agents, focusing on the development of domain-specific foundation models and agent frameworks.

It then reviews evaluation protocols and benchmarks in detail, showing how OS Agents can be evaluated across a variety of tasks.

Finally, the paper discusses current challenges and points out potential directions for future research, including security and privacy, personalization and self-evolution.

This paper aims to map the current state of OS Agents research and to support both academic research and industrial development.

To further drive innovation in the field, the team also maintains an open source GitHub repository of 250+ papers on OS Agents and other related resources, which is still being updated. (Link at end of article ~)

For OS Agents to control computing devices in a general way, they must interact with the environment and the input and output interfaces provided by the operating system.

To meet this interaction requirement, existing OS Agents rely on three key elements: the environment, the observation space, and the action space.

Beyond these key elements, interacting with the operating system correctly and effectively tests the abilities of OS Agents themselves in every respect.

The core capabilities that OS Agents must master can be summarized as understanding, planning, and performing operations:

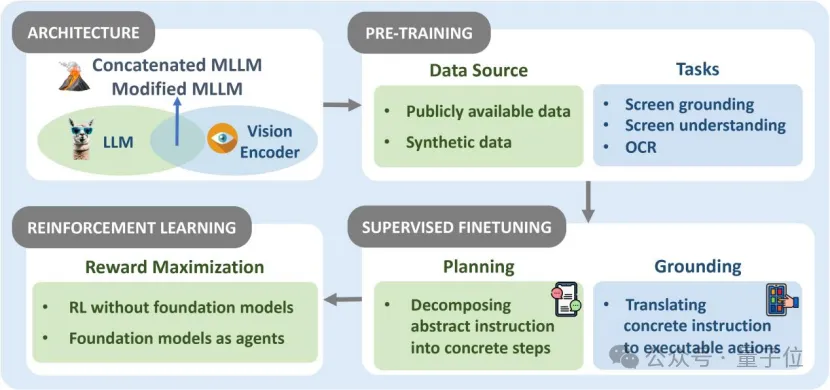
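To make these elements concrete, here is a minimal sketch of how an environment, an observation, an action, and a simple understand-plan-act loop might fit together. Everything in it is an illustrative assumption rather than anything taken from the survey: `ToyEnvironment`, `Observation`, `Action`, `policy`, and `run` are hypothetical names, and the rule-based `policy` stands in for what would be an (M)LLM in a real agent.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """Observation space: here, a plain-text description of the screen."""
    screen_text: str


@dataclass
class Action:
    """Action space: an operation name plus its argument."""
    kind: str      # "type" or "click" in this toy example
    target: str


@dataclass
class ToyEnvironment:
    """Hypothetical stand-in for an OS: a form with one text box and a submit button."""
    typed: str = ""
    submitted: bool = False

    def observe(self) -> Observation:
        state = "submitted" if self.submitted else "editing"
        return Observation(f"state={state}; textbox='{self.typed}'")

    def step(self, action: Action) -> None:
        if action.kind == "type":
            self.typed += action.target
        elif action.kind == "click" and action.target == "submit":
            self.submitted = True


def policy(goal: str, obs: Observation) -> Action:
    """Rule-based placeholder for the model: read the screen, pick the next action."""
    if f"textbox='{goal}'" not in obs.screen_text:
        return Action("type", goal)
    return Action("click", "submit")


def run(goal: str, env: ToyEnvironment, max_steps: int = 5) -> int:
    """Observe, decide, act; return the number of steps taken."""
    for step in range(1, max_steps + 1):
        env.step(policy(goal, env.observe()))
        if env.submitted:
            return step
    return max_steps
```

Calling `run("hello", ToyEnvironment())` types the text on the first step and clicks submit on the second.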

To build OS Agents that can perform tasks efficiently, the core lies in developing foundation models adapted to the task.

These models need not only to understand complex screen interfaces, but also to perform tasks in multimodal scenarios.

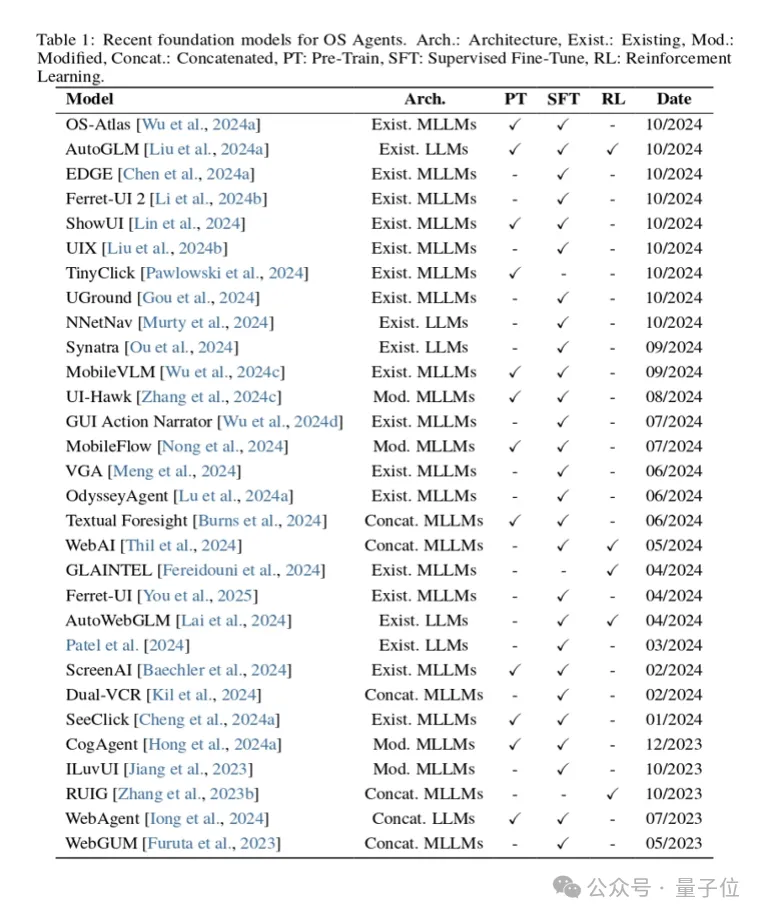

The following is a detailed summary of the architecture and training strategies of these foundation models:

Architecture: The main model architectures fall into four categories:

1. Existing LLMs: directly use an open-source large language model architecture and feed structured screen interface information to the LLM as text, so that the model can perceive the environment.
2. Existing MLLMs: directly adopt an open-source multimodal large language model architecture, which integrates text and visual processing, improves GUI understanding, and reduces the feature loss caused by converting visual information to text.
3. Concatenated MLLMs: bridge an LLM and a visual encoder, which offers more flexibility because different language and vision models can be combined according to task requirements.
4. Modified MLLMs: optimize an existing MLLM architecture to solve scenario-specific challenges, for example by adding modules (such as high-resolution visual encoders or image segmentation modules) to perceive and understand screen interface details more finely.

Pre-training: Pre-training lays the foundation for model construction and improves the ability to understand the screen interface through massive data. Data sources include public data sets and synthetic data sets. The pre-training tasks cover Screen Grounding, Screen Understanding, and optical character recognition (OCR).

Supervised Fine-tuning: Supervised fine-tuning adapts the model to GUI scenarios and is an important means of improving the planning and execution abilities of OS Agents. For example, training data can be generated by recording task execution trajectories, or HTML can be used to render screen interface details, improving the model's ability to generalize to different GUIs.

Reinforcement Learning: At this stage, reinforcement learning marks a paradigm shift from using (M)LLMs as feature extractors to (M)LLM-as-Agent, helping OS Agents interact with dynamic environments and continually optimize decisions based on reward feedback. This approach not only improves agent alignment, but also gives visual and multimodal agents stronger generalization and task adaptation.

Recent papers on foundation models for OS Agents are summarized as follows:
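As a rough illustration of turning recorded trajectories into supervised training data, the sketch below converts one trajectory into prompt/target pairs, one per step. The prompt layout, the `trajectory_to_examples` helper, and the action strings are assumptions made for this example; the survey does not prescribe a specific format.

```python
def trajectory_to_examples(instruction, trajectory):
    """Turn one recorded trajectory into (prompt, target) pairs, one per step.

    `trajectory` is a list of (screen_description, action) tuples.
    The prompt format is hypothetical, chosen only for illustration.
    """
    examples = []
    history = []
    for screen, action in trajectory:
        prompt = (
            f"Task: {instruction}\n"
            f"Previous actions: {'; '.join(history) or 'none'}\n"
            f"Screen: {screen}\n"
            "Next action:"
        )
        # The model is trained to produce the recorded action for this screen.
        examples.append({"prompt": prompt, "target": action})
        history.append(action)
    return examples


recorded = [
    ("Home screen with a Settings icon", "click(Settings)"),
    ("Settings page with a Wi-Fi toggle", "click(Wi-Fi)"),
]
sft_examples = trajectory_to_examples("Turn on Wi-Fi", recorded)
```

Each example pairs what the agent saw and had already done with the action it took next, which is the signal a fine-tuned model learns to imitate.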

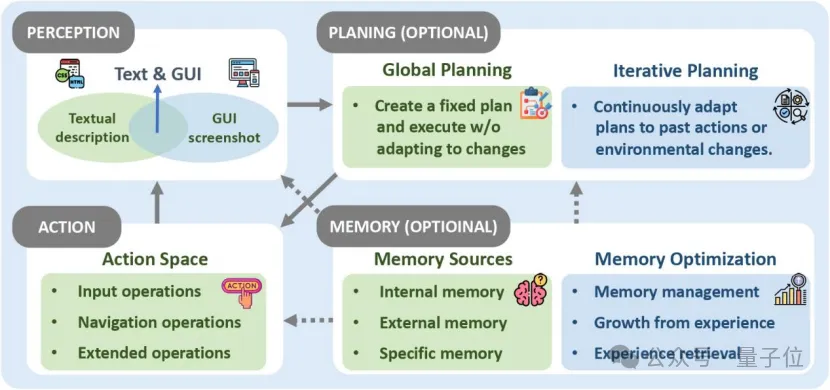

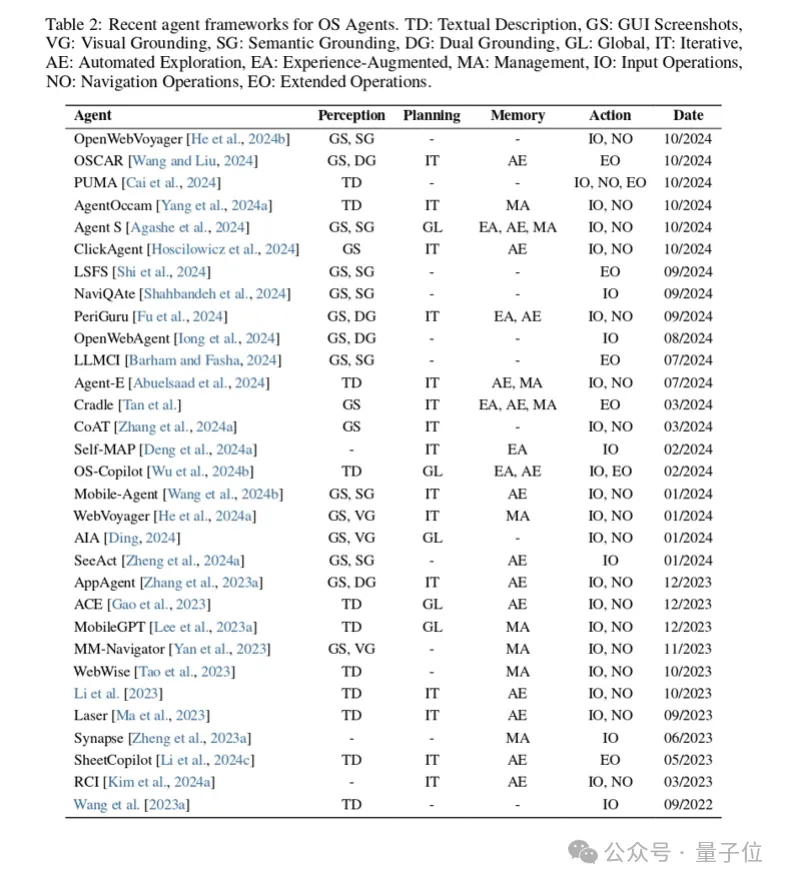

In addition to a powerful foundation model, OS Agents also need to be combined with an Agent framework to enhance perception, planning, memory and action capabilities.

These modules work together to enable OS Agents to respond efficiently to complex tasks and environments.

The following is a summary of the four key modules in the OS Agents framework:

Perception: Perception acts as the "eyes" of OS Agents, observing the environment through multimodal input data (e.g., screenshots, HTML documents). We subdivide perception into:

1. Text perception: converts the state of the operating system into a structured text description, such as a DOM tree or HTML file.
2. Screen interface perception: uses a visual encoder to understand screenshots of the screen interface, and accurately identifies key elements through visual grounding (such as buttons and menus) and semantic connections (such as HTML tags).
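A small sketch of text-based perception, using Python's standard `html.parser` module to reduce a page to a numbered list of interactive elements. The choice of tags, the labeling rule, and the names `InteractiveElementParser` and `describe` are assumptions for illustration; real agents typically work from richer DOM or accessibility trees.

```python
from html.parser import HTMLParser


class InteractiveElementParser(HTMLParser):
    """Collects a short text line for each interactive element on the page."""

    # Illustrative choice of tags; not an exhaustive list.
    INTERACTIVE = {"a", "button", "input", "select", "textarea"}

    def __init__(self):
        super().__init__()
        self.elements = []

    def handle_starttag(self, tag, attrs):
        if tag in self.INTERACTIVE:
            attr_map = dict(attrs)
            # Use the first available attribute as a human-readable label.
            label = (
                attr_map.get("aria-label")
                or attr_map.get("name")
                or attr_map.get("id")
                or ""
            )
            index = len(self.elements)
            self.elements.append(f"[{index}] <{tag}> {label}".rstrip())


def describe(html):
    """Return the structured text description the agent would read."""
    parser = InteractiveElementParser()
    parser.feed(html)
    return parser.elements


page = '<div><input name="q"><button id="go">Search</button><p>hi</p></div>'
print(describe(page))
```

The numbered indices give the model a compact way to refer to an element when it chooses an action.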

Planning: As the "brain" of OS Agents, planning is responsible for formulating execution strategies for tasks. It can be divided into:

1. Global planning: generates a complete plan once and then executes it.
2. Iterative planning: dynamically adjusts the plan as the environment changes, so that the agent can adapt to a screen interface and task requirements that update in real time.
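The difference between the two planning styles can be sketched with a toy environment in which a pop-up appears after the first action and blocks everything until it is dismissed. `PopupEnv`, `run_global`, and `run_iterative` are hypothetical names, and the pop-up behavior is an assumption chosen to show why re-checking the screen matters.

```python
class PopupEnv:
    """Toy screen: a pop-up appears after the first action and blocks other actions."""

    def __init__(self):
        self.done = []       # task steps that actually took effect
        self.popup = False
        self._count = 0

    def observe(self):
        return "popup" if self.popup else "normal"

    def act(self, action):
        self._count += 1
        if action == "dismiss":
            self.popup = False
        elif not self.popup:
            self.done.append(action)
        # The pop-up shows up once, right after the first action.
        if self._count == 1:
            self.popup = True


def run_global(env, steps):
    """Global planning: the full plan is fixed up front and never revised."""
    for action in steps:
        env.act(action)
    return env.done


def run_iterative(env, steps, max_actions=10):
    """Iterative planning: look at the screen before each action and adapt."""
    remaining = list(steps)
    for _ in range(max_actions):
        if not remaining:
            break
        if env.observe() == "popup":
            env.act("dismiss")
        else:
            env.act(remaining.pop(0))
    return env.done


plan = ["open", "search", "buy"]
global_result = run_global(PopupEnv(), plan)
iterative_result = run_iterative(PopupEnv(), plan)
```

In this toy setup the fixed plan completes only the first step, while the iterative loop dismisses the pop-up and finishes all three.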

Memory: The "memory" part of the OS Agents framework stores task data, operation history, and environment state. Memory is divided into three types:

1. Internal Memory: stores operation history, screenshots, state data and dynamic environment information, supporting contextual understanding of task execution and trajectory optimization, for example parsing the screen layout from screenshots or generating decisions based on past actions.
2. External Memory: provides long-term knowledge support, for example by calling external tools (such as APIs) or knowledge bases to obtain domain background knowledge that assists decisions in complex tasks.
3. Specific Memory: focuses on the knowledge and user needs of specific tasks, for example storing subtask decomposition methods, user preferences or screen interface interaction functions, to provide highly targeted operation support.

In addition, we also summarize a variety of memory optimization strategies.
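One possible way to picture the three memory types is as three stores that are merged into a single context before each decision. The `AgentMemory` class, its field layout, and the three-action window are assumptions for this sketch, not a design from the survey.

```python
from dataclasses import dataclass, field


@dataclass
class AgentMemory:
    """Hypothetical container for the three memory types."""
    internal: list = field(default_factory=list)   # (screen, action) history of the current task
    external: dict = field(default_factory=dict)   # long-term knowledge, keyed by topic
    specific: dict = field(default_factory=dict)   # user preferences and task-specific know-how

    def record(self, screen: str, action: str) -> None:
        self.internal.append((screen, action))

    def build_context(self, topic: str) -> dict:
        """Assemble what the planner sees before choosing the next action."""
        return {
            # Only the last three actions are kept here, an arbitrary window.
            "recent_actions": [action for _, action in self.internal[-3:]],
            "background": self.external.get(topic, ""),
            "preferences": dict(self.specific),
        }


memory = AgentMemory(
    external={"wifi": "Wi-Fi settings live under Network"},
    specific={"language": "en"},
)
memory.record("home screen", "click(Settings)")
context = memory.build_context("wifi")
```

Keeping the stores separate makes it easy to clear the internal history between tasks while preserving long-term knowledge and user preferences.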

Action: We define the range of actions available to OS Agents as the action space, which covers the ways of interacting with the operating system. We subdivide it into three categories:

1. Input operations: input is the basis of interaction between OS Agents and the digital screen interface, and mainly includes mouse, touch and keyboard operations.
2. Navigation operations: enable OS Agents to explore and move to the target platform to obtain the information needed for task execution.
3. Extended operations: go beyond the limits of traditional screen interface interaction to give agents more flexible ways to execute tasks, such as code execution and API calls.

Recent papers on the OS Agents framework are summarized as follows:

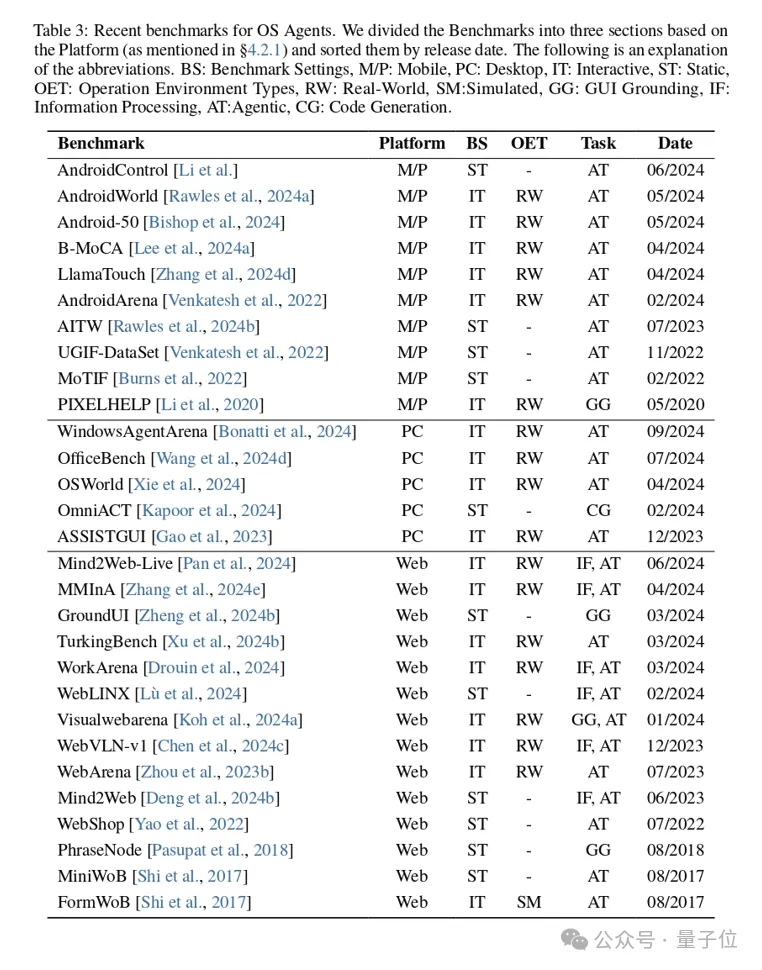

Scientific evaluation has played a key role in the development of OS Agents, helping developers measure the performance of agents in various scenarios.

The table below summarizes recent papers on OS Agents evaluation benchmarks:

The core of OS Agents evaluation can be summarized in two key questions: how the evaluation process should be conducted, and what needs to be evaluated.

The following focuses on these two questions, covering the evaluation principles and metrics for OS Agents.

To fully evaluate the performance of OS Agents, researchers have developed a variety of evaluation benchmarks covering different platforms, environment settings, and task categories.

These benchmarks provide scientific basis for measuring the adaptability and dynamic task execution ability of agents across platforms.

Evaluation Platform: The evaluation platform provides an integrated evaluation environment. Different platforms pose unique challenges and evaluation priorities; we mainly divide them into three categories: Mobile, Desktop and Web.

Benchmark Setting: This section divides the evaluation environments for OS Agents into two broad categories, static and interactive, and further subdivides interactive environments into simulated and real-world environments. Static environments suit offline evaluation of basic tasks, while interactive environments (especially real-world ones) are better for fully testing the actual capabilities of OS Agents in complex dynamic scenarios. Real-world environments emphasize generalization and dynamic adaptability, which are important directions for future evaluation.
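The contrast between static and interactive settings can be illustrated with two simple scoring functions. Both are assumptions for this sketch rather than metrics quoted from the survey: `step_accuracy` compares predicted actions with a recorded reference offline, while `task_success` only checks the state the environment ends up in.

```python
def step_accuracy(predicted, reference):
    """Static setting: fraction of reference steps matched by the predicted action."""
    if not reference:
        raise ValueError("reference trajectory must not be empty")
    matches = sum(p == r for p, r in zip(predicted, reference))
    return matches / len(reference)


def task_success(final_state, goal_state):
    """Interactive setting: did the environment reach the goal, whatever path was taken?"""
    return all(final_state.get(key) == value for key, value in goal_state.items())


offline_score = step_accuracy(
    ["click(Settings)", "click(Bluetooth)", "toggle(on)"],
    ["click(Settings)", "click(Wi-Fi)", "toggle(on)"],
)
reached_goal = task_success({"wifi": "on", "volume": 3}, {"wifi": "on"})
```

An agent can score poorly on step matching yet still succeed interactively if it reaches the goal by a different route, which is one reason interactive settings are considered a fuller test.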

Tasks: To fully assess the capabilities of OS Agents, current benchmarks integrate a variety of specialized tasks, ranging from system-level tasks (such as installing and uninstalling applications) to everyday application tasks (such as sending emails and shopping online). They can be divided into the following categories:

1. GUI Grounding: evaluates the ability of OS Agents to convert instructions into screen interface operations, that is, how they interact with specified operable elements in the operating system.
2. Information Processing: evaluates the ability of OS Agents to process and summarize information efficiently, especially to extract useful information from large amounts of data in dynamic and complex environments.
3. Agentic Tasks: evaluates the core capabilities of OS Agents, such as the ability to plan and execute complex tasks. This type of task gives the agent a goal or instruction and expects it to complete the task without explicit guidance.
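For GUI grounding, one common way to score a prediction is to check whether the predicted click point falls inside the target element's bounding box. The sketch below assumes pixel coordinates and boxes given as `(left, top, right, bottom)`; the function names are hypothetical and individual benchmarks may define the metric differently.

```python
def click_hits_element(click, bbox):
    """True if the (x, y) click lies inside the (left, top, right, bottom) box."""
    x, y = click
    left, top, right, bottom = bbox
    return left <= x <= right and top <= y <= bottom


def grounding_accuracy(predicted_clicks, target_boxes):
    """Fraction of instructions whose predicted click lands on the target element."""
    if not target_boxes:
        raise ValueError("at least one target is required")
    hits = sum(
        click_hits_element(click, bbox)
        for click, bbox in zip(predicted_clicks, target_boxes)
    )
    return hits / len(target_boxes)


accuracy = grounding_accuracy(
    [(10, 10), (50, 50)],
    [(0, 0, 20, 20), (0, 0, 20, 20)],
)
```

Here the first click lands inside its target and the second does not, so half of the predictions count as correct.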

This section discusses the main challenges and future directions of OS Agents, focusing on Safety & Privacy and Personalization & Self-Evolution.

Security and privacy demand close attention in OS Agents development.

OS Agents face a variety of attack methods, including indirect prompt injection attacks, malicious pop-up windows, and adversarial instruction generation, which can cause the system to perform incorrect operations or disclose sensitive information.

Although there are currently security frameworks for LLMs, defense mechanisms for OS Agents are still inadequate.

Current research mainly focuses on defenses designed for specific threats such as injection attacks and backdoor attacks; a comprehensive and extensible defense framework is urgently needed to improve the overall security and reliability of OS Agents.

To evaluate the robustness of OS Agents in different scenarios, several agent security benchmarks have also been introduced to comprehensively test and improve the security performance of the system, such as ST-WebAgentBench[17] and MobileSafetyBench[18].

Personalized OS Agents need to constantly adjust their behavior and functionality based on user preferences.

Multimodal large language models are gradually supporting the understanding of user history and dynamic adaptation to user needs, and OpenAI's Memory capabilities [19] have made some progress in this direction.

Allowing agents to continuously learn and optimize through user interaction and task execution improves personalization and performance.

In the future, the memory mechanism will be extended to more complex modalities, such as audio, video and sensor data, to provide more advanced predictive capabilities and decision support.

At the same time, it will support self-optimization driven by user data to enhance the user experience.

The development of multimodal large language models has created new opportunities for OS Agents, bringing the idea of implementing advanced AI assistants closer to reality.

This review aims to provide an overview of the fundamentals of OS Agents, including their key components and capabilities.

In addition, the article reviews multiple approaches to building OS Agents, with a special focus on domain-specific base models and agent frameworks.

In the section on evaluation protocols and benchmarks, the team analyzes the various evaluation metrics in detail and categorizes the benchmarks by environment, setting, and task.

Looking ahead, the team identified challenges that require ongoing research and attention, such as security and privacy, personalization and self-evolution. These areas are the focus of further research.

This review summarizes the current state of the field and points out potential directions for future work aimed at contributing to the continued development of OS Agents and enhancing their application value and practical significance in academia and industry.

The authors add that they welcome criticism and correction of any mistakes, and look forward to exchanges with peers.

Paper link: https://github.com/OS-Agent-Survey/OS-Agent-Survey

Project homepage: https://os-agent-survey.github.io/
