
News · 2025-01-02

A research team from ByteDance and POSTECH has released a groundbreaking result called "1.58-bit FLUX". It quantizes the weights of the state-of-the-art text-to-image (T2I) generation model FLUX.1-dev to 1.58 bits while maintaining its quality when generating 1024x1024 images. The work opens new avenues for deploying large T2I models on resource-constrained mobile devices, and it has been published on arXiv together with an open-source library (the code has not yet been uploaded).

In simple terms, the super-powerful AI drawing model FLUX (launched by Black Forest Labs, a team of original Stable Diffusion authors) has been "compressed". As we all know, current AI drawing models such as DALL-E 3, Stable Diffusion 3, and Midjourney show strong image generation capabilities and have great potential in real-world applications. However, these models have billions of parameters and high inference memory requirements, which makes them difficult to deploy on mobile devices such as phones.

It is like trying to shoot an 8K ultra-HD movie on your phone, only to have its memory run out instantly. Embarrassing, isn't it?

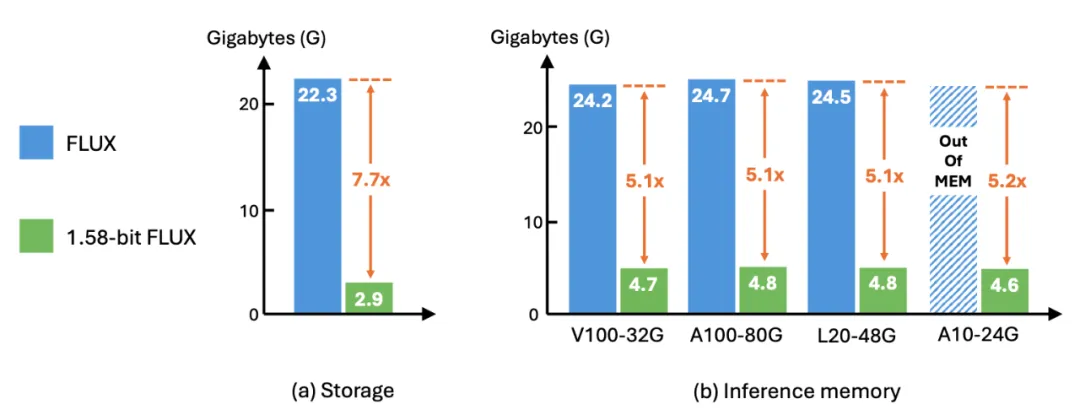

The FLUX model, which was already very strong, has now been "compressed" into 1.58-bit FLUX, and its storage size has shrunk by a factor of 7.7! This means that running these powerful AI drawing models on mobile phones may soon no longer be a dream!

The research team chose FLUX.1-dev, an open-source model with excellent performance, as the quantization target and explored extremely low-bit quantization schemes. By quantizing 99.5% of the vision Transformer parameters in the model to 1.58 bits, i.e. restricting the parameter values to {-1, 0, +1}, and by developing a custom kernel specifically for 1.58-bit operations, 1.58-bit FLUX achieves significant improvements in model size, inference memory, and inference speed.
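The article does not say exactly how each weight is mapped to {-1, 0, +1}. As an illustration only, the sketch below borrows the "absmean" rule from BitNet b1.58 (divide by the mean absolute weight, round, clip to [-1, 1]). This is an assumption, not necessarily the rule 1.58-bit FLUX uses, and the function names `absmean_ternary` and `dequantize` are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def absmean_ternary(weights: Matrix, eps: float = 1e-8) -> Tuple[List[List[int]], float]:
    """Quantize a weight matrix to codes in {-1, 0, +1} plus one float scale.

    Assumption: uses the absmean rule from BitNet b1.58, which the article
    does not confirm for 1.58-bit FLUX.
    """
    flat = [abs(w) for row in weights for w in row]
    scale = sum(flat) / len(flat)  # gamma = mean absolute weight
    codes = [
        # round to the nearest integer, then clip into [-1, 1]
        [max(-1, min(1, round(w / (scale + eps)))) for w in row]
        for row in weights
    ]
    return codes, scale


def dequantize(codes: List[List[int]], scale: float) -> Matrix:
    """Approximate the original weights as code * scale."""
    return [[c * scale for c in row] for row in codes]


W = [[0.4, -0.05, -0.9], [0.1, 0.7, -0.3]]
codes, scale = absmean_ternary(W)
print(codes, round(scale, 4))
```

Only the small integer codes and one float scale per matrix need to be kept; multiplying the codes by the scale gives an approximation of the original weights.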

In fact, "1.58-bit" can be understood as a super efficient way to "pack". You can think of the parameters of an AI model as small building blocks that might otherwise have many colors and many shapes. The "1.58-bit" is like a magic storage box that reduces these blocks to just three types: "-1," "0," and "+1."

As a result, blocks that used to need a lot of space to store can now be put into a small box, and these blocks can form almost the same pattern as the original! Does this look a lot like the compression software you normally use? Except, this is super compression for AI models!
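The name comes from information theory: a value that can take three states carries log2(3) ≈ 1.58 bits of information. The sketch below is a generic base-3 packing that stores five ternary values per byte (3^5 = 243 fits in 256, so about 1.6 bits per value). It only makes the arithmetic concrete; it is not necessarily the storage format 1.58-bit FLUX uses, and `pack_trits` / `unpack_trits` are hypothetical names.

```python
import math
from typing import List

BITS_PER_TRIT = math.log2(3)  # about 1.585, the "1.58" in the name


def pack_trits(values: List[int]) -> bytes:
    """Pack values from {-1, 0, +1} five at a time into bytes (base 3)."""
    out = bytearray()
    for start in range(0, len(values), 5):
        chunk = values[start:start + 5]
        byte = 0
        # First value in the chunk becomes the least significant base-3 digit
        for v in reversed(chunk):
            byte = byte * 3 + (v + 1)  # map -1, 0, +1 to digits 0, 1, 2
        out.append(byte)  # at most 242, so it always fits in one byte
    return bytes(out)


def unpack_trits(data: bytes, count: int) -> List[int]:
    """Inverse of pack_trits; `count` drops the padding in the last byte."""
    values = []
    for byte in data:
        for _ in range(5):
            values.append(byte % 3 - 1)  # digit back to -1, 0, +1
            byte //= 3
    return values[:count]


vals = [1, 0, -1, -1, 1, 0, 0, 1]
packed = pack_trits(vals)
print(len(packed), unpack_trits(packed, len(vals)) == vals)
```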

Core technology and innovation

1. Data-independent 1.58-bit quantization: Unlike previous quantization methods that require image data or mixed-precision schemes, the quantization process of 1.58-bit FLUX does not rely on image data at all; it is completed solely through self-supervision from the FLUX.1-dev model itself. This greatly simplifies the quantization process and makes it more general.

2. Custom 1.58-bit operation kernel: To further improve inference efficiency, the research team developed a kernel optimized for 1.58-bit operations. The kernel significantly reduces the memory footprint of inference and increases inference speed.
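The article gives no details of the self-supervised procedure in point 1. The toy sketch below only illustrates the general idea of data-free calibration: the full-precision layer acts as the teacher on random Gaussian inputs, and one scale per output row of the ternary layer is fitted by least squares to match the teacher's outputs, so no image data is involved. Everything here (`fit_row_scales`, random inputs, per-row scales) is a hypothetical stand-in, not the team's actual method.

```python
import random
from typing import List

Matrix = List[List[float]]


def matvec(m: Matrix, x: List[float]) -> List[float]:
    """Plain matrix-vector product."""
    return [sum(a * b for a, b in zip(row, x)) for row in m]


def fit_row_scales(teacher: Matrix, codes: List[List[int]],
                   num_samples: int = 256, seed: int = 0) -> List[float]:
    """Fit one scale per output row so that codes * scale mimics the teacher.

    Hypothetical illustration: targets come from the full-precision layer
    applied to random inputs, so no image data is needed.
    """
    rng = random.Random(seed)
    dim = len(teacher[0])
    num = [0.0] * len(teacher)
    den = [0.0] * len(teacher)
    for _ in range(num_samples):
        x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        y = matvec(teacher, x)  # teacher output is the supervision signal
        z = matvec(codes, x)    # unscaled ternary output
        for i in range(len(teacher)):
            num[i] += y[i] * z[i]
            den[i] += z[i] * z[i]
    # Closed-form least squares per row: argmin_s sum (y - s * z)^2
    return [n / d if d > 0 else 0.0 for n, d in zip(num, den)]


teacher = [[0.5, -0.5, 0.0], [0.0, 0.3, -0.3]]
codes = [[1, -1, 0], [0, 1, -1]]
print(fit_row_scales(teacher, codes))
```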
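Point 2 becomes easier to see once the weights are restricted to {-1, 0, +1}: every product in a matrix-vector multiply turns into "add the input", "subtract the input", or "skip". The pure-Python sketch below (hypothetical `ternary_matvec`) shows only this arithmetic; it says nothing about the team's actual GPU kernel, whose memory and speed gains depend on low-level details the article does not describe.

```python
from typing import List


def ternary_matvec(codes: List[List[int]], scale: float, x: List[float]) -> List[float]:
    """Compute scale * (codes @ x) with no multiplications in the inner loop."""
    out = []
    for row in codes:
        acc = 0.0
        for c, xj in zip(row, x):
            if c == 1:
                acc += xj   # weight +1: add the input
            elif c == -1:
                acc -= xj   # weight -1: subtract the input
            # weight 0: the term is skipped entirely
        out.append(acc * scale)  # a single multiply per output row
    return out


codes = [[1, 0, -1], [0, 1, -1]]
print(ternary_matvec(codes, 0.5, [2.0, 3.0, 4.0]))
```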

Experimental results and analysis

The experimental results show that the 1.58-bit FLUX achieves the following significant improvements:

7.7x reduction in model storage: Model storage space is significantly reduced because the weights are quantized and stored as 2-bit signed integers.

5.1x reduction in inference memory: Inference memory usage is significantly reduced across all GPU types, especially on resource-constrained devices such as the A10-24G

Inference speed: Inference speed increases by up to 13.2%, especially on lower-performance GPUs such as the L20 and A10.
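A back-of-the-envelope check of the 7.7x storage figure, reading the storage claim as 2-bit signed integers. Assumptions: the baseline stores weights in 16 bits (e.g. BF16), the quantized 99.5% of parameters take 2 bits each, and the remaining 0.5% stay at 16 bits. Under these assumptions the ratio lands near 7.7, which is consistent with the reported number, but this is not necessarily how the paper computes it. `pack_2bit`, `unpack_2bit`, and `storage_ratio` are hypothetical helpers.

```python
from typing import List


def pack_2bit(codes: List[int]) -> bytes:
    """Store ternary codes as 2-bit two's-complement integers, four per byte."""
    out = bytearray()
    for start in range(0, len(codes), 4):
        byte = 0
        for k, c in enumerate(codes[start:start + 4]):
            byte |= (c & 0b11) << (2 * k)  # -1 -> 0b11, 0 -> 0b00, +1 -> 0b01
        out.append(byte)
    return bytes(out)


def unpack_2bit(data: bytes, count: int) -> List[int]:
    """Inverse of pack_2bit; `count` drops the padding in the last byte."""
    values = []
    for byte in data:
        for k in range(4):
            v = (byte >> (2 * k)) & 0b11
            values.append(v - 4 if v >= 2 else v)  # sign-extend the 2-bit field
    return values[:count]


def storage_ratio(frac_quantized: float, full_bits: int = 16, low_bits: int = 2) -> float:
    """Size of an all-16-bit model divided by the size of the mixed model."""
    avg_bits = frac_quantized * low_bits + (1 - frac_quantized) * full_bits
    return full_bits / avg_bits


print(round(storage_ratio(0.995), 2))
```

With `frac_quantized=0.995`, the average cost is 0.995 × 2 + 0.005 × 16 = 2.07 bits per weight, and 16 / 2.07 ≈ 7.73. If every weight were quantized, the ratio would be exactly 8.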

But does the image quality hold up? This is probably the biggest question on everyone's mind. After all, if the images get worse, what is the point of "slimming down"?

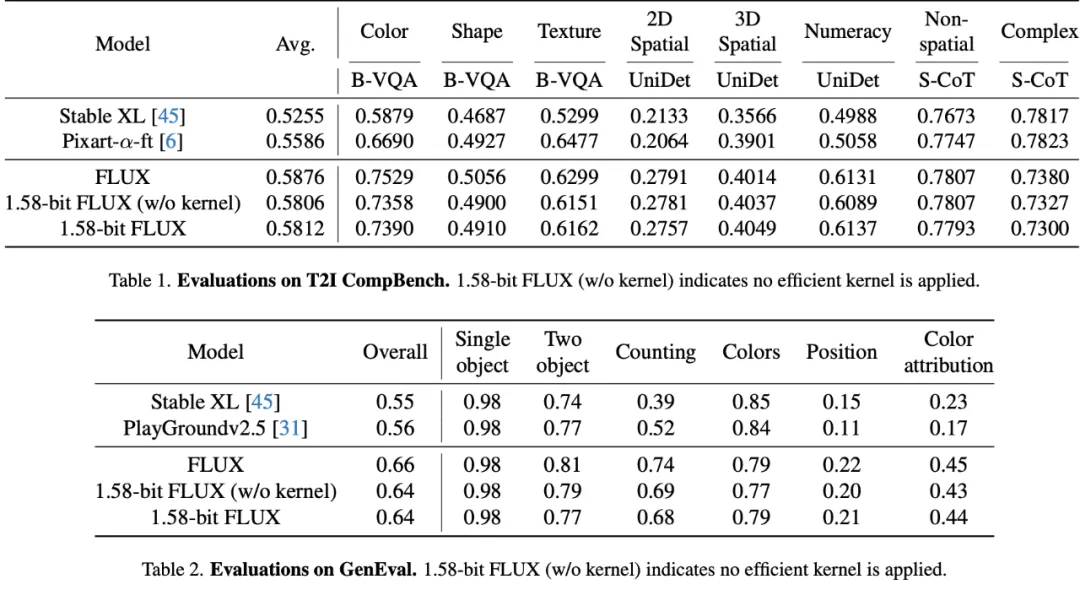

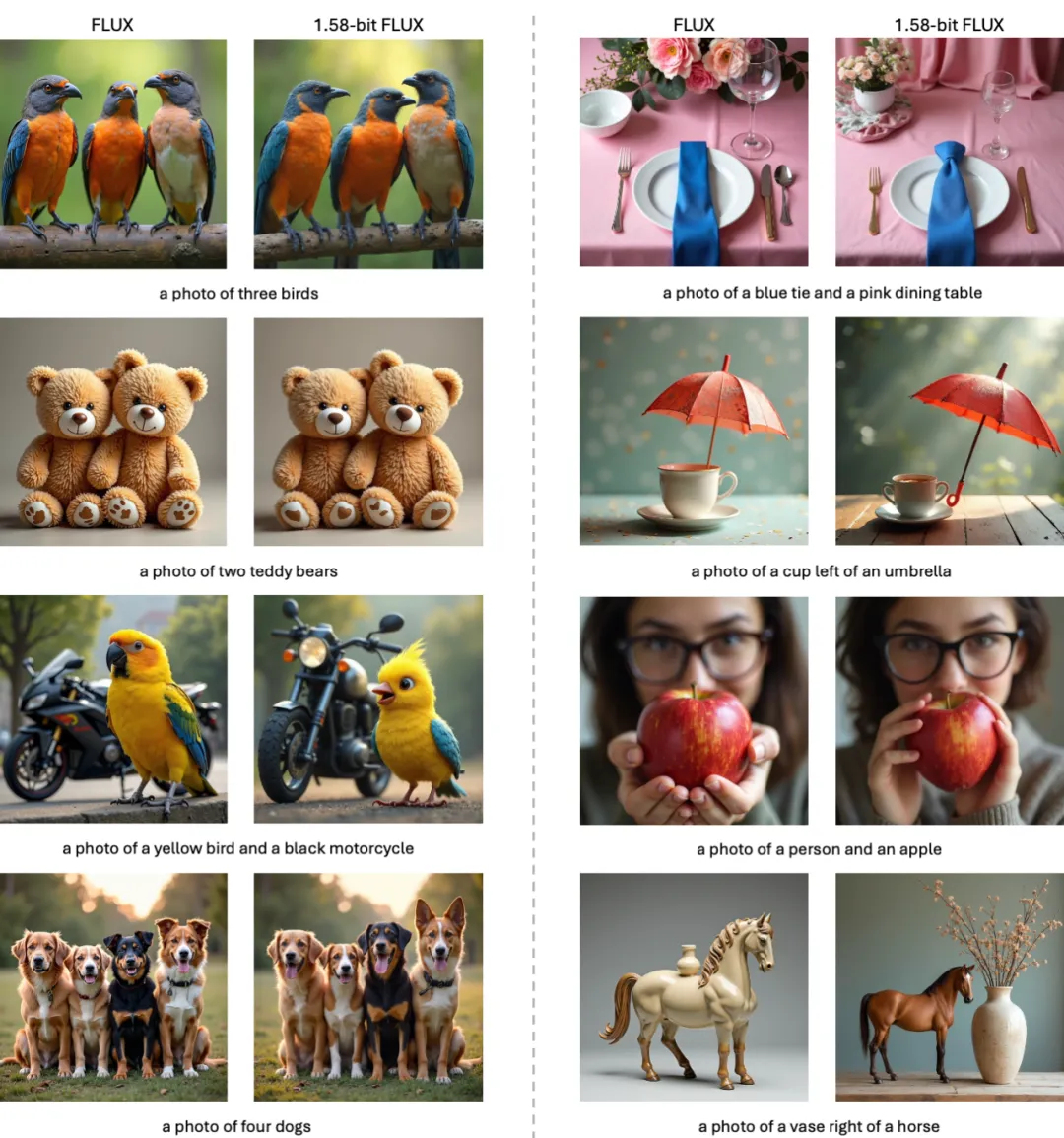

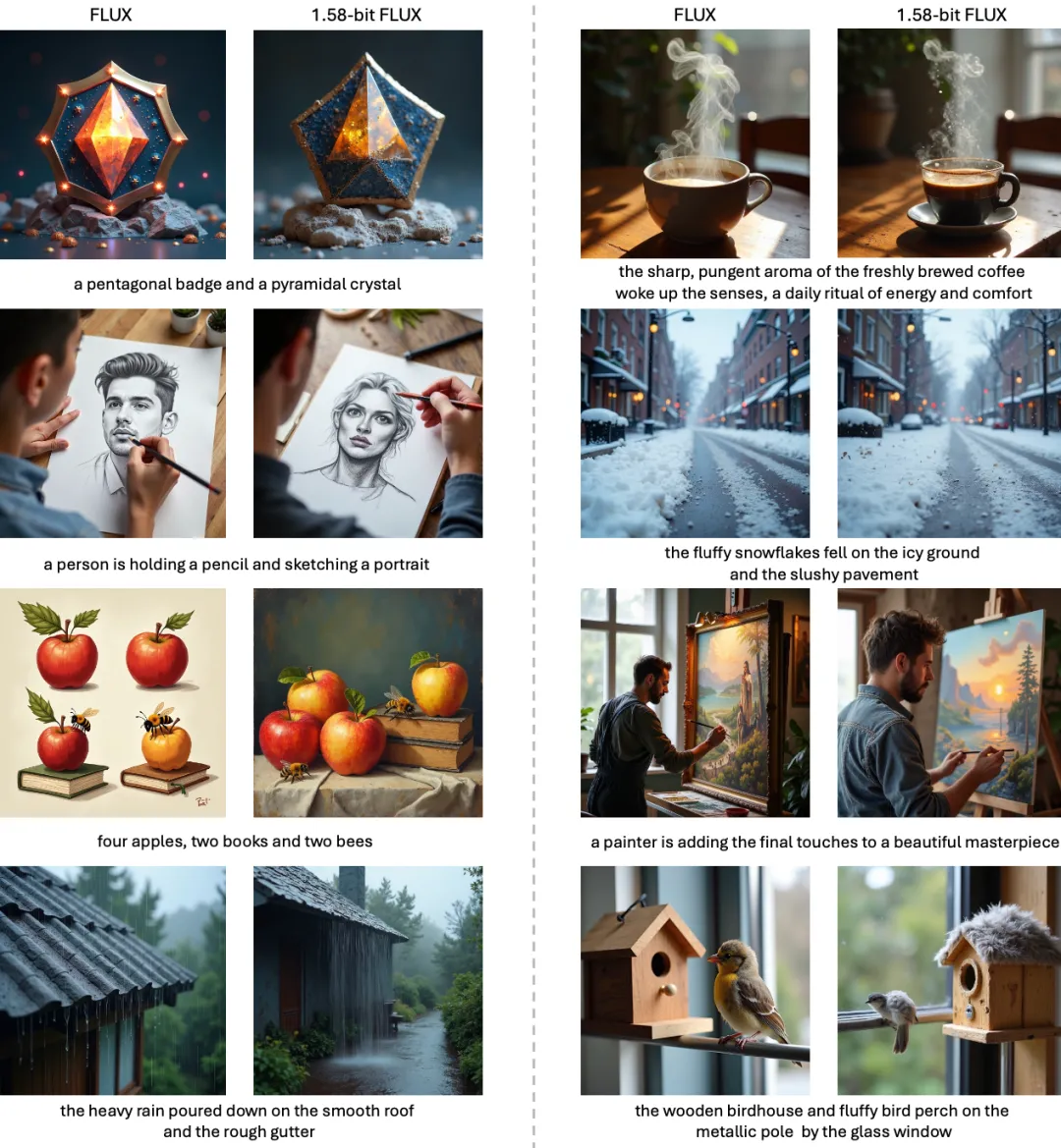

Rest assured, the research team has already thought of this! On GenEval and T2I CompBench, two authoritative benchmarks, they rigorously compared the model before and after "compression". The results show that the image quality of 1.58-bit FLUX is almost the same as that of the original!

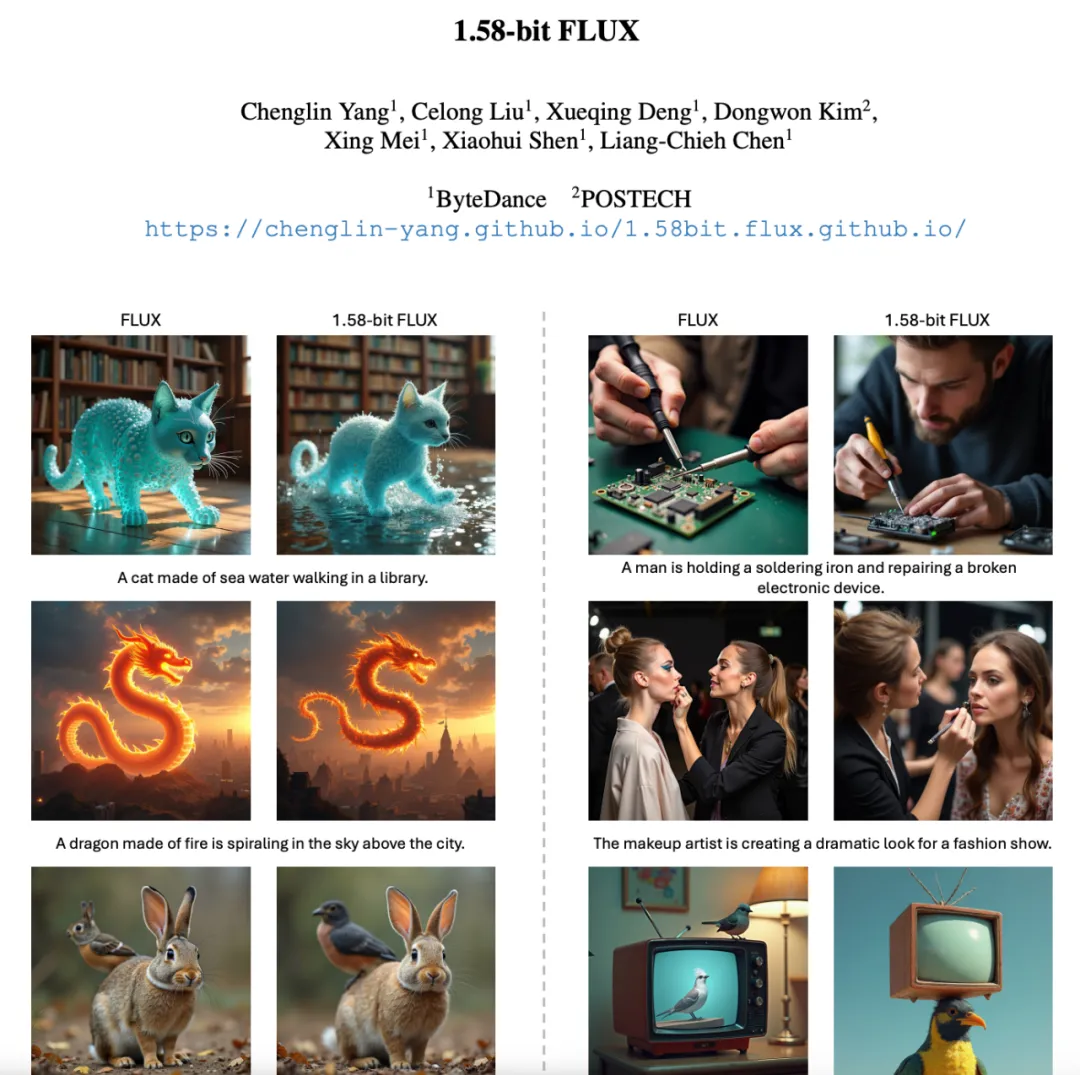

The paper also presents a large number of comparison images for prompts such as "a sea cat walking in the library" and "a fire dragon circling over the city". 1.58-bit FLUX handles these imaginative scenes with ease, rendering them full of detail, and the results are amazing!

The biggest significance of this technology is that it shows it is possible to run large AI drawing models on mobile phones! Previously, we could only enjoy AI drawing on a computer, or even on a professional server. Now, with the advent of 1.58-bit FLUX, we may one day need only a mobile phone to create AI art anytime, anywhere!
