
#News ·2025-01-02

Applying Transformers to computer vision (CV) tasks has been nothing new over the past year or two.

Since Google proposed the Vision Transformer (ViT) in October 2020, various vision Transformers have begun to play a role in image synthesis, point cloud processing, vision-language modeling and other fields.

Since then, implementing the Vision Transformer in PyTorch has become a popular research topic. There are many excellent projects on GitHub, and today I'm going to introduce you to one of them.

The project is called "vit-pytorch". It is a Vision Transformer implementation that demonstrates a simple way to achieve SOTA results for visual classification in PyTorch using only a single transformer encoder.

The project currently has 7.5k stars and was created by Phil Wang, who has 147 repositories on GitHub.

The address of the project: https://github.com/lucidrains/vit-pytorch

The project author also provides an animated GIF illustrating the model.

Let's first look at the installation, usage, parameters, and distillation steps of vit-pytorch.

The first step is to install the package from PyPI: `pip install vit-pytorch`.

The second step is to instantiate the model and run it on an image.

The third step is to set the required parameters, which include the following:

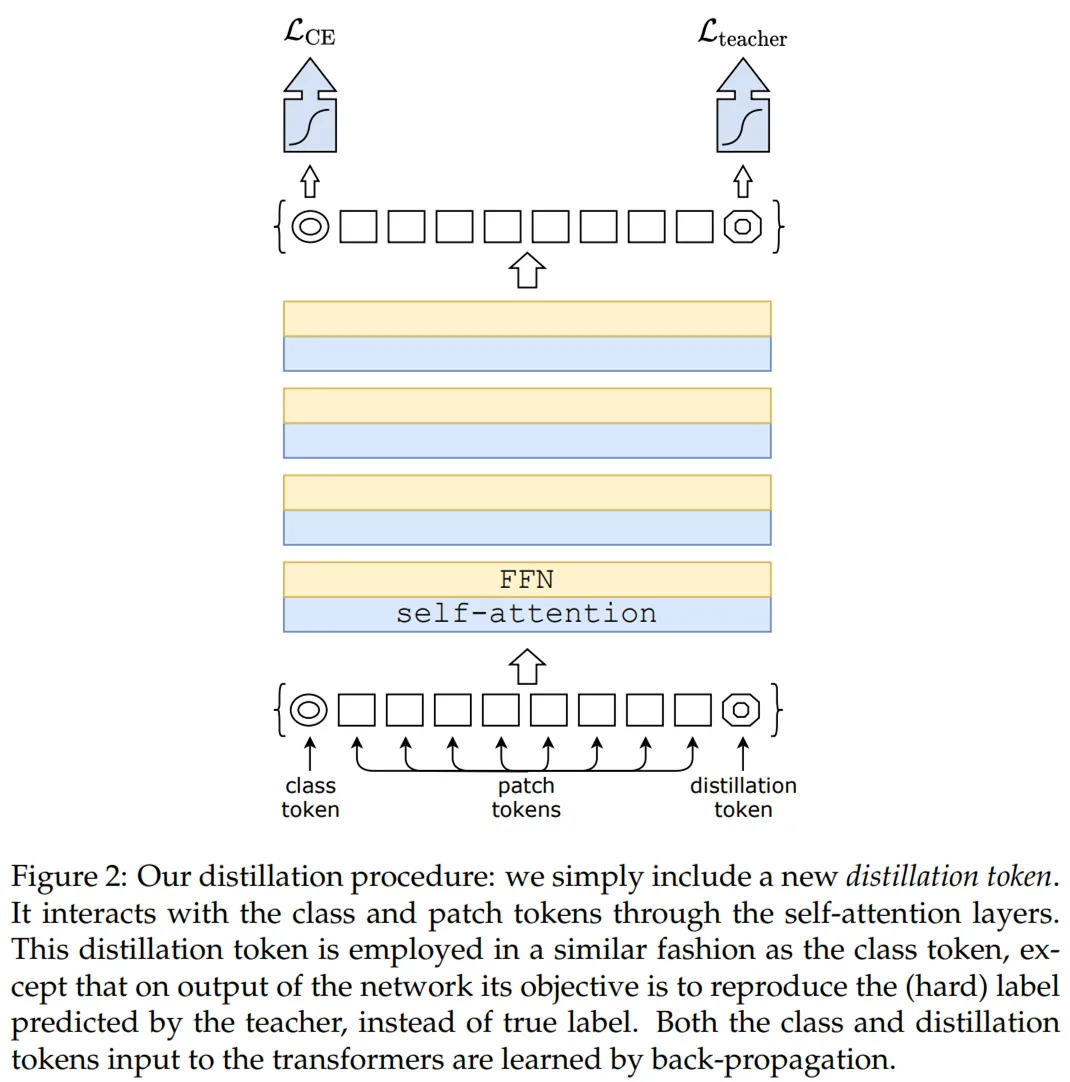
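To make the relationship between two of these parameters concrete: as the project's README describes it, `image_size` must be divisible by `patch_size`, and the number of patches is `(image_size // patch_size) ** 2`. The small helper below is a hypothetical illustration of that arithmetic (the name `patch_grid` is my own and is not part of vit-pytorch).

```python
from typing import Tuple


def patch_grid(image_size: int, patch_size: int, channels: int = 3) -> Tuple[int, int]:
    """Return (number of patches, flattened length of one patch).

    Hypothetical helper for illustration only; not part of vit-pytorch.
    """
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    num_patches = (image_size // patch_size) ** 2  # patches per image
    patch_dim = channels * patch_size ** 2         # values in one flattened patch
    return num_patches, patch_dim


num_patches, patch_dim = patch_grid(image_size=256, patch_size=32)
print(num_patches, patch_dim)  # 64 3072
```

With a 256x256 RGB image and 32x32 patches, the transformer therefore sees a sequence of 64 patch tokens, each projected from 3072 raw pixel values.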

Finally, distillation. The project follows the procedure from the paper by Facebook AI and Sorbonne University, "Training data-efficient image transformers & distillation through attention."

Address: https://arxiv.org/pdf/2012.12877.pdf

The code for distilling from ResNet50 (or any teacher network) into a vision transformer is as follows:

In addition to Vision Transformer, the project also provides PyTorch implementations of other ViT variants such as Deep ViT, CaiT, Tokens-to-Token ViT, PiT, etc.

Readers interested in the PyTorch implementation of the ViT model should refer to the original project.
