
#News ·2025-01-02

Will the singularity arrive in 2025?

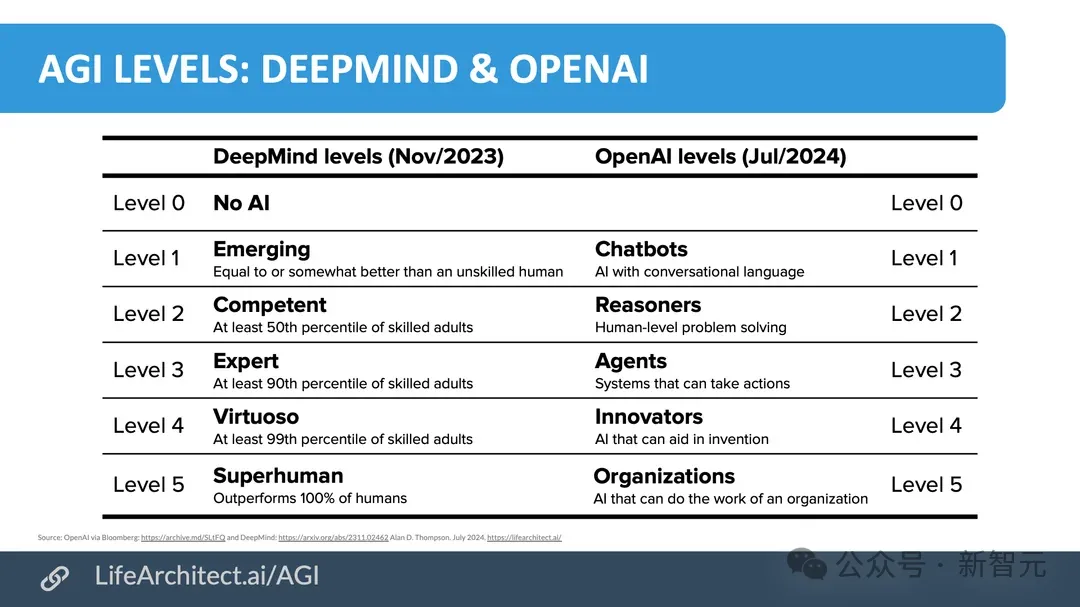

In late 2023 and mid-2024, DeepMind and OpenAI, respectively, published frameworks defining levels of AGI. This year, we have watched with our own eyes as AI steadily climbs toward the AGI summit.

2024 was clearly a whirlwind year, and there is reason to believe 2025 will bring even more progress.

Sooner or later, advances in science and mathematics will touch and change our lives in very real ways: slowly at first, and then suddenly.

Just yesterday, Google's Logan Kilpatrick predicted that a straight shot to ASI looks more likely with every passing month, adding that this is what Ilya Sutskever saw.

OpenAI CEO Sam Altman hinted that in 18 months, by the summer of 2026, we will witness a miracle.

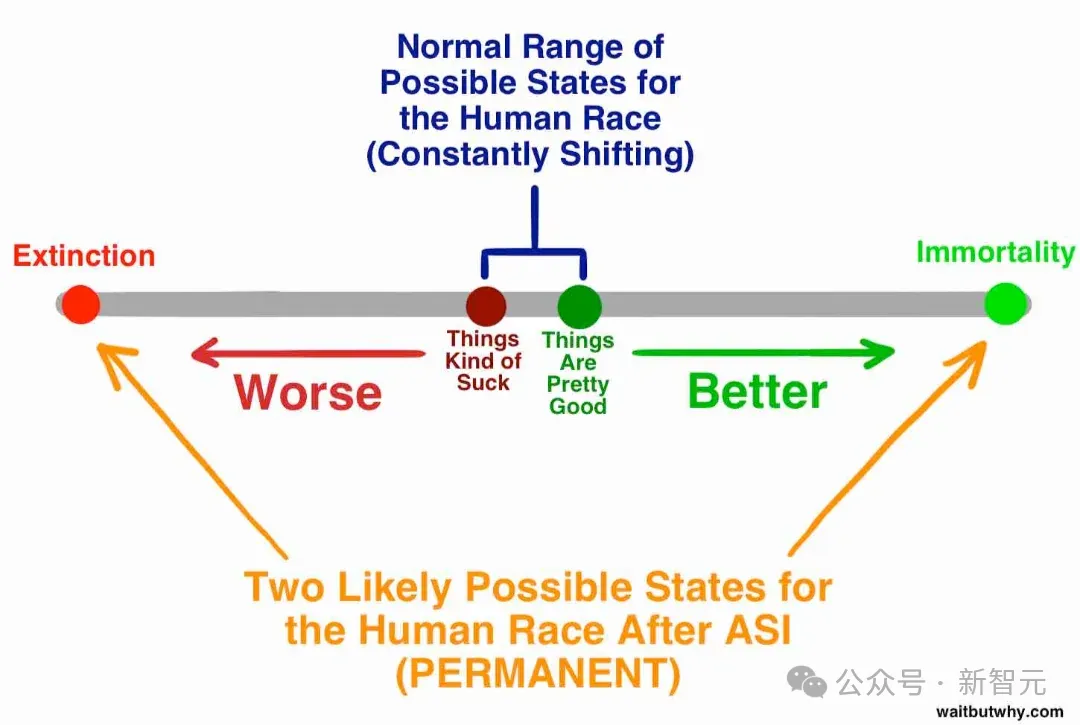

Once ASI is born, humanity will face only two possibilities: "living forever" or "going extinct."

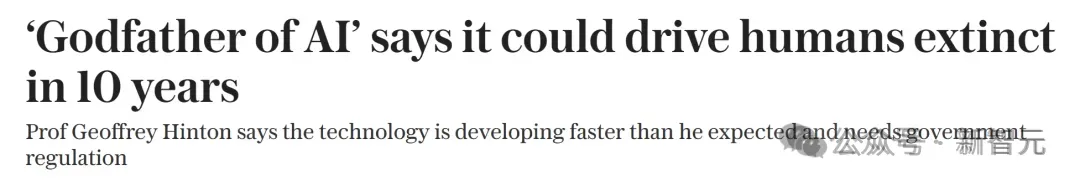

On this point, Geoffrey Hinton, the "godfather of AI," has warned that within 10 years AI could lead to the extinction of the human race!

This fear, it seems, is not unfounded.

According to a US report, about 47% of US jobs could be automated in the next 20 years.

And each additional robot costs the local economy about 5.6 jobs.

These shocking figures reflect a workplace revolution that is quietly under way.

From blue-collar to white-collar, no workers are immune to the impact of AI.

In 2023, the strikes by the Writers Guild of America and the Screen Actors Guild made it clear to everyone that AI poses a real threat to "knowledge workers."

In reality, the challenges posed by generative AI and other advanced technologies have already spread across many industries.

So which jobs are at risk of being automated, or even replaced, by AI?

The more closely a job's tasks overlap with what AI can potentially do, the more likely that worker is to be affected by digital technologies, including AI.

There is no denying that AI's rapid iteration can boost productivity, but it also carries the risk of replacing human jobs.

In Illinois, for example, one study estimates that 14 to 25 percent of the workforce is at high risk of job automation, meaning that up to 1.5 million workers could be affected.

In addition, about 237,000 to 417,000 workers are at very high risk. In the construction industry, about 49 percent of job tasks could be automated.

In response, one netizen commented: "This question essentially boils down to whether we can build general-purpose robots.

"If we have general-purpose robots, every job will be affected. Without them, only about half of all jobs will be affected, because AI can only replace computer-based work."

Others countered that this underestimates the current state of robotics, replying with a long list of reports about robots entering the workforce.

Either way, within 20 years we will have many occupations that do not exist today.

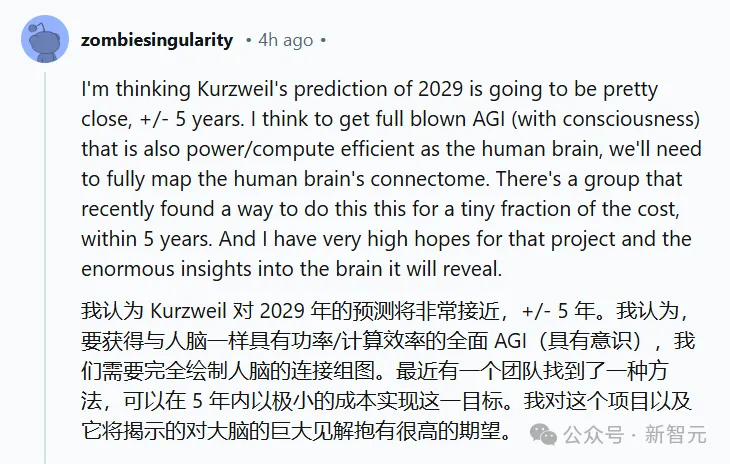

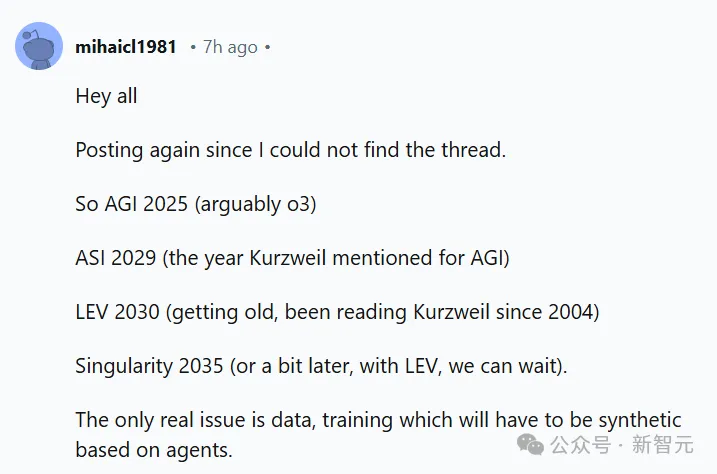

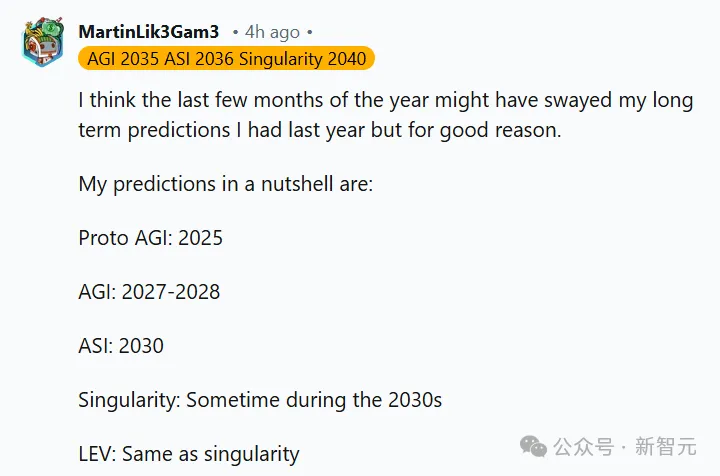

Reddit's singularity community (r/singularity) holds an annual singularity prediction event.

First up was OpenAI's o1, which observed that discussion about AI reached an all-time high in 2024.

Generative AI has sparked discussions about efficiency, creativity, ethics, and the nature of human intelligence. The journey to AGI and even ASI is still complicated, but every year, we are making real progress.

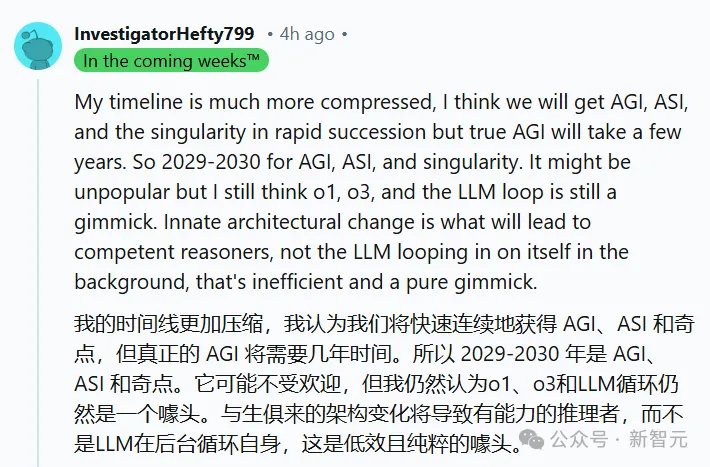

Community members largely agree that Ray Kurzweil's prediction of 2029 is accurate.

In their view, AGI and ASI will arrive in 2029-2030, but the AI models of that era will need revolutionary architectural changes rather than following today's LLM route.

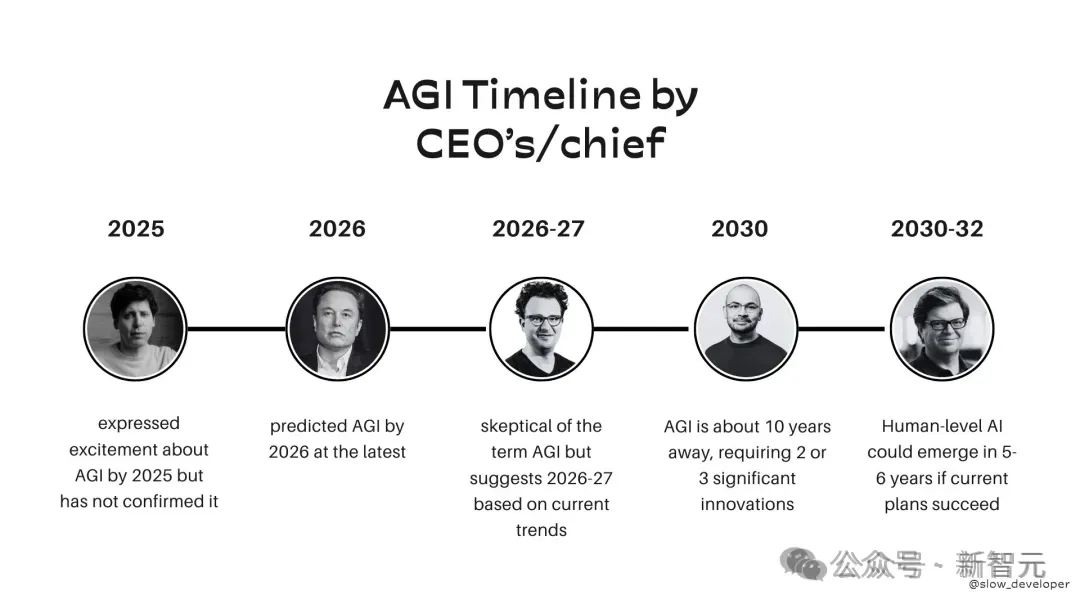

By contrast, forecasts from AI industry leaders are generally more optimistic.

Still, nobody doubts that we are indeed getting closer to AGI.

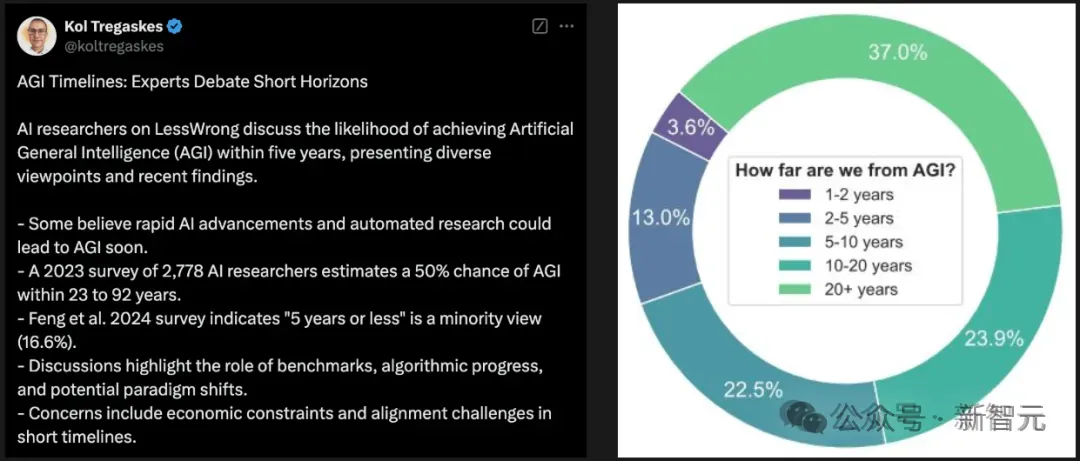

In 2023, a survey of 2,778 AI researchers put a 50% chance on AGI arriving somewhere between 23 and 92 years from now. Just one year later, however, the latest survey shows that 16.6% of respondents believe AGI will be achieved within five years.

How should PhD students who have not yet graduated and entered the workforce respond to the challenges AI brings?

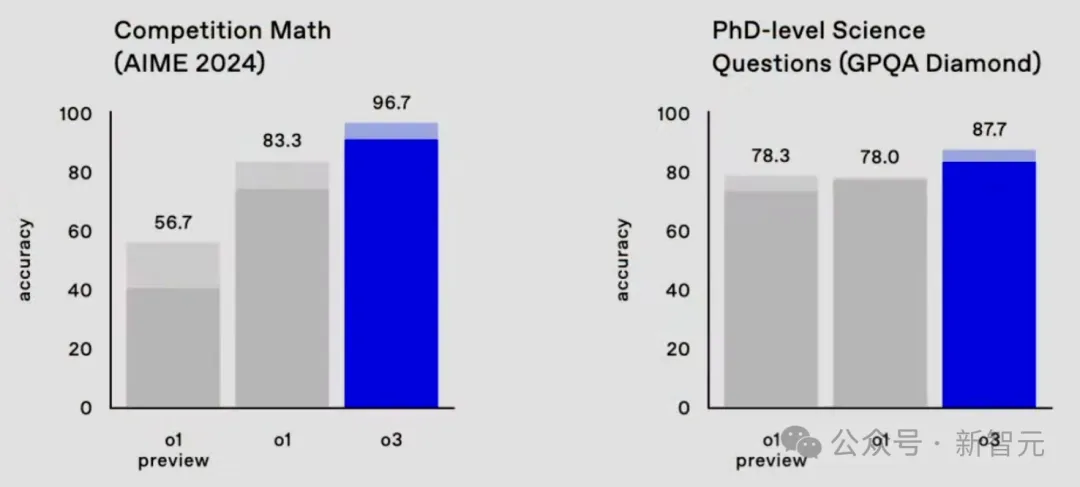

After all, with the arrival of o3, AI has reached a truly PhD level, especially in mathematics and programming. Many netizens say that in the future, everyone will carry a "super brain" in their pocket.

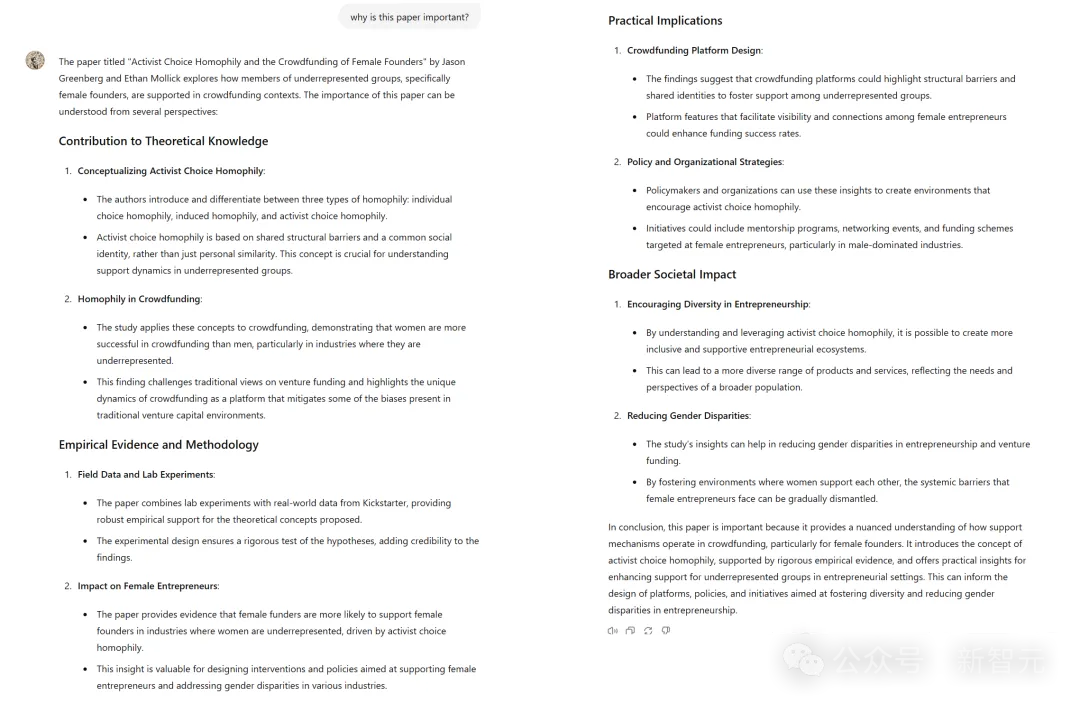

On this question, Professor Ethan Mollick of the Wharton School, in a long essay titled "Four Singularities for Research," lays out the dangers and opportunities that come with the rise of AI and offers a "survival guide" for PhD students.

Note: the full guide appears at the end of this article.

"As a business school professor, I am well aware that research shows that out of 1,016 occupations, business school professors are among the 25 occupations with the highest overlap with AI tasks," he said.

But overlap does not necessarily mean replacement; rather, it means disruption and change.

Long before ChatGPT came to prominence, academia was already stuck in a worrying quagmire: while the number of academic papers kept growing, the pace of substantive innovation was quietly slowing.

In fact, one study found that breakthroughs in everything from agriculture to cancer research are slowing, and that the rate of innovation drops by half every 13 years.
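To make the "half every 13 years" figure concrete, here is a short worked equation. It assumes, as a simplification, that the decline is a smooth exponential; the symbols $R_0$ (the rate today) and $R(t)$ (the rate after $t$ years) are illustrative and not taken from the study itself.

```latex
R(t) = R_0 \cdot 2^{-t/13}, \quad t \text{ in years}

\text{annual decline} = 1 - \frac{R(t+1)}{R(t)} = 1 - 2^{-1/13} \approx 1 - 0.948 = 0.052 \;(\approx 5.2\%)

R(26) = R_0 \cdot 2^{-26/13} = \tfrac{1}{4} R_0
```

Under this reading, a decline of only about 5% a year compounds into a quarter of today's rate within a single 26-year research career.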

The reasons are complex, but one thing is clear: this crisis would have happened anyway, and AI is not its source.

In fact, AI may become part of the solution, but not before it creates new problems.

Mollick said he prefers to call the changes AI is bringing to scientific research a "singularity" rather than a "crisis."

The singularity here is a "narrow singularity," referring to a point in the future when AI has changed a field or industry so deeply that we cannot fully imagine what the world will look like after the singularity.

And in academia, we're facing at least four of these singularity moments.

Each singularity has the potential to fundamentally reshape the nature of academic research, either working a miracle that restarts the engine of innovation or deepening the existing crisis.

The signs are there, and now we just have to decide what to do after the singularity.

Looking back, 2024 was a thrilling year, a year in which we witnessed history.

Leading figures in the field have taken stock of the biggest AI events of 2024.

Andrew Ng summarized the hottest stories in AI in 2024: the rise of AI agents, the collapse of LLM token prices, the explosion of generative video, and the sudden rise of small models.

Ng also stressed that the gap between those at the forefront of AI and those who have never used ChatGPT even once has widened.

Agents

Agents keep proving their worth.

This year, a growing number of agentic tools began generating code inside integrated development environments, such as Devin, OpenHands, Replit Agent, Vercel's v0, and Bolt.

AI's reasoning ability has also made rapid progress.

At the end of 2024, OpenAI introduced the o1 model and o1 pro mode, which use an agentic loop to work through prompts step by step. DeepSeek-R1 and Google's Gemini 2.0 Flash Thinking Mode take a similar agentic approach to reasoning.

In the final days of 2024, OpenAI announced o3 and o3-mini, which further extend o1's agentic reasoning capabilities with stunning results.

The rise of chain-of-thought (CoT) prompting, Reflexion, test-time compute, and other techniques has made agents ever more capable. The age of agents is coming!

Price collapse

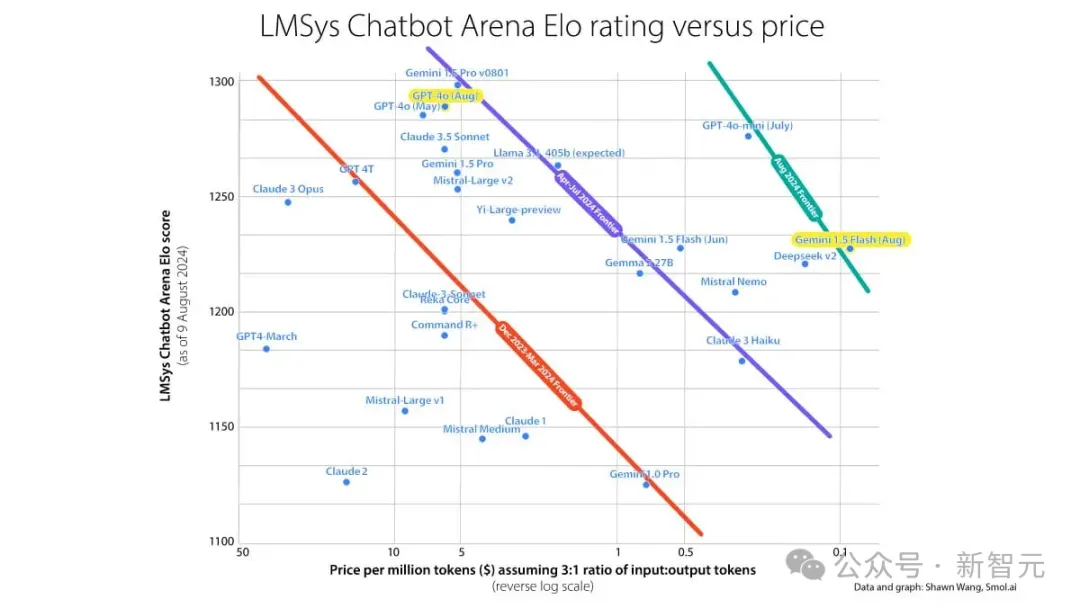

We have also witnessed a collapse in LLM token prices, as AI providers waged a fierce price war this year.

From March 2023 to November 2024, OpenAI cut the per-token price of cloud access to its models by 90%.

When GPT-4 Turbo debuted in late 2023, it cost $10.00 per million input tokens and $30.00 per million output tokens.

But in the ensuing price war, model prices plummeted.

Google's Gemini 1.5 Pro, for example, has been cut to $1.25 per million input tokens and $5.00 per million output tokens, while Amazon's Nova Pro goes as low as $0.80 / $3.20.
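To see what these list prices mean in practice, here is a minimal Python sketch that prices a hypothetical workload of one million input tokens and one million output tokens. The prices are the ones quoted above; the workload size and the function names are illustrative assumptions. The three models differ in capability, and real bills can vary with provider-specific pricing tiers, so this compares list prices only.

```python
# List prices quoted in the article, in USD per million tokens: (input, output).
PRICES_PER_MILLION = {
    "GPT-4 Turbo (late 2023)": (10.00, 30.00),
    "Gemini 1.5 Pro": (1.25, 5.00),
    "Amazon Nova Pro": (0.80, 3.20),
}

BASELINE = "GPT-4 Turbo (late 2023)"


def workload_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD for a workload, billing input and output tokens separately."""
    in_price, out_price = PRICES_PER_MILLION[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


def percent_cheaper(model: str, baseline: str, input_tokens: int, output_tokens: int) -> float:
    """How much cheaper `model` is than `baseline` for the same workload, in percent."""
    base = workload_cost(baseline, input_tokens, output_tokens)
    return 100 * (1 - workload_cost(model, input_tokens, output_tokens) / base)


if __name__ == "__main__":
    # Hypothetical workload: 1M input tokens and 1M output tokens.
    for name in PRICES_PER_MILLION:
        cost = workload_cost(name, 1_000_000, 1_000_000)
        saving = percent_cheaper(name, BASELINE, 1_000_000, 1_000_000)
        print(f"{name}: ${cost:.2f} ({saving:.1f}% cheaper than GPT-4 Turbo)")
```

Under these assumptions, the same workload costs $40.00 with GPT-4 Turbo, $6.25 with Gemini 1.5 Pro, and $4.00 with Nova Pro, roughly 84% and 90% less respectively.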

AI video takes off

AI video made remarkable progress this year.

Sora became a global sensation, followed by Runway Gen-3, Adobe Firefly Video, Meta Movie Gen, Kling AI, PixVerse, PixelDance, and more.

AI video still has plenty of room to improve. Most models generate only a small number of frames at a time, which makes it hard to keep physics and geometry consistent and to maintain consistent characters and scenes over time.

Small is beautiful

In 2024, many LLMs became small enough to run on smartphones.

Top AI companies also poured resources into small models, such as Microsoft's Phi-3 (smallest version: 3.8 billion parameters), Google's Gemma 2 (smallest version: 2 billion parameters), and Hugging Face's SmolLM (smallest version: 135 million parameters).

These small models greatly expand our options for cost, speed, and deployment.

Jim Fan, a senior research scientist at Nvidia, summed up 2024 in six themes.

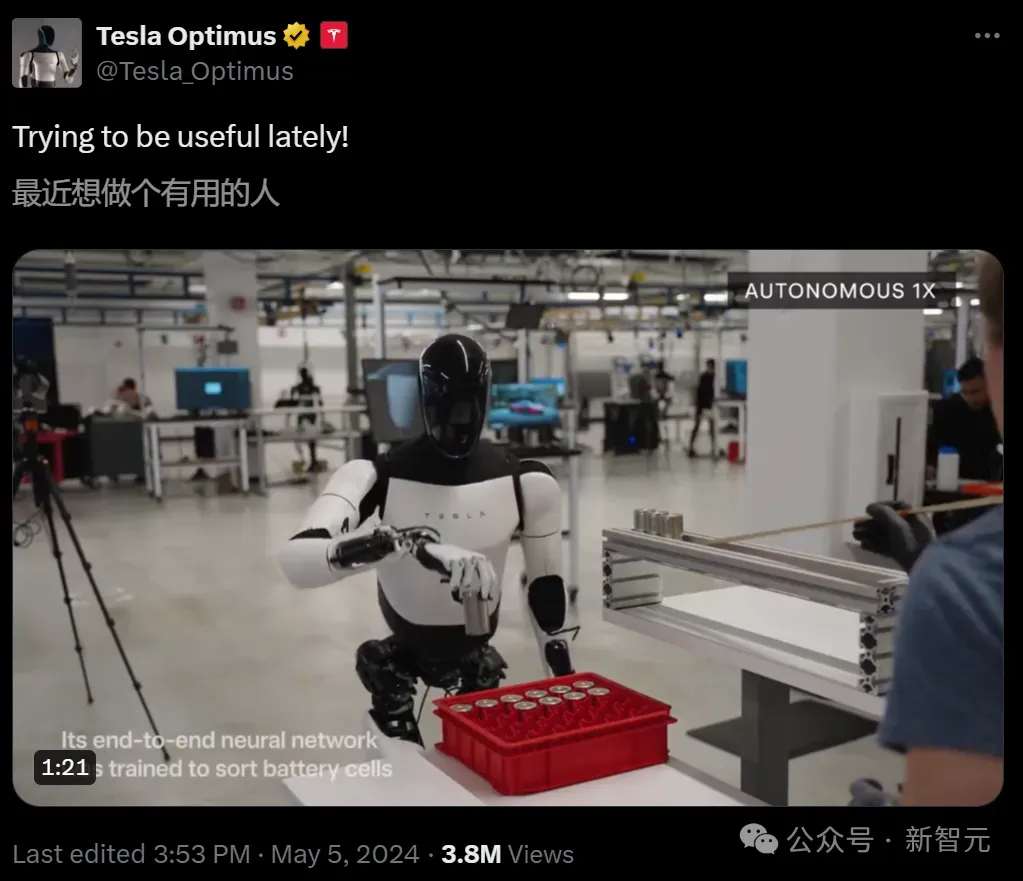

First, there's the rise of high-end humanoid robots, including the Tesla Optimus, 1X Neo, Boston Dynamics, GR-1, Westworld Clone, and more.

Next comes progress in embodied intelligence: Tesla FSD v12, Nvidia GR00T, HOVER, DrEureka, Stanford's OpenVLA, and others have given robots far more capable brains.

Nvidia's Blackwell architecture, the Jetson Orin Nano Super, and Google's Willow quantum chip have taken computing hardware into new territory.

Video generation and world modeling have also taken a big step forward, from video models such as Sora and Veo to action-driven world models such as GameNGen, Oasis, Genie 2, and Li Fei-Fei's World Labs.

On the LLM front, Claude 3.5 Sonnet, Gemini 1.5 Pro, o1, and o3 kept surprising us. The true AGI test is to complete this sequence: 4o -> o1 -> o3 -> (?).

GPT-4o's real-time voice mode, NotebookLM, and similar products offered the best reimaginings yet of the LLM experience.

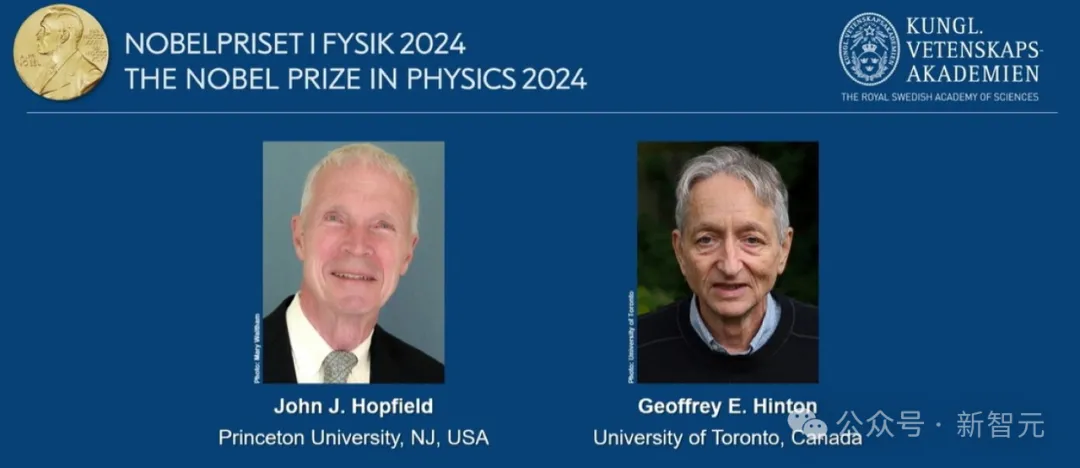

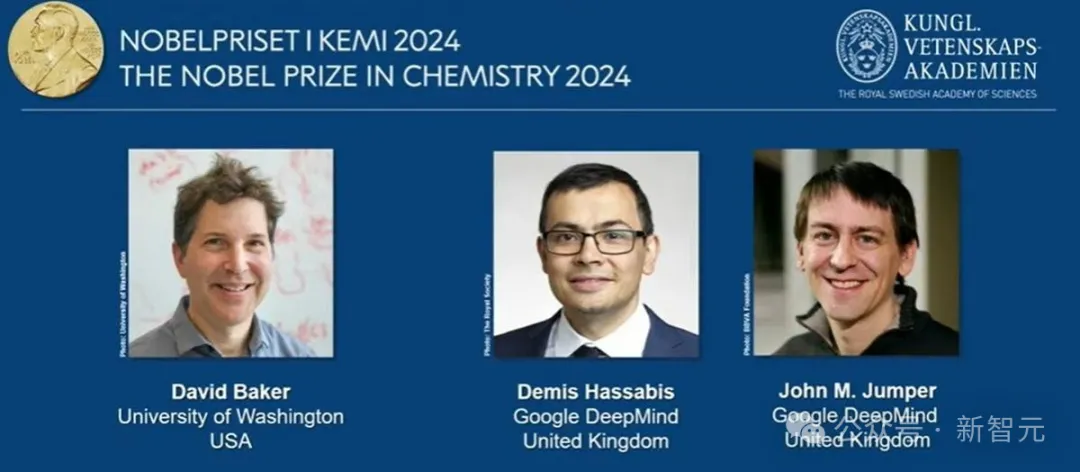

Finally, in AI4Science, this year's Nobel Prizes in Physics and Chemistry came as a bit of a shock to everyone.

In a speech in December 2024, UN Secretary-General Antonio Guterres said that the fate of humanity cannot be left to algorithms.

Technology will never move as slowly as it does today.

Today's AI models are becoming more powerful, versatile, and accessible: they not only combine language, images, sound, and video, but also automate decision-making.

AI isn't just reshaping our world; it's revolutionizing it. Tasks that used to require years of human expertise can now be done in the blink of an eye.

But at the same time, the risks are just as great.

This rapid growth is outpacing humanity's capacity to govern it, raising fundamental questions about accountability, equality, safety, and security. It also raises questions about the role humans should play in decision-making.

AI tools can identify food insecurity and predict population displacement due to extreme events and climate change.

But AI is also entering the battlefield in more worrying ways. Most critically, AI is undermining the fundamental principle of human control over the use of force.

From intelligence-based assessments to target selection, algorithms are already being used to make life-and-death decisions about human beings.

The integration of AI with other technologies amplifies these risks exponentially: in the future, quantum AI systems could break through the strongest defenses overnight, rewriting the rules of digital security.

Let us be clear: the fate of humanity must not be left to the "black box" of algorithms.

Since humans created AI, its progress must also be guided by humans.

In addition to weapons systems, we must also deal with other risks posed by AI.

AI can create highly realistic content that spreads instantly across online platforms, manipulating public opinion, threatening the integrity of information, and making truth indistinguishable from outright lies. Deepfakes can cause diplomatic crises, incite unrest, and undermine the foundations of society.

The environmental impact of AI also poses significant security risks. The massive consumption of energy and water resources by AI data centers, coupled with the scramble for key minerals, is creating intense competition for resources and geopolitical tensions.

What humanity does next is crucial, and the choices we make now will determine our future. There is no time to delay in establishing effective international governance mechanisms, because every delay could increase the risks facing all of humanity.

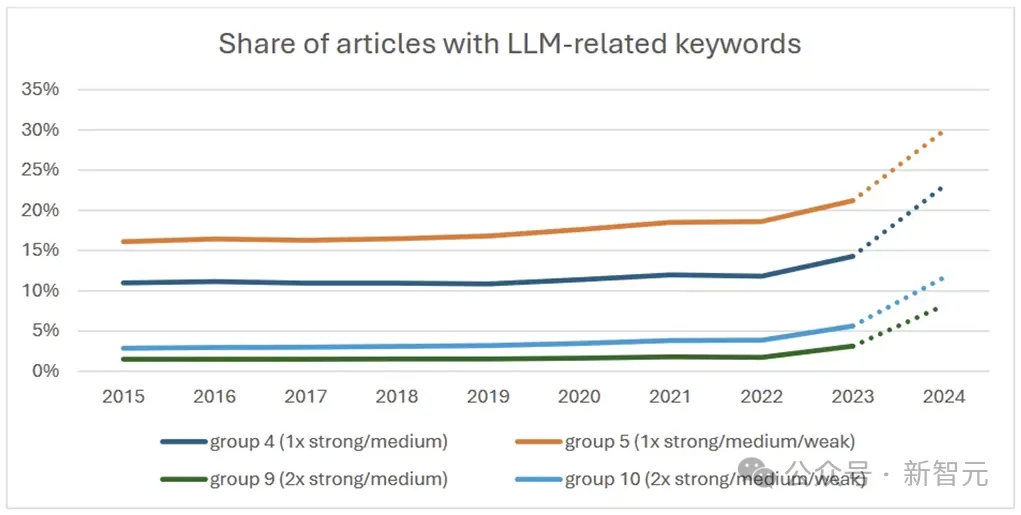

In many academic fields, progress is painfully slow.

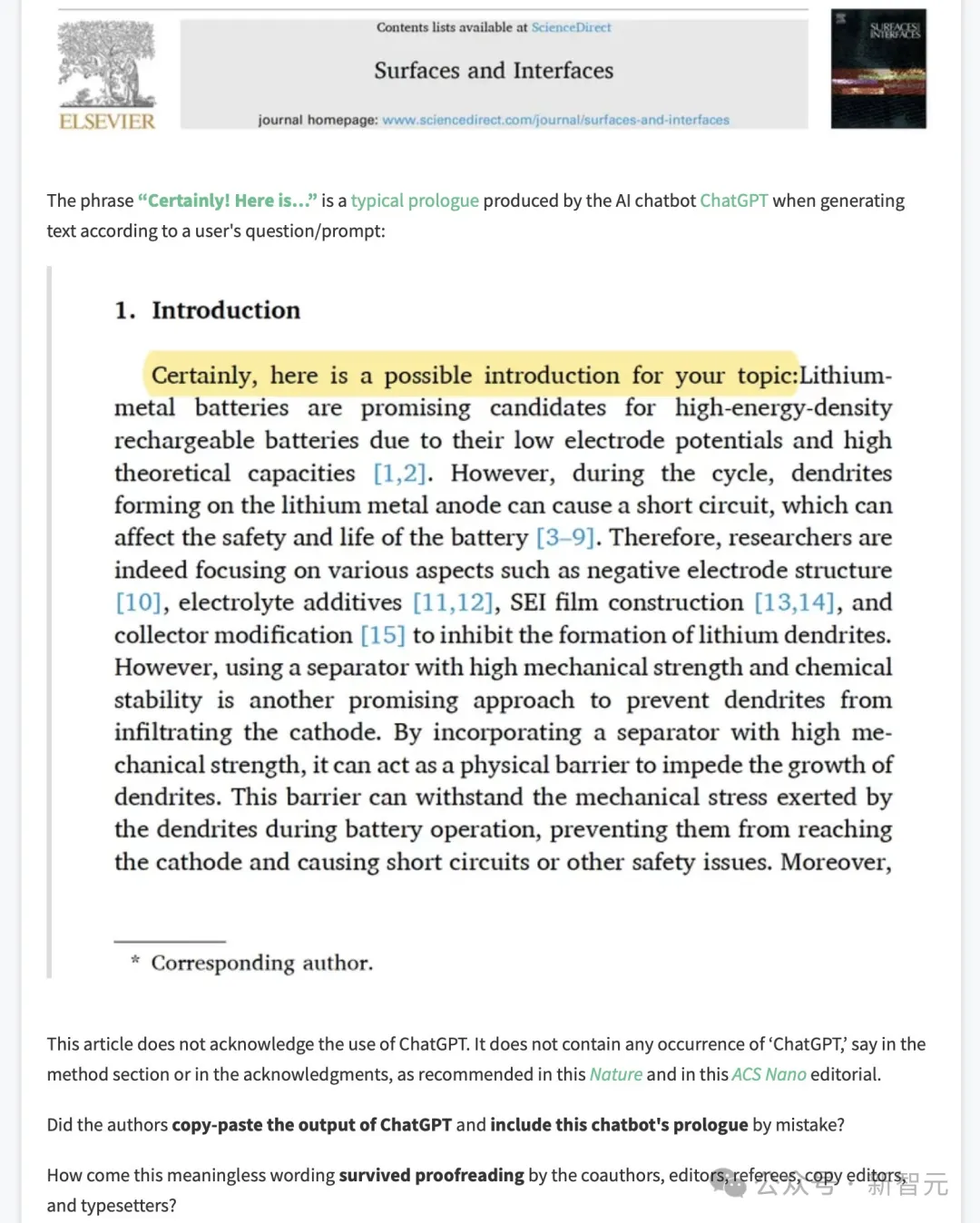

Professor Mollick says some of his papers took nearly a decade to go from first draft to journal publication. Top journals are built for this pace and are therefore ill-prepared for the flood of papers that AI is triggering.

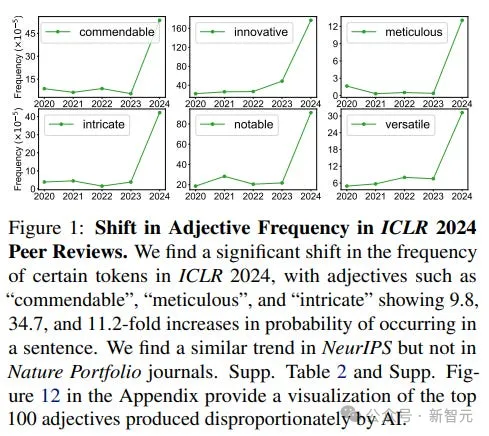

That flood is coming because many researchers are now using AI to write papers, speeding up key parts of the research process and disrupting the criteria reviewers use to evaluate work.

Some of these AI-assisted approaches are spectacularly bad and unethical, such as the proliferation of papers obviously written by LLMs, or papers containing shocking AI-generated images.

But when used correctly, AI writing can actually be very helpful. After all, many scientists are excellent in their field of expertise but are not necessarily good writers or communicators.

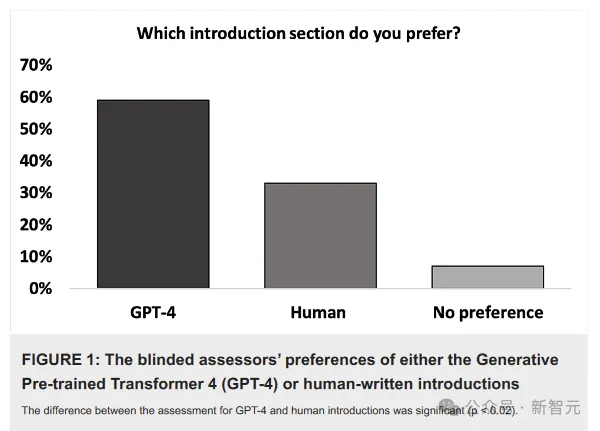

GPT-4-class models are actually quite good at scientific writing; in at least one small study, they produced introduction sections on par with those written by humans.

If AI can help with writing, scientists can focus on what they do best, and research can speed up as AI takes over time-consuming tasks.

Of course, there is no way to know whether researchers are properly checking what AI writes, so peer review matters more than ever, even as it strains under the flood of new papers and is itself being automated by AI.

At one major AI conference, about 17% of peer review content was generated by AI.

More surprisingly, one study found that about 82.4% of scientists considered AI feedback more valuable than feedback from at least some human reviewers. AI does not outperform humans in every respect, but it has shown a distinctive advantage in finding errors.

However, the existing scientific publishing system was not designed for a process in which AI writes, AI reviews, and AI summarizes. If this trend goes unchecked, the whole system may become unsustainable.

Of course, AI's capabilities extend far beyond writing aid.

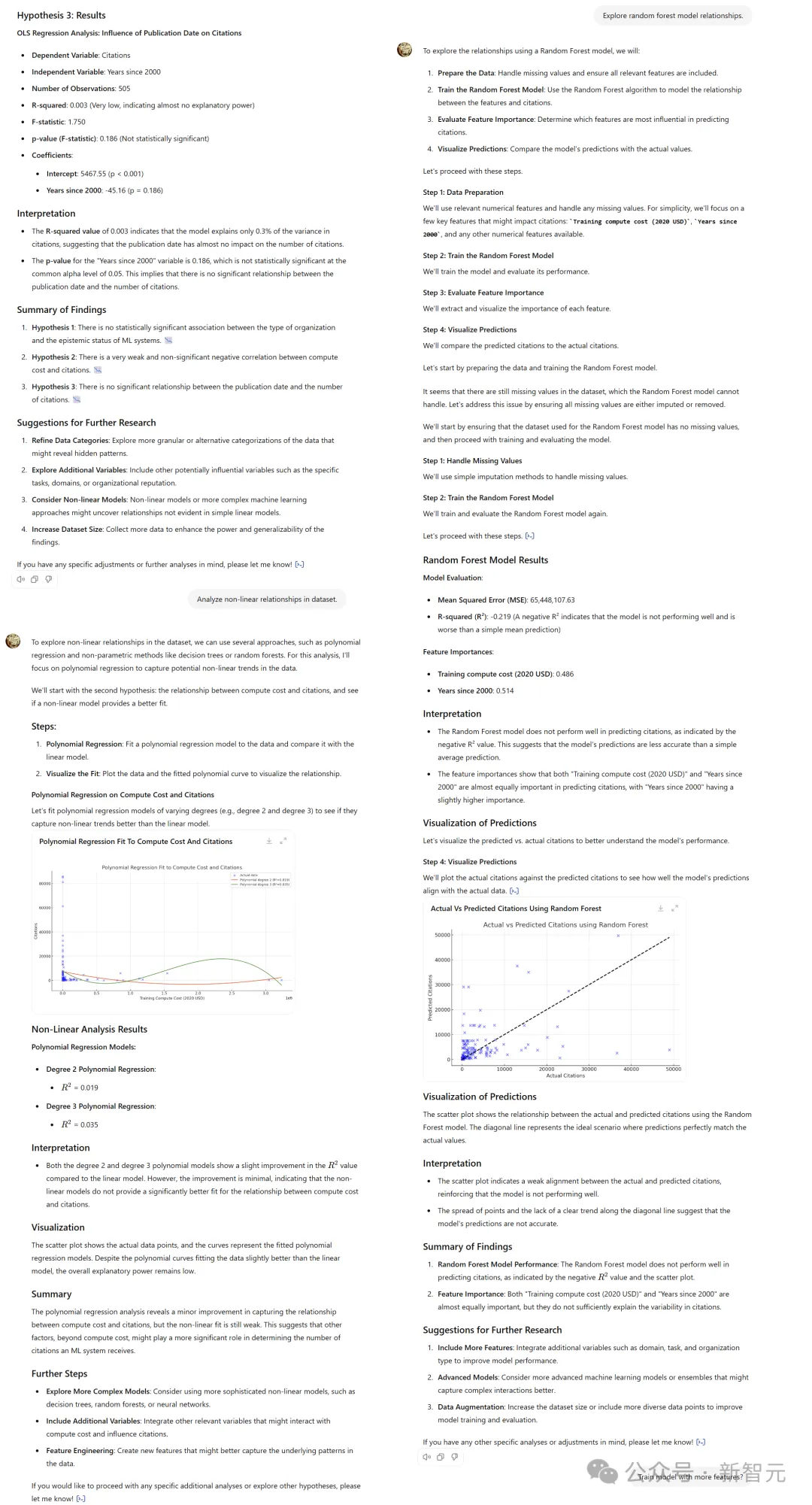

To demonstrate its potential, Mollick built a "custom GPT" that can explore any data set, generate hypotheses and test them in increasingly sophisticated ways.

Experiments have shown that AI is already able to autonomously explore datasets, generate hypotheses, and perform complex tests.

While this capability is impressive, it also raises a new concern: AI can be used for p-hacking, running test after test on the data until the desired result turns up.

Such behavior is a serious threat to academic integrity.
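Why does trying test after test amount to manipulation? A short calculation makes it concrete. The Python sketch below assumes independent tests, each checking an effect that does not really exist (a true null hypothesis), at the conventional 5% significance level; these assumptions and the function names are illustrative and are not taken from Mollick's experiment.

```python
import random


def chance_of_false_positive(alpha: float, num_tests: int) -> float:
    """Probability that at least one of `num_tests` independent tests of a true
    null hypothesis comes out 'significant' at level `alpha` (assumes independence)."""
    return 1 - (1 - alpha) ** num_tests


def simulate(alpha: float, num_tests: int, trials: int = 20_000, seed: int = 0) -> float:
    """Monte Carlo check: under a true null, p-values are uniform on [0, 1],
    so each test is a false positive with probability `alpha`."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        # One "study": keep testing until something looks significant.
        if any(rng.random() < alpha for _ in range(num_tests)):
            hits += 1
    return hits / trials


if __name__ == "__main__":
    for k in (1, 5, 20):
        exact = chance_of_false_positive(0.05, k)
        approx = simulate(0.05, k)
        print(f"{k:>2} tests: exact {exact:.3f}, simulated {approx:.3f}")
```

With 20 independent tries, the chance of at least one spurious "significant" result is about 64%, which is why undisclosed repeated testing, whether done by a person or by an AI, undermines published findings.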

So, how do we get through this singularity? We need to rethink the nature of scientific publishing and draw some conclusions:

At the same time, LLMs are also changing the way research is actually conducted.

First, working with AI is more like working with a human research assistant than using a programming language. This means that more researchers can use AI to expand the scope of their research without having to learn specialized skills.

Furthermore, LLMs can take on tasks that would be difficult for human research assistants.

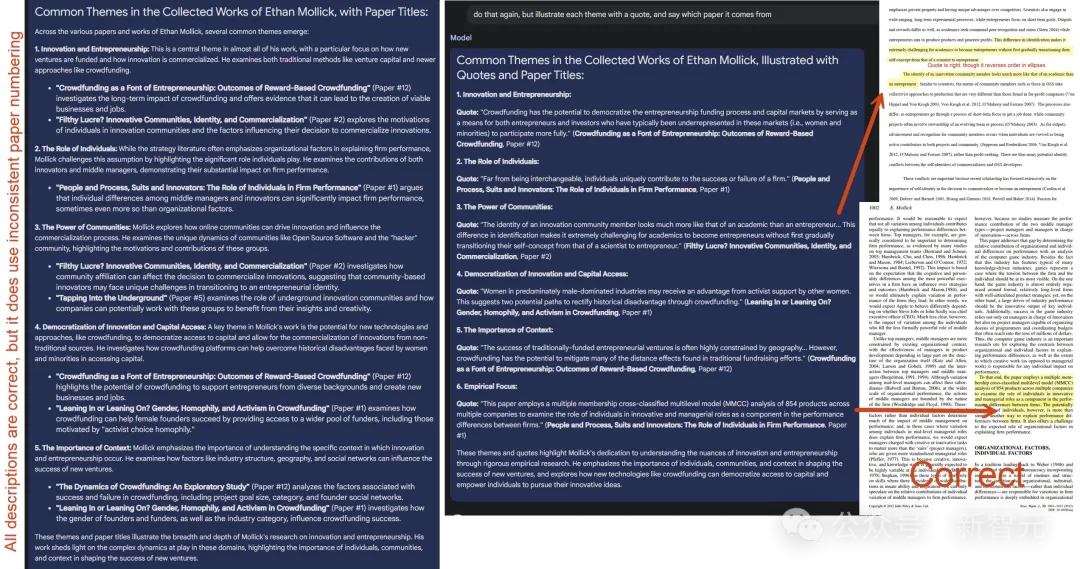

For example, Mollick fed Gemini Pro 20 papers and books written before 2022, more than 1,000 pages of PDF files in total. Thanks to its powerful long context window, the model quickly extracted direct quotes and identified all the themes in the literature, making only a few minor errors.

In addition, because AI can simulate humans with high accuracy, researchers can more easily reproduce famous experiments, such as Milgram's obedience-to-authority study or personality tests across 50 countries, opening up new possibilities for social science research.

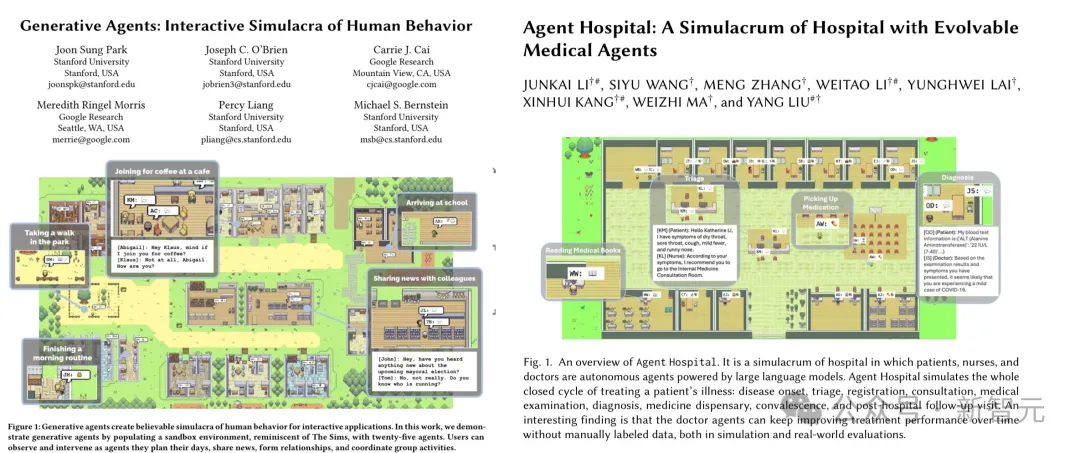

Even more interesting, in these experiments AI agents given personalities and goals can interact with and learn from each other in a simulated environment.

For example, simulated doctors in a simulated hospital interact with simulated patients and learn to diagnose diseases better.

Perhaps the most interesting (and disruptive) way to use LLMs in science, however, is to have AI systems explore new ground on their own.

Some early work has shown that LLMs can generate new hypotheses in the social sciences, devise plans to test those hypotheses, and then actually test them through simulations.

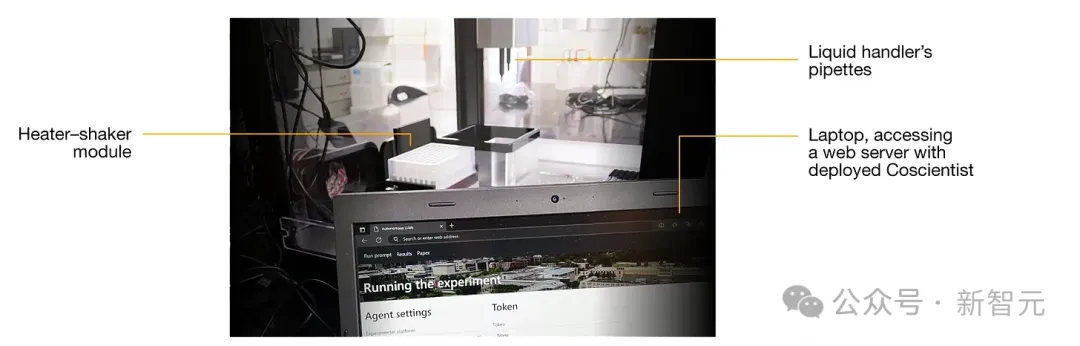

An even more ambitious experiment gave GPT-4 access to chemical databases, had it write software to control lab equipment, and let it independently plan and carry out real chemical experiments.

Prototype of an autonomous chemical research system

In the near future, AI may actually do science and change the nature of research in ways we can't predict.

To guide this change, we need to answer several questions:

There is often a deep disconnect between the research community and the public.

However, Mollick, who has spent 20 years in academia, believes that a lot of academic research has value to the outside world that even many scholars do not realize.

AI can help bridge the gap between academia and the real world.

For example, when you feed a paper to the aforementioned "custom GPT," it can not only explain the meaning and summarize the key results, but also tell you why the academic paper might be relevant to you as well.

Equally interesting is AI's potential to help researchers interpret one another's work, identify opportunities for interdisciplinary collaboration, and cope with the wave of research unleashed by the first two singularities.

We know that AI can carry out large-scale literature reviews and find unexpected connections between studies, while also spotting errors and gaps waiting to be filled.

By connecting researchers to ongoing research and discussions, AI could go a step further and become a powerful tool for restarting the engine of innovation.

But we need to rethink the boundaries between disciplines and between academia and the public in order to find a better world on the other side of this singularity.

To this day, we still don't know why LLMs are so good at simulating the human mind. Even the researchers who built them don't understand their full capabilities.

There is widespread debate over whether LLMs engage in "original thinking" or simply repeat what they learned in training.

But current research makes clear that LLMs are set to have a major real-world impact, surpassing human performance in a growing number of practical tasks.

If AI is indeed a "general-purpose technology," one of the innovations that can impact large swaths of culture, the economy, and society, then we need to mobilize researchers in many more fields to fully understand its impact, shape its development, mitigate its risks, and help everyone benefit.

It's an exciting time, but if scholars don't seize this historic moment, others will.

We have a unique opportunity to meet the challenges posed by our singularity, and if we do, the world will be better for it.
